ChatGPT has quickly taken over the world. It’s become so embedded in our culture, in fact, that many industries have been forced to adapt. But none has undergone a more drastic change than education.

Students have been using ChatGPT to cheat their way to higher grades. This has led to the development of AI detectors, which proved to be a good band-aid solution for some educators. But there’s a different side to this. Something that I believe is widely overlooked, but is equally worth talking about.

What happens if you’re falsely accused of using AI? More importantly, why does that happen and what can you do about it? All that, and more, in this article.

The Rise of AI Detection Tools

With the rise of ChatGPT came a pressing question: “How can we separate human writing from AI writing?” The answer came in the form of AI detection tools.

Two of the earliest AI detectors on the market were OpenAI’s Classifier and TurnItIn. The former was discontinued because of its low accuracy, while the latter is still hanging on for better or worse, but we’ll get to that later.

Today, there’s no shortage of AI detection tools, free or otherwise. You’ve got the staples like Copyleaks, GPTZero, and Originality. Then you’ve got the worthy challengers like Sapling, Content At Scale, and Crossplag. There are just so many of them that I can’t help but wonder…

How Accurate Are They?

Seamless transition. Nailed it.

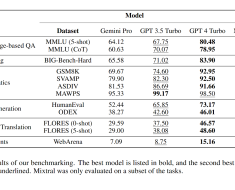

The most recent study I could find about the accuracy of AI detectors was from William H. Walters of Manhattan College. His methodology was simple: 126 test cases (42 each from GPT-3.5, GPT-4, and human writers) were analyzed by 16 popular AI detection tools. Here are the results:

| | GPT-3.5 Correctness Average | GPT-4 Correctness Average | Human Text Correctness Average | Total Correctness Percentage |
|---|---|---|---|---|

However, it’s important to note that the table above represents only one set of testing. Despite low scores in this test, I personally find Sapling to be one of the most accurate when I test detectors.

The study was also performed in August 2023, and both AI models and detectors have evolved drastically since then. So, always do your own research and, if possible, your own testing.

What Are False Positives?

One of the reasons OpenAI shut down its AI Classifier is that it believes detectors are unreliable and, for the most part, it’s right on the money. This is largely because of false positives and false negatives.

False positives occur when an AI detector wrongly identifies human writing as AI. Normally, this wouldn’t be a cause for concern but, with more teachers worrying about dishonesty in the age of AI, many have resorted to using AI detectors to monitor their students. The worst part is that some of them think that these tools are infallible, when they’re clearly not.

As for why this happens, think of it this way: all content originates from a human source. ChatGPT is, at best, a secondary source because it’s trained on human writing. Since it’s a language model built to imitate the way people write, it’s really no wonder that false positives are so common today.
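To make the two terms concrete, here is a minimal Python sketch of how a false positive rate and a false negative rate could be computed from a batch of detector verdicts. The `Sample` class, the `error_rates` function, and the six sample verdicts are all hypothetical and made up for illustration; they are not results from any real detector or study.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    is_ai: bool       # ground truth: was the text actually written by AI?
    flagged_ai: bool  # the detector's verdict


def error_rates(samples):
    """Return (false_positive_rate, false_negative_rate) for a list of samples."""
    humans = [s for s in samples if not s.is_ai]
    ais = [s for s in samples if s.is_ai]
    # False positive: human writing that the detector flagged as AI.
    false_positives = sum(s.flagged_ai for s in humans)
    # False negative: AI writing that the detector let through as human.
    false_negatives = sum(not s.flagged_ai for s in ais)
    fpr = false_positives / len(humans) if humans else 0.0
    fnr = false_negatives / len(ais) if ais else 0.0
    return fpr, fnr


# Hypothetical verdicts: four human essays (one wrongly flagged)
# and two AI essays (one missed).
samples = [
    Sample(is_ai=False, flagged_ai=False),
    Sample(is_ai=False, flagged_ai=True),
    Sample(is_ai=False, flagged_ai=False),
    Sample(is_ai=False, flagged_ai=False),
    Sample(is_ai=True, flagged_ai=True),
    Sample(is_ai=True, flagged_ai=False),
]

fpr, fnr = error_rates(samples)
print(f"False positive rate: {fpr:.0%}")
print(f"False negative rate: {fnr:.0%}")
```

In this made-up batch, one of the four human essays is wrongly flagged. For a student, the false positive rate is the number that matters most, since it describes how often honest work gets accused.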

Real-Life Cases of False Accusations

Receiving academic sanctions because of false positives is a nightmare for any student but, for these people, that nightmare has become a reality — and it is terrifying.

William Quarterman

William Quarterman, a UC Davis student, was by all standards an outstanding student with a clean record. That is, until he opened his student portal and saw that he had received a failing grade after his professor ran his history exam through GPTZero, which flagged it as plagiarized from ChatGPT. Not only that, but he was also summoned to the university’s Office of Student Support and Judicial Affairs for academic dishonesty.

The only thing is, he never used ChatGPT.

This took a toll on both his academic performance and mental health. Fortunately, his case was dismissed and the university admitted that it had no reasonable evidence against him.

The thing is, he got lucky. Schools don’t need “evidence beyond a reasonable doubt” to get you in trouble. If they think you cheated, you can most likely get in trouble for it.

Louise Stivers

Funnily enough, we don’t have to go far to see our next case of false positives in academia. Louise Stivers, also from UC Davis, was accused of using generative AI to write a case brief for her class. The culprit this time was TurnItIn.

This time, help came in the form of William Quarterman, who was once a classmate of Stivers. Together with his dad, he gave Stivers advice and helped overturn her academic sanction.

Texas A&M University Students

A screenshot from a Texas A&M professor went viral on Reddit last May after he threatened to fail his entire class because their essays were flagged as AI. This was, fortunately, resolved after the professor gave a different writing assignment.

However, I believe this situation does raise an important question: Why is the burden of proof on the students when AI detectors are unreliable?

New Zealand Year 12 Students

Two unnamed students from New Zealand, both excellence-level, were also falsely accused of using ChatGPT for their assignments. The stakes were higher here: given their exceptional academic standing, a confirmed accusation could have kept them out of top universities.

This case was escalated to Research on Academic Integrity in New Zealand and both students were deemed innocent.

Non-Native English Speakers

James Zou, an assistant professor at Stanford, recently tested 91 essays against seven AI detection tools and here’s what he found:

On average, AI detectors are 8% more likely to flag human-written content from non-native English speakers than from native English speakers. He suggests the most likely explanation is that non-native speakers tend to write with less complexity than native speakers, which makes their writing look more like the output of popular generative AI.

This does raise a somewhat amusing thought. According to the researchers, if this is truly the case, then AI detectors could actually “compel non-native writers to use GPT more to evade detection.”

How to Protect Yourself Against False Accusations

Record Your Writing Process

The best way to avoid false accusations is by showing your work. Always keep the following within arm’s reach in case your work gets flagged as AI:

- Drafts of your work

- List of sources

- Brainstorming notes

- If applicable, an outline

I also highly suggest using Google Docs since it saves versions of your document at different points in time. This makes it easy to retrace your progress from ideation to completion, and could serve as valuable proof of your integrity.

Personalize & Remove AI Identifiers

Don’t write like a robot — instead, write as yourself. Your writing quirks will help prevent detectors from falsely classifying your text as AI. You should also avoid common AI identifiers such as:

- Repetition. ChatGPT can sometimes use the same words over and over again, especially for transitions.

- Common AI Words. Some examples of this include “leverage,” “utilize,” and transitional words.

- Lists. ChatGPT tends to list several items in a line instead of breaking them down into sentences. Bard likes to create bullet points, no matter the prompt topic.

- Lack of Flow. Don’t be monotone! Experiment with sentence lengths, vary your punctuation, and improve your command of language.

Use AI Bypass Tools

You can also use AI bypass tools to avoid detection. These are aimed at AI users but, if you don’t have time to fix your work, this is a quick and easy way to humanize your writing.

I recommend using Undetectable AI or HideMyAI for this. I’ve found that these tools are incredibly good at imitating human writing. Just make sure that you clean up their output since they sometimes introduce grammar errors and spelling mistakes to avoid getting flagged.

Always Double Check Against Detectors

I can’t stress this enough. Always, always use AI detectors on your own writing. This saves you the surprise of waking up one day like Quarterman and discovering you’ve been accused of using ChatGPT. With this, you can identify the parts of your paper that are most similar to AI, and you can tweak them until they’re rightly classified as human.

My pick would have to be between Copyleaks and Sapling AI. The former has been cited many times as one of the most accurate AI detectors today.

So, What Now?

There’s no stopping AI. Unfortunately, that comes with a bit more bad than good — at least for now.

As a student myself, I can attest to the difficulty of clearing your name from false accusations, especially if they’re coming from faculty.

Receiving academic sanctions for this can absolutely derail a student’s journey. But, here’s what I’ll say:

We have to tough it out and adapt.

Growing pains are a natural part of innovation, especially for something as groundbreaking as AI. In a year or two, we’ll figure something out. We, as humans, always do.

For now, just keep the things I’ve said in this article in mind. Some of them might end up saving your grades in the near future.