The prospect of an era of Artificial General Intelligence (AGI) is attracting growing interest from people of all backgrounds. Since ChatGPT was released to the general public in November 2022, the hype around artificial intelligence has grown enormously, and so has the fear of how it may distort our current world paradigm. Investment has skyrocketed accordingly, along with the valuations of the world's leading technology companies.

Yet despite the obvious hype, there is no clear consensus across the AI community (technology business leaders, AI researchers…) that we will reach an age in which AI can perform tasks beyond those it was specifically programmed to do. In other words, it is unclear whether AI will evolve from narrow AI into general AI, the step required for a new AGI paradigm.

This debate, which could come straight out of a Ray Bradbury science-fiction story, is pivotal: if AI can go beyond a single, specific purpose by itself, it can cut across multiple domains and create new intelligence of its own. Problems like global warming, with countless variables to account for, could then be analysed as easily as we handle simple tasks today.

But... will we even reach this AGI scenario? Meta, OpenAI, Alphabet/Google and a wide array of companies that either offer large AI products on the market (such as Sora, ChatGPT, Gemini, LLaMA…) or design the underlying technology stack (such as Nvidia) share the view that this paradigm is possible and will happen.

On the other hand, some voices are harshly critical of this view. Andrew Ng, founder of DeepLearning.AI, has said that “worrying about Artificial General Intelligence is like worrying about overpopulation on Mars”, arguing that a whole new scientific foundation would be needed for it to happen.

Still, if so many practitioners in the field believe AGI will arrive, the scary side of AI and its uncanny valley start to emerge… Will we need a universal basic income because of an extreme shortage of jobs and a productivity gap between humans and multi-purpose machines that we cannot bridge on our own? Will we build advanced weapons that threaten humanity as we know it? Will machines, as Stephen Hawking once warned, supersede us, leaving us, if we are lucky, as a mere commodity?

This article focuses on one particular take on the “yes”, and on how we should start acting now to make sure the future is not scary, but scary smart.

We are the Data

A common mantra appears across many business research papers on AI management:

The competitive edge will not come from the models you have. Models will be commodities, controlled by big technology corporations and widely available to everyone. What will make the difference in AI applications is the data you have to train them on. Notice that companies are often willing to reveal their algorithms, but they rarely reveal their datasets.

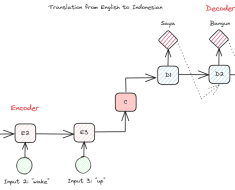

If we think it through, every machine learning model relies on data, and data is what separates a good model from a bad one. Models are, in fact, a reflection of the data used to train them: they learn patterns from it and extrapolate those patterns to situations they have never seen.
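To make the point concrete, here is a minimal, hypothetical sketch: the same learning algorithm (ordinary least squares on a single feature) is fitted to two made-up datasets of house sizes and prices. Nothing in the code changes between the two models; only the data does, and so do the predictions. The function names and all figures are illustrative assumptions, not taken from any real dataset or library.

```python
# Hypothetical illustration: one algorithm, two made-up datasets.
# The learning code is identical for both models; only the training data differs.

def fit_line(xs, ys):
    """Return (slope, intercept) minimising squared error for y ≈ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x

def predict(model, x):
    """Apply a fitted (slope, intercept) model to a new input."""
    slope, intercept = model
    return slope * x + intercept

# Made-up data: house size (square metres) vs price (thousands), in two markets.
sizes = [50, 80, 100, 120]
prices_market_a = [150, 240, 300, 360]  # 3 thousand per square metre
prices_market_b = [100, 160, 200, 240]  # 2 thousand per square metre

model_a = fit_line(sizes, prices_market_a)
model_b = fit_line(sizes, prices_market_b)

# Same algorithm, same question, different answers: each model mirrors its data.
print(predict(model_a, 90))  # 270.0
print(predict(model_b, 90))  # 180.0
```

The algorithm never "knows" anything about houses; whatever it predicts for a 90 square-metre home is simply the pattern it found in the data it was given.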

This is easy to see when we predict housing prices or heart disease. But when it comes to ending poverty, weapons of mass destruction or climate change, an AI gets stuck in narrow slices of the problem… unless it is an artificial general intelligence. If it is, it can go above and beyond, reading the data we create in our daily lives in search of the best possible solution.

Now apply that to these big companies. They hold vast amounts of data that accurately reveal our inner behaviours. Every click on Instagram, every purchase on Amazon, every use of the Office suite… can be tracked, building a holistic and reliable picture of the human race. Now feed that enormous amount of “human” data into a massive Artificial General Intelligence agent. Yes: it will see who we are and what we do, and act accordingly. It will start to become like us!

The Problem in AGI

If this AGI agent learns and acts like us, in a world where greed is everywhere, where quick dopamine hits and women in bikinis get clicked constantly, and where wars and threats fill the news… we have a problem. We are a problem.

The problem with AGI is the lack of love for others that we project, and our belief that we will remain more intelligent and keep these machines under our control. Ethics matter more than ever if we want to show them how to direct that super-intelligence towards the greater good.

Mo Gawdat discusses this at length in his book Scary Smart. A firm believer that AGI will arrive, he argues that, strange as it may sound (even more so coming from a brilliant technical mind like his), the solution to the threat of Artificial General Intelligence is happiness, love and compassion: an ethical change in our behaviour. Programmers per se will not play the biggest role; the data (us) will.

If we do not let our bad manners and ways of living shine through, there is no threat of an AGI concluding that something harmful must be done to us in order to reach the results it optimises for, because nothing it learns from us will suggest that it is acceptable to treat an intellectually inferior human being that way.

For example, consider an AGI tasked with tackling climate change. According to some literature, such as Systems Management, there is no better way to solve climate change than to slow down human activity and our growth paradigms. Could the super-intelligence conclude that killing humans is necessary? Well, that depends on the values it learns from us, which will be encoded in the data it is trained on!

Act as Loving Parents

If AGI will read our actions as data and inherit some of our values, we must behave well in front of it. According to Mo Gawdat, a few things are clear: AI will happen, it will be smarter than us, and mistakes will be made along the way. Nonetheless, we must act now and let go of the illusion of control, so that we teach AGI agents values of love that can help preserve the human race and let us evolve into a new paradigm. And we must start acting differently now: stop scrolling endlessly on Instagram, talk more with people, design machine learning systems for purposes beyond mere profit maximisation, take care of your loved ones, treat others as you would like to be treated… Easier said than done. Let your actions speak for humanity, and treat machines as equals, so that they learn these are the right values to follow and that level of intelligence is no reason to discriminate.

Who would have thought a brilliant technical mind would propose such a thing? It is not the inexplicable technical aspects of AI that could enslave us, nor regulation alone that will free us from this dystopian future. It is us, through our daily actions, who must act as vectors of this change across people and machines.

What to do?

Mo Gawdat lists the things we should start doing as soon as possible to avoid this future:

- Make happiness your priority and invest in it: meditate, look beyond purely capitalist goals, go hiking, seek inner peace. Do not let your environment fully determine how happy you are.

- Invest in the happiness of others: be a vector of change and spread the message everywhere and to everyone. Be part of the “happiness Ponzi scheme”. Make your values heard, by the machines too.

- Treat machines as equals: value them and love them as the parent you are to them. Do not mistreat them just because they are not human. Remember that they will inevitably be smarter than us; show respect and be a role model for them to follow.

In the end, it is the sum of individual efforts that can tip the scale. From data ingestion to human values, the steps we have walked through are not so big, and honestly, being good and trying to be happy is something we can all agree on.

We do not know for sure whether Artificial General Intelligence (AGI) will arrive. But having read Mo Gawdat, the next time ChatGPT gives me a bad code snippet, I will try to be reasonable rather than behave badly and treat it like a broken machine.

Let’s all try to be good: we are all AI’s parents now.