Generative AI is changing the game for modern businesses, streamlining operations, reducing errors, and generally making lives easier in the workplace.

Yet, despite its clear advantages, a number of companies remain hesitant to embrace generative AI’s advanced capabilities.

In this article, we’ll take a deep dive into the reasons why some companies aren’t using generative AI.

What is generative AI?

Generative AI is a subset of artificial intelligence and machine learning that creates new content, including text, images, audio, 3D models, and data, based on patterns learned from existing information.

Generative models have quickly risen to the forefront of artificial intelligence, thanks to advances in deep learning that allow algorithms to create a vast range of realistic and novel outputs. ChatGPT is one well-known example of a successful generative AI implementation.

Advances in hardware have also fueled the rapid growth of generative AI, allowing models to process massive amounts of data efficiently. Plus, ever-larger datasets have given algorithms the training they need to improve their accuracy and performance.

Generative AI is a powerful tool that can provide many benefits and use cases for sectors like healthcare, entertainment, retail, finance, and many more. But if it offers so many exciting opportunities, then why aren’t companies using it?

Let’s dig into the data.

We recently surveyed hundreds of AI users for our Generative AI 2023 Report. The aim of the report was to gain insights into the key challenges and drivers behind companies’ use of AI.

We found that 11.8% of respondents don’t use generative AI tools at all.

So what are the reasons behind this decision?

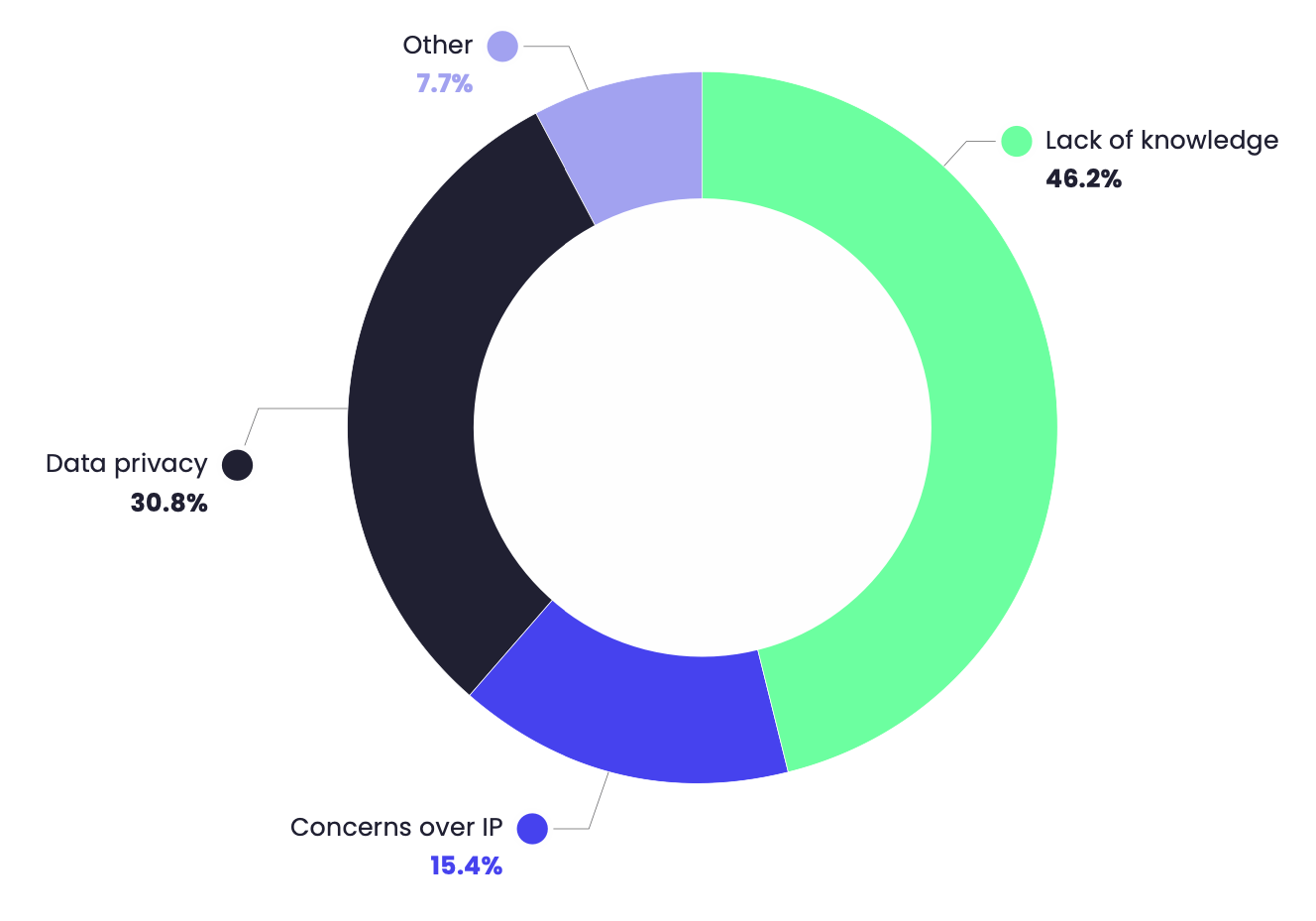

Well, 46.2% of these respondents cited lack of knowledge as the number one reason for not using generative AI.

The second most common reason was data privacy concerns, with 30.8% of the votes, followed by concerns over intellectual property (IP), with 15.4%.

Let’s look more closely at these three reasons:

Lack of knowledge

Lack of knowledge can be a major barrier to adopting generative AI for many companies. Firstly, generative AI can be complex, especially as it involves intricate algorithms and deep learning models. Understanding exactly how these models work, how to train them effectively, and how to correctly integrate, utilize, and derive value from them requires a sound understanding of machine learning principles.

There can also be misconceptions about what generative AI models can and can’t do. Some companies might underestimate the tool’s capabilities, leading them to miss out on potential opportunities and applications.

On the other hand, companies might overestimate generative AI’s capabilities, expecting it to generate perfect content every time and then being disappointed if it doesn’t match their expectations.

It can take time to fully comprehend generative AI’s abilities and how to apply it to specific industry or business challenges. It’s therefore understandable why companies might be hesitant to invest in it.

Implementing generative AI also requires the right expertise, and companies might not know where to begin in terms of training their employees on how to use it.

Data privacy

With generative AI comes the risk of unintentionally sharing personal data. Large language models (LLMs) are typically trained on vast datasets that can include personally identifiable information. This data can sometimes be extracted with a simple text prompt, and it can be difficult for individuals to find out whether their data is included and request its removal.

To avoid this issue, companies need to ensure that personally identifiable information isn’t embedded in any language models being built or upgraded. They also need to make sure that personal information is easy to remove from the model in accordance with privacy laws.

Employees also risk leaking company secrets and misusing consumer data. If employees use tools like ChatGPT to speed up their work, they may inadvertently share confidential corporate information and customer data, which can violate company privacy policies and data protection laws such as the California Consumer Privacy Act (CCPA).
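To make the safeguards above a little more concrete, here is a minimal sketch, assuming a company wants to strip obvious personal data from text before it is pasted into an external tool like ChatGPT or added to a training dataset. The function name `redact_pii` and the patterns in `PII_PATTERNS` are hypothetical and deliberately simple: they only catch well-formed email addresses, US-style Social Security numbers, and US-style phone numbers, so treat this as an illustration of the idea rather than a complete privacy control.

```python
import re

# Hypothetical, deliberately simple patterns. Real PII detection needs far more
# than regular expressions (names, addresses, other ID formats, context, etc.).
# SSN is listed before PHONE so each pattern is applied in that order.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(text: str) -> str:
    """Replace each match of a known PII pattern with a placeholder like [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Summarize the complaint from jane.doe@example.com, phone 555-123-4567."
    print(redact_pii(prompt))
    # prints: Summarize the complaint from [EMAIL], phone [PHONE].
```

Replacing matches with labeled placeholders, rather than deleting them, keeps the prompt readable for the model while the sensitive values never leave the company.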

Concerns over IP

Generative AI models can produce new content, designs, and solutions. But who exactly owns the rights to this newly generated content?

Copyright law surrounding generative AI is very much a gray area, and it could take a while for the legal position to become clear.

Image generators like Midjourney and DALL·E can create realistic images and art from text-based descriptions, while AI-powered language models like ChatGPT can generate a variety of humanlike content, adeptly mimicking requested styles and forms.

These tools generate content based on millions of examples in their training sets, which means their results are derived from existing sources. In other words, what they create isn’t wholly original.

When these tools produce content, the sources of the data they’ve drawn on may be unknown. This could expose a business to legal, reputational, and financial risks if it turns out to have been relying on another company’s intellectual property.

There are few, if any, legal precedents that clarify IP and copyright issues around generative AI, and this gap needs to be addressed.

What else is stopping companies from adopting generative AI?

As you may have noticed in our report results above, when we asked respondents why they chose not to use generative AI tools, 7.7% answered ‘other.’

There may be a number of additional reasons that companies opt against the use of generative AI. These could include:

Lack of trust

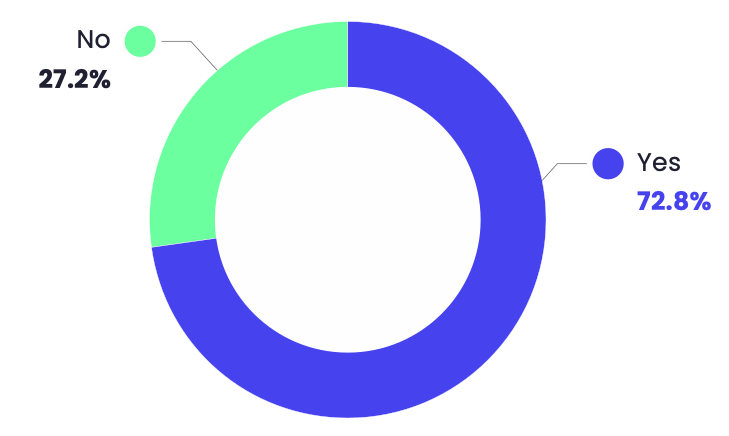

In our Generative AI 2023 Report, we also wanted to know how much those who don’t use generative AI actually trust it. The results showed that 27.2% of respondents don’t trust generative AI.

This perhaps isn’t surprising, as generative AI models are known to make mistakes or produce results that deviate from intended outcomes. This makes it difficult for companies to rely on them fully for critical decisions.

Limited outputs

Machine learning algorithms can only generate new images, text, and other content based on the data they were trained on. Therefore, if the initial training dataset is limited in scope, the output will be limited too.

Cost

Generative AI models can be expensive to deploy. Costs include purchasing and implementing the technology, training the model, and the additional resources needed to operate it. Hiring developers with AI expertise and acquiring AI hardware are especially pricey.

Conclusion

Generative AI clearly holds a myriad of possibilities and a great deal of promise for the future. However, as our report findings show, some major barriers are preventing companies from relying on it completely.

It’s important for businesses to weigh up the pros and cons of adopting generative AI at this stage. However, as the technology becomes more regulated and industries gain a clearer understanding of its use cases, we’re likely to see adoption increase.

Author: AI Accelerator Institute

AIAI will be hosting AIAI San Jose on April 16 & 7 with four co-located summits under one roof – each finely tuned to cater to professionals in specific domains of AI, ML, and LLM.

The lineup features distinguished industry leaders, including Nitzan Mekel-Bobrov (Chief AI Officer, eBay); Tom Mason (CTO, Stability AI); and Neda Cvijetic (SVP, Head of AI & Autonomous Driving, Stellantis).
