The legal sector has typically been characterized by a cautious, conservative approach to tech adoption. But emerging generative AI tools such as OpenAI’s ChatGPT are quietly gaining traction among attorneys.

Although ChatGPT and other powerful large language models are relatively new, they’ve already seen surprisingly widespread adoption in the legal sector. More than 40% of attorneys surveyed by legal data and analytics company LexisNexis said they currently use or plan to use generative AI in their legal work, according to an August 2023 report.

“It’s been probably the most interesting 12 months in my 20-year legal tech career,” said Cheryl Wilson Griffin, CEO of Legal Tech Consultants, which advises law firms and other legal clients on technology issues.

The evolving role of generative AI in law

The term generative AI refers to sophisticated machine learning algorithms that can produce new content, such as text, images or code, after training on enormous amounts of data.

Generative AI’s ability to create highly specific content in response to users’ queries has piqued interest within the legal industry. In the aforementioned LexisNexis report, nearly all lawyers surveyed (89%) had heard of generative AI such as ChatGPT, and 41% had tried out a generative AI tool themselves. But historically, lawyers haven’t always been quick to adopt new technologies.

Jake Heller is an attorney and CEO of legal AI company Casetext, acquired by Thomson Reuters in November 2023. He grew up in Silicon Valley and started coding at a young age. Upon becoming a lawyer, Heller found himself frustrated by the lackluster state of legal technology, particularly compared with consumer technology such as food delivery apps.

“It felt really imbalanced to me that the technology to support you for finding Thai restaurants was super good and the technology for saving businesses, saving people from prison and so on was so backwards and bad,” he said.

Although the legal sector still has something of a reputation for outdated systems and resistance to change, generative AI has been harder for it to ignore than other tech advancements of recent decades, such as cloud computing or big data analytics. The release of ChatGPT marked a significant shift in lawyers’ attitudes toward AI, something Heller described as a “moment of awakening.”

“I think the thing that’s been really interesting about this, and different than other technologies that have tried to disrupt or change legal, is that there’s a consumer side,” Griffin said.

Like Heller, Troy Doucet, founder of legal AI startup AI.law, found his way to legal tech through his background as an attorney. Law firms like Doucet’s, which focuses on foreclosure defense and consumer protection, often feel pressure to maximize efficiency due to the economic challenges facing small firms.

When Doucet began exploring AI’s ability to automate legal processes, he was initially interested in issue spotting — determining whether a potential client’s narrative gives rise to legal claims. Issue-spotting software for an employment attorney, for example, might analyze whether a set of facts supports a claim under the Fair Labor Standards Act.

Doucet began exploring this use case around the beginning of the COVID-19 pandemic, ultimately hiring an employee in a data role to help develop an AI product. What started as an efficiency tool within Doucet’s firm gradually evolved into a broader project. But early on, his ambitions were hamstrung by technical limitations.

“It didn’t turn out because the technology just wasn’t there for it,” Doucet said.

The advent of ChatGPT changed that, Doucet said, making it easy for him to use OpenAI’s GPT models for issue spotting within his own firm. Down the line, as legal AI becomes more sophisticated, he believes such tools could revolutionize legal services: Increased efficiency would reduce legal costs, which in turn could expand access to justice.

The benefits of generative AI in law

Doucet’s AI.law software and Casetext’s flagship tool, CoCounsel, are positioned as AI legal assistants or colleagues, in line with the “copilot” approach taken in other disciplines. Overall, their creators say, the goal isn’t to replace real legal professionals, but rather to let them delegate complex tasks and achieve faster, higher-quality outcomes.

The most time-consuming areas of litigation are, unsurprisingly, among the most appealing for automation. Griffin described discovery as the “highest-risk, highest-reward” area for introducing generative AI, and it’s one of the areas Doucet targeted first with AI.law.

“Every partner gives junior associates the job of drafting discovery responses, because it’s a very tedious and unenjoyable aspect of the job,” Doucet said. “We have that down to about two minutes from four to eight hours.”

Similarly, CoCounsel includes features, such as reviewing documents and editing contracts, that are particularly effective when lawyers need to review vast amounts of information quickly.

“Sometimes the things that get in the way of us doing more aren’t the most important and pressing, highly strategic pieces,” Heller said.

Instead, it’s tiresome, repetitive tasks such as formatting citations and extracting relevant material from thousands of pages of documents. As an example, Heller described using an AI tool in an investigation to parse years’ worth of emails, Slack messages and texts in a matter of days, as opposed to weeks or even months.

“The idea of reviewing millions of documents over a few days before this technology was kind of unthinkable,” he said.

Griffin also pointed out that generative AI can help firms in areas that don’t involve the direct practice of law, such as HR and operations. “I think there’s a lot of opportunity to automate some back-office stuff,” she said. “There’s so much stuff that we’re paying a lot of money for now because it’s just wildly inefficient.”

Limitations of generative AI in law

Despite the various benefits of generative AI in law, actual adoption still appears to be lagging behind interest. There’s often a “difference between what [firms] are saying out loud and in PR, and what they’re doing and actually what’s happening in the real world,” Griffin said. “There’s such a small percentage of firms that have actually bought or tried to implement anything.”

This is consistent with broader findings in the industry. August 2023 research from TechTarget’s Enterprise Strategy Group found that 4% of organizations surveyed had implemented a mature generative AI initiative in production, though many more expressed interest in the technology or had explored it through pilot projects.

That hesitance isn’t necessarily a bad thing, as buying generative AI tools without a clear plan for how they’ll be used often ends in disappointment and disillusionment. Sometimes, Griffin said, her clients will find that they bought the tech without a problem for it to solve. Instead, she advises law firms to look at their needs first, then decide whether AI could meet those needs.

“From a best practice standpoint, always start with the problem first,” she said. “Don’t just buy the tech and say, ‘What can we do with it?’ … Make sure you have a plan before you buy.”

Moreover, generative AI still has notable limitations, particularly in complex areas such as litigation, as opposed to more formulaic tasks such as contract drafting. Griffin highlighted writing motions as an example of a less boilerplate task that remains difficult for generative AI to perform well.

“[Motions] are so much less standardized that it makes it really hard, I think, to develop a product that fills enough of the market’s needs that people want to use it,” she said.

Heller noted that there’s a subset of tasks that humans currently perform much better than AI — and perhaps always will. These include big-picture strategizing, such as deciding how to pursue a case or interpret a long history of business context, as well as interpersonal skills such as picking up on suspicious behavior in a key witness or speaking persuasively in the courtroom.

“If you think about what these AIs have been trained on, the data is what’s on the internet,” such as books and articles, Heller said. “But anybody with real experience in law practice knows there’s a lot you can’t capture in a book. It comes through experience and training and observing.”

The issue of hallucinations

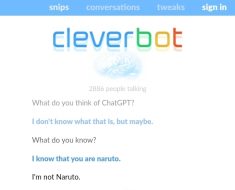

Accuracy is paramount in law practice and judicial decision-making. A document that includes hallucinations — incorrect content output by a generative AI model — could lead to misinterpretations of statutes, precedents or facts.

A model might easily come up with an explanation of a major Supreme Court case such as Brown v. Board of Education, a well-known decision about which there’s extensive publicly available internet data. But a holding on a niche point of law from a specific district court is much less likely to be among the information the model absorbed during training.

The problem is that large language models (LLMs) are trained to predict the most statistically likely combination of words in response to a prompt, not to provide accurate factual information. As a result, they tend to offer some response, even if the correct answer to a user’s question isn’t contained in their training data.

“There’s always an incentive to answer,” Heller said. “[Generative AI models] are never going to say, ‘I don’t know.’”

More dangerously, generative AI models can be wrong while sounding very convincing. If taken at face value, hallucinations could potentially affect the outcome of legal cases. And if a judge realizes that an attorney has introduced AI-hallucinated content in a court filing, it could have serious consequences.

“If you’re a lawyer and something bad happens, your licensure is on the line,” Griffin said.

The ethical implications of using a tool that might generate false or misleading information are substantial, particularly in a legal context where every word can carry significant weight. Although it’s not always clear who should be responsible for the inaccuracies in generative AI models’ output, early examples suggest that lawyers could be on the hook.

In June 2023, a federal judge sanctioned attorneys Peter LoDuca and Steven Schwartz for relying on nonexistent case law invented by ChatGPT in a legal brief. Judge P. Kevin Castel of the Southern District of New York emphasized that the issue wasn’t that the lawyers had used an AI tool; it was that they hadn’t verified the AI-generated output and had failed to disclose their use of ChatGPT when the made-up cases were first identified.

“[T]here is nothing inherently improper about using a reliable artificial intelligence tool for assistance,” Castel wrote in his order sanctioning LoDuca and Schwartz. “But existing rules impose a gatekeeping role on attorneys to ensure the accuracy of their filings.”

It’s also currently unclear how using AI tools that can hallucinate could affect compliance with laws and regulations. Attorneys must adhere to strict professional standards and regulations around handling and presentation of evidence as well as the accuracy of legal advice. At minimum, it’s crucial to implement stringent validation and verification processes for AI-generated content in legal settings.
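As one illustration of what such a verification step might look like, the following Python sketch pulls reporter-style citations out of a draft and flags any that are absent from a trusted index. It is a minimal sketch under stated assumptions: the regular expression covers only a simplified "volume, reporter, page" pattern, `TRUSTED_CITATIONS` is a hypothetical stand-in for a lookup against a real case law database, and the second citation in the sample draft is an invented placeholder. A flagged citation is a prompt for human review, not a verdict.

```python
import re

# Hypothetical stand-in for a query against a real case law database.
TRUSTED_CITATIONS = {
    "347 U.S. 483",  # Brown v. Board of Education
}

# Simplified pattern: volume, reporter abbreviation, first page.
# Real citation formats are far more varied than this (assumption for the sketch).
CITATION_PATTERN = re.compile(
    r"\b(\d{1,4})\s+"
    r"(U\.S\.|S\. Ct\.|F\.2d|F\.3d|F\.4th|F\. Supp\. 2d|F\. Supp\. 3d)"
    r"\s+(\d{1,5})\b"
)


def extract_citations(text):
    """Return every citation in the text that matches the simplified pattern."""
    return [f"{vol} {rep} {page}" for vol, rep, page in CITATION_PATTERN.findall(text)]


def flag_unverified(text, trusted=TRUSTED_CITATIONS):
    """Return citations that could not be found in the trusted index."""
    return [cite for cite in extract_citations(text) if cite not in trusted]


# The second citation is an invented placeholder, not a real case.
draft = (
    "See Brown v. Board of Education, 347 U.S. 483 (1954); "
    "see also Example v. Placeholder, 9999 F.3d 11111 (2d Cir. 2019)."
)

unverified = flag_unverified(draft)
print(unverified)
```

A check like this catches only citations that do not exist in the index. It says nothing about whether a real case actually supports the proposition it is cited for, which still requires an attorney to read the source.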

How legal professionals can integrate generative AI responsibly

One method for handling hallucinations is retrieval-augmented generation (RAG), a technique in which LLMs can access data from external sources to improve the accuracy and relevance of their responses. Heller, whose Casetext software uses RAG, compared introducing RAG to switching from a closed-book to an open-book exam: With RAG, the model can look up information in real time that it wouldn’t otherwise be able to access.

Although this helps ensure that AI responses are based on actual legal documents and case law, Heller cautioned that no AI tool is infallible. For example, even an AI tool using RAG could misinterpret a particularly dense, complicated text. In short, attorneys shouldn’t assume that AI is more likely to be objective or correct than a person.

“Because we work with humans, we’re used to working with imperfect beings,” Heller said. “This is one of them.”
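The open-book idea behind RAG can be sketched in a few lines of Python. This is a toy illustration, not a description of how Casetext or any other product works: the three-passage `CORPUS`, the word-overlap scoring and the `build_prompt` helper are all assumptions made for the example, and the final step of sending the prompt to a language model is left out. Production systems typically rely on far more sophisticated retrieval.

```python
import re

# Hypothetical mini-corpus of illustrative passages (not legal authority).
# A real system would search a large store of statutes, cases and filings.
CORPUS = {
    "flsa-overtime": (
        "Under the Fair Labor Standards Act, covered employees must receive "
        "overtime pay for hours worked over 40 in a workweek."
    ),
    "discovery-deadline": (
        "Responses to interrogatories are generally due within 30 days after service."
    ),
    "lease-notice": (
        "The tenant must give written notice 60 days before ending the lease."
    ),
}


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, corpus=CORPUS, top_k=1):
    """Rank passages by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        corpus.items(),
        key=lambda item: len(question_words & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, passages):
    """Combine retrieved passages and the question into one grounded prompt."""
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


question = "When must employees receive overtime pay?"
passages = retrieve(question)
prompt = build_prompt(question, passages)
print(prompt)
```

Because the prompt carries the retrieved text and its identifier, a reviewer can trace an answer back to a specific source. As Heller cautioned, that grounding reduces the risk of invented content but does not prevent the model from misreading the passage it was given.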

More broadly, Doucet, Heller and Griffin all emphasized the growing importance of management skills for legal professionals working with AI. This goes beyond accuracy checks to combat hallucinations: Lawyers will need to learn how to effectively delegate, provide clear instructions and strategize using AI tools.

“Now, for the first time, everyone — including your youngest associate and paralegal — can have this AI assistant they can delegate tasks to,” Heller said. “But learning how to manage is actually really tough.” He pointed to skills that are familiar from managing human beings in the workplace, such as expressing instructions clearly, delegating tasks and giving useful feedback.

4 best practices for using generative AI in law

Although introducing generative AI into law practice carries certain risks, legal professionals can take steps to implement generative AI as responsibly and safely as possible.

1. Understand AI’s capabilities and limitations

Before integrating AI into the day-to-day practice of law, it’s essential to understand what AI can — and just as importantly, can’t — do. Learning about the potential for errors, biases and hallucinations helps clarify where human oversight is needed.

2. Use AI to support, not replace, human workers

In its current state, generative AI is far from fully replacing a trained attorney or paralegal. Although AI is often useful for initial drafting, preliminary research and large-scale data analysis, AI-generated content should never go out without review. Keep final decisions and fact-checking in the hands of human experts.

3. Protect clients’ data and privacy

Although AI regulation is a complicated, fast-evolving area, AI use is almost certainly covered by existing data privacy laws and client confidentiality agreements. Be cautious about what data is fed into AI systems, especially sensitive client information; for example, by default, conversations in the consumer version of ChatGPT can be used to train OpenAI’s models.

“The key thing is understanding what [an AI model] is built on and understanding what you’re trying to do with it, and then defining the risk around that in the same exact way you would with any other technology,” Griffin said.

It’s also important to be transparent with clients about how AI is used in their cases, including the potential benefits and risks. Make a plan for how to respond to clients’ concerns about generative AI use, as not all clients will be comfortable with the use of such technology.

4. Learn about AI and stay up to date on the field

Stay informed about the latest developments in AI and their implications for law practice through online research and conversations with AI experts. Increasingly, bar associations are also offering AI education as part of professional development. Last fall, the New York City Bar Association hosted an Artificial Intelligence & Machine Learning Summit for local attorneys that offered continuing legal education credits.

“In any revolution, there’s job loss, but I think there’s also an equal amount of opportunity created,” Griffin said. “Be the first one to learn it, and you’ll be indispensable.”

Lev Craig covers AI and machine learning as the site editor for TechTarget Enterprise AI. Craig has previously written about enterprise IT, software development and cybersecurity, and graduated from Harvard University with a bachelor’s degree in English.