Last Updated on February 21, 2024 by Arnav Sharma

The recent advancements in machine learning have led to the development of large language models, which are capable of generating text by predicting the most likely next word in a sequence. These models have shown remarkable performance in a variety of natural language processing tasks, such as language translation, text summarization, and question-answering. However, the inner workings of these models can be mysterious and difficult to understand, even for experienced machine learning practitioners.

Introduction to large language models

Large language models have been making waves in the field of natural language processing and artificial intelligence. These sophisticated algorithms have the capability to understand and generate human-like text, revolutionizing various applications such as chatbots, language translation, content generation, and even creative writing.

But what exactly are large language models, and how do they work? In simple terms, these models are complex neural networks that have been trained on vast amounts of text data. They learn the statistical patterns, grammar, and semantics of language, allowing them to generate coherent and contextually relevant text.

The training process for these models involves exposing them to massive datasets, often comprising millions or even billions of sentences. This enables the models to learn the intricacies of language and develop a deep understanding of the relationships between words, phrases, and sentences.

One of the key components of large language models is the use of attention mechanisms. These mechanisms allow the models to focus on relevant parts of the input text while generating output. By assigning different weights to different words and phrases, the models can effectively capture the context and generate meaningful responses.

Moreover, most large language models are built on the transformer architecture, which relies on self-attention. This design lets the model process the positions of an input sequence in parallel, making training efficient and allowing it to handle large volumes of data.

It is important to note that large language models are not programmed with explicit rules or predefined templates. Instead, they learn from the data and generate text based on the patterns and structures they have observed. This makes them highly adaptable and capable of producing diverse and contextually appropriate responses.

What are language models and why are they important?

Language models are powerful tools that have revolutionized natural language processing and understanding. At their core, language models are algorithms or neural networks that are trained to predict or generate human language based on patterns and examples in large datasets. These models have the ability to understand and generate human-like text, making them invaluable for a wide range of applications, from voice assistants and chatbots to machine translation and content generation.

Language models are important because they enable machines to process and understand human language, bridging the gap between humans and computers. By capturing the intricacies of grammar, syntax, and context, these models can generate coherent and contextually appropriate responses, making interactions with technology more seamless and natural.

One of the key advancements in recent years has been the development of large language models, such as OpenAI’s GPT-3. These models are trained on massive amounts of text data, allowing them to capture a vast array of language patterns and nuances. With billions of parameters and the ability to generate highly realistic and contextually appropriate text, large language models are pushing the boundaries of what machines can do with language.

Large language models have the potential to revolutionize various industries and domains. They can be used to enhance customer service experiences, automate content creation, aid in language translation, and even assist in scientific research. However, understanding how these models work and their limitations is crucial to ensure their responsible and ethical use.

The evolution of language models

The evolution of language models has been a remarkable journey that has revolutionized natural language processing and AI research as a whole. From humble beginnings to the sophisticated systems we have today, language models have come a long way.

Early language models focused on rule-based approaches, where linguists manually crafted grammatical rules and dictionaries to interpret and generate text. These rule-based models had limitations in capturing the complexity and nuances of language. They struggled to handle ambiguity, context, and the ever-changing nature of language.

However, with the advent of statistical methods and machine learning, a new era of language models emerged. Statistical language models, such as n-gram models, used probabilities to predict likely sequences of words based on patterns observed in large corpora. While these models were an improvement, they only looked at a short window of preceding words, so they lacked a deep understanding of language semantics and struggled with longer-range dependencies.
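To make the n-gram idea concrete, here is a minimal sketch of a bigram model (an n-gram model with n = 2) in Python. The tiny corpus and the function names are invented for illustration; real n-gram models are built from far larger corpora and usually add smoothing for unseen word pairs.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw counts for one context word into probabilities."""
    followers = counts[prev]
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()} if total else {}


# Toy corpus, invented for this example.
corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))
```

Because the prediction depends only on the single previous word, anything said earlier in the sentence is invisible to the model, which is the long-range limitation in practice.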

The breakthrough came with the rise of deep learning and the introduction of neural language models. These models, such as recurrent neural networks (RNNs) and later, transformers, brought about a paradigm shift in natural language processing. They allowed for the learning of complex patterns and dependencies in vast amounts of text data, enabling more accurate and context-aware language generation.

The most recent milestone in the evolution of language models is the development of large-scale pre-trained models, such as GPT-3. These models are trained on massive amounts of diverse text data, allowing them to capture a wide range of language patterns and knowledge. By fine-tuning these models on specific tasks, they can generate highly coherent and contextually appropriate text.

As we continue to push the boundaries of language models, researchers are exploring ways to enhance their capabilities further. This includes incorporating external knowledge sources, improving reasoning abilities, and addressing ethical concerns like bias and fairness.

Understanding the challenges of large language models

Large language models, such as GPT-3, have gained significant attention and popularity in recent years. These models, with their impressive capabilities, have the potential to revolutionize various fields, from content generation to natural language processing. However, it is important to understand the challenges that come with harnessing the power of these models.

One of the primary challenges is computational resources. Large language models require substantial computational power to train and fine-tune. The sheer size of these models, often consisting of billions of parameters, necessitates specialized hardware and infrastructure. This can pose a significant barrier for individuals or organizations with limited resources.

Moreover, the training process itself can be time-consuming. Training a large language model requires extensive datasets and iterations, which can take days, weeks, or even months to complete. This time investment adds to the overall cost and complexity of utilizing these models effectively.

Another challenge is data quality and bias. Language models are trained on vast amounts of text data from the internet, which inherently contains biases and inaccuracies. These biases can be reflected in the generated outputs of the models, potentially perpetuating societal stereotypes or misinformation. It is crucial to carefully curate and preprocess the training data to mitigate these biases and ensure ethical and responsible use of the models.

Furthermore, large language models can sometimes generate outputs that are plausible-sounding but factually incorrect or misleading. This raises concerns about the reliability and trustworthiness of the information generated by these models. Implementing effective mechanisms for fact-checking and verification becomes essential to address these challenges and maintain the integrity of the generated content.

Lastly, the deployment and integration of large language models into real-world applications can be a complex task. Adapting these models to specific domains or fine-tuning them for specific tasks requires domain expertise and careful consideration. Additionally, ensuring efficient and scalable deployment of these models is crucial for their practical usability.

Components of large language models

To understand how large language models work, it’s essential to break down their components. These models consist of several key elements that work together to generate coherent and contextually appropriate text.

1. Pretrained Model: Large language models are initially trained on massive amounts of text data, such as books, articles, and websites. This pretrained model serves as the foundation and contains a vast amount of knowledge and language patterns.

2. Encoder-Decoder Architecture: The original transformer uses an encoder-decoder architecture, and some large language models keep it. The encoder receives input text and transforms it into numerical representations, known as embeddings. The decoder then takes these representations and generates output text based on the learned patterns. Many generative models, including the GPT family, use only the decoder part.

3. Attention Mechanism: Attention mechanisms allow the model to focus on relevant parts of the input text while generating the output. They enable the model to capture the dependencies and relationships between different words and phrases, enhancing the coherence of the generated text.

4. Transformer Blocks: Transformer blocks are the building blocks of large language models. Each block combines self-attention with a feed-forward neural network, which lets the model capture dependencies between words efficiently while considering both local and global context. Multiple transformer blocks are stacked together to form the model architecture.

5. Fine-tuning: After initial pretraining, large language models are further fine-tuned on specific tasks or domains. This fine-tuning process helps the model adapt and specialize in generating text for specific applications, such as chatbots, translation, or content generation.

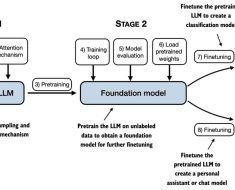

a) Pre-training and fine-tuning

To understand how large language models work, we need to delve into the two key processes they undergo: pre-training and fine-tuning. These processes are crucial in equipping models with the ability to generate coherent and contextually relevant text.

Pre-training is the initial step, where the model is exposed to a massive amount of text data from the internet. This data includes books, articles, websites, and more. During pre-training, the model learns to predict the next word in a sentence based on the context it has been exposed to. By doing so, it acquires a broad understanding of grammar, syntax, and even some factual knowledge. This phase is resource-intensive and requires substantial computational power and time.
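As a rough illustration of next-word prediction, the sketch below turns a piece of text into (context, target) training pairs. The function name and the `context_size` parameter are assumptions made for this example, and splitting on whitespace stands in for a real tokenizer.

```python
def next_token_examples(tokens, context_size):
    """Build (context, target) pairs for next-word prediction."""
    examples = []
    for i in range(1, len(tokens)):
        # The context is the (up to) context_size tokens before position i.
        context = tokens[max(0, i - context_size):i]
        examples.append((context, tokens[i]))
    return examples


tokens = "the model learns to predict the next word".split()
for context, target in next_token_examples(tokens, context_size=3):
    print(context, "->", target)
```

Note that the targets come from the text itself, so no manual labeling is needed. This is one reason pre-training can scale to very large corpora.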

Once pre-training is complete, the model moves on to fine-tuning. Fine-tuning refines the pre-trained model using specific datasets tailored to desired tasks, such as language translation, question answering, or text completion. Fine-tuning involves exposing the model to task-specific data, training it to generate relevant responses or predictions based on the input it receives. This process allows the model to adapt its language generation abilities to the specific requirements of the task at hand.

The combination of pre-training and fine-tuning empowers large language models to generate human-like text responses. The pre-training phase provides the model with a strong foundation of language understanding, while the fine-tuning phase tailors its capabilities to specific tasks. This approach enables the model to generate coherent and contextually relevant text, making it a powerful tool in various natural language processing applications.

b) Architecture and model structure

When it comes to large language models, their architecture and model structure are key components in understanding how they work. These models are designed with intricate layers and structures that enable them to process and generate human-like text.

One common architecture used in large language models is the transformer-based architecture. This architecture relies on self-attention mechanisms, which allow the model to weigh the importance of different words within a given sentence. By attending to different parts of the input text, the model can capture important contextual information and generate coherent and contextually relevant responses.

The transformer architecture consists of multiple stacked layers. In its original form, these are split into encoder and decoder layers: the encoder layers analyze the input text and create a representation of it, while the decoder layers generate the output text. Some models use only one of the two stacks. Each layer performs operations like self-attention and feed-forward neural networks, which help capture dependencies between words and generate accurate predictions.

Model structure refers to the specific configuration and size of the language model. Large language models are typically composed of millions or even billions of parameters, allowing them to learn complex patterns and generate high-quality text. The structure of these models is carefully designed to balance computational efficiency and performance, taking into account factors like memory requirements and training time.
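To give a feel for where the parameters come from, here is a back-of-the-envelope sketch. It assumes a standard transformer block with four attention projection matrices and a two-layer feed-forward network four times wider than the model dimension, and it ignores biases, layer normalization, and position embeddings. The configuration values are hypothetical and do not describe any particular model.

```python
def transformer_param_estimate(n_layers, d_model, vocab_size, ff_multiplier=4):
    """Rough parameter count; ignores biases, layer norms, position embeddings."""
    attention = 4 * d_model * d_model  # query, key, value, output projections
    feed_forward = 2 * d_model * (ff_multiplier * d_model)  # two linear layers
    embeddings = vocab_size * d_model  # token embedding table
    return n_layers * (attention + feed_forward) + embeddings


# Hypothetical configuration, chosen only for illustration.
params = transformer_param_estimate(n_layers=12, d_model=768, vocab_size=50_000)
print(f"{params:,} parameters")
print(f"{params * 2 / 1e9:.2f} GB of weights at 2 bytes per parameter")
```

Because the per-layer cost grows with the square of `d_model`, widening a model increases its size much faster than adding layers does, which is part of the trade-off between performance, memory, and training time.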

c) Tokenization and vocabulary

Tokenization and vocabulary play a crucial role in understanding how large language models work. When it comes to processing text, language models break down the input into smaller units called tokens. These tokens can be as short as individual characters or as long as entire words or even longer phrases, depending on the model’s design.

The tokenization process involves dividing the text into these meaningful units, which allows the model to analyze and process the information more effectively. For example, the sentence “I love ice cream” might be tokenized into [“I”, “love”, “ice”, “cream”].

Each token is assigned a unique numerical representation, known as an ID, which allows the model to handle and manipulate the text as numerical data. This conversion from text to numerical tokens is vital because machine learning models, including large language models, primarily operate on numerical inputs.
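The mapping from text to IDs can be sketched in a few lines of Python. This example assumes the simplest possible tokenizer, splitting on whitespace; the function names are made up, and production models use learned subword tokenizers instead.

```python
def build_vocab(texts):
    """Assign each distinct whitespace-separated token an integer ID."""
    vocab = {}
    for text in texts:
        for token in text.split():
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab


def encode(text, vocab):
    """Convert text into a list of token IDs."""
    return [vocab[token] for token in text.split()]


vocab = build_vocab(["I love ice cream"])
print(vocab)                              # {'I': 0, 'love': 1, 'ice': 2, 'cream': 3}
print(encode("I love ice cream", vocab))  # [0, 1, 2, 3]
```

From this point on, the model only ever sees the list of integers, never the original characters.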

The vocabulary of a language model refers to the collection of unique tokens it has been trained on. This vocabulary encompasses all the words, phrases, and other textual elements that the model has learned from its training data. The size of the vocabulary can vary depending on the model’s training corpus and its specific domain.

Handling out-of-vocabulary (OOV) tokens is an essential consideration in large language models. An OOV token arises when the input contains a word that is not present in the model’s vocabulary. To address this, models typically rely on subword tokenization, which splits an unknown word into smaller known pieces, or fall back on a special token that represents unknown words.
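Both strategies can be sketched briefly. The `<unk>` token name, the toy vocabularies, and the greedy longest-match splitting rule below are assumptions for illustration; real subword tokenizers such as BPE or WordPiece learn their vocabularies from data and differ in the details.

```python
UNK = "<unk>"  # placeholder token name, assumed for this example


def encode_with_unk(words, vocab):
    """Replace any word missing from the vocabulary with the unknown token."""
    return [word if word in vocab else UNK for word in words]


def split_into_subwords(word, subwords):
    """Greedy longest-match split of a word into known subword pieces."""
    pieces, start = [], 0
    while start < len(word):
        for end in range(len(word), start, -1):
            if word[start:end] in subwords:
                pieces.append(word[start:end])
                start = end
                break
        else:
            return [UNK]  # no known piece fits at this position
    return pieces


vocab = {"I", "love", "ice", "cream"}
print(encode_with_unk(["I", "love", "gelato"], vocab))

subwords = {"un", "believ", "able", "break"}
print(split_into_subwords("unbelievable", subwords))
print(split_into_subwords("unbreakable", subwords))
```

The subword approach keeps more information: a word the model has never seen as a whole can still be represented by pieces it has seen many times.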

d) Attention mechanisms

Attention mechanisms play a crucial role in the functioning of large language models, contributing to their impressive capabilities in understanding and generating human-like text. These mechanisms enable the model to focus its attention on relevant parts of the input sequence while processing and generating output.

In essence, attention mechanisms allow the model to assign different weights to different parts of the input sequence, emphasizing the important elements that contribute to the generation of accurate and contextually relevant text. This process mimics the human ability to selectively attend to specific information when comprehending or producing language.

The attention mechanism operates through a series of computations that involve comparing each element of the input sequence with the current state of the model. By calculating similarity scores between the elements and the model state, the attention mechanism identifies the most relevant information to consider during each step of the generation process.

One popular form of attention is self-attention, the core operation of the Transformer architecture. Here, the model attends to different positions within its own input sequence, allowing it to capture dependencies and relationships between words or tokens. Self-attention enables the model to consider the context of each word by attending to other words in the sequence, resulting in more coherent and contextually accurate text generation.
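The similarity-score-then-weighting idea can be sketched in plain Python as scaled dot-product self-attention. This is a simplified version: it uses the input vectors directly as queries, keys, and values, whereas a real transformer first applies learned projection matrices and runs several attention heads in parallel. The embeddings are made-up numbers.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity between this position and every position in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Weighted average of all vectors, using the attention weights.
        mixed = [sum(w * vec[i] for w, vec in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up 2-dimensional embeddings for three tokens.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how much one token attends to every token in the sequence. The first token gives more weight to the second token, whose vector is similar, than to the third.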

How do large language models generate text?

At the heart of these models is a technique called deep learning, specifically a type of neural network known as a transformer. These models are trained on massive amounts of text data, learning patterns and relationships within the language.

When it comes to generating text, these models utilize a process called “autoregression.” This means that they predict the next word or phrase based on the context they have been given. The model takes in an initial prompt or input, and then generates a sequence of words that it believes would come next in the text.
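The autoregressive loop itself is simple, as the sketch below shows. The lookup table is a hypothetical stand-in for a trained model and only looks at the last word; a real model scores every token in its vocabulary using the whole context.

```python
# Hypothetical stand-in for a trained model: maps the last word to the
# most likely next word. A real model conditions on the whole context.
NEXT_WORD = {"the": "cat", "cat": "sat", "sat": "on", "on": "the"}


def predict_next(context):
    """Return the predicted next word, or None if the model has no guess."""
    return NEXT_WORD.get(context[-1])


def generate(prompt, max_new_tokens):
    """Autoregressive loop: each prediction is appended and fed back in."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        nxt = predict_next(tokens)
        if nxt is None:
            break
        tokens.append(nxt)
    return tokens


print(" ".join(generate(["the"], max_new_tokens=5)))  # the cat sat on the cat
```

The key point is that the output of one step becomes part of the input to the next, so the text is built up one token at a time.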

But how does the model decide what to generate? It uses a technique called “sampling,” which involves selecting the next word probabilistically. The model assigns a probability to each candidate word based on what it learned from its training data, and then makes a choice according to those probabilities. This allows for some level of randomness in the generated text, making it more diverse and interesting.
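Here is a minimal sketch of sampling, assuming the model has already produced a raw score (a logit) for each candidate word. The words and scores are made up. The `temperature` parameter is a commonly used knob that is not covered in this article: values below 1 make the choice more predictable, values above 1 make it more random.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(words, logits, rng, temperature=1.0):
    """Pick one word at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Made-up candidate words and scores for the prompt "I love ice ...".
words = ["cream", "skating", "cubes"]
logits = [2.0, 1.0, 0.5]

rng = random.Random(0)  # fixed seed so the run is repeatable
print([round(p, 2) for p in softmax(logits)])
print([sample_next(words, logits, rng) for _ in range(10)])
```

The most likely word is chosen most often but not always, which is why the same prompt can produce different continuations on different runs.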

Additionally, the model can be fine-tuned for specific tasks or domains by providing it with more specialized training data. This helps the model generate text that is more relevant and specific to the desired context.

However, it is important to note that these language models are not perfect. They can sometimes produce text that is nonsensical or grammatically incorrect. Bias in the training data can also lead to biased or problematic outputs. Therefore, it is crucial to carefully evaluate and review the generated text to ensure its quality and appropriateness.

The role of data in training language models

The success of large language models depends heavily on the data used to train them. These models require massive amounts of diverse and high-quality data to learn patterns, grammar, semantics, and context. In general, the more good-quality data they are trained on, the better they become at generating coherent and contextually relevant text.

Data for training language models can come from various sources such as books, websites, articles, social media posts, and more. It is crucial to have a wide range of data to ensure that the model learns from different perspectives and writing styles. This diversity helps the model to generalize well and produce more accurate and nuanced responses.

The data is typically preprocessed to remove noise, correct errors, and standardize the format. This preprocessing step ensures that the model focuses on the relevant information and reduces biases or inaccuracies that may be present in the raw data.
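A very small sketch of what such preprocessing can look like is shown below. The specific steps (stripping HTML-like tags, collapsing whitespace, dropping very short and duplicate texts) and the `min_words` threshold are assumptions chosen for illustration; real pipelines are considerably more elaborate.

```python
import re


def clean_corpus(documents, min_words=3):
    """Strip HTML-like tags, normalize whitespace, drop short and duplicate texts."""
    seen, cleaned = set(), []
    for doc in documents:
        text = re.sub(r"<[^>]+>", " ", doc)       # remove HTML-like tags
        text = re.sub(r"\s+", " ", text).strip()  # collapse whitespace
        key = text.lower()                        # case-insensitive duplicate check
        if len(text.split()) < min_words or key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


raw = [
    "<p>Language models   learn from text.</p>",
    "language models learn from text.",
    "Click here",
    "Clean data helps the model generalize.",
]
print(clean_corpus(raw))
```

Of the four raw entries, only two survive: the duplicate and the two-word navigation fragment are filtered out.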

Once the data is prepared, it is used to train the language model using self-supervised learning, which is often described as a form of unsupervised learning because the training targets come from the text itself rather than from human labels. During training, the model learns to predict the most probable next word or sequence of words given a context. It learns the statistical patterns and dependencies in the data, which enables it to generate coherent and contextually appropriate responses.

Training a large language model requires substantial computational resources and time. High-performance GPUs and distributed computing systems are often used to accelerate the training process. The model goes through multiple iterations of training, fine-tuning, and evaluation to improve its performance.

It is important to note that the quality and diversity of the training data directly impact the output of language models. Biased or incomplete data can result in biased or inaccurate responses. Therefore, it is essential to carefully curate and validate the training data to ensure the model’s reliability and fairness.

Evaluating the performance of large language models (LLMs)

Evaluating the performance of large language models is a crucial step in understanding their capabilities and limitations. With their increasing complexity and size, it becomes essential to have a systematic approach to assess their performance accurately.

One common evaluation metric is perplexity, which measures how well a language model predicts a given sequence of words. A lower perplexity indicates better performance, as the model can more accurately predict the next word in a sequence. However, perplexity alone may not provide a comprehensive evaluation, as it does not consider other aspects of language understanding, such as coherence and semantic accuracy.
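Perplexity is the exponential of the average negative log-probability that the model assigns to the correct tokens. The sketch below computes it from a list of such probabilities; the numbers are invented to contrast a confident model with an uncertain one.

```python
import math


def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Made-up probabilities that two models assigned to the correct next words.
confident_model = [0.9, 0.8, 0.95, 0.85]
uncertain_model = [0.3, 0.2, 0.25, 0.4]

print(round(perplexity(confident_model), 2))
print(round(perplexity(uncertain_model), 2))
```

One way to read the result: a model that spreads its probability evenly over k choices at every step has a perplexity of k, so a lower value means the model is effectively choosing among fewer plausible options.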

To assess the quality of generated text, human evaluation is often employed. Human evaluators can rate the generated output based on criteria such as fluency, relevance, and overall coherence. These evaluations provide valuable insights into the strengths and weaknesses of large language models, allowing researchers to fine-tune and improve their performance.

Additionally, benchmark datasets and tasks are used to objectively evaluate the performance of language models. These datasets contain specific linguistic challenges, such as question-answering, summarization, or sentiment analysis. By comparing the model’s performance against human-generated or pre-existing solutions, researchers can gauge the model’s effectiveness and identify areas for improvement.
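As a simple illustration of benchmark scoring, the sketch below computes exact-match accuracy for a question-answering task. The metric choice, the normalization rule, and the sample answers are assumptions for this example; real benchmarks define their own metrics and normalization.

```python
def normalize(answer):
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(answer.lower().split())


def exact_match_accuracy(predictions, references):
    """Fraction of predictions that match the reference answer exactly."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


# Made-up question-answering outputs and reference answers.
predictions = ["Paris", "  berlin ", "Madrid"]
references = ["paris", "Berlin", "Lisbon"]
print(exact_match_accuracy(predictions, references))
```

Exact match is strict: a correct answer phrased differently from the reference counts as wrong, which is one reason benchmark scores are usually combined with human evaluation.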

It is worth noting that evaluating large language models is an ongoing research area, as the complexity and capabilities of these models continue to evolve. It requires a combination of quantitative metrics, human evaluation, and task-specific benchmarks to gain a comprehensive understanding of their performance.

Ethical considerations and potential risks

As we delve deeper into the realm of large language models, it is crucial to address the ethical considerations and potential risks associated with their use. These models have the power to generate incredibly realistic and convincing text, but with such power comes responsibility.

One of the primary concerns is the potential for misuse or manipulation of these models. As language models continue to improve, there is a risk that they could be used to generate malicious content, spread misinformation, or even impersonate individuals. This raises questions about accountability and the need for safeguards to prevent the misuse of these models.

Another ethical consideration is the bias that can be embedded within these models. Language models are trained on vast amounts of data, which means that they can inadvertently learn and perpetuate biases present in the training data. This can result in biased or discriminatory outputs, reinforcing existing societal inequalities and prejudices. It is essential for developers and researchers to actively address and mitigate these biases to ensure fair and equitable outcomes.

Privacy is also a significant concern when it comes to large language models. These models require access to large amounts of data to be trained effectively, often including personal or sensitive information. Safeguarding this data and ensuring proper consent and privacy protections is crucial to prevent any potential breaches or misuse.

Furthermore, the environmental impact of training and running these large models should be considered. The computational power required to train and run these models can have a significant carbon footprint. Finding ways to minimize energy consumption and explore more sustainable alternatives is essential for responsible usage.

To mitigate these risks and address the ethical considerations, it is vital for organizations and researchers to adopt transparent and accountable practices. This includes openly discussing the limitations and potential biases of these models, actively involving diverse stakeholders in the development process, and implementing robust ethical guidelines and review processes.

Applications and future possibilities of large language models

Large language models have quickly become a game-changer in various fields and industries, revolutionizing the way we interact with technology. The applications and future possibilities of these models are incredibly vast, promising advancements that were once considered science fiction.

One of the most prominent applications of large language models is in natural language processing and understanding. These models have the ability to comprehend and generate human-like text, making them invaluable in chatbots, virtual assistants, and customer service applications. They can engage in conversations, provide accurate responses, and even adapt their language style to match the user’s preferences.

Furthermore, large language models have found their way into content creation and generation. From writing articles and blog posts to crafting marketing copy and product descriptions, these models can produce fluent content that is often hard to distinguish from human-written text, although it still needs review for accuracy. This not only saves time and resources but also opens up new possibilities for content generation at scale.

In the field of education, large language models can act as intelligent tutors, providing personalized learning experiences to students. They can answer questions, explain complex concepts, and even adapt the curriculum based on individual needs and learning styles. With the broad knowledge they acquire from their training data, these models have the potential to revolutionize education and make learning more accessible and engaging.

Large language models also hold promise in the realm of research and development. They can assist scientists and researchers in analyzing vast amounts of data, generating hypotheses, and even predicting outcomes. This opens up new avenues for accelerating discoveries and advancements in fields such as medicine, climate science, and beyond.

Looking ahead, the future possibilities of large language models are even more exciting. As these models continue to evolve and improve, we can expect them to play a significant role in areas like content moderation, language translation, creative writing, and even ethical decision-making. Their potential impact spans industries, driving innovation and transforming the way we interact with technology and information.

Q: What are large language models?

A: Large language models, also known as LLMs, are advanced AI models that are capable of understanding and generating human-like text. These models are trained on vast amounts of data and use powerful algorithms, such as transformers, to process and generate language.

Q: How do large language models work?

A: Large language models are built on the transformer architecture. In its original form, it consists of an encoder and a decoder: the encoder takes in input text and converts it into a vector representation, while the decoder takes that representation and generates text based on it. Many generative models, such as the GPT family, use only the decoder part and generate text one token at a time. Either way, the architecture allows the model to process and generate natural language.

Q: What are the use cases of large language models (LLMs)?

A: Large language models have a wide range of use cases. They can be used for text summarization, content generation, language translation, conversational AI, and much more. These models are versatile and can be adapted to suit various tasks that involve natural language processing.

Q: How are language models trained?

A: Language models are trained using a large dataset consisting of text from various sources, such as books, websites, and more. The model is trained to predict the next word in a sentence based on the context provided by the input text. This process helps the model learn patterns and generate text that is coherent and relevant.

Q: What is generative AI?

A: Generative AI refers to AI models or systems that are capable of creating new content, such as text, images, or music, based on patterns and data they have been trained on. Large language models are an example of generative AI as they can generate human-like text based on the input given to them.

Q: What is the future of LLMs?

A: The future of large language models is promising. As these models continue to evolve and improve, they have the potential to revolutionize various fields, such as content creation, customer service, and education. Their ability to understand and generate natural language opens up numerous possibilities for AI applications.

Q: How do large language models like ChatGPT work?

A: Large language models like ChatGPT work by processing input text and generating relevant responses. They use a combination of techniques, including self-attention and transformer models, to understand and generate language. These models are trained on vast amounts of data to ensure their responses are coherent and contextually appropriate.

Q: What are the capabilities of language models?

A: Language models are capable of various tasks, such as text generation, text completion, translation, summarization, and more. They can understand context, generate coherent responses, and adapt to different styles of writing. With advancements in AI research, language models are becoming more sophisticated and capable.

Q: What is reinforcement learning from human feedback?

A: Reinforcement learning from human feedback is a technique used to improve the performance of language models. In this approach, human reviewers rate or rank model-generated responses based on predefined guidelines. These ratings are typically used to train a reward model, and the language model is then optimized to produce responses that score well, making it more aligned with human preferences and ethical considerations.

Q: How are large language models trained for AI chatbot applications?

A: Large language models are trained for AI chatbot applications by using a combination of techniques. The models are first pre-trained on a large dataset consisting of internet text and then fine-tuned using a specific dataset generated by human reviewers. This process helps the model generate more accurate and contextually appropriate responses in chatbot scenarios.
