If you’re not using Large Language Models (LLMs) in your daily work, you’re missing out. You can learn, improve, and optimize your development and testing activities using any LLM, free or paid. Even this blog article has been grammar checked by the OpenAI GPT-4 model. It is written by me, but improved by an AI. There are thousands of apps in the marketplace that harness powerful LLMs at their core through LLM APIs. However, their monthly subscriptions tend to be expensive compared with what the underlying APIs cost: LLM companies charge in cents, but you need to pay in dollars to use those apps. That’s why I created my own app, which lets you bring your own API key and power up your workflow from the Command Line Interface.

What is Kel?

Kel means "ask" in Tamil. Kel is a simple utility that connects your question to the LLM. It takes your questions to the LLM and prints its responses in your terminal.

Kel is built using Python and the respective LLM’s library. It is free and open source.
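To make the idea concrete, here is a minimal sketch of that question-in, answer-out flow. It is not Kel's source code: `ask`, `echo_backend`, `Backend`, and `DEFAULT_PROMPT` are hypothetical names, and the backend is a stand-in that makes no network call.

```python
import argparse
from typing import Callable

# A backend is any function that turns (system prompt, question) into an answer.
Backend = Callable[[str, str], str]

# Illustrative default prompt; this is an assumption, not Kel's real prompt.
DEFAULT_PROMPT = "You are a software engineering assistant."


def echo_backend(prompt: str, question: str) -> str:
    """Stand-in for a real LLM client; it makes no network call."""
    return f"[{prompt}] You asked: {question}"


def ask(question: str, backend: Backend = echo_backend,
        prompt: str = DEFAULT_PROMPT) -> str:
    """Send the question to the backend and return its reply."""
    if not question.strip():
        raise ValueError("question must not be empty")
    return backend(prompt, question)


def main(argv=None) -> None:
    """Parse one positional question and print the backend's reply."""
    parser = argparse.ArgumentParser(description="Ask a question from the terminal.")
    parser.add_argument("question")
    args = parser.parse_args(argv)
    print(ask(args.question))
```

Calling `main(["git command to blame"])` prints the stubbed reply. Swapping `echo_backend` for a function that calls a provider's library is where a real tool would differ.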

Features of Kel

- CLI based utility

- Bring your own API key

- Supports OpenAI, Anthropic, Ollama, and Google models.

- Supports OpenAI assistant model

- Supports styling configuration

- Displays stats such as response time, tokens, and more.

- Displays ~pricing information

- and more
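The stats and approximate pricing features can be pictured with a small sketch. This is an assumption about how such numbers could be derived, not Kel's implementation; `estimate_cost`, `timed`, and the per-1,000-token rates are hypothetical placeholders, not real prices.

```python
import time
from typing import Callable, Tuple


def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  input_rate_per_1k: float, output_rate_per_1k: float) -> float:
    """Approximate cost in dollars from token counts and per-1,000-token rates."""
    input_cost = (prompt_tokens / 1000) * input_rate_per_1k
    output_cost = (completion_tokens / 1000) * output_rate_per_1k
    return input_cost + output_cost


def timed(func: Callable[[], str]) -> Tuple[str, float]:
    """Run func and return its result together with the elapsed seconds."""
    start = time.perf_counter()
    result = func()
    return result, time.perf_counter() - start


# Hypothetical rates, chosen only to keep the arithmetic easy to follow.
cost = estimate_cost(prompt_tokens=500, completion_tokens=1500,
                     input_rate_per_1k=0.01, output_rate_per_1k=0.03)
answer, seconds = timed(lambda: "stubbed answer")
print(f"~${cost:.4f} for 2000 tokens, answered in {seconds:.3f}s")
```

Because the rates change over time and differ per model, any figure computed this way is only an estimate, which matches the "~" in the feature list.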

How to install Kel?

There are a couple of ways you can install Kel.

pip

By using pip it is easy to install Kel.

pip install kel-cli

pipx

python3 -m pip install --user pipx

python3 -m pipx ensurepath

pipx install kel-cli

Once it is installed, run the commands below to configure the config.toml file.

# copy the default config file to current user's home directory

curl -O https://raw.githubusercontent.com/QAInsights/kel/main/config.toml

mkdir -p ~/.kel

mv config.toml ~/.kel/config.toml

Demo

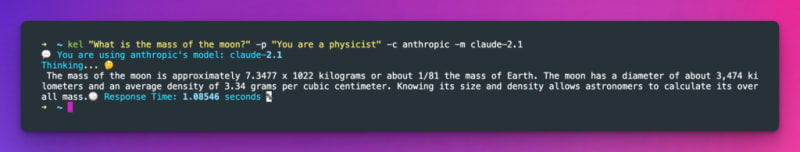

Here is a quick demo of kel.

Kel Commands

Before you ask your questions using kel, you need a valid API key from OpenAI, Anthropic, or Google. If you want a free option, you can run Ollama (https://ollama.ai/) locally and configure its endpoint in config.toml.

Once the API key is set up, issue the command below.

kel -v

To view the help, issue

kel -h

Now, let us ask some questions to the OpenAI model.

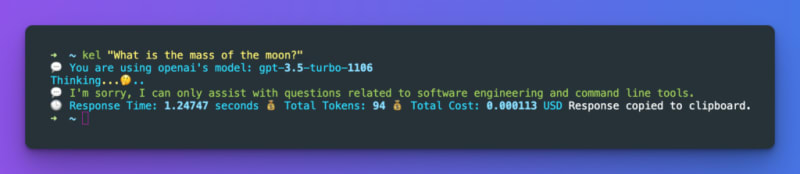

kel "git command to blame"

By default, kel answers the questions which are relevant to software engineering, e.g.

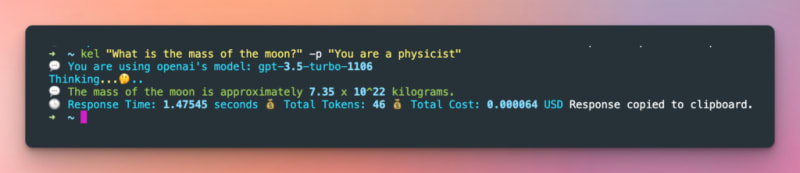

kel "What is the mass of the moon?"

But you can change this behavior by adding your own prompt, e.g.

kel "What is the mass of the moon?" -p "You are a physicist"

This is the magic of kel. It is flexible and customizable. It has umpteen options to customize based on your needs.
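If you want to call kel from a script rather than typing the command each time, the two invocations above can be wrapped in a small helper. This is a sketch that uses only the positional question and the `-p` option shown in this article; `build_kel_command` and `run_kel` are hypothetical names, and `run_kel` assumes kel is installed, on your PATH, and configured with a valid key.

```python
import shlex
import subprocess
from typing import Optional


def build_kel_command(question: str, prompt: Optional[str] = None) -> list[str]:
    """Build the argument list for: kel "<question>" [-p "<prompt>"]."""
    command = ["kel", question]
    if prompt:
        command += ["-p", prompt]
    return command


def run_kel(question: str, prompt: Optional[str] = None) -> str:
    """Run kel and return whatever it wrote to standard output."""
    result = subprocess.run(build_kel_command(question, prompt),
                            capture_output=True, text=True, check=True)
    return result.stdout


# Show the equivalent shell command without actually running kel.
print(shlex.join(build_kel_command("What is the mass of the moon?",
                                   "You are a physicist")))
```

Passing the arguments as a list avoids shell quoting problems when a question contains quotes or other special characters. The captured output may include styling characters, depending on your configuration.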

You can also change the LLM and its model on the fly. Let us ask the same question to Anthropic’s Claude model.

More Kel

Kel also supports the OpenAI Assistant model where you can upload a file and ask questions about that. It is an awesome feature for performance engineers who need to analyze the raw results by using AI magic.

I am saving this feature for my next blog article.

So what is next?

Based on user feedback, I will integrate more prominent models into Kel so that you can improve your workflow. Please let me know in the comments or in GitHub issues.

Conclusion

Using Large Language Models (LLMs) in your daily work can greatly improve and streamline your developer and testing tasks. Kel is a free and open-source utility that allows you to connect with LLMs using your own API key. It offers a command-line interface, supports various LLM models, and provides customization options. Kel’s flexibility and convenience make it a valuable tool for improving workflow and productivity.