INTRODUCTION

Large language models can contribute significantly to healthcare needs

Generative AI and large language models (LLMs) are expected to bring transformative changes to the healthcare sector, including medicine, patient care, and medical education. Powered by artificial intelligence, machine learning, advanced natural language processing, and deep learning, healthcare LLMs are algorithms that analyze vast amounts of medical data to assist healthcare professionals in myriad ways, many of which are still being discovered.

Most of you may be familiar with the term generative AI, which exploded into public consciousness in 2023. Generative AI is a subset of AI that refers to algorithms that can “generate” new content, including audio, code, images, text, simulations, and videos, based on large sets of training data. Think Scribe AI (process documentation), GitHub Copilot (code auto-completion), DALL-E 2 (image generation from text prompts), and Synthesia (synthetic videos). And, of course, ChatGPT (versatile chatbot).

Large language models are a subset of generative AI that focuses on text. Built with billions of parameters and trained on massive text datasets, they learn to understand and generate human-like language. GPT-4, Bard, Grok, Claude, Llama 2, and PaLM 2 are the new breed of LLMs that have the potential to solve long-standing challenges and significantly improve the overall efficiency and quality of professional services.

This blog will delve deeper into the scope of large language models in the healthcare arena.

DEFINITION

What is a large language model in natural language processing?

A large language model is an AI deep learning “foundational model” that processes large datasets to find patterns, grammatical structures, and cultural references and detect intricate relationships between words to predict the next word in a sentence.
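To make “predict the next word” concrete: the model’s final layer produces a score (a logit) for every word in its vocabulary, and a softmax function turns those scores into probabilities. The minimal Python sketch below assumes a tiny hypothetical vocabulary and hand-picked scores; a real LLM computes the scores with its transformer layers over a vocabulary of tens of thousands of tokens or more.

```python
import math

def softmax(scores):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_next_word(vocab, logits):
    """Return the most likely next word and the full probability table."""
    probs = softmax(logits)
    table = dict(zip(vocab, probs))
    best = max(table, key=table.get)
    return best, table

# Hypothetical scores a model might assign after "The patient was prescribed"
vocab = ["aspirin", "banana", "rest", "the"]
logits = [3.2, -1.0, 1.5, 0.1]
word, table = predict_next_word(vocab, logits)
print(word)  # aspirin
```

Picking the single highest-probability word is called greedy selection; chat products often sample from the probability table instead to produce more varied text.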

The term foundational means the model is capable of transfer learning: it applies what it learned during broad pretraining to new, more specific tasks. This model does not need labeled datasets; instead, it relies on self-supervised learning and is built on the “transformer” architecture, proposed by Google researchers in the 2017 paper “Attention Is All You Need.” The transformer dispenses with recurrence and convolutions, so it does not have to process inputs one step at a time.
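Self-supervised learning works because raw text supplies its own labels: each word is the training target for the words that precede it. The Python sketch below uses a hypothetical helper name and a plain whitespace split in place of a real tokenizer to turn one unlabeled sentence into next-word training pairs.

```python
def make_next_token_examples(tokens, context_size):
    """Build (context, target) training pairs from unlabeled text.

    The 'label' for each example is simply the token that follows the
    context window, so no human annotation is needed.
    """
    examples = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        examples.append((context, tokens[i]))
    return examples

sentence = "patient reports mild chest pain".split()
for context, target in make_next_token_examples(sentence, context_size=3):
    print(context, "->", target)
# ['patient'] -> reports
# ['patient', 'reports'] -> mild
# ['patient', 'reports', 'mild'] -> chest
# ['reports', 'mild', 'chest'] -> pain
```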

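Because attention looks at all the tokens in a sequence at once rather than one by one, transformer LLMs, which stack many layers holding billions of parameters, can be trained in parallel on massive datasets to reach high levels of accuracy.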

Text is broken into tokens (words or sub-words), which are then converted into vector representations called embeddings that capture semantic information.
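The tokenization and embedding step can be sketched in a few lines of Python. The toy vocabulary, the greedy longest-match splitting rule, and the random 4-dimensional vectors below are all assumptions for illustration; production tokenizers use learned schemes such as byte-pair encoding, and embedding values are learned during training rather than drawn at random.

```python
import random

# Hypothetical toy sub-word vocabulary, with single letters as a fallback.
VOCAB = ["cardio", "myo", "pathy", "path", "y", "c", "a", "r", "d", "i", "o", "m", "p", "t", "h"]

def tokenize(word, vocab=VOCAB):
    """Greedy longest-match split of a word into sub-word tokens."""
    tokens, start = [], 0
    while start < len(word):
        # Try the longest remaining piece first, then shorter ones
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            raise ValueError(f"cannot tokenize {word[start]!r}")
    return tokens

def build_embeddings(vocab, dim, seed=0):
    """Map each token to a random vector; training would tune these values."""
    rng = random.Random(seed)
    return {tok: [rng.gauss(0.0, 1.0) for _ in range(dim)] for tok in vocab}

tokens = tokenize("cardiomyopathy")
print(tokens)  # ['cardio', 'myo', 'pathy']
embeddings = build_embeddings(VOCAB, dim=4)
vectors = [embeddings[t] for t in tokens]  # one 4-dimensional vector per token
```

Splitting into sub-words lets the model handle rare medical terms it has never seen whole, by composing them from familiar pieces.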

Built from neural network layers organized into large encoder and decoder blocks that process data to extract meaning, LLMs can then construct human-like sentences, respond to text prompts, translate languages, summarize text, answer questions, auto-complete text, and so on. (Many LLMs, such as the GPT family, use only the decoder stack.)
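Generating a sentence is a loop: predict the next token, append it, and repeat until an end marker appears. The Python sketch below assumes a hypothetical hand-written score table in place of a trained decoder; the table looks only at the previous word, whereas a real LLM conditions on the entire preceding context through attention. It uses greedy decoding (always take the top-scoring token).

```python
# Hypothetical stand-in for a trained model: for each token, the scores of
# possible next tokens. A real decoder computes these with attention layers.
NEXT_TOKEN_SCORES = {
    "<start>": {"take": 2.0, "call": 1.0},
    "take": {"one": 1.5, "two": 0.5},
    "one": {"tablet": 2.0},
    "two": {"tablets": 2.0},
    "tablet": {"daily": 1.8, "<end>": 0.2},
    "tablets": {"daily": 1.8, "<end>": 0.2},
    "daily": {"<end>": 3.0},
    "call": {"<end>": 1.0},
}

def generate(max_tokens=10):
    """Greedy decoding: repeatedly append the highest-scoring next token."""
    output = []
    current = "<start>"
    for _ in range(max_tokens):
        candidates = NEXT_TOKEN_SCORES.get(current, {"<end>": 0.0})
        current = max(candidates, key=candidates.get)
        if current == "<end>":
            break
        output.append(current)
    return " ".join(output)

print(generate())  # take one tablet daily
```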

Each encoder block contains an “attention” mechanism that scores how strongly every token relates to every other token; a positional encoding is also added to the token embeddings to provide information about word order, which attention alone ignores. The result is an algebraic map of relations, dependencies, contexts, and meanings, loosely akin to how the human brain establishes patterns.
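The attention and positional encoding steps can be sketched in plain Python. The sketch follows the scaled dot-product attention, softmax(QKᵀ/√d_k)V, and the sinusoidal positional encoding defined in “Attention Is All You Need”; the three toy embedding vectors are made up, and for brevity the queries, keys, and values are the inputs themselves rather than learned projections. The dot product between a query and each key is the “similarity” score, and softmax turns those scores into weights that sum to 1.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal encodings: sin on even dimensions, cos on odd ones."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output is the attention-weighted average of the value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs, all_weights

# Three toy token embeddings (hypothetical values) plus their positions
embeddings = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
pe = positional_encoding(len(embeddings), 4)
x = [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]

# Self-attention: Q, K and V are all x here; a real model first multiplies
# x by learned weight matrices W_Q, W_K and W_V.
out, weights = attention(x, x, x)
```

A transformer runs many of these attention computations (“heads”) side by side in every layer, which is one reason the work parallelizes so well on specialized hardware.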

A transformer neural network relies on graphics processing units (GPUs), tensor processing units (TPUs), and other specialized AI chips to carry out the parallel computations and mathematical operations needed to process the data.

Training is carried out iteratively. Afterward, the model can be fine-tuned on specific tasks to adapt it to particular applications, such as healthcare, using techniques like reinforcement learning from human feedback (RLHF).
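Iterative training and fine-tuning can be illustrated at toy scale. The Python sketch below, with hypothetical helper names and made-up word pairs, trains a bigram next-word model by gradient descent on cross-entropy loss, pass after pass, then continues training the same weights on a small domain-specific dataset. A bigram model is far simpler than a transformer but optimizes the same next-word objective. This shows plain supervised fine-tuning only; RLHF adds a separate reward model and optimization stage that is not shown here.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def train_bigram(pairs, vocab, W=None, epochs=100, lr=0.5):
    """Iteratively fit next-token logits W[prev][next] by gradient descent
    on cross-entropy loss. Passing an existing W fine-tunes it."""
    idx = {w: i for i, w in enumerate(vocab)}
    n = len(vocab)
    if W is None:
        W = [[0.0] * n for _ in range(n)]  # untrained: uniform predictions
    else:
        W = [row[:] for row in W]  # copy so the pretrained weights are kept
    losses = []
    for _ in range(epochs):
        total = 0.0
        for prev, nxt in pairs:
            row = W[idx[prev]]
            probs = softmax(row)
            total += -math.log(probs[idx[nxt]])
            # Gradient of cross-entropy w.r.t. the logits is probs - one_hot(target)
            for j in range(n):
                row[j] -= lr * (probs[j] - (1.0 if j == idx[nxt] else 0.0))
        losses.append(total / len(pairs))
    return W, losses

def predict(W, vocab, prev):
    """Most likely next token after `prev`."""
    row = W[vocab.index(prev)]
    return vocab[row.index(max(row))]

vocab = ["take", "a", "walk", "tablet"]
general_text = [("take", "a"), ("a", "walk")]     # hypothetical "pretraining" data
clinical_text = [("take", "a"), ("a", "tablet")]  # hypothetical domain data

W_pre, pre_losses = train_bigram(general_text, vocab)
W_ft, _ = train_bigram(clinical_text, vocab, W=W_pre, epochs=20)
print(predict(W_pre, vocab, "a"), predict(W_ft, vocab, "a"))  # walk tablet
```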

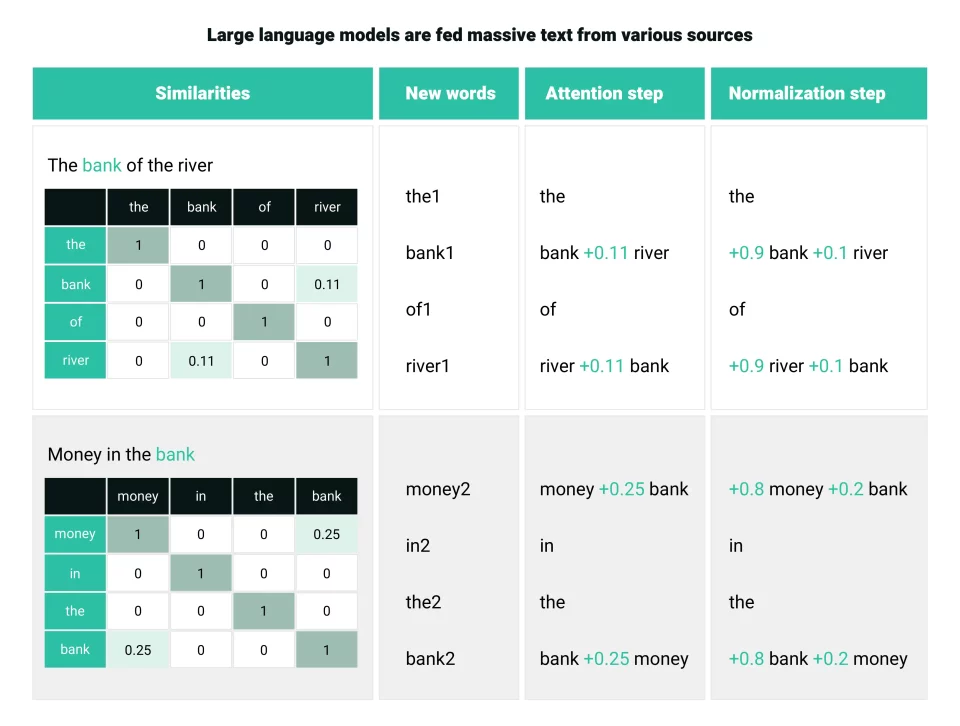

How large language models calculate similarity in the attention mechanism

USE CASES

Enthusiasm for LLM-powered healthcare applications abounds

As investments in the development of LLMs rise, the healthcare sector can find many useful applications to further medical research, improve clinical decision-making, speed up drug discovery, and enhance patient engagement.

Pre-consultation document summaries: Healthcare LLMs can assist healthcare professionals by analyzing large volumes of electronic medical records and patient data, including disease history, lifestyle choices, and personal preferences, to quickly summarize the relevant information before a consultation.

Literature review for medical research: Healthcare LLMs can be used to analyze medical research and scientific papers published in renowned journals to identify patterns, trends, and breakthroughs and assist researchers in advancing their knowledge through the visualization of large datasets, translations, and summaries.

Surveillance of drug safety: LLMs can be used to extract adverse drug reaction information from clinical notes and electronic health records while protecting patient privacy. This can provide new data sources for post-marketing drug safety monitoring and improve patient safety. A collaborative project between the University of California and the US Food and Drug Administration has been carried out along these lines.

Delivery of precision medicine: LLMs can help detect cancer progression patterns. Trained on large volumes of text radiology reports on metastatic disease, healthcare LLMs are already being used to predict the onset of the disease. This kind of diagnostic support can help plan patient treatment pathways in precision medicine, as seen in a research project by Queen’s University, Canada, in collaboration with Memorial Sloan Kettering Cancer Center, New York.

Virtual patient engagement: Palo Alto-based Hippocratic AI is already developing LLM-backed virtual nursing care for chronic disease management, including counseling patients on medication adherence, scheduling appointments, following through on care plans, and reviewing health issues.

Reshaping medical education: The American Medical Association is considering the merits of introducing LLMs as an augmented intelligence tool to aid medical education, after a study found that ChatGPT performed well on the United States Medical Licensing Examination (USMLE).

Whether LLMs will be used as personalized tutors, to provide revision notes, prepare mock test questions, or create learning simulations remains to be seen.

EXAMPLES

Firms jump on the LLM bandwagon

Competition is fierce among industry leaders Google, Amazon, Microsoft, and IBM as each firm moves into the promising arena of generative AI and LLMs in healthcare.

Google: Offers a suite of generative AI healthcare models, including MedLM, which supports patient studies, documentation of physician-patient interactions, administrative workflows, and a handoff tool for nurses. A healthcare version of Gemini is on the anvil, and Google is also working on an LLM for differential diagnosis. It has a technology partnership with BenchSci, which is working to identify biomarkers to aid drug discovery and the understanding of disease progression and cures.

Microsoft: The Azure AI Health Insights services offer patient timelines, clinical report simplification, radiology insights, and health bot applications. Microsoft recently added healthcare solutions to Fabric, its unified analytics platform, enabling organizations to combine data from previously siloed sources such as EHRs, picture archiving and communication systems, lab and claims systems, and medical devices. It has also invested $13 billion in OpenAI.

Amazon: AWS HealthScribe uses speech recognition and generative AI to save clinicians time by generating clinical documentation. In addition to Bedrock, its service for building LLM applications, and its Titan foundation models, Amazon is reported to be working on Olympus, a model said to have two trillion parameters, which would make it one of the largest ever. It also holds a minority stake in AI startup Anthropic, which has launched the LLM chatbot Claude 2.

ETHICS

Avoid AI bias and commit to health equity

Despite the excitement and optimism over generative AI and large language models in healthcare, there are several ethical considerations.

- Large language models absorb and perpetuate inherent bias from the text data they are fed.

- LLM output text is not attributed to sources, may be out of date, is not always accurate, and has already attracted IP infringement lawsuits.

- Using LLMs as assistive tools can erode the trust that patients place in physicians and that the academic community places in medical research.

- Because LLMs are offered on subscription models, there is a risk that the digital divide will widen, as not everyone can afford access.

- Sensitive protected health information (PHI) is at risk when LLMs are given access to medical data.

Voluntary commitments and the AI transparency rule

To ensure AI is deployed safely and responsibly in healthcare, the US government has secured voluntary commitments from leading AI technology companies, healthcare providers, and payers, who have committed to:

- Developing AI solutions that optimize healthcare delivery and payment by advancing health equity, expanding access, reducing clinician burnout, and improving patient experience and outcomes.

- Deploying a trust mechanism that informs users when content is largely AI-generated and has not been reviewed or edited by a human.

- Adhering to a risk management framework that tracks applications, accounts for potential harms, and takes steps to mitigate risks.

- Researching, investigating, and developing AI swiftly but responsibly.

To govern the use of AI and other “predictive” decision-support technologies in EHRs and other certified health IT used by healthcare companies, the US Department of Health and Human Services (HHS), through its Office of the National Coordinator for Health Information Technology (ONC), has announced the “Health Data, Technology, and Interoperability” final rule. Known as HTI-1, it mandates that:

The health IT developer must make available to the software users (such as healthcare providers) information about the predictive decision support intervention, including:

- The purpose of the intervention.

- Funding sources for the intervention’s development.

- Criteria used to influence the training data set.

- The process used to ensure fairness in the development of the intervention.

- A description of the external validation process.
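A developer tracking these disclosure items might keep them in a simple structured record and check for gaps before release. The Python sketch below is hypothetical: the class name, field names, and example values are illustrative rather than taken from the regulation text, and because the list above says “including,” a real disclosure would carry more fields than these five.

```python
from dataclasses import dataclass, fields

@dataclass
class PredictiveDSIDisclosure:
    """Hypothetical record of the HTI-1 disclosure items listed above.

    Field names are illustrative, not the regulation's own wording.
    """
    purpose: str
    funding_sources: str
    training_data_criteria: str
    fairness_process: str
    external_validation: str

    def missing_items(self):
        """Return the names of any items that were left blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

# Hypothetical example intervention with two items not yet documented
disclosure = PredictiveDSIDisclosure(
    purpose="Flag inpatients at elevated risk of sepsis",
    funding_sources="Internal R&D budget",
    training_data_criteria="",
    fairness_process="Performance compared across age, sex and race subgroups",
    external_validation="",
)
print(disclosure.missing_items())  # ['training_data_criteria', 'external_validation']
```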

CONCLUSION

Adhere to the FAVES principles in AI

ONC-certified health IT supports the care delivered by more than 96% of hospitals and 78% of office-based physicians around the country. In addition to algorithm transparency, the government has announced a new baseline standard, Version 3 of the United States Core Data for Interoperability. Developers of certified health IT should move to USCDI v3 by January 1, 2026, and adopt some new metrics to participate in the certification program.

If you are interested in leveraging LLMs and generative AI for healthcare, make sure your custom LLM software development efforts are aligned with the FAVES principles for AI: fair, appropriate, valid, effective, and safe.
