@inproceedings{rawte-etal-2023-troubling,

title = "The Troubling Emergence of Hallucination in Large Language Models - An Extensive Definition, Quantification, and Prescriptive Remediations",

author = "Rawte, Vipula and

Chakraborty, Swagata and

Pathak, Agnibh and

Sarkar, Anubhav and

Tonmoy, S.M Towhidul Islam and

Chadha, Aman and

Sheth, Amit and

Das, Amitava",

editor = "Bouamor, Houda and

Pino, Juan and

Bali, Kalika",

booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2023",

address = "Singapore",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.emnlp-main.155",

doi = "10.18653/v1/2023.emnlp-main.155",

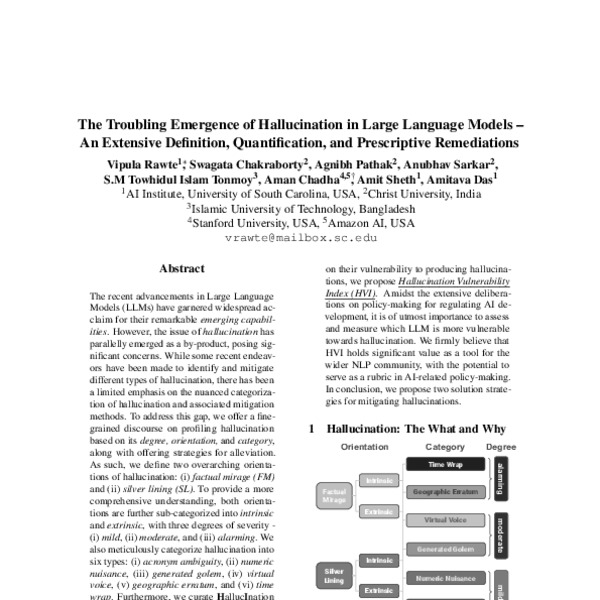

pages = "2541--2573",

abstract = "The recent advancements in Large Language Models (LLMs) have garnered widespread acclaim for their remarkable emerging capabilities. However, the issue of hallucination has parallelly emerged as a by-product, posing significant concerns. While some recent endeavors have been made to identify and mitigate different types of hallucination, there has been a limited emphasis on the nuanced categorization of hallucination and associated mitigation methods. To address this gap, we offer a fine-grained discourse on profiling hallucination based on its degree, orientation, and category, along with offering strategies for alleviation. As such, we define two overarching orientations of hallucination: (i) factual mirage (FM) and (ii) silver lining (SL). To provide a more comprehensive understanding, both orientations are further sub-categorized into intrinsic and extrinsic, with three degrees of severity - (i) mild, (ii) moderate, and (iii) alarming. We also meticulously categorize hallucination into six types: (i) acronym ambiguity, (ii) numeric nuisance, (iii) generated golem, (iv) virtual voice, (v) geographic erratum, and (vi) time wrap. Furthermore, we curate HallucInation eLiciTation (HILT), a publicly available dataset comprising of 75,000 samples generated using 15 contemporary LLMs along with human annotations for the aforementioned categories. Finally, to establish a method for quantifying and to offer a comparative spectrum that allows us to evaluate and rank LLMs based on their vulnerability to producing hallucinations, we propose Hallucination Vulnerability Index (HVI). Amidst the extensive deliberations on policy-making for regulating AI development, it is of utmost importance to assess and measure which LLM is more vulnerable towards hallucination. We firmly believe that HVI holds significant value as a tool for the wider NLP community, with the potential to serve as a rubric in AI-related policy-making. 
In conclusion, we propose two solution strategies for mitigating hallucinations.",

}

<?xml version="1.0" encoding="UTF-8"?>

<modsCollection xmlns="http://www.loc.gov/mods/v3">

<mods ID="rawte-etal-2023-troubling">

<titleInfo>

<title>The Troubling Emergence of Hallucination in Large Language Models - An Extensive Definition, Quantification, and Prescriptive Remediations</title>

</titleInfo>

<name type="personal">

<namePart type="given">Vipula</namePart>

<namePart type="family">Rawte</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Swagata</namePart>

<namePart type="family">Chakraborty</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Agnibh</namePart>

<namePart type="family">Pathak</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Anubhav</namePart>

<namePart type="family">Sarkar</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">S.M</namePart>

<namePart type="given">Towhidul</namePart>

<namePart type="given">Islam</namePart>

<namePart type="family">Tonmoy</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Aman</namePart>

<namePart type="family">Chadha</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Amit</namePart>

<namePart type="family">Sheth</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Amitava</namePart>

<namePart type="family">Das</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<originInfo>

<dateIssued>2023-12</dateIssued>

</originInfo>

<typeOfResource>text</typeOfResource>

<relatedItem type="host">

<titleInfo>

<title>Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing</title>

</titleInfo>

<name type="personal">

<namePart type="given">Houda</namePart>

<namePart type="family">Bouamor</namePart>

<role>

<roleTerm authority="marcrelator" type="text">editor</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Juan</namePart>

<namePart type="family">Pino</namePart>

<role>

<roleTerm authority="marcrelator" type="text">editor</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Kalika</namePart>

<namePart type="family">Bali</namePart>

<role>

<roleTerm authority="marcrelator" type="text">editor</roleTerm>

</role>

</name>

<originInfo>

<publisher>Association for Computational Linguistics</publisher>

<place>

<placeTerm type="text">Singapore</placeTerm>

</place>

</originInfo>

<genre authority="marcgt">conference publication</genre>

</relatedItem>

<abstract>The recent advancements in Large Language Models (LLMs) have garnered widespread acclaim for their remarkable emerging capabilities. However, the issue of hallucination has parallelly emerged as a by-product, posing significant concerns. While some recent endeavors have been made to identify and mitigate different types of hallucination, there has been a limited emphasis on the nuanced categorization of hallucination and associated mitigation methods. To address this gap, we offer a fine-grained discourse on profiling hallucination based on its degree, orientation, and category, along with offering strategies for alleviation. As such, we define two overarching orientations of hallucination: (i) factual mirage (FM) and (ii) silver lining (SL). To provide a more comprehensive understanding, both orientations are further sub-categorized into intrinsic and extrinsic, with three degrees of severity - (i) mild, (ii) moderate, and (iii) alarming. We also meticulously categorize hallucination into six types: (i) acronym ambiguity, (ii) numeric nuisance, (iii) generated golem, (iv) virtual voice, (v) geographic erratum, and (vi) time wrap. Furthermore, we curate HallucInation eLiciTation (HILT), a publicly available dataset comprising of 75,000 samples generated using 15 contemporary LLMs along with human annotations for the aforementioned categories. Finally, to establish a method for quantifying and to offer a comparative spectrum that allows us to evaluate and rank LLMs based on their vulnerability to producing hallucinations, we propose Hallucination Vulnerability Index (HVI). Amidst the extensive deliberations on policy-making for regulating AI development, it is of utmost importance to assess and measure which LLM is more vulnerable towards hallucination. We firmly believe that HVI holds significant value as a tool for the wider NLP community, with the potential to serve as a rubric in AI-related policy-making. 
In conclusion, we propose two solution strategies for mitigating hallucinations.</abstract>

<identifier type="citekey">rawte-etal-2023-troubling</identifier>

<identifier type="doi">10.18653/v1/2023.emnlp-main.155</identifier>

<location>

<url>https://aclanthology.org/2023.emnlp-main.155</url>

</location>

<part>

<date>2023-12</date>

<extent unit="page">

<start>2541</start>

<end>2573</end>

</extent>

</part>

</mods>

</modsCollection>

%0 Conference Proceedings
%T The Troubling Emergence of Hallucination in Large Language Models - An Extensive Definition, Quantification, and Prescriptive Remediations
%A Rawte, Vipula
%A Chakraborty, Swagata
%A Pathak, Agnibh
%A Sarkar, Anubhav
%A Tonmoy, S.M Towhidul Islam
%A Chadha, Aman
%A Sheth, Amit
%A Das, Amitava
%Y Bouamor, Houda
%Y Pino, Juan
%Y Bali, Kalika
%S Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing
%D 2023
%8 December
%I Association for Computational Linguistics
%C Singapore
%F rawte-etal-2023-troubling
%X The recent advancements in Large Language Models (LLMs) have garnered widespread acclaim for their remarkable emerging capabilities. However, the issue of hallucination has parallelly emerged as a by-product, posing significant concerns. While some recent endeavors have been made to identify and mitigate different types of hallucination, there has been a limited emphasis on the nuanced categorization of hallucination and associated mitigation methods. To address this gap, we offer a fine-grained discourse on profiling hallucination based on its degree, orientation, and category, along with offering strategies for alleviation. As such, we define two overarching orientations of hallucination: (i) factual mirage (FM) and (ii) silver lining (SL). To provide a more comprehensive understanding, both orientations are further sub-categorized into intrinsic and extrinsic, with three degrees of severity - (i) mild, (ii) moderate, and (iii) alarming. We also meticulously categorize hallucination into six types: (i) acronym ambiguity, (ii) numeric nuisance, (iii) generated golem, (iv) virtual voice, (v) geographic erratum, and (vi) time wrap. Furthermore, we curate HallucInation eLiciTation (HILT), a publicly available dataset comprising of 75,000 samples generated using 15 contemporary LLMs along with human annotations for the aforementioned categories. Finally, to establish a method for quantifying and to offer a comparative spectrum that allows us to evaluate and rank LLMs based on their vulnerability to producing hallucinations, we propose Hallucination Vulnerability Index (HVI). Amidst the extensive deliberations on policy-making for regulating AI development, it is of utmost importance to assess and measure which LLM is more vulnerable towards hallucination. We firmly believe that HVI holds significant value as a tool for the wider NLP community, with the potential to serve as a rubric in AI-related policy-making. In conclusion, we propose two solution strategies for mitigating hallucinations.
%R 10.18653/v1/2023.emnlp-main.155
%U https://aclanthology.org/2023.emnlp-main.155
%U https://doi.org/10.18653/v1/2023.emnlp-main.155
%P 2541-2573

Markdown (Informal)

[The Troubling Emergence of Hallucination in Large Language Models – An Extensive Definition, Quantification, and Prescriptive Remediations](https://aclanthology.org/2023.emnlp-main.155) (Rawte et al., EMNLP 2023)

ACL

- Vipula Rawte, Swagata Chakraborty, Agnibh Pathak, Anubhav Sarkar, S.M Towhidul Islam Tonmoy, Aman Chadha, Amit Sheth, and Amitava Das. 2023. The Troubling Emergence of Hallucination in Large Language Models – An Extensive Definition, Quantification, and Prescriptive Remediations. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, pages 2541–2573, Singapore. Association for Computational Linguistics.