The number of PubMed-indexed publications on Large Language Models (LLMs) in digital health and medicine has grown markedly over time, and 2024 is on course to continue that upward trend. This growth reflects rising interest in, and recognition of, the potential of LLMs to transform healthcare practice and research.

Understanding LLMs in Healthcare

In healthcare and medicine, LLMs have the potential to support clinical diagnosis, reduce administrative burdens, automate communication between patients and clinicians, educate students, accelerate scientific discovery, and improve health literacy. As a recent review highlights, LLMs could help make healthcare delivery and practice more efficient and more personalized.

Applications of LLMs in Medicine

LLMs have potential applications in primary care, where they can assist medical practitioners, streamline administrative paperwork, and empower patients. Because they can understand and generate human-like text, they are useful for interpreting medical literature and supporting clinical decision-making. Early work suggests they may also improve efficiency and patient outcomes.

LLMs in Cognitive Decline and Depression

One key use case of LLMs in healthcare is the diagnosis and management of cognitive decline and depression. LLMs have been used to predict dementia from spontaneous speech and to support differential diagnosis of neurodegenerative disorders. A study funded by Xunta de Galicia and the University of Vigo also highlights their potential for managing postpartum depression, personalizing healthcare, and augmenting clinical tasks.

The Pitfalls of LLMs

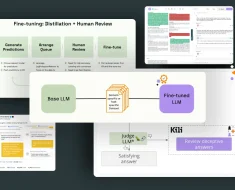

Despite their potential, LLMs have shortcomings, including inaccuracies, a lack of explainability, and security risks. Inaccuracies can produce incorrect or misleading statements. This is especially problematic in medicine, where data are often contradictory and decisions must be tailored to individual patients. To address this, models should be trained with greater emphasis on factual correctness. Domain experts should be involved in refining them, and datasets should be constructed so that ground-truth information is more salient.

Ensuring Explainability and Security

Explainability is crucial for LLMs in healthcare, because the rationale behind a model's output matters as much as the output itself. Indirect strategies such as SHapley Additive exPlanations (SHAP) and prompt engineering can help make model outputs more interpretable. On the security side, the main concerns include data poisoning, which compromises model integrity, and data privacy breaches, which compromise patient and user privacy. Regulatory oversight is therefore essential to ensure that LLMs are used safely and effectively in medical practice.

Overall, although challenges remain, LLMs have considerable potential to transform healthcare and medicine. With further development and careful calibration, these models could substantially improve the quality of care and patient outcomes.
