Azure Machine Learning is a service that enables you to create, train, deploy, and manage machine learning models and experiments. You can also use it to run HPC applications, such as simulations, rendering, or data analysis.

AutoDock is a widely used suite of molecular docking tools, valuable for researchers who run large-scale computational tasks in HPC environments. It is especially useful for drug design and for studies of biomolecular interactions.

This article guides you through running AutoDock Vina docking scripts on the Azure Machine Learning platform. You will also learn how to provision Azure NetApp Files (ANF) volumes for Azure Machine Learning notebooks and compute, providing persistent shared storage that suits many HPC applications.

The steps are:

- Prepare your Azure Machine Learning environment

- Create an ANF volume

- Install AutoDock Vina on the ANF volume

- Create a compute cluster to run your job

- Create your AutoDock Vina docking script

- Create your command job to run the docking script on the compute cluster

Finally, we summarize the benefits of using the Azure Machine Learning (AML) environment for HPC applications and discuss options you can adjust to your needs, such as VM sizes, pricing, and storage.

Let’s begin!

- Prepare your Azure Machine Learning environment

- Follow Quickstart: Get started with Azure Machine Learning to create an Azure Machine Learning workspace, sign in to the studio, and create a new notebook.

- Set your kernel and create a handle to the workspace. You'll create ml_client as the handle to the workspace. Enter your subscription ID, resource group name, and workspace name, then run the following code in your notebook.

from azure.ai.ml import MLClient
from azure.identity import DefaultAzureCredential

# Authenticate
credential = DefaultAzureCredential()

# Get a handle to the workspace
ml_client = MLClient(
    credential=credential,
    subscription_id="<SUBSCRIPTION_ID>",
    resource_group_name="<RESOURCE_GROUP>",
    workspace_name="<AML_WORKSPACE_NAME>",
)

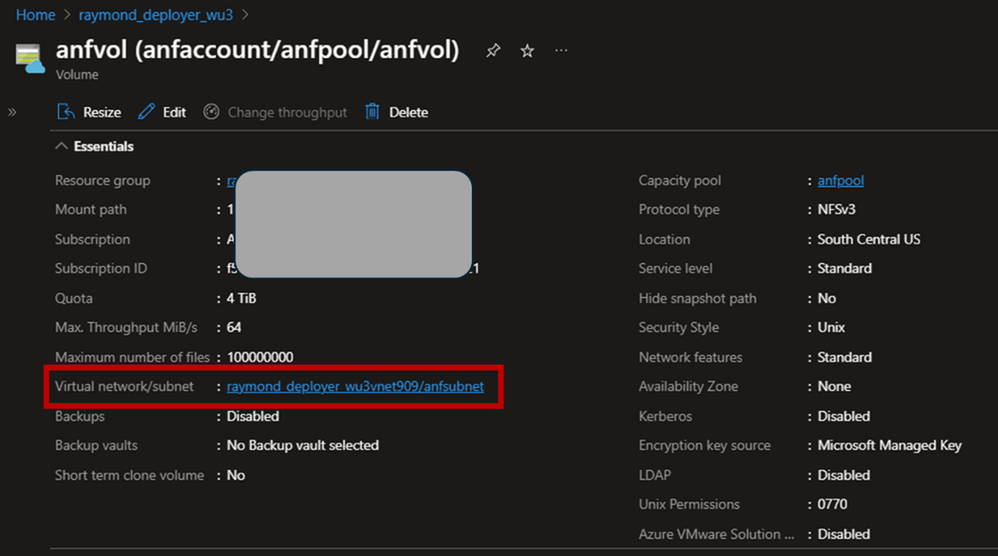

- Create an ANF volume

1. Follow Create an NFS volume for Azure NetApp Files to create a 4 TiB volume with the "Standard" service level and the NFSv3 protocol.

To make the ANF volume reachable from Azure Machine Learning compute, we place the Azure Machine Learning compute resources and the subnet delegated to Azure NetApp Files in the same virtual network (VNet).

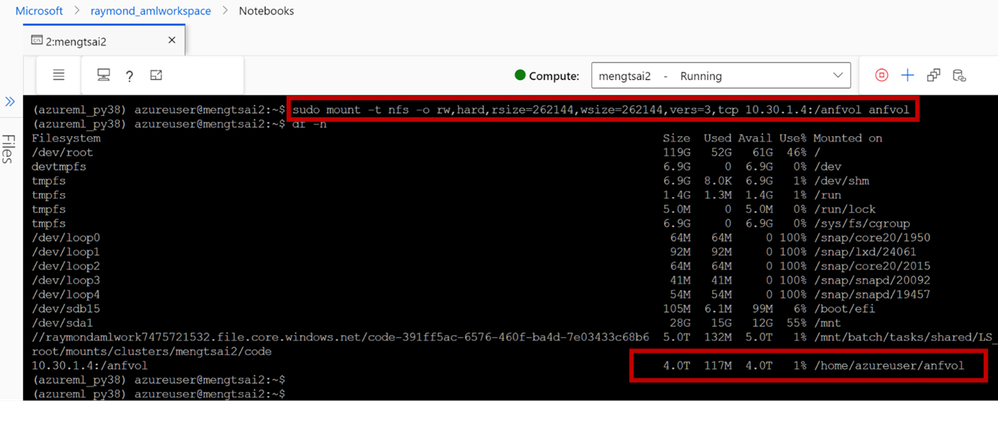

2. Open a terminal in your notebook and follow Mount NFS volumes for virtual machines (Microsoft Learn) to verify that you can mount the ANF volume from the compute instance.
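If you prefer to script the mount check from Python in the notebook, the following is a minimal sketch. It assembles the same `mount` command that the job uses later in this article and checks whether the mount point is active with `os.path.ismount`. The server IP `10.30.1.4` and export path `/anfvol` are that example's values, and the helper names are hypothetical. Take the actual values from your volume's mount instructions in the Azure portal.

```python
from __future__ import annotations

import os
import shlex

# NFSv3 mount options copied from the job command used later in this article.
DEFAULT_NFS_OPTIONS = "rw,hard,rsize=262144,wsize=262144,vers=3,tcp"


def build_mount_command(server_ip: str, export_path: str, mount_point: str,
                        options: str = DEFAULT_NFS_OPTIONS) -> list[str]:
    """Return the argv for mounting an NFS export (hypothetical helper)."""
    return ["mount", "-t", "nfs", "-o", options,
            f"{server_ip}:{export_path}", mount_point]


def is_mounted(path: str) -> bool:
    """Return True if `path` (with ~ expanded) is an active mount point."""
    return os.path.ismount(os.path.expanduser(path))


if __name__ == "__main__":
    mount_point = os.path.expanduser("~/anfvol")
    cmd = build_mount_command("10.30.1.4", "/anfvol", mount_point)
    # Print the commands to run with sudo in the notebook terminal.
    print("sudo mkdir -p " + shlex.quote(mount_point))
    print("sudo " + shlex.join(cmd))
    print("ANF volume mounted:", is_mounted(mount_point))
```

After running the printed commands in the terminal, calling `is_mounted("~/anfvol")` again should return `True` if the mount succeeded.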

- Install AutoDock Vina on the ANF volume

Follow the Installation page of the AutoDock Vina 1.2.0 documentation (autodock-vina.readthedocs.io) to install AutoDock Vina. Here we download the prebuilt Linux executable for release 1.2.5, the latest release at the time of writing, into the mounted ANF volume:

$ cd ~/anfvol
$ wget https://github.com/ccsb-scripps/AutoDock-Vina/releases/download/v1.2.5/vina_1.2.5_linux_x86_64
$ chmod +x vina_1.2.5_linux_x86_64
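The same installation can be done from a notebook cell in Python. This is a minimal sketch with three assumptions. First, the volume is mounted at `~/anfvol`. Second, it reuses the release URL pattern from the `wget` command above and assumes other versions follow the same pattern. Third, it approximates `chmod +x` by adding execute bits for user, group, and others. The function names are illustrative.

```python
import os
import stat
import urllib.request

# URL pattern from the wget command above; other versions are assumed to follow it.
RELEASE_URL = ("https://github.com/ccsb-scripps/AutoDock-Vina/releases/download/"
               "v{version}/vina_{version}_linux_x86_64")


def release_url(version: str = "1.2.5") -> str:
    """Download URL of the prebuilt Linux x86_64 Vina binary."""
    return RELEASE_URL.format(version=version)


def make_executable(path: str) -> None:
    """Add execute permission for user, group, and others (similar to chmod +x)."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)


def install_vina(dest_dir: str = "~/anfvol", version: str = "1.2.5") -> str:
    """Download the Vina binary into dest_dir and make it executable."""
    dest = os.path.join(os.path.expanduser(dest_dir),
                        f"vina_{version}_linux_x86_64")
    urllib.request.urlretrieve(release_url(version), dest)
    make_executable(dest)
    return dest
```

Calling `install_vina()` on a notebook whose volume is mounted at `~/anfvol` should leave `~/anfvol/vina_1.2.5_linux_x86_64` ready for the cluster job to run.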

- Create a compute cluster to run your job

from azure.ai.ml.entities import AmlCompute, NetworkSettings

# Name assigned to the compute cluster
cpu_compute_target = "cpu-dockingcluster"

try:
    # Check whether the compute target already exists
    cpu_cluster = ml_client.compute.get(cpu_compute_target)
    print(
        f"You already have a cluster named {cpu_compute_target}, we'll reuse it as is."
    )
except Exception:
    print("Creating a new cpu compute target...")
    # Create the Azure Machine Learning compute object with the intended parameters.
    # If you run into an out-of-quota error, change the size to a comparable VM that is available.
    # Learn more on https://azure.microsoft.com/en-us/pricing/details/machine-learning/.
    # Place the cluster in the same VNet as the ANF volume
    network_settings = NetworkSettings(
        vnet_name="/subscriptions/<SUBSCRIPTION_ID>/resourceGroups/<RESOURCE_GROUP>/providers/Microsoft.Network/virtualNetworks/<VNET_NAME>",
        subnet="/subscriptions/<SUBSCRIPTION_ID>/resourceGroups/<RESOURCE_GROUP>/providers/Microsoft.Network/virtualNetworks/<VNET_NAME>/subnets/<SUBNET_NAME>",
    )
    cpu_cluster = AmlCompute(
        name=cpu_compute_target,
        # Azure Machine Learning Compute is the on-demand VM service
        type="amlcompute",
        # VM size (HBv3-series HPC VM)
        size="STANDARD_HB120RS_V3",
        # Minimum number of nodes kept running when no job is running
        min_instances=0,
        # Maximum number of nodes in the cluster
        max_instances=4,
        # Seconds a node stays idle after a job finishes before it is scaled down
        idle_time_before_scale_down=180,
        # Dedicated or LowPriority. The latter is cheaper, but jobs may be preempted
        tier="Dedicated",
        network_settings=network_settings,
    )
    print(
        f"AMLCompute with name {cpu_cluster.name} will be created, with compute size {cpu_cluster.size}"
    )
    # Pass the object to MLClient's begin_create_or_update method; it returns a poller,
    # and .result() waits for provisioning and returns the created AmlCompute object
    cpu_cluster = ml_client.compute.begin_create_or_update(cpu_cluster).result()
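The `vnet_name` and `subnet` values above are full Azure Resource Manager IDs. To avoid typos when filling in the placeholders, you could build them with a small helper like the sketch below. The function names are hypothetical. The subnet should be the one you chose for the compute cluster, in the VNet that also holds the ANF delegated subnet.

```python
def vnet_id(subscription_id: str, resource_group: str, vnet_name: str) -> str:
    """Resource ID of a virtual network."""
    return (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/Microsoft.Network/virtualNetworks/{vnet_name}")


def subnet_id(subscription_id: str, resource_group: str,
              vnet_name: str, subnet_name: str) -> str:
    """Resource ID of a subnet inside that virtual network."""
    return f"{vnet_id(subscription_id, resource_group, vnet_name)}/subnets/{subnet_name}"


# Example use with the SDK (not executed here):
# network_settings = NetworkSettings(
#     vnet_name=vnet_id("<SUBSCRIPTION_ID>", "<RESOURCE_GROUP>", "<VNET_NAME>"),
#     subnet=subnet_id("<SUBSCRIPTION_ID>", "<RESOURCE_GROUP>", "<VNET_NAME>", "<SUBNET_NAME>"),
# )
```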

- Create your AutoDock Vina docking script (basicdocking.sh)

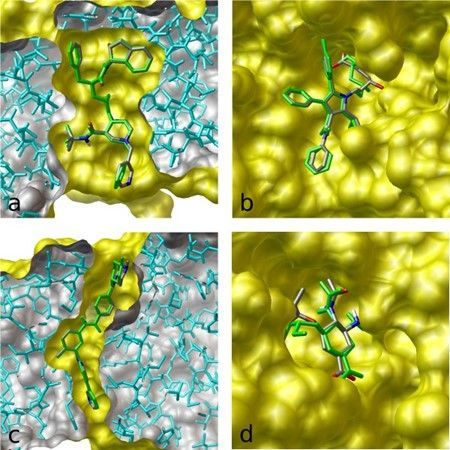

In this example, we use AutoDock Vina to dock the approved anticancer drug imatinib (Gleevec) into the structure of c-Abl (PDB entry 1iep). The target for this protocol is the kinase domain of the proto-oncogene tyrosine-protein kinase c-Abl. This protein is an important target for cancer chemotherapy, in particular for the treatment of chronic myelogenous leukemia. We set the exhaustiveness to 32 (the Vina default is 8) to obtain more consistent docking results.
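The search box and the exhaustiveness setting are the main inputs to this docking run. The sketch below is an illustration, not part of the original workflow. It reads a Vina config file made of `key = value` lines, such as `center_x` and `size_x` (Vina option names), and rebuilds the Vina command line used in the script that follows. The sample values come from the grid center and size that Vina reports in the output later in this article. The actual contents of `1iep_receptor_vina_box.txt` may be formatted differently, and the helper names are hypothetical.

```python
from __future__ import annotations


def parse_vina_config(text: str) -> dict[str, str]:
    """Parse `key = value` lines, skipping blank lines and '#' comments."""
    options: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"expected 'key = value', got {raw!r}")
        options[key.strip()] = value.strip()
    return options


def grid_box(options: dict[str, str]):
    """Return ((center_x, center_y, center_z), (size_x, size_y, size_z)) in Angstrom."""
    center = tuple(float(options[f"center_{axis}"]) for axis in "xyz")
    size = tuple(float(options[f"size_{axis}"]) for axis in "xyz")
    return center, size


def vina_command(binary: str, receptor: str, ligand: str, config: str,
                 out: str, exhaustiveness: int = 32) -> list[str]:
    """Argument list equivalent to the vina call in basicdocking.sh."""
    return [binary, "--receptor", receptor, "--ligand", ligand,
            "--config", config, f"--exhaustiveness={exhaustiveness}",
            "--out", out]


# Illustrative box built from the grid reported in the docking output.
SAMPLE_BOX = """\
center_x = 15.190
center_y = 53.903
center_z = 16.917
size_x = 20.0
size_y = 20.0
size_z = 20.0
"""

if __name__ == "__main__":
    center, size = grid_box(parse_vina_config(SAMPLE_BOX))
    print("center:", center, "size:", size)
    print("box volume (cubic Angstrom):", size[0] * size[1] * size[2])
```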

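import os

train_src_dir = "./src"
os.makedirs(train_src_dir, exist_ok=True)

In a new notebook cell (%%writefile must be the first line of its cell), write the script:

%%writefile {train_src_dir}/basicdocking.sh
#!/bin/bash
# Stop at the first failing command
set -e
# Get the example input files from the AutoDock Vina repository
git clone https://github.com/ccsb-scripps/AutoDock-Vina.git
cd AutoDock-Vina/example/basic_docking/solution
# Run the Vina executable installed on the ANF volume
~/anfvol/vina_1.2.5_linux_x86_64 --receptor 1iep_receptor.pdbqt --ligand 1iep_ligand.pdbqt --config 1iep_receptor_vina_box.txt --exhaustiveness=32 --out 1iep_ligand_vina_out.pdbqt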

This script runs AutoDock Vina to perform the molecular docking and writes all the poses it finds to a PDBQT file named 1iep_ligand_vina_out.pdbqt. Vina also prints docking information to standard output, which appears in the job's logs.
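To inspect the poses programmatically, you can read the output file with a few lines of Python. This sketch assumes the usual Vina output layout, in which each pose (MODEL) carries a `REMARK VINA RESULT:` line with the affinity and the two RMSD values. The sample text is a synthetic excerpt built from the first two modes reported later in this article, and the function name is hypothetical.

```python
from __future__ import annotations


def read_vina_poses(pdbqt_text: str) -> list[tuple[float, float, float]]:
    """Return (affinity kcal/mol, rmsd l.b., rmsd u.b.) for each pose, in file order."""
    poses = []
    for line in pdbqt_text.splitlines():
        if line.startswith("REMARK VINA RESULT:"):
            fields = line.split(":", 1)[1].split()
            affinity, rmsd_lb, rmsd_ub = (float(x) for x in fields[:3])
            poses.append((affinity, rmsd_lb, rmsd_ub))
    return poses


# Synthetic excerpt; a real output file also contains the atom records of each pose.
SAMPLE = """\
MODEL 1
REMARK VINA RESULT:    -13.22      0.000      0.000
ENDMDL
MODEL 2
REMARK VINA RESULT:    -11.37      1.553      1.987
ENDMDL
"""

if __name__ == "__main__":
    # For real results, read the file instead, e.g.
    # open("1iep_ligand_vina_out.pdbqt").read()
    poses = read_vina_poses(SAMPLE)
    best = min(poses, key=lambda p: p[0])
    print(f"{len(poses)} poses, best affinity {best[0]} kcal/mol")
```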

- Create your command job to run the docking script on the compute cluster

from azure.ai.ml import command, MpiDistribution, Input

registered_model_name = "autodock_basicdocking"

job = command(
    code="./src/",  # location of source code
    # Install the NFS client, mount the ANF volume, copy the script to it, and run it.
    # 10.30.1.4:/anfvol is the example volume's mount path; replace it with yours.
    command=(
        "apt-get -y install nfs-common; "
        "mkdir -p ~/anfvol; "
        "mount -t nfs -o rw,hard,rsize=262144,wsize=262144,vers=3,tcp 10.30.1.4:/anfvol ~/anfvol; "
        "cp basicdocking.sh ~/anfvol/; "
        "bash ~/anfvol/basicdocking.sh"
    ),
    environment="aml-vina@latest",
    instance_count=2,
    # distribution=MpiDistribution(process_count_per_instance=2),
    compute="cpu-dockingcluster" if cpu_cluster else None,  # No compute needs to be passed to use serverless
    display_name="autodock_basicdocking",
)

ml_client.create_or_update(job)
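The command string above chains its steps with `;`, so later steps still run if, for example, the mount fails. One possible variation, shown here as a sketch rather than the article's tested command, builds essentially the same steps joined with `&&` so the job stops at the first failure. It also runs the script through `bash`, so the copied file does not need the execute bit. The function name and defaults are hypothetical, and the values are the example ones from the command above. They are left unquoted so the shell still expands `~`, which means they should come from trusted input.

```python
DEFAULT_NFS_OPTIONS = "rw,hard,rsize=262144,wsize=262144,vers=3,tcp"


def build_job_command(server_ip: str, export_path: str,
                      mount_point: str = "~/anfvol",
                      script: str = "basicdocking.sh",
                      options: str = DEFAULT_NFS_OPTIONS) -> str:
    """Shell command that mounts the ANF volume and runs the docking script.

    Steps are joined with && so the job fails fast; values are not quoted,
    so `~` in mount_point is expanded by the shell.
    """
    steps = [
        "apt-get -y install nfs-common",
        f"mkdir -p {mount_point}",
        f"mount -t nfs -o {options} {server_ip}:{export_path} {mount_point}",
        f"cp {script} {mount_point}/",
        f"bash {mount_point}/{script}",
    ]
    return " && ".join(steps)


if __name__ == "__main__":
    # The result could be passed as command=... to azure.ai.ml.command()
    print(build_job_command("10.30.1.4", "/anfvol"))
```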

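After the job completes successfully, its log shows results similar to the ones below, and the corresponding poses are saved in 1iep_ligand_vina_out.pdbqt. The best pose has a predicted free energy of binding of approximately -13.22 kcal/mol. Because the Vina search is stochastic (note the random seed in the log), exact values can vary slightly between runs.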

Scoring function : vina
Rigid receptor: 1iep_receptor.pdbqt
Ligand: 1iep_ligand.pdbqt
Grid center: X 15.19 Y 53.903 Z 16.917
Grid size : X 20 Y 20 Z 20
Grid space : 0.375
Exhaustiveness: 32
CPU: 32
Verbosity: 1

Computing Vina grid ... done.
Performing docking (random seed: -835730415) ...
0%   10   20   30   40   50   60   70   80   90   100%
|----|----|----|----|----|----|----|----|----|----|
***************************************************

mode |   affinity | dist from best mode
     | (kcal/mol) | rmsd l.b.| rmsd u.b.
-----+------------+----------+----------
   1       -13.22          0          0
   2       -11.37      1.553      1.987
   3       -11.19      2.983      12.44
   4       -11.04      3.899       12.3
   5       -10.61       2.54      12.63
   6       -9.859      1.836      13.68
   7       -9.639      2.919      12.58
   8         -9.5      2.422      13.49
   9       -9.427      1.625      2.682
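Because the job runs unattended, it can be handy to extract this results table from the job's standard output log, for example a copy downloaded from the job's page in studio. The following minimal sketch matches the four-column result rows shown above with a regular expression. It assumes the log keeps this layout, and the function name is hypothetical.

```python
from __future__ import annotations

import re

# One result row: mode, affinity (kcal/mol), rmsd l.b., rmsd u.b.
ROW = re.compile(
    r"^\s*(\d+)\s+(-?\d+(?:\.\d+)?)\s+(\d+(?:\.\d+)?)\s+(\d+(?:\.\d+)?)\s*$"
)


def parse_vina_log(log_text: str) -> list[tuple[int, float, float, float]]:
    """Return (mode, affinity, rmsd_lb, rmsd_ub) for every result row in the log."""
    rows = []
    for line in log_text.splitlines():
        match = ROW.match(line)
        if match:
            mode, affinity, lb, ub = match.groups()
            rows.append((int(mode), float(affinity), float(lb), float(ub)))
    return rows


# Excerpt of the log shown above; header lines such as "CPU: 32" are not matched.
SAMPLE_LOG = """\
Exhaustiveness: 32
CPU: 32
mode |   affinity | dist from best mode
     | (kcal/mol) | rmsd l.b.| rmsd u.b.
-----+------------+----------+----------
   1       -13.22          0          0
   2       -11.37      1.553      1.987
   9       -9.427      1.625      2.682
"""

if __name__ == "__main__":
    for mode, affinity, lb, ub in parse_vina_log(SAMPLE_LOG):
        print(mode, affinity, lb, ub)
```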

Summary and discussion

- As illustrated in this article, you can use the Azure Machine Learning studio, a web interface that provides a user-friendly and collaborative environment for building and managing your machine learning and HPC projects. You can also use the Azure Machine Learning SDKs and CLI to programmatically interact with the platform and automate your tasks.

- You can use the scalability and flexibility of Azure to run your HPC applications on demand, without investing in costly and complex on-premises infrastructure. You can choose from a range of VM sizes and configurations to fit your needs. Options include HPC-optimized CPU VMs, such as the HB120rs_v3 used in this example, and GPU VMs with NVIDIA or AMD GPUs, many of which offer high-bandwidth InfiniBand interconnect.

- Azure Machine Learning pricing is transparent, which makes costs easier to estimate. There is no additional charge for Azure Machine Learning itself; you pay for the compute you use, plus other Azure resources such as storage. You can pay for compute capacity by the second, with no long-term commitments or upfront payments. You can also save money on select compute services globally with an Azure savings plan for compute. The plan commits you to spend a fixed hourly amount for 1 or 3 years in exchange for lower prices up to that commitment. The Azure pricing calculator lets you estimate the cost of running your HPC applications on Azure Machine Learning.

- Storage options. In our example, we used Azure NetApp Files (ANF) as the persistent storage solution. Azure offers alternative storage options for different requirements in IOPS, throughput, and cost, including Azure Managed Lustre, Azure Files, and Azure Blob Storage. Choose the one that matches your application's demands and budget.
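As a rough illustration of how compute charges add up with the cluster settings used in this article, the sketch below multiplies the number of nodes by the billed hours and an hourly rate. It assumes each node is billed for the job's runtime plus the idle time before scale-down (`idle_time_before_scale_down=180` in the cluster definition). It ignores node provisioning time, storage such as the provisioned ANF capacity, and any discounts. The rate is a placeholder, so look up the real price for your VM size and region in the Azure pricing calculator.

```python
def estimate_compute_cost(nodes: int, job_hours: float, hourly_rate: float,
                          idle_seconds_before_scale_down: int = 180) -> float:
    """Rough pay-as-you-go compute estimate for one job on a cluster with min_instances=0.

    Each node is assumed to be billed for the job runtime plus the idle period
    before the cluster scales it down. hourly_rate is a placeholder, not a price.
    """
    if nodes < 0 or job_hours < 0 or hourly_rate < 0:
        raise ValueError("inputs must be non-negative")
    billed_hours_per_node = job_hours + idle_seconds_before_scale_down / 3600
    return nodes * billed_hours_per_node * hourly_rate


if __name__ == "__main__":
    # Hypothetical: 2 nodes (instance_count=2), a 30-minute job, a made-up rate of 1.0 per hour
    print(round(estimate_compute_cost(2, 0.5, 1.0), 2))
```

The idle term matters most for short jobs, which is one reason to keep `idle_time_before_scale_down` small when jobs are infrequent.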

Please note that Azure Machine Learning is not inherently designed for running HPC applications. Therefore, you may have to modify or rewrite some parts of your code during the migration process. For example, you may need to create a parallel pipeline or manually handle the logic for distributing the workload among nodes.
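As an example of handling that distribution logic yourself, the following sketch splits a list of ligand files round-robin across processes so that each one docks a different subset. It assumes the job runs with an MPI distribution under Open MPI, which sets `OMPI_COMM_WORLD_RANK` and `OMPI_COMM_WORLD_SIZE`; other launchers use different variables. The ligand directory `~/anfvol/ligands` and the function names are hypothetical.

```python
from __future__ import annotations

import os


def shard(items: list[str], rank: int, world_size: int) -> list[str]:
    """Round-robin split: worker `rank` gets items rank, rank + world_size, ..."""
    if world_size < 1 or not 0 <= rank < world_size:
        raise ValueError("need world_size >= 1 and 0 <= rank < world_size")
    return items[rank::world_size]


def rank_and_size() -> tuple[int, int]:
    """Read this process's rank and the total process count (Open MPI assumed)."""
    rank = int(os.environ.get("OMPI_COMM_WORLD_RANK", "0"))
    size = int(os.environ.get("OMPI_COMM_WORLD_SIZE", "1"))
    return rank, size


if __name__ == "__main__":
    ligand_dir = os.path.expanduser("~/anfvol/ligands")  # hypothetical location
    if os.path.isdir(ligand_dir):
        # Sort so every process sees the same order before sharding.
        ligands = sorted(f for f in os.listdir(ligand_dir) if f.endswith(".pdbqt"))
        rank, size = rank_and_size()
        for name in shard(ligands, rank, size):
            # Each worker would run Vina on its own ligands here,
            # for example with subprocess.run([...], check=True).
            print(f"rank {rank} docks {name}")
```

Because the ligands sit on the shared ANF volume, every process reads the same directory listing, and the round-robin split keeps the per-process workload roughly balanced when ligands take similar time to dock.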