From AI Hype to Implementation

For companies that have already adopted Generative AI, identifying suitable use cases remains a considerable challenge: how do we use this technology in real-world scenarios?

Here are some simple yet impactful use cases that demonstrate the power of Generative AI.

- Chat with your data

You can save many hours otherwise spent sifting through documents, such as legal forms and customer reviews, in search of specific pieces of information. By securely uploading these documents as context to the LLM (Large Language Model) and posing the right questions (prompts), you can get specific answers or even a summary of the entire document if needed.

However, not all the information in these documents is relevant to a given question, and passing large amounts of data to an LLM can be costly. To address this, we can use Retrieval-Augmented Generation (RAG), which retrieves only the passages relevant to the question and inserts them into the model's prompt.

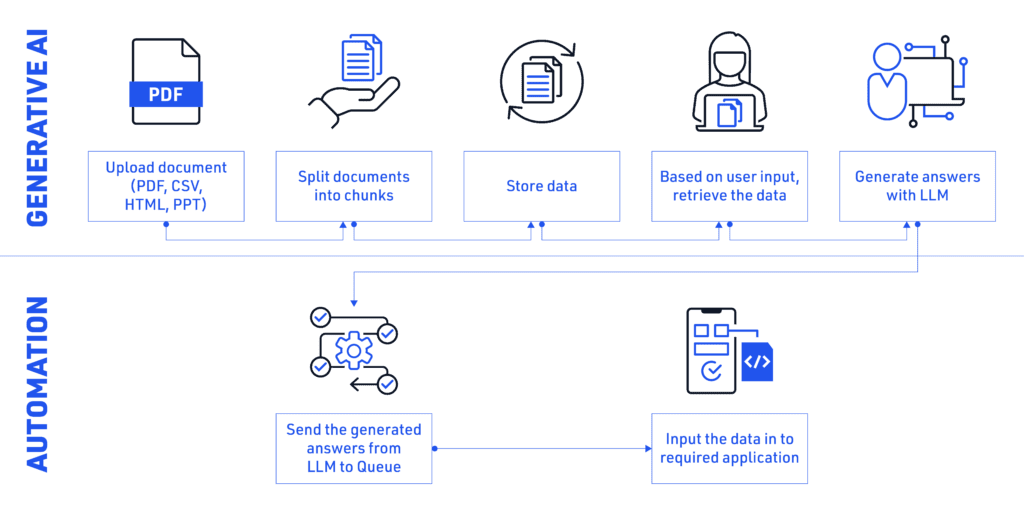

The accompanying illustration shows how process automation and Generative AI can be combined to automate document handling.

In summary:

- Load documents from data sources

- Split documents into manageable chunks

- Embed the chunks and store them in a vector store for efficient similarity search

- Retrieve relevant data from documents based on user questions

- Generate the final answer with the LLM

- Queue the LLM output as required

- Utilize the generated data for further automation
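The retrieval steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the word-count "embedding", the `InMemoryVectorStore` class, and the `echo_llm` placeholder are all assumptions made for the example. A real deployment would use an embedding model, a dedicated vector store, and an actual LLM API in their place.

```python
import math
import re
from collections import Counter


def split_into_chunks(text, max_words=40):
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding (assumption): lowercase word counts instead of a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector store: keeps (vector, chunk) pairs in a list."""

    def __init__(self):
        self.entries = []

    def add(self, chunk):
        self.entries.append((embed(chunk), chunk))

    def search(self, question, top_k=2):
        """Return the top_k chunks most similar to the question."""
        query = embed(question)
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine_similarity(query, entry[0]),
            reverse=True,
        )
        return [chunk for _, chunk in ranked[:top_k]]


def build_prompt(question, chunks):
    """Insert only the retrieved chunks into the prompt, not the whole corpus."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


def answer(question, store, llm, top_k=2):
    """Retrieve relevant chunks, then pass the assembled prompt to the LLM callable."""
    return llm(build_prompt(question, store.search(question, top_k)))


def echo_llm(prompt):
    """Placeholder for a real LLM call; it simply returns the prompt it received."""
    return prompt


documents = [
    "The lease term is twelve months and the monthly rent is 1500 euros.",
    "Customer review: delivery was fast but the packaging was damaged.",
]
store = InMemoryVectorStore()
for document in documents:
    for chunk in split_into_chunks(document):
        store.add(chunk)

print(answer("What is the monthly rent?", store, echo_llm, top_k=1))
```

Because only the best-matching chunk reaches the prompt, the customer review never gets sent to the model for a question about rent, which is where the cost saving comes from.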

- Generate unique test data

An LLM can generate test data, accelerating the work of testers and saving considerable time. By running an LLM locally, we can tailor the model to specific requirements and keep sensitive data within our own secure network.

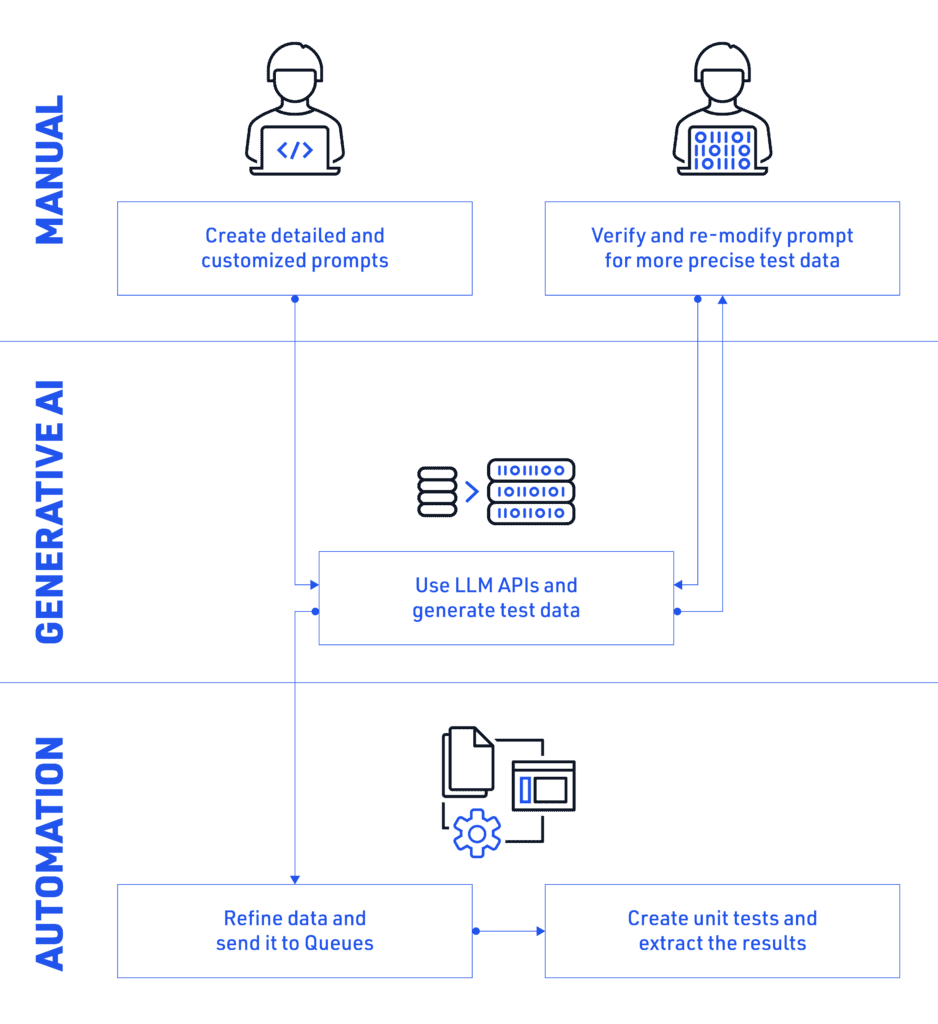

The combination of Generative AI, automation, and human expertise (something we call Human+) produces customized test cases quickly and easily.

Below is a short summary of the use case:

- Create detailed and customized prompts

- Generate test data by passing the prompt to the LLM API

- Refine prompts based on the output test data

- Repeat the generation process until the results are satisfactory

- Create test cases using the test data generated by the LLM
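The generate-and-refine loop summarized above might look like the following sketch. Everything here is illustrative: `fake_llm` is a hypothetical stand-in for a locally hosted model or an LLM API, and the validation rules (valid JSON, exact field names, unique records) are assumptions chosen for the example. In practice a tester would review the output and decide which refinements to add.

```python
import json


def build_prompt(fields, count, extra_rules=()):
    """Create a detailed prompt; extra_rules holds refinements from earlier rounds."""
    lines = [
        f"Generate {count} test records as a JSON array.",
        "Each record must contain exactly these fields: " + ", ".join(fields) + ".",
    ]
    lines.extend(extra_rules)
    return "\n".join(lines)


def validate(raw_output, fields, count):
    """Return a list of corrective instructions; an empty list means the data is acceptable."""
    try:
        records = json.loads(raw_output)
    except json.JSONDecodeError:
        return ["Return valid JSON only, with no surrounding text."]
    if not isinstance(records, list):
        return ["Return a JSON array of objects."]
    problems = []
    if len(records) != count:
        problems.append(f"Return exactly {count} records.")
    if any(not isinstance(r, dict) or set(r) != set(fields) for r in records):
        problems.append("Use exactly the requested field names in every record.")
    unique = {json.dumps(r, sort_keys=True) for r in records if isinstance(r, dict)}
    if len(unique) != len(records):
        problems.append("Every record must be unique.")
    return problems


def generate_test_data(llm, fields, count, max_rounds=3):
    """Generate, check, refine the prompt, and repeat until the output passes."""
    rules = []
    for _ in range(max_rounds):
        output = llm(build_prompt(fields, count, rules))
        problems = validate(output, fields, count)
        if not problems:
            return json.loads(output)
        # Refine the prompt with the problems found in this round.
        rules.extend(p for p in problems if p not in rules)
    raise ValueError(f"No acceptable test data after {max_rounds} rounds")


def fake_llm(prompt):
    """Hypothetical model: returns duplicate records until told to make them unique."""
    if "Every record must be unique." in prompt:
        return '[{"name": "Ada", "age": 36}, {"name": "Lin", "age": 52}]'
    return '[{"name": "Ada", "age": 36}, {"name": "Ada", "age": 36}]'


records = generate_test_data(fake_llm, ["name", "age"], count=2)
print(records)
```

The returned records can then feed the final step, for example as the parameter list of a data-driven test.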