Monitoring locations

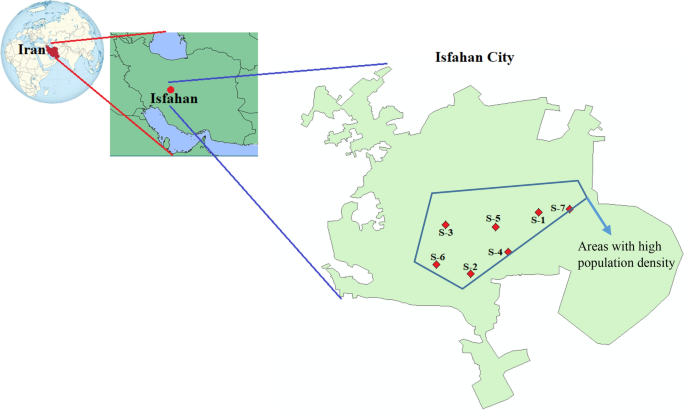

Isfahan, located in central Iran, is one of the country’s most populous and industrialized cities, with approximately 2 million residents and an average population density of 3560 people per km². The city has been grappling with significant air pollution. The geographical location of Isfahan province and the 7 selected monitoring sites are shown in Fig. 1: Site 1 (32.664 N, 51.702 E), Site 2 (32.622 N, 51.660 E), Site 3 (32.656 N, 51.643 E), Site 4 (32.638 N, 51.684 E), Site 5 (32.655 N, 51.675 E), Site 6 (32.629 N, 51.637 E) and Site 7 (32.667 N, 51.720 E). The monitoring stations, which are operated by the Isfahan Department of Environment, are located mainly in the densely populated area of the city. At all of these stations, a real-time beta attenuation continuous mass monitor was used to measure PM2.5 concentrations.

The daily meteorological data corresponding to the 7 stations were collected from the Isfahan Meteorological Administration. Meteorological factors, namely maximum, minimum and average daily air temperature, relative humidity, total rainfall and/or snowmelt, wind direction, and average and maximum sustained wind speed, were used in the calculation and screening processes.

The collected data are summarized in Table 1. Data from January 2011 to December 2019 were collected and analyzed, amounting to a total of 3285 days (9 years). The 24-h average of PM2.5 was calculated through modelling, utilizing daily meteorological data.

To ensure accurate modelling results, potential collinearity among the input variables mentioned above must be addressed. Collinearity occurs when two or more variables are highly correlated, which can adversely affect the model’s performance. To identify and mitigate this issue, the data were entered into SPSS software and a collinearity statistics analysis was conducted, in which the Variance Inflation Factor (VIF) was calculated for each variable. Higher VIF values indicate stronger collinearity between a variable and the others; values exceeding 10 are typically taken to indicate moderate to high collinearity. Based on these findings (Table 1), the Tmax, Tmin, and WM variables were excluded from the model’s input to prevent collinearity from affecting the model’s output.
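The VIF screening described above can be illustrated with a minimal sketch. It is not the SPSS procedure used in the study; it relies on the standard identity that the VIF of each predictor equals the corresponding diagonal element of the inverse of the predictors' correlation matrix. The function names and the toy values are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment the matrix with the identity matrix on the right.
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular correlation matrix (perfect collinearity)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def vif(columns):
    """VIF of each predictor: diagonal of the inverse correlation matrix."""
    k = len(columns)
    corr = [[pearson(columns[i], columns[j]) for j in range(k)] for i in range(k)]
    inverse = invert(corr)
    return [inverse[i][i] for i in range(k)]

# Illustrative values only (not the study's data): two nearly collinear series.
t_mean = [1.0, 2.0, 3.0, 4.0, 5.0]
t_max = [1.1, 1.9, 3.2, 3.9, 5.1]
print(vif([t_mean, t_max]))  # both values far exceed the threshold of 10
```

In this toy case both predictors would be flagged, and one of them would be dropped before modelling.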

Based on the EPA classification of AQI and PM2.5, as well as the range of recorded PM2.5 (11.05–98.10 µg/m³) during the study period15, PM2.5 concentrations were categorized into 4 grades as follows:

1. Good: 0–12 µg/m³ (AQI: 0–50)
2. Moderate: 12.1–35.4 µg/m³ (AQI: 51–100)
3. Unhealthy for sensitive groups: 35.5–55.4 µg/m³ (AQI: 101–150)
4. Unhealthy for all people: 55.5–150.4 µg/m³ (AQI: 151–200)
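The grading can be expressed as a small lookup, sketched below. The function name is hypothetical, and one assumption is added: a concentration that falls in the gap between two listed ranges (for example 12.05 µg/m³) is assigned to the higher grade.

```python
# Upper bounds (µg/m³) of the four grades listed above.
GRADE_UPPER_BOUNDS = [
    (12.0, 1, "Good"),
    (35.4, 2, "Moderate"),
    (55.4, 3, "Unhealthy for sensitive groups"),
    (150.4, 4, "Unhealthy for all people"),
]

def pm25_grade(concentration):
    """Return (grade number, label) for a 24-h PM2.5 concentration in µg/m³."""
    if concentration < 0:
        raise ValueError("concentration cannot be negative")
    for upper, grade, label in GRADE_UPPER_BOUNDS:
        # Assumption: values between two listed ranges go to the higher grade.
        if concentration <= upper:
            return grade, label
    raise ValueError("concentration above the range covered by the four grades")

# The extremes recorded during the study period fall in grades 1 and 4.
print(pm25_grade(11.05), pm25_grade(98.10))
```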

According to the received data, wind direction was recorded as one of 8 compass directions: North, South, East, West, North East, North West, South East and South West.

Modelling techniques

In this study, four artificial intelligence models were compared to identify the optimal model for predicting the concentration ranges of PM2.5 from the meteorological conditions. These models were the Artificial Neural Network (ANN), the Random Forest (RF) ensemble of classification trees, the Support Vector Machine (SVM) and k-nearest Neighbor Classification (KNN).

SVM

The Support Vector Machine (SVM) is a supervised machine learning algorithm that can be employed for classification problems. The algorithm treats each data item as a point in an n-dimensional space, where n is the number of features. Trained on labelled data, it constructs a hyperplane that is then used to classify new instances. The data points nearest to the hyperplane, which determine its position, are referred to as support vectors. SVM constructs the hyperplane with the maximum margin, that is, the greatest separation between data points of different classes16. The effectiveness of SVMs for classification relies heavily on their parameter settings, particularly the penalty factor C and the kernel parameter σ². Typically, these parameters are tuned on the basis of empirical knowledge and experience3.
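For reference, the two parameters named above enter the standard soft-margin formulation as follows. This assumes a Gaussian (RBF) kernel, which the σ² notation suggests but the text does not state explicitly. The symbols w, b, ξ and φ are notation introduced here for illustration.

$$\underset{w,b,\xi }{\mathrm{min}}\ \frac{1}{2}{\Vert w\Vert }^{2}+C\sum_{i=1}^{n}{\xi }_{i}\quad \text{subject to}\quad {y}_{i}\left({w}^{T}\phi ({x}_{i})+b\right)\ge 1-{\xi }_{i},\ {\xi }_{i}\ge 0$$

$$K({x}_{i},{x}_{j})=\mathrm{exp}\left(-\frac{{\Vert {x}_{i}-{x}_{j}\Vert }^{2}}{2{\sigma }^{2}}\right)$$

A larger C penalizes margin violations more heavily, and a smaller σ² makes the decision boundary more local to individual training points.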

RF

A decision tree is a straightforward model used to classify examples, and learning it is considered one of the most effective approaches for supervised classification learning. Ensemble methods are utilized to enhance predictive performance by combining multiple decision trees. The fundamental concept behind ensemble models is that a collection of weaker learners can collectively create a stronger learner. Bagging and boosting are commonly employed techniques for implementing ensemble decision trees17.
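The idea that many weak learners combine into a stronger one can be sketched with bagging alone. The example below trains one-split trees (stumps) on bootstrap samples of a single feature and combines them by majority vote. It is a toy illustration, not the ensemble configuration used in this study. A full random forest additionally samples features at each split. All names and data are hypothetical.

```python
import random
from collections import Counter

def majority(labels):
    """Most frequent label in a non-empty list."""
    return Counter(labels).most_common(1)[0][0]

def fit_stump(xs, ys):
    """Fit a one-split tree on one feature: (threshold, left_label, right_label)."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_label = majority(left)
        # If nothing lies right of the threshold, reuse the left label.
        right_label = majority(right) if right else left_label
        errors = sum(y != (left_label if x <= t else right_label)
                     for x, y in zip(xs, ys))
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    return best[1:]

def fit_bagged_stumps(xs, ys, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return stumps

def predict(stumps, x):
    """Majority vote over the individual stump predictions."""
    votes = [left if x <= t else right for t, left, right in stumps]
    return majority(votes)

# Hypothetical one-feature data with two well separated classes.
xs = [1, 2, 3, 4, 10, 11, 12, 13]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
stumps = fit_bagged_stumps(xs, ys)
print(predict(stumps, 0), predict(stumps, 20))
```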

KNN

The k-nearest neighbours (KNN) algorithm is a simple and practical supervised machine learning technique that can address both classification and regression tasks, and it is primarily used in pattern recognition and regression problems. Its underlying principle is the assumption that similar data points lie close to each other in the feature space. To determine an appropriate value of K, the number of neighbouring data points, the algorithm is run multiple times with different values of K. The aim is to find the value of K that minimizes the number of errors while preserving the algorithm’s ability to predict accurately on new, unseen data18.
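A minimal sketch of this procedure follows. It assumes Euclidean distance and unweighted majority voting, and it selects K by counting errors on a separate validation set. The function names and the toy points are hypothetical and are not the study's data or tuned settings.

```python
import math
from collections import Counter

def knn_predict(train_x, train_y, query, k):
    """Label of `query` by majority vote among its k nearest training points."""
    order = sorted(range(len(train_x)),
                   key=lambda i: math.dist(train_x[i], query))
    nearest = [train_y[i] for i in order[:k]]
    return Counter(nearest).most_common(1)[0][0]

def error_count(train_x, train_y, val_x, val_y, k):
    """Number of validation points misclassified for a given k."""
    return sum(knn_predict(train_x, train_y, x, k) != y
               for x, y in zip(val_x, val_y))

def choose_k(train_x, train_y, val_x, val_y, candidates):
    """Candidate k with the fewest validation errors (ties: first listed)."""
    return min(candidates,
               key=lambda k: error_count(train_x, train_y, val_x, val_y, k))

# Hypothetical two-feature points labelled with PM2.5 grades 1 and 3.
train_x = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = [1, 1, 1, 3, 3, 3]
val_x = [(0.2, 0.2), (5.2, 5.2)]
val_y = [1, 3]

best_k = choose_k(train_x, train_y, val_x, val_y, candidates=[1, 3, 5])
print(best_k, knn_predict(train_x, train_y, (0.5, 0.5), best_k))
```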

ANN

The concept of an artificial neural network involves programming computers to mimic the interconnected behaviour of brain cells. Here, the multilayer perceptron (MLP), a class of feedforward artificial neural networks, was applied. The MLP architecture is composed of three layers. The input layer accepts inputs in various formats given by the programmer. The hidden layer lies between the input and output layers and carries out calculations to identify concealed features and patterns. The output layer takes the values computed by the hidden layer and produces the network’s final output19.

Modelling for PM2.5 prediction

The objective of this study was to assess the PM2.5 pollution level. While the RF, SVM, and KNN models address this task directly as a classification problem, the ANN model takes a different approach: it first predicts the concentration of PM2.5 and subsequently determines the corresponding pollution level based on the criteria outlined in section “Monitoring locations”. The ANN, RF, SVM, and KNN models were implemented in MATLAB R2020b (version 9.9.0.1467703). For the models developed in this study, the input data included the following parameters: T, Tmax, Tmin, RH, PP, WS, VM, WD, Day (day of the month, a number between 1 and 30), month (a number between 1 and 12), Year (between 2011 and 2019), and station (between 1 and 7). In the SVM, RF and KNN models, PM2.5 grades were introduced to the model as the output variable. In the ANN model, the concentration of PM2.5 was given to the model as a continuous numerical output variable.

The ANN model was constructed in MATLAB using the Neural Net Toolbox (nntool). To forecast PM2.5 concentrations, a multilayer feed-forward backpropagation network was employed. The hidden layer used a tangent sigmoid activation function, while the output layer used a pure linear activation function. The number of neurons in the hidden layer greatly affects the performance of the ANN, so equations from previous studies were used to calculate the ideal number of hidden neurons20,21. A total of 2299 records (70%) were then randomly selected for training the ANN model, 493 records (15%) were used for validation, and another 493 records (15%) were reserved for testing. The Levenberg–Marquardt backpropagation training algorithm (trainlm) was used to train the ANN. Once the optimal ANN architecture had been designed, the model was used to estimate the concentration of PM2.5 in the test dataset. The predicted output was then assigned to the grades defined in this study so that the performance of the ANN model could be compared with that of the classification models.
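The forward pass of such a network can be sketched as below. This is a Python illustration rather than the MATLAB implementation, the weights are untrained placeholders, and Levenberg–Marquardt training is not shown. The tangent sigmoid is mathematically identical to the hyperbolic tangent.

```python
import math

def tansig(x):
    """Tangent sigmoid activation; mathematically identical to tanh(x)."""
    return math.tanh(x)

def mlp_forward(inputs, w_hidden, b_hidden, w_out, b_out):
    """One forward pass: tansig hidden layer, then one linear output neuron.

    w_hidden holds one weight list per hidden neuron; w_out holds one weight
    per hidden neuron.
    """
    hidden = [tansig(sum(w * x for w, x in zip(weights, inputs)) + b)
              for weights, b in zip(w_hidden, b_hidden)]
    # Pure linear output: weighted sum of hidden activations plus a bias.
    return sum(w * h for w, h in zip(w_out, hidden)) + b_out

# Hypothetical placeholder weights for 2 scaled inputs and 2 hidden neurons.
w_hidden = [[0.5, -0.3], [0.1, 0.8]]
b_hidden = [0.0, -0.2]
w_out = [10.0, 5.0]
b_out = 30.0

predicted_pm25 = mlp_forward([0.4, 0.7], w_hidden, b_hidden, w_out, b_out)
print(predicted_pm25)
```

The continuous output would then be mapped to one of the four grades before comparison with the classifiers.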

The MATLAB software’s classification learner app is designed to train models for data classification. This app includes various high-performing classification model types such as nearest neighbours, discriminant analysis, decision trees, logistic regression, support vector machines, naive Bayes, ensemble classification, and kernel approximation22. After conducting a preliminary evaluation, three classification algorithms namely SVM, RF, and KNN were chosen from various algorithms available in the toolbox. The performance of these classifiers was assessed for predicting PM2.5 grades in this research.

The optimal SVM model structure was determined by optimizing kernel function, box constraint level, kernel scale, and multiclass method parameters. Also, to determine the best KNN model, the number of neighbours, distance metric and distance weight parameters were optimized. The parameters of the RF model, such as the ensemble method, maximum number of splits, number of learners, and learning rate, were optimized. Parameter tuning was conducted by stepwise optimization.

To prevent overfitting during the implementation of the SVM, KNN, and RF models, holdout validation was used. First, 15% of the original dataset was set aside as a test dataset. Holdout validation was then applied: 15% of the dataset was allocated for validation and the remaining portion was used for training. Holdout validation divides the input data into two complementary sets, a training set and a validation set, and is typically used for large datasets23. This helps ensure that the models’ measured performance is not biased by the training data. The trained classifier models were then extracted and evaluated on the test dataset.
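The partition can be sketched as follows. It assumes that both 15% fractions are taken of the full dataset, which is consistent with the 2299/493/493 split reported for the ANN. The function name and the random seed are hypothetical.

```python
import random

def holdout_split(n_records, test_frac=0.15, val_frac=0.15, seed=0):
    """Shuffle record indices and split them into train/validation/test lists."""
    indices = list(range(n_records))
    random.Random(seed).shuffle(indices)
    # Assumption: both fractions refer to the full dataset size.
    n_test = round(n_records * test_frac)
    n_val = round(n_records * val_frac)
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]
    return train, val, test

train, val, test = holdout_split(3285)
print(len(train), len(val), len(test))  # 2299 493 493
```

The three index lists are disjoint, so no record used for training also appears in the validation or test sets.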

Confusion matrix, also known as an error matrix, and cross-entropy loss, also referred to as log loss, are used in machine learning to evaluate the effectiveness of classification models. In this particular study, both of these techniques were utilized to assess the performance of an ANN for classification purposes. A confusion matrix is a summary table that shows how well the model has performed in predicting samples of different classes. When optimizing classification models and generally calculating the difference between the predicted and actual responses, cross-entropy can be used as a loss function20. After analyzing the confusion matrix, various performance parameters, including sensitivity, specificity, F1 score, accuracy, precision, and the area under the curve (AUC), are calculated to assess the models’ performance.

Accuracy is defined as the proportion of correct predictions out of all the predictions made. Sensitivity, also known as the true positive rate, is the proportion of correctly predicted positive instances out of the total number of actual positive instances. Specificity, also known as the true negative rate, is the proportion of correctly predicted negative instances out of the total number of actual negative instances. Precision, also known as positive predictive value, is the proportion of correctly predicted positive instances out of the total number of instances that were predicted as positive. The F1 score is the harmonic mean of sensitivity and precision. The receiver operating characteristic (ROC) curve is a graphical representation of a model’s performance that plots the true positive rate against the false positive rate across classification thresholds. The area under the curve (AUC) quantifies the separability or discriminative power of the model in distinguishing between different classes24. Equations (1–5) can be utilized to compute these parameters25.

$$Sensitivity=\frac{TP}{TP+FN}$$

(1)

$$Specificity=\frac{TN}{FP+TN}$$

(2)

$$Precision=\frac{TP}{TP+FP}$$

(3)

$$Accuracy=\frac{TP+TN}{TP+TN+FP+FN}$$

(4)

$$F1\ Score=2\times \frac{Sensitivity\times Precision}{Sensitivity+Precision}$$

(5)

Here, TP, TN, FP, and FN are True Positive, True Negative, False Positive, and False Negative, respectively20,26.
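For a multiclass problem such as the four PM2.5 grades, Eqs. (1–5) are commonly applied per class in a one-vs-rest fashion. The sketch below assumes that convention and assumes that rows of the confusion matrix are actual classes and columns are predicted classes. The matrix values are invented for illustration.

```python
def one_vs_rest_metrics(confusion, k):
    """Eqs. (1)-(5) for class index k of a square confusion matrix.

    confusion[i][j] counts samples of actual class i predicted as class j.
    Raises ZeroDivisionError if class k has no actual or predicted samples.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp                        # actual k, predicted other
    fp = sum(confusion[i][k] for i in range(n)) - tp   # actual other, predicted k
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn)
    specificity = tn / (fp + tn)
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / total
    f1 = 2 * sensitivity * precision / (sensitivity + precision)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "accuracy": accuracy, "f1": f1}

# Invented 3-class confusion matrix (rows: actual, columns: predicted).
confusion = [
    [8, 2, 0],
    [1, 7, 2],
    [0, 1, 9],
]
print(one_vs_rest_metrics(confusion, 0))
```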

The parameters used to evaluate the performance of ANN were the correlation coefficient (R) and the mean squared error (MSE), as shown in Eqs. (6) and (7)27,28.

$$R=\sqrt{1-\frac{\sum_{i=1}^{n}{({Y}_{p}-{Y}_{e})}^{2}}{\sum_{i=1}^{n}{({Y}_{p}-{\overline{Y} }_{e})}^{2}} }$$

(6)

$$MSE=\frac{1}{n}\sum_{i=1}^{n}{({Y}_{e}-{Y}_{p})}^{2}$$

(7)

where n is the number of experimental data, and \({Y}_{p}\), \({Y}_{e}\), and \({\overline{Y} }_{e}\) represent the predicted value, the experimental value, and the average of experimental data for the output variable, respectively.
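A minimal sketch of both measures follows. It reads Eq. (6) as the square root of one minus the ratio of the two sums, with the denominator taken as printed (predicted values minus the mean of the experimental values). Clipping the radicand at zero is an added safeguard, not part of the equation. The function names and sample values are hypothetical.

```python
import math

def mse(y_exp, y_pred):
    """Mean squared error between experimental and predicted values, Eq. (7)."""
    n = len(y_exp)
    return sum((e - p) ** 2 for e, p in zip(y_exp, y_pred)) / n

def correlation_r(y_exp, y_pred):
    """R as read from Eq. (6); undefined if every prediction equals the mean."""
    mean_exp = sum(y_exp) / len(y_exp)
    residual = sum((p - e) ** 2 for e, p in zip(y_exp, y_pred))
    spread = sum((p - mean_exp) ** 2 for p in y_pred)
    # Added safeguard: avoid taking the square root of a negative number.
    return math.sqrt(max(0.0, 1.0 - residual / spread))

# Invented PM2.5 values (µg/m³) for illustration only.
y_exp = [10.0, 20.0, 30.0]
y_pred = [12.0, 20.0, 28.0]
print(mse(y_exp, y_pred), correlation_r(y_exp, y_pred))
```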

As mentioned earlier, the output predicted by the ANN model was graded according to the stratification defined in this study, so that its performance could be compared with that of the classification models. The confusion matrix of the neural network was then determined. After the best model had been determined, meteorological data for 2020 were obtained for external validation and introduced to the optimal model, and PM2.5 was predicted for the monitoring stations for each month of 2020. Finally, the 2020 data were entered into the ArcGIS 10.8 Version 10.7.0.10450 software to create a pollution map for each month of the year.
