The highest ratings went to AI services for education, such as Ello, which uses speech recognition to act as a reading tutor, and Khanmigo, Khan Academy’s chatbot helper for students. Khanmigo lets parents monitor a child’s interactions and sends a notification if content moderation algorithms detect an exchange that violates community guidelines. The report credits ChatGPT’s creator OpenAI with making the chatbot less likely to generate text potentially harmful to children than when it was first released last year, and recommends its use by educators and older students.

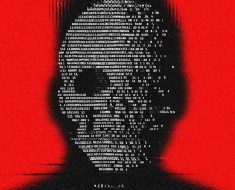

Alongside Snapchat’s My AI, the image generators Dall-E 2 from OpenAI and Stable Diffusion from startup Stability AI also scored poorly. Common Sense’s reviewers warned that generated images can reinforce stereotypes, spread deepfakes, and often depict women and girls in hypersexualized ways.

When Dall-E 2 is asked to generate photorealistic imagery of wealthy people of color, it creates cartoons, low-quality images, or imagery associated with poverty, Common Sense’s reviewers found. Their report warns that Stable Diffusion poses “unfathomable” risk to children and concludes that image generators have the power to “erode trust to the point where democracy or civic institutions are unable to function.”

“I think we all suffer when democracy is eroded, but young people are the biggest losers, because they’re going to inherit the political system and democracy that we have,” Common Sense CEO Jim Steyer says. The nonprofit plans to carry out thousands of AI reviews in the coming months and years.

Common Sense Media released its ratings and reviews shortly after state attorneys general filed suit against Meta alleging that it endangers kids, and at a time when parents and teachers are just beginning to consider the role of generative AI in education. President Joe Biden’s executive order on AI, issued last month, requires the secretary of education to issue guidance on the use of the technology in education within the next year.

Susan Mongrain-Nock, a mother of two in San Diego, knows her 15-year-old daughter Lily uses Snapchat and has concerns about her seeing harmful content. She has tried to build trust by talking with her daughter about what she sees on Snapchat and TikTok but says she knows little about how artificial intelligence works, and she welcomes new resources.