Large language models (LLMs) are advanced programs that enable machines to understand and generate text in human language. LLMs now sit at the core of natural language processing (NLP). They are built on the transformer, a complex neural network architecture; neural networks in general are loosely inspired by the human brain. Because they are trained on enormous text corpora, LLMs can process vast amounts of data efficiently. In short, large language models combine NLP with advanced machine learning (ML) techniques.

An LLM is trained on massive amounts of text so that it can generate coherent output, whether it is answering a question or writing a story. In simple terms, a large language model is an advanced generative AI model built to analyze and produce output that resembles human language. LLMs can perform tasks such as text generation and sentiment analysis efficiently, and they can be paired with speech recognition systems for speech-to-text applications.

LLMs build on the Transformer architecture. Its self-attention mechanism lets the model capture the complex relationships between words and subwords, so it can take in the grammar, semantics, and context of text data. Since the launch of ChatGPT, demand for LLMs has grown rapidly.
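To make the self-attention idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It assumes the query, key, and value vectors are given directly; in a real Transformer they come from learned linear projections of the token embeddings, and many attention heads run in parallel. The function names are illustrative, not taken from any library.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Each argument is a list of vectors (one per token) of dimension d.
    Returns one output vector per query token.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this token's query matches every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy "token" vectors used as queries, keys, and values at once.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Each output row is a blend of every token's vector, weighted by how related the tokens are, which is how context from the whole sentence flows into each word's representation.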

Large organizations, small businesses, and open-source communities are all competing to build the most advanced large language models. To generate coherent, context-aware output, these models use a layered approach: information passes through many stacked layers, each refining the representation produced by the one before it. Let us look at the top 10 large language models that have gained popularity for their outstanding capabilities.

1. GPT-4

GPT-4 is OpenAI's latest generative AI model. OpenAI has not disclosed its architecture, but unconfirmed reports suggest it combines eight expert models of about 220 billion parameters each, roughly 1.76 trillion parameters in total. GPT-4 can handle complex reasoning and a wide range of tasks with ease, and some researchers have argued that it shows early signs of artificial general intelligence. Besides text, it accepts images as input. Another essential feature of this model is the “system message”, which lets users specify the tone of the responses and the type of task they want performed.
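In OpenAI's Chat Completions API, the “system message” is a message with the role `system` placed before the user's message. The sketch below only builds the JSON request body and does not call the API; the helper name `build_chat_request` and the example prompts are illustrative assumptions. Actually sending it would require a POST to `https://api.openai.com/v1/chat/completions` with an API key.

```python
import json

def build_chat_request(system_prompt, user_prompt, model="gpt-4"):
    """Build a Chat Completions request body (hypothetical helper).

    The system message sets the tone and task; the user message carries the query.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }

payload = build_chat_request(
    "You are a concise technical writer. Answer in three bullet points.",
    "Explain what a transformer model is.",
)
print(json.dumps(payload, indent=2))
```

Changing only the system prompt (for example, to "Answer as a friendly tutor") changes the style of the reply without altering the user's question.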

2. PaLM 2

PaLM 2 is a large language model developed by Google. According to Google, it rivals GPT-4 on several reasoning benchmarks and performs strongly at multilingual tasks and code generation. The model is trained on large datasets and is used for inference and for research in AI, NLP, and machine learning. PaLM 2 can generate code in many programming languages, such as Python, Java, and C++. Another notable feature of this LLM is how quickly it responds to user queries.

3. GPT-3.5

GPT-3.5 is another large language model from OpenAI that generates responses within seconds. It responds promptly to almost any request, from writing an essay to suggesting ways to make money with ChatGPT. A major advantage is that it is free to use through ChatGPT. As a cloud-based service, it scales to meet users' needs regardless of their size. Beyond generating human-like text, GPT-3.5 can be applied to analytical tasks such as identifying behavioral patterns in text data.

4. Cohere

Cohere, the company behind the Command models, offers a robust family of generative AI models known for their high accuracy. The models are designed for enterprise generative AI and are trained on extensive text and code datasets. They are also used for research in AI, NLP, and machine learning. Cohere is a strong choice for businesses seeking task automation and better customer service.

5. XLNet

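XLNet uses a permutation-based training objective, together with the segment recurrence of its Transformer-XL backbone, to capture dependencies beyond the limits of a fixed context window. It handles linguistically complex text well and learns how sentences are structured. Instead of always predicting words left to right, XLNet is trained on randomly sampled factorization orders, that is, permutations of the order in which tokens are predicted, while the sentence itself keeps its original word order. Across many such orders, each token learns to use context from both sides, which helps the model on tasks with long-range dependencies.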
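The effect of sampling a factorization order can be illustrated with a small sketch: for a given permutation, each token is predicted only from the tokens that come before it in the permutation, and those tokens may lie on either side of it in the sentence. This simplified sketch leaves out XLNet's two-stream attention and its practice of predicting only the last tokens of each permutation; the function name is hypothetical.

```python
import random

def permutation_contexts(tokens, order):
    """For one factorization order, map each position to the tokens it may see.

    `order` is a permutation of positions. The token at order[i] is predicted
    from the tokens at order[0..i-1], wherever they sit in the sentence.
    """
    contexts = {}
    for i, pos in enumerate(order):
        visible = sorted(order[:i])  # positions earlier in the permutation
        contexts[pos] = [tokens[p] for p in visible]
    return contexts

tokens = ["New", "York", "is", "a", "city"]
order = list(range(len(tokens)))
random.shuffle(order)  # a new order is sampled for each training sequence
for pos, ctx in sorted(permutation_contexts(tokens, order).items()):
    print(tokens[pos], "<-", ctx)
```

For the order `[2, 0, 1]` over `["a", "b", "c"]`, `"c"` is predicted with no context, `"a"` from `"c"`, and `"b"` from both `"a"` and `"c"`, so the middle word sees context on both sides.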

6. Falcon

Falcon is an open-source language model family from the Technology Innovation Institute (TII), with three variants: Falcon 40B, Falcon 7B, and Falcon 1B. Falcon 40B ranked first on the Hugging Face Open LLM Leaderboard. Trained on high-quality data, Falcon is an autoregressive model that covers a range of languages. Because it is based on the Transformer architecture, it can process large amounts of data efficiently and make accurate predictions. Its weights are available free of charge on Hugging Face.
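“Autoregressive” means the model produces text one token at a time, feeding each generated token back in as input for the next step. The toy sketch below shows that loop with greedy decoding. It assumes a hypothetical hand-written table of next-token probabilities that looks only at the previous token; Falcon instead computes these probabilities with a Transformer over the entire prefix.

```python
# Hypothetical next-token probabilities: P(next | previous token).
BIGRAMS = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"falcon": 0.7, "model": 0.3},
    "a": {"model": 1.0},
    "falcon": {"flies": 0.8, "</s>": 0.2},
    "model": {"</s>": 1.0},
    "flies": {"</s>": 1.0},
}

def generate_greedy(table, start="<s>", max_tokens=10):
    """Autoregressive decoding: each new token is chosen from a distribution
    conditioned on what has been generated so far (here, only the last token)."""
    sequence = [start]
    for _ in range(max_tokens):
        next_probs = table[sequence[-1]]
        next_token = max(next_probs, key=next_probs.get)  # greedy: most likely token
        if next_token == "</s>":  # end-of-sequence marker stops generation
            break
        sequence.append(next_token)
    return sequence[1:]  # drop the start marker

print(" ".join(generate_greedy(BIGRAMS)))
```

With this table the loop produces `the falcon flies`. Real systems often sample from the distribution instead of always taking the most likely token, which makes the output more varied.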

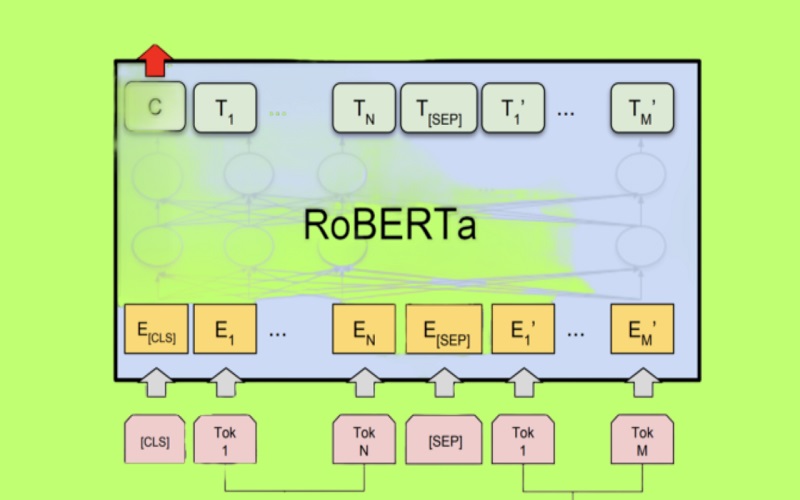

7. RoBERTa

RoBERTa (A Robustly Optimized BERT Pretraining Approach) is a large language model pre-trained on large raw datasets without any human labeling; it generates inputs and labels automatically from a wide range of public text. Unlike GPT-style models, it is not trained to predict the next word from the previous words. Instead, RoBERTa uses masked language modeling: a few words in a sentence are masked, the entire masked sentence is run through the model, and the model must identify the masked words using the context on both sides.
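Masked language modeling can be sketched as follows. The function follows the masking recipe from the BERT paper, which RoBERTa reuses: about 15% of tokens are selected, and of those, 80% become a mask token, 10% become a random token, and 10% stay unchanged. RoBERTa also uses dynamic masking, drawing a fresh mask each time a sequence is seen, which here corresponds to calling the function with a different seed. The `<mask>` string and the function name are illustrative; real implementations operate on subword token IDs.

```python
import random

MASK = "<mask>"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """BERT/RoBERTa-style masking (illustrative sketch).

    Returns (inputs, labels): labels holds the original token at selected
    positions and None elsewhere, so only selected positions count in the loss.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must recover this token
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)  # 80%: replace with the mask token
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: random token
            else:
                inputs.append(tok)  # 10%: keep the original token
        else:
            inputs.append(tok)
            labels.append(None)
    return inputs, labels

sentence = "the model predicts the hidden words in a sentence".split()
for seed in (1, 2):  # dynamic masking: a different mask on each pass
    print(mask_tokens(sentence, vocab=sentence, seed=seed)[0])
```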

8. Guanaco-65B

Derived from Meta’s Llama, Guanaco-65B is an open-source model fine-tuned on the OASST1 dataset. It can generate text quickly with modest computational resources. The model has 65 billion parameters and was fine-tuned on a single GPU with 48 GB of VRAM using the QLoRA method. Another attractive feature is that it can run offline, which makes it appealing to businesses.
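A back-of-the-envelope calculation shows why a 65-billion-parameter model can be fine-tuned on one 48 GB GPU. Guanaco was trained with QLoRA, which stores the frozen base weights in 4-bit precision. The sketch counts weight memory only and ignores activations, the small LoRA adapters, optimizer state, and quantization constants, so real usage is higher; `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

params = 65e9  # Guanaco-65B
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(params, bits):6.1f} GB")
```

This prints 130.0 GB for 16-bit weights, 65.0 GB for 8-bit, and 32.5 GB for 4-bit; only the 4-bit figure leaves headroom on a 48 GB card.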

9. UniLM

The Unified Language Model (UniLM), from Microsoft, uses a single shared Transformer network for both language understanding and language generation. It is pre-trained on three kinds of language modeling (unidirectional, bidirectional, and sequence-to-sequence) and switches between them by applying different self-attention masks that control which words each token can see. As a result, one model can be applied to tasks such as question answering, abstractive summarization, and question generation. Microsoft's broader UniLM research line has since extended this pre-training approach to multimodal models that combine text with images.
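UniLM's key idea, switching between language modeling objectives by changing the self-attention mask, can be sketched by building the three masks for a short input. In this sketch, 1 means a token may attend to a position and 0 means it may not. The function name and the source/target split are illustrative assumptions, not code from the UniLM paper.

```python
def unilm_mask(src_len, tgt_len, mode):
    """Return a square attention mask over src_len + tgt_len tokens (1 = may attend).

    mode: "bidirectional": every token sees every token.
          "left_to_right": each token sees itself and earlier tokens.
          "seq2seq": source tokens see the whole source; target tokens see
                     the whole source plus target tokens up to themselves.
    """
    n = src_len + tgt_len
    mask = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if mode == "bidirectional":
                allowed = True
            elif mode == "left_to_right":
                allowed = j <= i
            elif mode == "seq2seq":
                allowed = j < src_len if i < src_len else (j < src_len or j <= i)
            else:
                raise ValueError(f"unknown mode: {mode}")
            mask[i][j] = int(allowed)
    return mask

for row in unilm_mask(2, 2, "seq2seq"):
    print(row)
```

For two source and two target tokens, the sequence-to-sequence mask rows are `[1, 1, 0, 0]`, `[1, 1, 0, 0]`, `[1, 1, 1, 0]`, and `[1, 1, 1, 1]`: source tokens see only the source, while each target token sees the source plus the target tokens up to itself.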

10. T5: Text-to-Text Transfer Transformer

T5 is a versatile large language model that frames every task as a text-to-text problem. It captures language patterns and semantics accurately. A single T5 model can handle many tasks, such as translation, summarization, and classification, while maintaining strong performance; a short task prefix in the input tells the model which task to perform. For each task, the model receives text as input and is trained to produce the desired output text.
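The text-to-text approach amounts to turning every task into an input string with a short task prefix and an output string. The prefixes below (`translate English to German:`, `summarize:`, `cola sentence:`) follow examples given in the T5 paper; the helper function is a hypothetical sketch that only formats data and does not run the model.

```python
def to_text_to_text(task, text):
    """Format an example as a single prefixed input string, in the style of T5.

    The target the model learns to produce is also plain text.
    """
    prefixes = {
        "translate_en_de": "translate English to German: ",
        "summarize": "summarize: ",
        "cola": "cola sentence: ",  # grammatical acceptability classification
    }
    if task not in prefixes:
        raise ValueError(f"unsupported task: {task}")
    return prefixes[task] + text

examples = [
    (to_text_to_text("translate_en_de", "That is good."), "Das ist gut."),
    (to_text_to_text("cola", "The course is jumping well."), "not acceptable"),
]
for source, target in examples:
    print(f"{source!r} -> {target!r}")
```

Because both the translation and the classification example share the same string-in, string-out format, one model and one training objective can cover them all.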