00:11 Stephen Hibbs: Welcome to this episode of the HemaSphere podcast. My name is Stephen Hibbs and I’m a hematologist and clinical research fellow, based at Queen Mary University of London, and I am one of the scientific editors for HemaSphere.

So today I am joined by Adrian and Juan Carlos, who are both hematologists based in Spain. They’ve recently published a HemaSphere paper titled: Machine Learning Improves Risk Stratification in Myelofibrosis: An Analysis of the Spanish Registry of Myelofibrosis.

I’m really glad today to have them join us; both to hear specifically about this study, but also to think about machine learning more widely and its potential to change practice.

So my first question is to Adrian. I’d like to ask you: How did you first come across the method of machine learning?

00:56 Adrian: Well, this is quite an interesting question. As a clinician that’s also trained in diagnostics and bioinformatics, I had quite a good idea about the need for improved prognostication based on further parametrization of the data of patients. So, eventually the solution came out of artificial intelligence.

So when you are trying to think about developing complex models that can accommodate complex data for many patients into a single score, then you need some robust statistical technique.

This can be a traditional regression model such as Cox, but also others based on machine learning, such as this one which is based on Random Forest.

So it is interesting. And probably, the nice part of this is that you can pick up large heterogeneity in patient evolution over time; and use that to create a single prognostic score that is quantitative. It’s not a prognostic score based on different groups. And therefore, the precision is higher, and the uncertainty of the predictions is lower than with the traditional scoring methods.

02:20 Stephen Hibbs: For those of us who are kind of new to this idea of machine learning… And I’ve got to admit, it’s something that (when I’ve tried to read about it in the past) has often seemed quite confusing. Would you be able to kind of just describe, in simple terms, what machine learning is; and perhaps give an example of where it’s being applied in a completely different medical context?

02:41 Adrian: Oh yes, sure. So there is a broad field of artificial intelligence. This is quite interesting, because AI specialists don’t like the term “artificial intelligence”. So we have to start from the point that even these experts don’t consider this as a clear field, because there is no clear definition of what intelligence is.

So, assuming that artificial intelligence is all these devices that simulate human cognitive activity, such as learning and problem solving, then we have several subfields within artificial intelligence. And the broadest one is machine learning. This has become quite common recently, because (in machine learning) you have as many applications as your imagination allows.

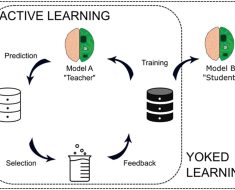

And the idea is to train predictors to identify some hidden layers, or hidden variables (sorry) in your data – this is unsupervised machine learning – or to be capable of predicting some class, or some variable that you know about, such as [indecipherable: cervical/Serviços].

This is what we did, actually, in this paper. It was a bit interesting, in machine learning, and this has been even broadened by the implementation of ChatGPT. So ChatGPT; the GPT is “Generative Pre-trained Transformer”. This is a complex assistant that is based on machine learning, but also traditional mathematics. This has led to the — in machine learning. But this is probably more of a very different field, because ChatGPT has been developed to simulate human language and human conversation.

However, in our field we were working on predicting patient outcomes better, to better risk stratify patients and to better predict, in some cases, differential drug response in different onco-hematological tumors.

So the field is quite different, actually.

When one thinks about artificial intelligence, you have to keep in mind that there are very different domains of applicability; and this requires not just a lot of knowledge about the technique, but also a lot of knowledge about the field you want to apply it to. It would not make much sense to do this work, for example, without the involvement of experts in myelofibrosis such as Juan Carlos, who is here with us.

05:40 Stephen Hibbs: So just so I check I’ve understood… So in simple terms, comparing, I guess, traditional development in a prognostic score would be humans trying out all sorts of different regression models to say “Let’s put in this, and this, and this and see how well it fits”. And here, you’re giving a computer the chance to do all those experiments for you and to say “Please try out these variables and find what has the best fit”.

But there’s kind of more autonomy given to the computer to do all of those different experiments. Would that be a fair summary?

06:19 Adrian: Yeah. We try to limit the human intervention into the development of the scores, so it is not biased by our preconceived ideas about the prognostic factors in myelofibrosis. This has led indeed to some interesting discoveries that we can further discuss later on.

But the idea is basically to be capable of implementing, in a current way, these artificial intelligence tools, so as to avoid too much human influence during the tuning of the algorithm; and then to be capable of making an interpretation of the results.

The nice thing is that this is something you can also team with Cox regression, for example. But for the risk prediction… we didn’t want to do closed risk groups, but rather a survival prediction. So we can extrapolate what the survival of a particular patient will be according to the prognostic information, compared to the entire Spanish cohort of myelofibrosis, in this case.

alculate this [indecipherable:

But that creates a lot of uncertainty in the predictions of some groups, and in this way, by creating an individual risk score, an individual risk prediction, that you can then extrapolate into survival or cure, and the predictions appear to be much better.

08:25 Stephen Hibbs: Thank you. Juan Carlos, a question for you, then. I know that one really crucial part of this study that made it possible was the really large Spanish registry of myelofibrosis patients. Would you be able to say a bit more about this and the benefits that have come from having this registry?

GEMFIN, and it was created in:

or instance in myelofibrosis,:

Over time, what we have done mainly is to try to assess the results of treatments in real life; treatments that are usually not even approved in myelofibrosis, but are commonly chosen. For instance, erythropoietin, danazol and so on (steroids). And also, we have made a few contributions trying to validate the prognostic scoring systems that were developed in centres of excellence.

from patients diagnosed from:

Although, of course, the physicians are the ones who enter the data in the registry, so there may be a difference in the conceptions and things. So the quality of the data in this place could be quicker in some aspects, but it is not biased, I mean. All the patients are diagnosed in a single centre. They want to put the patient here.

So I would say that sixty centers contribute to the Spanish MPN Registry – sixty. And I’d say that fifteen centres contribute more or less to half of the population of the registry. So there are big recruiters. But there are many centers that have recruited three, four or five patients. So the small centers.

I think this information; it is interesting because it gives you a real sense of the prognosis and the treatment of patients with this disease. So it was, I think, a very interesting data set to be analyzed with new techniques like the one that Adrian and Juan offer us at that time.

11:32 Stephen Hibbs: So when you first heard about this, the idea of machine learning and using it with this registry that you’ve been working on for some time, what were your hopes for what might emerge from this particular piece of research? I’ll ask that to you first, Juan Carlos.

11:50 Juan Carlos: Yes. Actually, well, I manage the patients with myelofibrosis from the beginning through all the sorts of therapies including transplantation. I also am a transplant physician.

And transplant is the only curative treatment for this disease, but it’s very toxic. The transplant-related mortality goes nearly to 30% – and this is one year after the procedure, in the first year.

So you have something that is curative but, on the other side, it can kill the patient. So the decision to send a patient to transplant in such a chronic disease like myelofibrosis, because it is very heterogeneous; some patients live more than ten years or fifteen years, and others are relieved from the beginning… the decision has to be based on as much information, prognostic information, as you have.

It is commonly said that in myelofibrosis we have more scoring systems than patients, but actually the reality is that none of them works perfectly, of course, and you have to base your decision on the information that you have, which is not always complete. And the patient is in different clinical settings, not always in centres of excellence. You may not have cytogenetic studies, because there is a dry tap. You don’t have –

So there is still a need for more accurate tools, and I thought artificial intelligence could be one of those, because it can give you an individualized prediction. I think that takes into account what Adrian has said. Everybody knows that the number of blasts in myelofibrosis is a very important prognostic feature – and in the scoring systems you have a cut-off. It’s 1% or 2%, but 2% is not the same as 5% or 10%.

In this type of platform, you can account for these important prognostic values. So I thought it was a good opportunity to try to test. I don’t think this is the end of the road. Of course, we can improve this. But it was like a first experience in myelofibrosis, and I think it was very important. It was worth publishing it. Although we had to struggle a little bit with the reviewers, I should say.
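The point about blast cut-offs can be made concrete with a minimal sketch in Python. The threshold, the function names and the linear weight are assumptions invented for this illustration; they are not taken from the paper or from any published scoring system, and a random forest would learn a non-linear relationship rather than the simple linear stand-in used here.

```python
# Illustrative only: the cut-off and the weight below are invented for this
# sketch and do not come from the paper or from any published score.

BLAST_CUTOFF_PERCENT = 1.0  # hypothetical threshold


def categorical_points(blast_percent: float) -> int:
    """Cut-off style scoring: 1 point if blasts reach the threshold, else 0."""
    return 1 if blast_percent >= BLAST_CUTOFF_PERCENT else 0


def continuous_contribution(blast_percent: float, weight: float = 0.1) -> float:
    """Continuous style scoring: the contribution grows with the blast count.

    A linear weight is a stand-in for whatever relationship a model learns.
    """
    return weight * blast_percent


# Patients with 2%, 5% and 10% blasts all land in the same category,
# while the continuous contribution keeps them apart.
for blasts in (0.0, 2.0, 5.0, 10.0):
    print(blasts, categorical_points(blasts), continuous_contribution(blasts))
```

With a cut-off, every patient above the threshold receives the same point; with a continuous input, the values stay distinguishable, which is the granularity both speakers refer to.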

14:12 Stephen Hibbs: Yeah. Okay. What about you, Adrian? When you were first conceiving of this idea, what did you hope would come from it?

14:18 Adrian: So at least — Well, at the very, very beginning, I thought this was a very interesting opportunity to work closely with the Spanish group. They are a very professional group of colleagues.

Although I don’t have so much experience in treating myelofibrosis, because I am clinically focused on other lymph disorders, I thought this could be a really interesting project, because I knew perfectly that the scoring systems that are traditionally used in myeloid disorders and also in lymphoid disorders, and even in myeloma, are working quite suboptimally, because they don’t exploit the full granularity of the data.

And this limits the reproducibility and creates great uncertainty about some predictions. This is just to mention, for example, the patients with myeloma who are classified into the intermediate-risk group of the Revised ISS, who have huge heterogeneity in their outcomes; and this happens over and over in different disorders.

So we thought internally that this was a great opportunity to exploit simple information that is available to any hematologist taking care of any patient with myelofibrosis anywhere. You just need a hemogram – and a patient – to apply the model, and to create a new machine learning-based risk scoring system that uses data from a very diverse background of centres.

As Juan Carlos mentioned, this is important, because this is expected to be much closer to the reality of patients in the universal healthcare system, as compared to only prognostic systems that are developed in very particular centres.

And the other thing that I found very interesting at the very beginning, was to evaluate to what extent this phenotypic information; because in myelofibrosis, you have a lot of information about the phenotype of the disease, with a hemogram.

So to what extent is this providing information that could be overlapping with genomic mutation? So, to what extent do we need the genomics to risk-stratify patients, or not?

And this is obviously not a question of yes or no, but there is some overlap that you can further exploit with the traditional and simple clinical, or hemogram information, that was not currently being done. And this was another interesting area of development that we thought could be of interest for many physicians taking care of myelofibrosis patients around the globe.

17:17 Stephen Hibbs: Just for listeners, just to clarify. The term hemogram is a full blood count? It’s not a term I’m familiar with using.

17:26 Adrian: Yeah, sorry. It’s that. A blood count.

17:30 Stephen Hibbs: Sticking with you for a minute, Adrian. I’m going to ask you both this question. Juan Carlos has already alluded to the difficulty of the kind of “review” process, but just kind of thinking about this whole project, I’ll be interested to know – what’s been some of the most challenging aspects of seeing a project through like this, from its initial conception through to getting it published and beyond?

What would you say has been the most difficult part for you, Adrian?

17:55 Adrian: The first interesting point was an internal debate. So, with the hematologists in the Spanish group, because there is a need for a change in mindset. That needs to be done progressively and with a lot of discussion, so we can all get accustomed to using the same language and to accommodate our expectations about the technology.

And then, once we’ve done this, we started to work with the data and within some interesting models and we started to filter out some information that; this is very important. I mean, you can’t do this project without co-operating with the specialists in myelofibrosis, because some of the information in the database had some limitations in its interpretability and this is something that needs to be accounted for.

And then, I think once we achieved the final model and we prepared the paper and submitted it, the main limitation that we found is the lack of available reviewers capable of making a high quality peer review of such a type of paper. This is not their fault. I mean, they are not prepared for machine learning yet.

So probably, the people who are doing the greatest developments in AI technologies are all very young. However, in the medical world, it is the other way around. So the seniors are the leaders, the reviewers of the papers.

So there is a need to develop some kind of specialized information technology reviewers in this process. Both to ensure that these papers are reviewed correctly, but also to ensure that the results are real, because there can be some biases during development of the machine learning models, etcetera, that need to be accounted for by these specialized reviewers, or senior reviewers or whatever.

20:19 Stephen Hibbs: Yeah. Anything you’d add to that, Juan Carlos, of kind of difficulties and challenges along the way with this project?

20:25 Juan Carlos: So my part, I would say, the first part, would be the quality of the data, because it includes a long period of time. So for instance, the criteria of diagnosis of myelofibrosis have changed over the time. For instance, the assessment of the bone marrow fibrosis criteria has also changed.

So how we could analyze this and how we could say we have to think about how to analyze this type of data… It was one particular problem.

The other one was the kind of preconceptions that some reviewers have – and I also have as well. So we have the preconception that genomic data is crucial. It seems that almost everything can be explained by the genomics. Although, with trying to do this analysis, we noticed that also the basic blood cell counts and the clinical characteristics also reflect the genomic data.

We had, for instance, information of somatic mutations in 80% of the data set. 80%. And it was not included in the final calculator, because it did not add any additional information to the prognostic value of clinical hematological parameters. Trying to understand this and trying to explain this to the reviewer was a tough job, I think.

And then of course, we have certain limitations. We didn’t have the information on additional somatic mutations in many patients. I understand that this is the limitation of the study. But the first one, I mean, everybody thinks “Okay, if you have a category of myelofibrosis is the best prognosis”. It should be included in the model.

But actually, we tried that – and it did not add anything to the model. With simple variables, without cut-offs, we were able to do accurate predictions. And the first one who had to try to understand and digest this; is ourselves. I mean, myself, as a clinician.

22:34 Stephen Hibbs: Yes. That’s fascinating. So I guess it’s almost a challenge to some orthodoxy here, really, of everyone has got used to leaning quite heavily on genetic information – and after a while, I guess, you develop a sort of strong attachment to that, as being definitely the way forward.

And here, the humble full blood count hemogram, with some clever computing and modelling, has done more heavy lifting. And yet, it is a surprise. I can see how surprises are difficult, aren’t they? That’s fascinating.

So can I ask for you then, Juan Carlos… Now you’ve got this model. You mentioned it’s a start. It’s not the end of this work. Does it change, for you, either the decisions you’re making or the conversations you’re having with patients in clinic, with this incredibly difficult decision about who you take to transplant or not?

Or are we not there yet, for this being practice changing, for you?

23:44 Juan Carlos: Well, it’s not practice-changing, but it is another tool. I’m including this in the conversation that I have with my patients. So I am including, definitely, this prognostic scoring system. But in our recommendation in the paper, we said that, for the time being, this difficult decision should not be based on one scoring system. You need to have more information.

And in particular, we still think that the molecular data is important, particularly regarding the risk of acute transformation, which is something that you should avoid. If the patient is high risk, try to avoid this transformation. I think molecular data is important for that purpose.

So I think in the future we should develop a kind of updated model, when all the patients have enough clinical and molecular data – all the patients. And then we try and we assess the real value, the diagnostic value, of each of these features. I think this should be done. I don’t know in which setting, but in the next year.

But we cannot say “Okay, we are happy with the conventional prognostic models and that is enough”, because, as Adrian said, I mean these techniques give you a lot of opportunities, and we are watching every day on the radio and on TV that it’s disrupting technology. It’s changing things. I think we have given readers a view of that and we are applying it in clinical practice at this time.

25:24 Stephen Hibbs: Thanks. So Adrian, I just want to pick up on one thing you’d said when you were talking about the review process here, of the importance of having people who could look at this quite complicated, or specialized, work. I particularly wanted to touch on this idea of algorithmic fairness.

We’re increasingly aware of social and demographic disparities in people’s outcomes. So depending on your socioeconomic status or your ethnicity, or all sorts of other things that in theory shouldn’t have much to do with your outcomes, people’s outcomes are different; and how, actually, certain models in medicine can make that worse.

So a sort of high profile example was about kidney disease scoring. I’ve heard it said that, with machine learning, that can be even harder to spot, because the computer is doing so much of the work. You might end up with a model that is only fair or useful for some patients, but for others actually it doesn’t work or it’s not representative.

Is this something that you have had any thoughts about in this area?

26:29 Adrian: I totally agree with your view, because one of the things that you need to be very careful about with AI tools is over-fitting to the structure of your population in the clinic cohort and the possibility of including some biases that you cannot account for.

So this can also happen with other statistical tools, but it’s not so severe as with machine learning. So we actually introduced some techniques to account for that, such as cross-validation and train-test split validation, and we are very happy because across these validations we were able to reproduce the superiority of the prognostic scoring system, compared to the IPSS.
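As a rough illustration of the two validation ideas mentioned here, the following Python sketch holds out a test set and then builds cross-validation folds from the remaining patients. The number of folds, the test fraction, the cohort size and the function names are all assumptions chosen for the example; the conversation does not state the settings used in the study.

```python
import random


def train_test_split_indices(n, test_fraction=0.25, seed=0):
    """Shuffle indices 0..n-1 and hold out a fraction as a final test set."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_test = int(round(n * test_fraction))
    return indices[n_test:], indices[:n_test]


def k_fold_indices(indices, k=5):
    """Yield (train, validation) index lists.

    Each index appears in exactly one validation fold.
    """
    folds = [indices[i::k] for i in range(k)]
    for i, validation in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


# Hypothetical cohort of 100 patients: 25 are set aside for final testing,
# and the other 75 are rotated through 5 train/validation folds.
train_idx, test_idx = train_test_split_indices(100)
splits = list(k_fold_indices(train_idx, k=5))
```

The model is tuned only on the training folds, so performance on the validation folds and on the held-out test set gives an estimate that is less affected by over-fitting to one particular sample.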

But anyway, we need to be very aware about the possibility of some biases occurring in any population. We believe that in this case this should be minor. At least, biases related to socioeconomic status are not so severe in Spain, because, as in other parts of Europe, the healthcare system is public.

And we can incorporate data from very different backgrounds. From small hospitals to large academic hospitals, that we think represent the reality, or is very close to the reality, of the myelofibrosis in a developed country.

er country. For example, from:

And maybe, maybe in other countries in second or third world, developing countries (sorry), these patients might not have access to JAK inhibitors, and therefore the machine learning might be over or under estimating the risk of some of these patients, just because they don’t have the availability of this drug.

Because in Spain, if the patient progresses, you can add the JAK inhibitor and the patient will probably have a response and prolong his or her survival, but this might not be the same in other countries.

So there are a lot of implications of these prognostic scores, and when trying to incorporate them into a different environment, at least a very different country with a very different socioeconomic background, we might need to share not only the algorithm but maybe to also share the methods, so they can develop their own prognostic scoring systems, if this is of interest for them, with the data that is representing their country.

For example, another thing that can have an impact is that these patients have long survival, normally. Although very intriguing, as many of these patients live very long. And average life expectancy in Spain is huge. I mean, I think it’s over 83 or 84 years. It’s difficult to know if this will generalize to countries whose life expectancy is ten, twenty or even more years less.

So these are things that need to be accounted for when dealing with survival models. And maybe this is just a starting point in the field to further discuss this with our peers in the big conferences.

30:21 Stephen Hibbs: Yes. Great. Thank you. I guess my final question is the sort of “blue sky thinking” question. I’ll come first to you, Juan Carlos, for this one.

If you could have unlimited money and access to the data for all myelofibrosis patients anywhere, everywhere… what would be the one question or piece of research that you’d want to do to try and forward our understanding of prognostication in myelofibrosis?

30:49 Juan Carlos: Well, I would create a huge data set of clinical and molecular data. I will ask this data set not only about survival but about risk of acute transformation in the next, in the following two years after the clinical factors.

With this, I think we could much better improve our transplant decision making based on prognostic data. I think this actually can be performed in the next year or something. It’s not a chimera or something like that.

31:29 Stephen Hibbs: Brilliant. And to you, Adrian? Anything else you’d like to add to that?

31:34 Adrian: Yeah. I will try to add — As Juan Carlos said, the key is to add more information, surely. But I would also try to add histological information. We can now digitalize the slides of the bone marrows and extract features, both quantitatively or even complex patterns that can only be derived from computer machine tools, which are very complex, that can also add some prognostic relevance.

I could also include follow-up data, so that we can make the model more dynamic. So a patient after two years can recalculate the score according to the previous evolution and make an expectation of survival for the coming two or three years.

And maybe, I would also try to put this into correlation with the response to disease modifying agents such as JAK inhibitors. This could also be of interest. So a multifunctional approach, ideally. Obviously.

32:43 Stephen Hibbs: Yeah. Thank you. And I guess when you’re dealing with — It’s almost like you’re dealing with a nuclear button option of “Do we go to transplant or not?” and it’s such a massive question that, I guess, anything that’s going to help along the way is going to be worth investigating.

That’s brilliant. Well, thank you both. I feel like we’ve learned a lot today about, I guess, thinking about this question of transplant decision making and learning about the possibilities of machine learning.

I think learning about challenging orthodoxies, as you have, by showing that the full blood count can sometimes give you as much (if not more) information than genomics. And to think about some of the road ahead and some of the things we’ve got to be a bit careful of in complex models like this.

So I’m really grateful for your input and I just want to highlight to any of our listeners that the paper is available on the HemaSphere website to have a read and to learn a bit more.

Thanks all for listening and we’ll be back with another HemaSphere podcast episode soon.