The idea of a “pipeline” is hardly new. Defining and implementing a well-understood workflow has long been key to efficient and cost-effective physical manufacturing — just look at any factory floor.

As software development emerged and turned the intellectual property of modern businesses into products, the pipeline became a foundation of development efforts and effective software project management. Now that machine learning and AI are gaining traction, the ideas and benefits of development pipelines can be applied directly to ML model creation and deployment. Doing so well requires understanding the role, benefits and features of pipelines in ML projects.

What is a machine learning pipeline?

An ML pipeline is the end-to-end process used to create, train and deploy an ML model. Pipelines help ensure that each ML project is approached in a similar manner, enabling business leaders, developers, data scientists and operations staff to contribute to the final ML product.

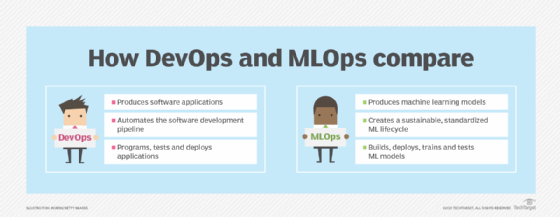

When implemented properly, an ML pipeline establishes cross-disciplinary collaboration among developers, data scientists and operations staff. The similarities of ML pipelines to other software development pipelines are so strong that the label MLOps has arisen for staff specializing in ML operations, drawing on the DevOps moniker.

Although the actual steps and complexity of an ML pipeline can vary dramatically based on a business’s needs and goals, ML pipelines typically involve the following broad phases:

- Definition of business goals and objectives.

- Data selection and processing.

- Model creation or selection and training.

- Model deployment and management.

Benefits of a machine learning pipeline

Establishing and refining an ML pipeline brings many of the same benefits that organizations depend on for other software development environments. These include speed, uniformity, common understanding, automation and orchestration.

Accelerated ML model deployment

There are many potential avenues for ML model development, training, testing and deployment. But when different ML teams or projects within the same organization take different approaches, the variations in processes and procedures can result in unwanted delays and unexpected problems.

Creating a uniform ML pipeline avoids that potential chaos. With an established pipeline, each ML project is approached the same way, using the same tools and processes. This means that a properly implemented and well-considered ML pipeline can accelerate projects, bringing an ML model from inception to deployment much faster than free-form approaches.

Favors reusable components

Establishing a pipeline in an ML project enables organizations to break down processes and workflows into clear and purposeful steps, such as model testing, that can be reused across projects. Data and data sets are also important reusable components in ML projects.

Embracing the idea of reusability lets model developers create steps and data sets that can be selected and connected with orchestration and automation technologies to streamline the pipeline. This helps to both accelerate the project and reduce human error, which can lead to oversights and security problems. In addition, workflow steps in the form of reusable components can allow for variations in the overall pipeline — such as skipping or adding certain steps as needed — without requiring staff to create and support new pipelines for different purposes.
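
As a rough illustration of the reusable steps described above, the following Python sketch treats each step as a small function and lets one pipeline definition run with or without a given step. The step names, the `state` dictionary and the `run_pipeline` helper are all hypothetical and exist only for this example; real orchestration tools offer far more.

```python
from typing import Callable, Iterable

# A step is any function that takes a shared state dict and returns an updated one.
Step = Callable[[dict], dict]


def load_data(state: dict) -> dict:
    # Hypothetical stand-in for reading a shared, reusable data set.
    return {**state, "rows": [3.0, None, 5.0, 4.0]}


def clean_data(state: dict) -> dict:
    # Drop missing entries so later steps see only usable values.
    return {**state, "rows": [r for r in state["rows"] if r is not None]}


def check_model(state: dict) -> dict:
    # Placeholder for a model testing step: record the mean of the cleaned rows.
    rows = state["rows"]
    return {**state, "mean": sum(rows) / len(rows)}


def run_pipeline(steps: Iterable[Step], skip: frozenset = frozenset()) -> dict:
    """Run the steps in order, skipping any step whose function name is in `skip`."""
    state: dict = {"executed": []}
    for step in steps:
        if step.__name__ in skip:
            continue
        state = step(state)
        state["executed"].append(step.__name__)
    return state


STEPS = [load_data, clean_data, check_model]

full = run_pipeline(STEPS)                                      # every step runs
no_check = run_pipeline(STEPS, skip=frozenset({"check_model"})) # same pipeline, one step omitted
```

Because the variation is expressed as an argument rather than a second pipeline, there is still only one set of steps to maintain.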

Easier model troubleshooting

ML projects are fundamentally software development efforts that blend data and data science into the project lifecycle. Consequently, like anything else in software development, ML projects entail inevitable design, coding, testing and analytical challenges that require troubleshooting and remediation.

Establishing a common ML pipeline with clear steps and reusable components helps ensure that each ML project follows a similar well-defined pattern. This makes ML projects easier to understand, evaluate and troubleshoot.

Better regulatory oversight

The enterprise is ultimately responsible for the ML model that is deployed and the outcomes it provides. As ML models are increasingly productized, they are also increasingly scrutinized for factors such as the following:

- Model performance — for example, speed of response to user queries.

- Bias in how the model is trained and makes decisions.

- Data security, including user preferences and other potentially sensitive information.

A pipeline enables an organization to codify and document the processes and elements of its ML projects. This encompasses data components, selection criteria, testing regimens, training techniques, monitoring and observability metrics, and KPIs. In addition, each component of the pipeline can be independently documented and evaluated for security, compliance, business continuity and alignment with business objectives — in other words, whether the ML project is driving the intended results for the business.

Blends DevOps and MLOps

Machine learning projects often involve considerable software development investment, particularly when creating or modifying models and algorithms. DevOps has long been a leading methodology for software projects, combining development with common operations tasks such as deployment and monitoring.

As ML projects gain traction in the enterprise, they’re increasingly handled by MLOps teams responsible for ML development, deployment and operations. In this context, pipelines play a crucial role in providing control and governance for both ML projects and MLOps environments.

Basic steps of a machine learning pipeline

There is no single way to build an ML pipeline, and the details can vary dramatically based on business size and industry requirements. However, most ML pipelines can be divided into four major phases: business goal setting, data preparation, model preparation and model deployment.

1. Business goal setting

Any ML project workflow should start with a careful consideration of business needs and goals. Business and technology leaders as well as ML project stakeholders should take the time to design an ML pipeline based on current and anticipated future requirements.

Common goal-setting tasks include the following:

- Understand the purpose of the ML project and its expected outcomes for the business.

- Understand the prevailing regulatory environment for ML models, data sets and ML usage.

- Consider the relationship of ML to current and future business governance obligations.

For example, could the business be adversely affected by choices such as which data is selected or which models are trained? Could the business be subject to litigation in the event of algorithmic bias or other undesirable outcomes for users? Such issues should be addressed when developing the overall ML pipeline.

2. Data preparation

Data is the soul of ML and AI; without data, neither can exist. A major portion of any ML pipeline thus involves acquiring and preparing data used to train the ML model.

This phase of the ML pipeline can include several substeps, such as the following:

- Data selection. This step in the pipeline defines what data is used to train the model. Data can be selected or acquired from myriad sources, including user records, real-time devices such as IoT fleets, experimental test results such as scientific research and a wide range of third-party data sources. For example, if the business is developing an ML model to make purchase recommendations to online retail customers, the selected data will likely include user preferences and purchase history.

- Data exploration. This step focuses on vetting or validating the selected data to ensure that it contains the information that the ML model will need. For example, ML training data should be timely and complete, as data that is obsolete or missing desired elements could render the data set unusable.

- Data cleaning. The next step in data preparation involves data transformation and cleaning tasks. This includes removing invalid or unusable data entries; labeling data and identifying specific data elements; and normalizing data, or performing certain processes to standardize data sets. For example, a data cleaning step in an ML pipeline might involve acquiring temperature data, discarding missing or out-of-range temperature readings, and converting Fahrenheit measurements to Celsius for uniformity.

- Feature determination. This final stage involves selecting elements of the data set that are important or relevant for the ML model. For example, a team might want to limit a data set consisting of user purchase history and preferences to the last six months of purchase history, focusing on user preferences that are most relevant to the business and its product offerings. If the desired data features are missing or incomplete, the data set might not be usable to train the ML model.
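
The cleaning and feature determination examples above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the `Reading` type, the plausibility bounds of -50 to 60 degrees Celsius, the 183-day approximation of six months and the shape of the purchase records are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Reading:
    value: Optional[float]  # None models a missing measurement
    unit: str               # "C" or "F"


# Assumed plausibility bounds in Celsius; real limits depend on the sensor and use case.
MIN_C, MAX_C = -50.0, 60.0


def to_celsius(reading: Reading) -> Optional[float]:
    """Return the reading in Celsius, or None if it is missing or has an unknown unit."""
    if reading.value is None:
        return None
    if reading.unit == "C":
        return reading.value
    if reading.unit == "F":
        return (reading.value - 32.0) * 5.0 / 9.0
    return None


def clean_temperatures(readings: List[Reading]) -> List[float]:
    """Data cleaning: convert to Celsius, then drop missing and out-of-range values."""
    converted = (to_celsius(r) for r in readings)
    return [c for c in converted if c is not None and MIN_C <= c <= MAX_C]


def recent_purchases(purchases: List[dict], today: date, days: int = 183) -> List[dict]:
    """Feature determination: keep purchases from roughly the last six months."""
    cutoff = today - timedelta(days=days)
    return [p for p in purchases if p["date"] >= cutoff]


raw = [Reading(68.0, "F"), Reading(None, "C"), Reading(21.5, "C"), Reading(999.0, "C")]
cleaned = clean_temperatures(raw)  # missing and out-of-range readings are discarded

purchases = [
    {"item": "kettle", "date": date(2024, 5, 20)},
    {"item": "toaster", "date": date(2023, 11, 2)},
]
recent = recent_purchases(purchases, today=date(2024, 7, 1))
```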

3. Model preparation

The third general phase of an ML pipeline involves creating and training the ML model itself. If data is the soul of ML, the model is its heart.

The numerous possible steps in the model preparation phase include the following:

- Create or select the model. An ML model is an amalgam of data-driven algorithms. A business can opt to build its own ML model or use a suitable model from an outside source, such as an open source library. Creating an ML model from scratch requires a talented software development team to design the architecture and implement the coding, but the resulting model can meet the most stringent or specific business requirements.

- Validate the model. The next step is to validate the model with a testing data set. To do so successfully, ML engineers need an objective understanding of the model and how it works. The goal here is to ensure that the model works and delivers useful results based on a known, often standardized, data set. If the model behaves as expected with a known data set, it can be trained with the selected data set. If the model deviates from its expected test results, the model must be reevaluated and recoded. It’s also vital to prepare a model that adheres to any regulatory or governance obligations. If ML engineers cannot understand or predict how an ML model works, the ML project will not stand up to regulatory scrutiny.

- Train and tune the model. This step involves loading the training data set into the model and then tuning the model, or refining its decision-making, by adjusting parameters and hyperparameters as needed. Parameters are the values the model learns from the training data. Hyperparameters are settings chosen outside of training, such as a learning rate, that control how the model learns and behaves; they are often adjusted iteratively to tailor the model’s decision-making scope or other behaviors.

- Evaluate the model. Once the model is built, trained and tuned, the next step is to test it and verify its behavior. This is a continuation of the validation step above, but this time, the goal is to see how the model behaves with live data — as opposed to test data — and check that the results make sense. If the model behaves as expected, it can be considered for deployment. If not, the model might require recoding, retraining or retuning.
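
To make the train, tune and evaluate steps above more concrete, here is a deliberately tiny Python sketch. It assumes a one-parameter model (`y ≈ w * x`) fitted by gradient descent, treats the learning rate as the only hyperparameter, and uses made-up data; none of these choices come from a particular library, and a real project would normally rely on an established ML framework.

```python
def train(xs, ys, learning_rate, epochs=200):
    """Fit y ~ w * x by gradient descent. `w` is the parameter learned from data."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w


def mse(w, xs, ys):
    """Mean squared error of the model on a data set."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def tune(train_set, val_set, learning_rates):
    """Try each hyperparameter value and keep the one with the lowest validation error."""
    best = None
    for lr in learning_rates:
        w = train(*train_set, learning_rate=lr)
        err = mse(w, *val_set)
        if best is None or err < best[0]:
            best = (err, lr, w)
    return best


# Illustrative data following y = 2x, split into training, validation and held-out sets.
train_set = ([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0])
val_set = ([5.0, 6.0], [10.0, 12.0])
holdout_set = ([7.0, 8.0], [14.0, 16.0])

val_error, best_lr, best_w = tune(train_set, val_set, learning_rates=[0.001, 0.01, 0.05])

# Evaluation: check the tuned model on data it saw in neither training nor tuning.
holdout_error = mse(best_w, *holdout_set)
```

In this sketch the smallest learning rate has not converged after 200 epochs, so the tuning loop rejects it in favor of a larger value. That is the kind of trade-off hyperparameter tuning is meant to expose.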

4. Model deployment

The final phase of a typical machine learning pipeline brings the trained and tuned ML model into a production environment where it is live and available to users. It’s important for business and technology leaders to have a clear deployment target, such as a local data center or cloud provider, as well as a detailed list of metrics or KPIs to monitor.

The steps within the model deployment phase typically include the following:

- Send the model to production. Just as DevOps professionals deploy apps, MLOps professionals deploy ML models to suitable production environments. Deployment often includes provisioning the resources and services needed to host the ML model and to store and access new data that becomes available as the model is used. Deployment plans should also include connecting monitoring and reporting services to gather details about the model’s performance.

- Monitor the model. The monitoring and reporting services connected to the ML model during deployment must be watched and the resulting data evaluated to ensure that the model is healthy, available and performing as expected. Performance KPIs can be used to drive infrastructure and cost justifications, such as provisioning more resources to meet increased compute demands.

- Retune the model. Some ML products remain static in their decision-making, but many others are designed to learn and respond to changing conditions or new data. For the latter category, the last step in many ML pipelines is retuning the model — a process that involves understanding how new or changing data shapes the ML model’s behavior over time. This can require periodically reevaluating and retesting the model to ensure that its behavior remains predictable and governable as data changes.
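
One way to picture the monitoring and retuning steps above is a small monitor that tracks a rolling error KPI and signals when the model has drifted from its baseline. The sketch below is only an assumption-laden illustration: the `ModelMonitor` class, the choice of absolute error as the KPI, the window size and the 50% tolerance are all hypothetical.

```python
from collections import deque
from statistics import mean


class ModelMonitor:
    """Track a rolling error KPI and flag when the model may need retuning."""

    def __init__(self, baseline_error: float, tolerance: float = 0.5, window: int = 5):
        self.baseline_error = baseline_error
        self.tolerance = tolerance          # allowed relative increase over the baseline
        self.errors = deque(maxlen=window)  # keeps only the most recent observations

    def record(self, prediction: float, actual: float) -> None:
        """Store the absolute error of one production prediction."""
        self.errors.append(abs(prediction - actual))

    def needs_retuning(self) -> bool:
        # Wait for a full window so a single bad prediction does not trigger retuning.
        if len(self.errors) < self.errors.maxlen:
            return False
        return mean(self.errors) > self.baseline_error * (1 + self.tolerance)


monitor = ModelMonitor(baseline_error=1.0)

# Predictions that match the baseline error: no action needed.
for _ in range(5):
    monitor.record(prediction=10.0, actual=11.0)
stable = monitor.needs_retuning()

# Conditions change and errors grow: the monitor now recommends retuning.
for _ in range(5):
    monitor.record(prediction=10.0, actual=13.0)
drifted = monitor.needs_retuning()
```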

Automation in ML pipelines

Automation and orchestration are essential elements of machine learning pipelines.

Automation helps to streamline and standardize the pipeline’s execution and removes many of the errors and oversights that human interaction can introduce. In effect, automation helps ensure that the pipeline executes the same way every time, using the same established criteria or configurations. For example, when an ML model is trained and ready for deployment, automation is vital for provisioning and configuring the deployment environment for performance and security, moving the model into the environment, and then implementing the monitoring and instrumentation needed to oversee the model’s ongoing behavior.

A key to effective automation is reusable process steps (or actions) and data. When the pipeline is designed as many small steps using well-defined data sets, it’s much easier to apply automation and orchestration to build a robust workflow that handles the end-to-end process while still allowing some variability based on circumstance, such as omitting or adding certain steps or data components when project criteria are met.

Testing is a vital element of any pipeline automation. Every step must be thoroughly tested and vetted, especially when the execution of those steps is conditional. Testing also produces documentation and lends itself to careful versioning, enabling process steps to use the same version control techniques used in other software components. This aids business governance and compliance efforts by helping the business show how the process steps and overall process behaviors operate in ML project development.

Automation can then be used to tie all the steps together to build the complete end-to-end process, providing conditional execution or varied pathways to completion based on project criteria and requirements. In other words, it’s better to use the same pipeline in 10 different ways than it is to build and maintain 10 different pipelines.
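
Conditional execution of this kind can be sketched by attaching a condition to each step and letting the project's own criteria decide which steps run. The following Python example is a minimal sketch under stated assumptions: the `Step` class, the `regulated` flag and the step names are invented for illustration and do not reflect any particular orchestration product.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str
    action: Callable[[dict], None]
    # Predicate deciding whether the step applies to a given project; defaults to always.
    when: Callable[[dict], bool] = lambda project: True


def note(message: str) -> Callable[[dict], None]:
    """Build a placeholder action that only records that the step ran."""
    def action(project: dict) -> None:
        project.setdefault("log", []).append(message)
    return action


def run(steps: List[Step], project: dict) -> List[str]:
    """Execute each step whose condition holds for this project, in order."""
    executed = []
    for step in steps:
        if step.when(project):
            step.action(project)
            executed.append(step.name)
    return executed


# One pipeline definition shared by every project.
PIPELINE = [
    Step("prepare_data", note("data ready")),
    Step("bias_audit", note("audit passed"), when=lambda p: p.get("regulated", False)),
    Step("deploy", note("deployed")),
]

internal_run = run(PIPELINE, {"regulated": False})  # audit step is skipped
regulated_run = run(PIPELINE, {"regulated": True})  # audit step is included
```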

Stephen J. Bigelow is a senior technology editor in TechTarget Editorial’s Data Center and Virtualization Media Group.