Abstract

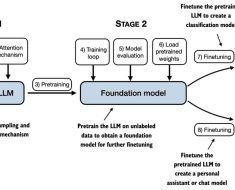

Objectives: Phenotyping is a core task in observational health research using electronic health records (EHRs). Developing an accurate algorithm typically demands substantial input from domain experts, including extensive literature review and evidence synthesis. This burdensome process limits scalability and delays knowledge discovery. We investigate the potential of large language models (LLMs) to improve the efficiency of EHR phenotyping by drafting high-quality algorithms.

Materials and Methods: In October 2023, we prompted four LLMs (ChatGPT-4, ChatGPT-3.5, Claude 2, and Bard) to generate executable phenotyping algorithms, written as SQL queries that adhere to a common data model, for three clinical phenotypes: type 2 diabetes mellitus, dementia, and hypothyroidism. Three phenotyping experts evaluated the returned algorithms on several metrics, including instruction following, algorithmic logic, and SQL executability. We then implemented the top-rated algorithm from each LLM and compared it against clinician-validated phenotyping algorithms from the Electronic Medical Records and Genomics (eMERGE) network.
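To make "executable phenotyping algorithms in the form of SQL queries" concrete, the sketch below shows the general shape of such a query. It is illustrative only and is not one of the algorithms generated or evaluated in this study. It assumes an OMOP-style `condition_occurrence` table (columns `person_id`, `condition_concept_id`, `condition_start_date`), uses hypothetical concept IDs, and encodes a hypothetical rule: at least two type 2 diabetes diagnoses on distinct dates and no type 1 diabetes diagnosis. The query runs through Python's built-in `sqlite3` module against a small in-memory toy table.

```python
import sqlite3

# Hypothetical concept IDs for illustration only; a real phenotype would use
# curated concept sets from the common data model's vocabulary.
T2DM_CONCEPT_IDS = (1001, 1002)
T1DM_CONCEPT_IDS = (2001,)


def find_t2dm_cases(conn: sqlite3.Connection) -> list[int]:
    """Return person_ids with >= 2 T2DM diagnoses on distinct dates and no T1DM diagnosis."""
    t2_placeholders = ",".join("?" * len(T2DM_CONCEPT_IDS))
    t1_placeholders = ",".join("?" * len(T1DM_CONCEPT_IDS))
    query = f"""
        SELECT person_id
        FROM condition_occurrence
        WHERE condition_concept_id IN ({t2_placeholders})
          AND person_id NOT IN (              -- exclusion criterion
              SELECT person_id
              FROM condition_occurrence
              WHERE condition_concept_id IN ({t1_placeholders})
          )
        GROUP BY person_id
        HAVING COUNT(DISTINCT condition_start_date) >= 2  -- inclusion threshold
        ORDER BY person_id
    """
    # Parameters are bound in the order the placeholders appear in the query.
    rows = conn.execute(query, T2DM_CONCEPT_IDS + T1DM_CONCEPT_IDS).fetchall()
    return [row[0] for row in rows]


# Toy in-memory table with an OMOP-style layout (assumed schema).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE condition_occurrence ("
    "person_id INTEGER, condition_concept_id INTEGER, condition_start_date TEXT)"
)
conn.executemany(
    "INSERT INTO condition_occurrence VALUES (?, ?, ?)",
    [
        (1, 1001, "2020-01-05"), (1, 1002, "2020-06-10"),  # two dates: case
        (2, 1001, "2021-03-01"),                           # one date: not a case
        (3, 1001, "2019-02-02"), (3, 1001, "2019-08-08"),
        (3, 2001, "2019-09-09"),                           # T1DM code: excluded
        (4, 1001, "2022-01-01"), (4, 1001, "2022-01-01"),  # same date twice: not a case
    ],
)
print(find_t2dm_cases(conn))  # [1]
```

Even in this toy rule, choices such as the minimum number of diagnosis dates and whether to apply exclusions determine how restrictive or inclusive the resulting cohort is.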

Results: ChatGPT-4 and ChatGPT-3.5 received significantly higher overall expert evaluation scores than Claude 2 and Bard for instruction following, algorithmic logic, and SQL executability. Although ChatGPT-4 and ChatGPT-3.5 effectively identified relevant clinical concepts, they showed limited ability to organize phenotyping criteria with the proper logic, producing algorithms that were either overly restrictive (low recall) or overly broad (low positive predictive value).
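The trade-off between recall and positive predictive value (PPV) described above can be illustrated with a short sketch. Treating a reference cohort (for example, patients identified by a validated algorithm) as ground truth, recall is TP / (TP + FN) and PPV is TP / (TP + FP). The function name and toy cohorts below are hypothetical, and the abstract does not specify the study's exact evaluation procedure.

```python
def recall_and_ppv(predicted: set[int], reference: set[int]) -> tuple[float, float]:
    """Compare a candidate phenotype cohort against a reference cohort of patient IDs."""
    tp = len(predicted & reference)  # reference cases the algorithm also flagged
    fn = len(reference - predicted)  # reference cases the algorithm missed
    fp = len(predicted - reference)  # flagged patients absent from the reference
    recall = tp / (tp + fn) if tp + fn else 0.0
    ppv = tp / (tp + fp) if tp + fp else 0.0
    return recall, ppv


reference = set(range(1, 6))    # hypothetical reference cases: patients 1..5
restrictive = {1, 2}            # flags few patients, all correct
broad = set(range(1, 11))       # flags everyone in 1..10

print(recall_and_ppv(restrictive, reference))  # (0.4, 1.0): low recall
print(recall_and_ppv(broad, reference))        # (1.0, 0.5): low PPV
```

An overly restrictive algorithm keeps PPV high but misses true cases, while an overly broad one captures every case at the cost of many false positives.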

Conclusion: Both ChatGPT-3.5 and ChatGPT-4 can improve EHR phenotyping efficiency by drafting algorithms of reasonable quality. However, achieving optimal performance with these algorithms still requires the involvement of domain experts.

Competing Interest Statement

The authors have declared no competing interest.

Funding Statement

This work was supported by R01GM139891, R01AG069900, F30AG080885, T32GM007347, K01HL157755, and U01HG011181.

Author Declarations

I confirm all relevant ethical guidelines have been followed, and any necessary IRB and/or ethics committee approvals have been obtained.

Yes

The details of the IRB/oversight body that provided approval or exemption for the research described are given below:

This study was approved by the institutional review board at Vanderbilt University Medical Center (IRB #201434).

I confirm that all necessary patient/participant consent has been obtained and the appropriate institutional forms have been archived, and that any patient/participant/sample identifiers included were not known to anyone (e.g., hospital staff, patients or participants themselves) outside the research group so cannot be used to identify individuals.

Yes

I understand that all clinical trials and any other prospective interventional studies must be registered with an ICMJE-approved registry, such as ClinicalTrials.gov. I confirm that any such study reported in the manuscript has been registered and the trial registration ID is provided (note: if posting a prospective study registered retrospectively, please provide a statement in the trial ID field explaining why the study was not registered in advance).

Yes

I have followed all appropriate research reporting guidelines, such as any relevant EQUATOR Network research reporting checklist(s) and other pertinent material, if applicable.

Yes

Data Availability

Source code for results and LLM-generated SQL-formatted algorithms are shared at https://github.com/The-Wei-Lab/LLM-Phenotyping-2024.