Figure 1. The increasing popularity of the keyword “cloud LLM” on Google since the release of ChatGPT (built on GPT-3.5) in November 2022.

Large Language Models (LLMs) have become a hot topic for businesses, especially since OpenAI released ChatGPT, built on GPT-3.5, in November 2022. As generative AI applications built on these models have grown more capable, companies face a dilemma between cost efficiency and reliability when deciding how to deploy them. In this article, we give an overview of cloud LLMs, present some case studies, and explain how they differ from local LLMs served in-house.

What is a cloud Large Language Model (LLM)?

A cloud LLM is a Large Language Model hosted in a cloud environment. These models, such as GPT-3, are AI systems with advanced language understanding capabilities that can generate human-like text. Cloud LLMs are accessed over the internet, which makes them easy to integrate into applications such as chatbots, content generation, and language translation.
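In practice, "accessed over the internet" usually means an HTTPS API call to the provider. The sketch below builds (but does not send) such a request. It assumes an OpenAI-style chat completions endpoint and the `gpt-3.5-turbo` model purely as an illustration; other providers use different URLs and payloads, and `build_cloud_llm_request` is a hypothetical helper, not part of any SDK.

```python
import json
import urllib.request

# Assumption: an OpenAI-style chat completions endpoint, chosen only as an example.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_cloud_llm_request(prompt: str, api_key: str,
                            model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) an HTTPS request to a hosted LLM."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The provider authenticates (and bills) each call using this key.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_cloud_llm_request("Translate 'good morning' into German.", "YOUR_API_KEY")
    print(req.get_method(), req.full_url)
    # Sending it requires network access and a valid key:
    # with urllib.request.urlopen(req) as resp: print(json.load(resp))
```

The point of the sketch is how little the caller manages: no model weights or GPUs, only a URL, a key, and a JSON payload.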

However, large enterprises, despite extensive cloud initiatives and SaaS integration, typically have only 15%-20% of their applications in the cloud.[1] This indicates that a significant portion of their IT infrastructure and applications still relies on on-premises or legacy systems.

Cloud LLMs are suitable for:

- Teams with limited technical expertise: Cloud LLMs are usually accessed through user-friendly interfaces and APIs, so they require less technical know-how to implement and use effectively.

- Teams with limited technology budgets: Cloud LLMs eliminate the need for significant upfront hardware and software investment. Users pay for cloud LLM services on a subscription or usage basis, which may be more budget-friendly.

Pros of Cloud LLMs

- No maintenance effort: Cloud service providers maintain and update the underlying infrastructure, relieving users of this burden; the cost of doing so is built into the subscription price.

- Connectivity: Cloud LLMs can be accessed from anywhere with an internet connection, enabling remote collaboration and use across geographically dispersed teams.

- Lower costs: Pay-as-you-go pricing reduces the initial capital expenditure on hardware and software, and users pay only when they actually use the model.

Cons of Cloud LLMs

- Security risks: Sending or storing sensitive data in a third-party cloud LLM service raises security concerns, such as data breaches or unauthorized access. This can be a burden for companies with strict privacy requirements, because the data leaves their direct control and may be exposed to attacks, including sophisticated social engineering.
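One common mitigation is to strip obvious personal data from prompts before they leave the company. The sketch below is a minimal, hypothetical example built on two regular expressions (`EMAIL_RE`, `PHONE_RE`). It is not a complete PII filter: it will miss many kinds of sensitive data and over-match some harmless text, such as long digit runs in dates.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs far more.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# A digit, at least 7 digits/spaces/brackets/dots/hyphens, then a final digit.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask e-mail addresses and phone-like numbers before text is sent to a cloud LLM."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or +1 (555) 123-4567 about case 42."))
    # prints: Contact [EMAIL] or [PHONE] about case 42.
```

Redaction reduces, but does not remove, the exposure described above; the provider still receives the rest of the prompt.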

What are Local LLMs?

Local LLMs refer to Large Language Models that are installed and run on an organization’s own servers or infrastructure. These models offer more control and potentially enhanced security but require more technical expertise and maintenance efforts compared to their cloud computing counterparts.
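With a local LLM, the same kind of request goes to a server inside the organization instead of a provider's API. The sketch below assumes the model is served by Ollama on its default port (`http://localhost:11434/api/generate`) and uses `llama2` as an example model name. `is_internal_endpoint` is a hypothetical and intentionally crude guard that only accepts `localhost` or private/loopback IP addresses.

```python
import ipaddress
import json
import urllib.parse
import urllib.request

# Assumption: the model is served locally by Ollama on its default port.
LOCAL_URL = "http://localhost:11434/api/generate"


def is_internal_endpoint(url: str) -> bool:
    """Crude check that a URL points at this machine or a private network address."""
    host = urllib.parse.urlparse(url).hostname or ""
    if host == "localhost":
        return True
    try:
        # Loopback (127.0.0.1) and RFC 1918 ranges (10.x, 192.168.x, ...) are private.
        return ipaddress.ip_address(host).is_private
    except ValueError:
        # Other DNS names could resolve anywhere, so treat them as external.
        return False


def build_local_llm_request(prompt: str, model: str = "llama2",
                            url: str = LOCAL_URL) -> urllib.request.Request:
    """Build (but do not send) a generation request for an in-house model server."""
    if not is_internal_endpoint(url):
        raise ValueError(f"refusing to send prompts to external endpoint {url}")
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},  # no third-party API key needed
        method="POST",
    )


if __name__ == "__main__":
    req = build_local_llm_request("Summarize this contract clause.")
    print(req.full_url)
```

The guard illustrates the control argument: the organization, not a provider, decides where prompts are allowed to go.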

Local LLMs are suitable for:

- Teams with strong technical expertise: Ideal for organizations with a dedicated AI department, such as major tech companies (e.g., Google, IBM) or research labs that have the resources and skills to maintain complex LLM infrastructure.

- Industries with specialized terminology: Beneficial for sectors like law or medicine, where customized models trained on specific jargon are essential.

- Those invested in their own cloud infrastructure: Companies that have already made significant investments in cloud technologies (e.g., Salesforce) can set up in-house LLMs more effectively.

- Those who need rigorous testing: Businesses that must test models extensively for accuracy and reliability.

Pros of local LLMs

- High security: Running the model locally allows organizations to maintain full control over their data and how it is processed, which helps them comply with data privacy regulations and internal security policies.

If you plan to build an LLM in-house, see our guide to gathering LLM data.

Cons of local LLMs

- Initial costs: Local deployment requires significant investment in GPUs and servers. For example, a mid-size tech company might spend a few hundred thousand dollars to set up local LLM infrastructure.

- Scalability: It is difficult to scale resources to meet fluctuating demand, such as the extra compute needed to fine-tune the model.

- Environmental concerns: Training one large language model (a large Transformer tuned with neural architecture search) was estimated to emit about 315 tons of carbon dioxide.[2]

Comparison of local vs cloud LLMs

Here is a comparison of local and cloud LLMs based on different factors:

| Factor | Local (in-house) LLMs | Cloud LLMs |
|---|---|---|
| Tech expertise | Strongly needed | Less needed |
| Initial costs | High | Low |
| Overall costs | High | Medium to high* |
| Scalability | Low | High |
| Data control | High | Low |
| Customization | High | Low |
| Downtime risk | High | Low |

*Overall cloud costs can grow quickly with usage, depending on business needs.

If you are willing to invest in cloud GPUs, check out our vendor benchmarking.

How to choose between local and cloud LLMs?

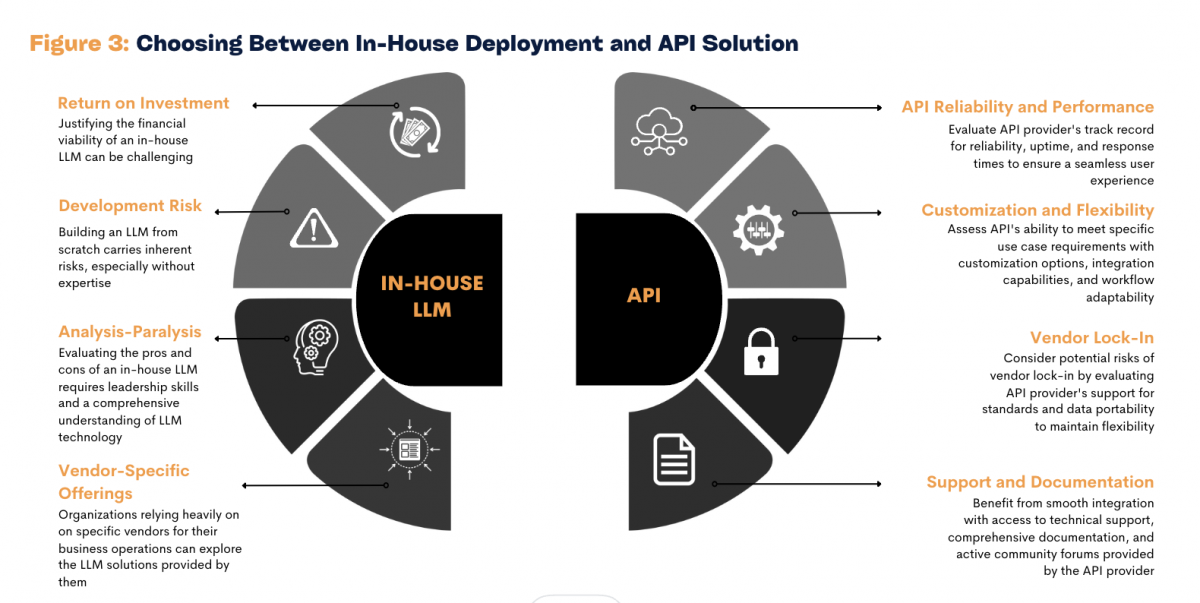

Source: AIM Research[3]

Figure 2. In-house vs. API LLMs

When choosing between local and cloud LLMs, consider the following questions:

1- Do you have in-house expertise?

Running LLMs locally requires significant technical expertise in machine learning and managing complex IT infrastructure. This can be a challenge for organizations without a strong technical team. On the other hand, cloud-based LLMs offload much of the technical burden to the cloud provider, including maintenance and updates, making them a more convenient option for businesses lacking specialized IT employees.

2- What are your budget constraints?

Local LLM deployment involves significant upfront costs, mainly due to the need for powerful computing hardware, especially GPUs. This can be a major hurdle for smaller companies or startups. Cloud LLMs, conversely, typically have lower initial costs with pricing models based on usage, such as subscriptions or pay-as-you-go plans.
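The trade-off between upfront and usage-based costs can be framed as a break-even calculation. The sketch below uses hypothetical figures: a $300,000 hardware outlay, $5,000 per month to run it, and a made-up cloud price of $0.01 per 1,000 tokens. Real prices vary widely by provider and model, and both helper names are ours.

```python
import math


def cloud_monthly_cost(tokens_per_month: int, price_per_1k_tokens: float) -> float:
    """Pay-as-you-go: the bill scales linearly with usage."""
    return tokens_per_month / 1000 * price_per_1k_tokens


def break_even_months(upfront: float, local_monthly: float, cloud_monthly: float):
    """Months until buying hardware becomes cheaper than paying for the cloud.

    Returns None when the cloud is cheaper every month (no break-even point).
    """
    monthly_saving = cloud_monthly - local_monthly
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront / monthly_saving)


if __name__ == "__main__":
    # Hypothetical workload: 2 billion tokens per month.
    cloud = cloud_monthly_cost(2_000_000_000, 0.01)
    print(cloud)                                       # 20000.0
    print(break_even_months(300_000, 5_000, cloud))    # 20
```

Under these assumptions, the local setup pays for itself after 20 months. If monthly cloud spend stays below the running cost of local hardware, there is no break-even at all, which is the situation of many small or occasional users.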

3- What are your computational needs?

For businesses with consistent, high-volume computational needs and the infrastructure to support them, local LLMs can be the more reliable choice. Cloud LLMs, however, offer scalability that benefits businesses with fluctuating demand. The cloud model makes it easy to scale resources up to handle increased workloads, which is particularly useful for companies whose computational needs spike periodically (e.g., a cosmetics company during the Black Friday season).
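The effect of fluctuating demand can be made concrete: local hardware has to be sized for the peak, while (idealized) cloud capacity is billed for what is actually used. The sketch below is a hypothetical illustration with a made-up daily profile measured in GPUs needed per hour; `provisioning_report` is our own name, and the model ignores cloud price premiums and the time it takes to start new instances.

```python
from typing import Sequence


def provisioning_report(hourly_gpu_demand: Sequence[int]) -> dict:
    """Compare fixed (local) and elastic (cloud) capacity for one demand profile."""
    peak = max(hourly_gpu_demand)
    used_gpu_hours = sum(hourly_gpu_demand)
    # Local: enough GPUs for the peak must be owned and available every hour.
    provisioned_gpu_hours = peak * len(hourly_gpu_demand)
    return {
        "local_gpus_needed": peak,
        "local_utilization": used_gpu_hours / provisioned_gpu_hours,
        # Cloud (assumed perfectly elastic): billed only for GPU-hours used.
        "cloud_gpu_hours_billed": used_gpu_hours,
    }


if __name__ == "__main__":
    # Hypothetical day: 2 GPUs of steady load, 10 GPUs during a 4-hour sales spike.
    demand = [2] * 20 + [10] * 4
    report = provisioning_report(demand)
    print(report["local_gpus_needed"])             # 10
    print(round(report["local_utilization"], 2))   # 0.33
    print(report["cloud_gpu_hours_billed"])        # 80
```

In this made-up profile, local GPUs sized for the spike sit idle two thirds of the time, while a steady profile (e.g., `[8] * 24`) would give 100% utilization, which is why consistent high-volume workloads favor local deployment.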

4- What are your risk management capabilities?

While local LLMs offer more direct control over data security and may be preferred by organizations handling sensitive information (such as financial or healthcare data), they also require robust internal security protocols. Cloud LLMs, while potentially posing higher risks due to data transmission over the internet, are managed by providers who typically invest heavily in security measures.
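The four questions can be condensed into a rough rule of thumb. The sketch below is one hypothetical encoding; the `Profile` fields and the decision order are our assumptions, not a method from the sources cited here. It treats expertise and budget as prerequisites for local deployment, after which a steady high-volume workload or strict data-control requirements tip the balance toward it.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    has_ml_team: bool          # 1- in-house expertise?
    can_fund_hardware: bool    # 2- budget for upfront GPUs and servers?
    steady_high_volume: bool   # 3- consistent, high-volume workload?
    strict_data_control: bool  # 4- sensitive data that must stay in-house?


def recommend(p: Profile) -> str:
    """Hypothetical rule of thumb: return "local" or "cloud"."""
    # Expertise and budget are treated as prerequisites for running models in-house.
    if not (p.has_ml_team and p.can_fund_hardware):
        return "cloud"
    # With both in place, local pays off for steady load or strict data control.
    if p.steady_high_volume or p.strict_data_control:
        return "local"
    return "cloud"


if __name__ == "__main__":
    hospital = Profile(has_ml_team=True, can_fund_hardware=True,
                       steady_high_volume=False, strict_data_control=True)
    print(recommend(hospital))  # local
```

A real decision would weigh these factors rather than treat them as yes/no switches, but the ordering mirrors the article's point that expertise and budget come first.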

3 cloud LLM case studies

Manz & deepset Cloud

Manz, an Austrian legal publisher, used deepset Cloud to improve legal research with semantic search.[4] Its extensive legal database called for a more efficient way to find relevant documents, so Manz implemented a semantic recommendation system that draws on deepset’s NLP expertise and German-language models. According to the case study, this significantly improved Manz’s research workflows.

Cognizant & Google Cloud

Cognizant and Google Cloud are collaborating to apply generative AI, including LLMs, in the cloud to tackle healthcare challenges.[5] They aim to streamline administrative processes in healthcare, such as appeals and patient engagement, using Google Cloud’s Vertex AI platform and Cognizant’s industry expertise. The partnership highlights the potential of cloud-based LLMs to optimize healthcare operations and improve business efficiency.

Allied Banking Corporation & Finastra

Allied Banking Corporation, based in Hong Kong, has migrated its core banking operations to the cloud and upgraded to Finastra’s next-generation Essence solution.[6] It has also implemented Finastra’s Retail Analytics for enhanced reporting. The move reflects a strategic shift toward modern, cost-effective technology intended to enable future growth and efficiency gains.


1. “In search of cloud value: Can generative AI transform cloud ROI?” McKinsey. November 15, 2023. Retrieved January 16, 2024.

2. Strubell, E., Ganesh, A., & McCallum, A. (2019). “Energy and policy considerations for deep learning in NLP.” arXiv preprint arXiv:1906.02243. Retrieved January 16, 2024.

3. “API or In-house LLM?” AIM Research. Retrieved January 16, 2024.

4. “Manz Case Study.” deepset. Retrieved January 16, 2024.

5. “Cognizant expands generative AI partnership with Google Cloud, announces development of healthcare large language model solutions.” PR Newswire. August 2, 2023. Retrieved January 16, 2024.

6. “Allied Banking Corporation migrates core banking operations to the cloud with Finastra.” PR Newswire. January 17, 2024. Retrieved January 17, 2024.
