Introduction

Chatbots have become an integral part of modern applications, providing users with interactive and engaging experiences. In this guide, we’ll create a chatbot using LangChain, a powerful framework that simplifies the process of working with large language models. Our chatbot will have the following key features:

- Conversation memory to maintain context

- Customizable system prompts

- Ability to view and clear chat history

- Response time measurement

By the end of this article, you’ll have a fully functional chatbot that you can further customize and integrate into your own projects. Whether you’re new to LangChain or looking to expand your AI application development skills, this guide will provide you with a solid foundation for creating intelligent, context-aware chatbots.

Overview

- Grasp the fundamental concepts and features of LangChain, including chaining, memory, prompts, agents, and integration.

- Install and configure Python 3.7+ and necessary libraries to build and run a LangChain-based chatbot.

- Modify system prompts, memory settings, and temperature parameters to tailor the chatbot’s behavior and capabilities.

- Integrate error handling, logging, user input validation, and conversation state management for robust and scalable chatbot applications.

Prerequisites

Before diving into this article, you should have:

- Python Knowledge: Intermediate understanding of Python programming.

- API Basics: Familiarity with API concepts and usage.

- Environment Setup: Ability to set up a Python environment and install packages.

- OpenAI Account: An account with OpenAI to obtain an API key.

Technical Requirements

To follow along with this tutorial, you’ll need:

- Python 3.7+: Our code is compatible with Python 3.7 or later versions.

- pip: The Python package installer, to install required libraries.

- OpenAI API Key: You’ll need to sign up for an OpenAI account and obtain an API key.

- Text Editor or IDE: Any text editor or integrated development environment of your choice.

What is LangChain?

LangChain is an open-source framework designed to simplify the development of applications using large language models (LLMs). It provides a set of tools and abstractions that make it easier to build complex, context-aware applications powered by AI. Some key features of LangChain include:

- Chaining: Easily combine multiple components to create complex workflows.

- Memory: Implement various types of memory to maintain context in conversations.

- Prompts: Manage and optimize prompts for different use cases.

- Agents: Create AI agents that can use tools and make decisions.

- Integration: Connect with external data sources and APIs.

Now that we’ve covered what LangChain is, how we’ll use it, and the prerequisites for this tutorial, let’s move on to setting up our development environment.

Setting Up the Environment

Before we dive into the code, let’s set up our development environment. We’ll need to install several dependencies to get our chatbot up and running.

First, make sure you have Python 3.7 or later installed on your system. Then, create a new directory for your project and set up a virtual environment:

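Terminal:

mkdir langchain-chatbot
cd langchain-chatbot
python -m venv venv
source venv/bin/activate

On Windows, activate the virtual environment with venv\Scripts\activate instead.

Now, install the required dependencies:

pip install langchain langchain-community openai python-dotenv colorama

Recent LangChain releases ship integrations such as ChatOpenAI in the separate langchain-community package. Installing it keeps the langchain.chat_models import used below working; older releases include it in langchain itself.

Next, create a .env file in your project directory to store your OpenAI API key:

OPENAI_API_KEY=your_api_key_here

Replace your_api_key_here with your actual OpenAI API key. Calling load_dotenv() from the python-dotenv package copies this value into the process environment, where ChatOpenAI looks for OPENAI_API_KEY.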
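ChatOpenAI reads OPENAI_API_KEY from the environment, so a missing key only surfaces as an error on the first API call. One option is to fail fast at startup instead. The helper below is a minimal sketch using only the standard library. The name require_api_key and the placeholder check are our own assumptions, not part of the original script.

```python
import os
import sys


def require_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, or exit with a clear message.

    Hypothetical helper: the tutorial's script does not include it.
    """
    key = os.environ.get(var_name, "").strip()
    # Treat the .env placeholder value the same as a missing key.
    if not key or key == "your_api_key_here":
        sys.exit(f"{var_name} is not set. Add it to your .env file or environment.")
    return key
```

You would call require_api_key() once, after load_dotenv() and before creating the model.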

Understanding the Code Structure

Our chatbot implementation consists of several key components:

- Import statements for required libraries and modules

- Utility functions for formatting messages and retrieving chat history

- The main run_chatgpt_chatbot function that sets up and runs the chatbot

- A conditional block to run the chatbot when the script is executed directly

Let’s break down each of these components in detail.

Implementing the Chatbot

Let’s now walk through the steps to implement the chatbot.

Step 1: Importing Dependencies

First, let’s import the necessary modules and libraries:

import time
from typing import List, Tuple
import sys
from colorama import Fore, Style, init
from dotenv import load_dotenv
from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain.memory import ConversationBufferWindowMemory
from langchain.schema import SystemMessage, HumanMessage, AIMessage
from langchain.schema.runnable import RunnablePassthrough, RunnableLambda
from operator import itemgetter

These imports provide us with the tools we need to create our chatbot:

- time: For measuring response time and pacing the typing effect

- typing: For type hinting

- sys: For writing characters directly to standard output

- colorama: For optionally adding color to console output (the script below does not use it yet)

- dotenv: For loading the OpenAI API key from the .env file

- langchain: Various modules for building our chatbot

- operator: For the itemgetter function

Step 2: Defining Utility Functions

Next, let’s define some utility functions that will help us manage our chatbot:

def format_message(role: str, content: str) -> str:
    # Prefix a message with its capitalized role, e.g. "Human: Hi"
    return f"{role.capitalize()}: {content}"

def get_chat_history(memory) -> List[Tuple[str, str]]:
    # Return (role, content) pairs for every message stored in memory
    return [(msg.type, msg.content) for msg in memory.chat_memory.messages]

def print_typing_effect(text: str, delay: float = 0.03):
    # Print one character at a time to simulate typing
    for char in text:
        sys.stdout.write(char)
        sys.stdout.flush()
        time.sleep(delay)
    print()

- format_message: Formats a message with its role and content. LangChain stores the roles as "human" and "ai", so they print as "Human" and "Ai".

- get_chat_history: Retrieves the chat history from the memory object as (role, content) pairs

- print_typing_effect: Creates a typing effect when printing text to the console
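To see what the first two helpers produce without calling the API, the sketch below passes get_chat_history a stand-in object. The stand-in exposes only the attributes the function reads (memory.chat_memory.messages, each message having .type and .content). The fake_memory object is hypothetical; in the real chatbot this role is played by ConversationBufferWindowMemory.

```python
from types import SimpleNamespace
from typing import List, Tuple


def format_message(role: str, content: str) -> str:
    return f"{role.capitalize()}: {content}"


def get_chat_history(memory) -> List[Tuple[str, str]]:
    return [(msg.type, msg.content) for msg in memory.chat_memory.messages]


# Hypothetical stand-in: mimics only the attributes get_chat_history reads.
# LangChain's HumanMessage and AIMessage report .type as "human" and "ai".
fake_memory = SimpleNamespace(
    chat_memory=SimpleNamespace(
        messages=[
            SimpleNamespace(type="human", content="What is LangChain?"),
            SimpleNamespace(type="ai", content="A framework for building LLM apps."),
        ]
    )
)

history = get_chat_history(fake_memory)
for role, content in history:
    print(format_message(role, content))
# Human: What is LangChain?
# Ai: A framework for building LLM apps.
```

Because str.capitalize() lowercases everything after the first character, "ai" is printed as "Ai". If you prefer "AI", map role names explicitly in format_message.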

Step 3: Defining the Main Chatbot Function

The heart of our chatbot is the run_chatgpt_chatbot function. Let’s break it down into smaller sections:

def run_chatgpt_chatbot(system_prompt="", history_window=30, temperature=0.3):
    # Initialize the ChatOpenAI model
    model = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=temperature)

    # Set the system prompt, falling back to a default
    if system_prompt:
        SYS_PROMPT = system_prompt
    else:
        SYS_PROMPT = "Act as a helpful AI Assistant"

    # Create the chat prompt template: system prompt, past messages, new input
    prompt = ChatPromptTemplate.from_messages(
        [
            ('system', SYS_PROMPT),
            MessagesPlaceholder(variable_name="history"),
            ('human', '{input}')
        ]
    )

The snippet above shows only the beginning of the function; the complete version appears in the full code listing further down. Altogether, the function does the following:

- Initializes the ChatOpenAI model with the specified temperature.

- Sets up the system prompt and chat prompt template.

- Creates a conversation memory with the specified history window.

- Sets up the conversation chain using LangChain’s RunnablePassthrough and RunnableLambda.

- Enters a loop to handle user input and generate responses.

- Processes special commands like ‘STOP’, ‘HISTORY’, and ‘CLEAR’.

- Measures response time and displays it to the user.

- Saves the conversation context in memory.
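The conversation chain joins three stages with the | operator:

- RunnablePassthrough.assign keeps the input dict and adds a history key, computed by memory.load_memory_variables piped into itemgetter('history').
- The prompt template fills in the messages.
- The model generates the reply.

The sketch below imitates that data flow in plain Python so you can see what each stage receives. Step, assign, fake_prompt and fake_model are hypothetical stand-ins, not LangChain's actual classes.

```python
from operator import itemgetter
from typing import Any, Callable, Dict, List


class Step:
    """Tiny stand-in for a LangChain runnable: wraps a function, supports `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Compose left to right: self's output becomes other's input.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


def assign(**fields: Callable[[Dict[str, Any]], Any]) -> Step:
    # Like RunnablePassthrough.assign: keep the input keys, add computed ones.
    return Step(lambda d: {**d, **{k: f(d) for k, f in fields.items()}})


stored: List[str] = ["human: hi", "ai: hello!"]


def load_memory_variables(_inputs: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for memory.load_memory_variables: returns {"history": [...]}.
    return {"history": list(stored)}


def fake_prompt(d: Dict[str, Any]) -> List[str]:
    # Stand-in for the prompt: system message, then history, then new input.
    return ["system: Act as a helpful AI Assistant", *d["history"], f"human: {d['input']}"]


def fake_model(messages: List[str]) -> str:
    return f"(model saw {len(messages)} messages)"


chain = (
    assign(history=lambda d: itemgetter("history")(load_memory_variables(d)))
    | Step(fake_prompt)
    | Step(fake_model)
)

print(chain.invoke({"input": "How are you?"}))  # (model saw 4 messages)
```

The key point is that invoke receives only {'input': ...}. The history is injected inside the chain, which is why the chat loop only needs to call memory.save_context after each reply.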

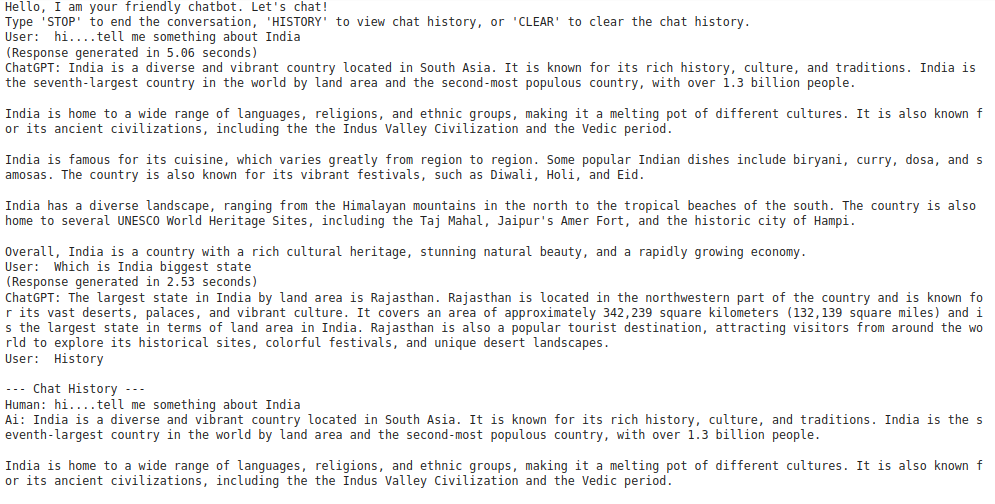

Step 4: Running the Chatbot

Finally, we add a conditional block to run the chatbot when the script is executed directly:

if __name__ == "__main__":
    run_chatgpt_chatbot()

This allows us to run the chatbot by simply executing the Python script.

Advanced Features

Our chatbot implementation includes several advanced features that enhance its functionality and user experience.
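The chat loop recognizes three commands: STOP, HISTORY and CLEAR. Before comparing, it strips surrounding whitespace from the input and upper-cases it, so "history", " History " and "HISTORY" all behave the same. If you want to test that logic without calling the API, one option is to move it into a pure function. classify_input below is a hypothetical refactoring, not part of the original script.

```python
from typing import Optional

COMMANDS = {"STOP", "HISTORY", "CLEAR"}


def classify_input(user_input: str) -> Optional[str]:
    """Return the command name if the input is a chatbot command, else None.

    Mirrors the loop's checks: strip whitespace, then upper-case.
    """
    normalized = user_input.strip().upper()
    return normalized if normalized in COMMANDS else None


for text in ["  history ", "Clear", "stop", "Tell me a joke", ""]:
    print(repr(text), "->", classify_input(text))
```

An empty line is not a command, so the original loop would send it to the model as a normal message. The input-validation tip under Best Practices applies here.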

Chat History

Users can view the chat history by typing ‘HISTORY’. This feature leverages the ConversationBufferWindowMemory to store and retrieve past messages:

elif user_input.strip().upper() == 'HISTORY':
    chat_history = get_chat_history(memory)
    print("\n--- Chat History ---")
    for role, content in chat_history:
        print(format_message(role, content))
    print("--- End of History ---\n")
    continue

Clearing Conversation Memory

Users can clear the conversation memory by typing ‘CLEAR’. This resets the context and allows for a fresh start:

elif user_input.strip().upper() == 'CLEAR':
    memory.clear()
    print_typing_effect('ChatGPT: Chat history has been cleared.')
    continue

Response Time Measurement

The chatbot measures and displays the response time for each interaction, giving users an idea of how long it takes to generate a reply:

start_time = time.time()
reply = conversation_chain.invoke(user_inp)
end_time = time.time()
response_time = end_time - start_time
print(f"(Response generated in {response_time:.2f} seconds)")

Customization Options

Our chatbot implementation offers several customization options:

- System Prompt: You can provide a custom system prompt to set the chatbot’s behavior and personality.

- History Window: Adjust the history_window parameter to control how many past exchanges (a user message plus the AI reply) the chatbot includes in the context it sends to the model.

- Temperature: Modify the temperature parameter to control the randomness of the chatbot’s responses.

To customize these options, you can modify the function call in the if __name__ == "__main__": block:

if __name__ == "__main__":
    run_chatgpt_chatbot(
        system_prompt="You are a friendly and knowledgeable AI assistant specializing in technology.",
        history_window=50,
        temperature=0.7
    )
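ConversationBufferWindowMemory(k=history_window) counts exchanges, not individual messages. When it builds the history passed to the prompt, it keeps the last k human/AI pairs, which is up to 2 * k messages. The underlying memory.chat_memory.messages list still holds every message. That is why the HISTORY command, which reads that list, can show more than the model actually sees. The window function below is our own plain-Python sketch of that rule, not LangChain code.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, content)


def window(messages: List[Message], k: int) -> List[Message]:
    """Return the last k human/AI exchanges (at most 2 * k messages)."""
    return messages[-k * 2:] if k > 0 else []


# Build a transcript of four exchanges (eight messages).
transcript: List[Message] = []
for i in range(1, 5):
    transcript.append(("human", f"question {i}"))
    transcript.append(("ai", f"answer {i}"))

recent = window(transcript, k=2)
print(len(transcript), len(recent))  # 8 4
print(recent[0])                     # ('human', 'question 3')
```

With the settings above (history_window=50), the model sees at most the last 100 messages. Larger windows preserve more context but send more tokens with every request.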

Complete Code

Here is the complete script with all the pieces put together:

import sys
import time
from operator import itemgetter
from typing import List, Tuple

from colorama import Fore, Style, init
from dotenv import load_dotenv
from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain.memory import ConversationBufferWindowMemory
from langchain.schema import SystemMessage, HumanMessage, AIMessage
from langchain.schema.runnable import RunnablePassthrough, RunnableLambda

# Load OPENAI_API_KEY from the .env file into the environment
load_dotenv()

def format_message(role: str, content: str) -> str:
    return f"{role.capitalize()}: {content}"

def get_chat_history(memory) -> List[Tuple[str, str]]:
    return [(msg.type, msg.content) for msg in memory.chat_memory.messages]

def print_typing_effect(text: str, delay: float = 0.03):
    # Print one character at a time to simulate typing
    for char in text:
        sys.stdout.write(char)
        sys.stdout.flush()
        time.sleep(delay)
    print()

def run_chatgpt_chatbot(system_prompt="", history_window=30, temperature=0.3):
    model = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=temperature)

    if system_prompt:
        SYS_PROMPT = system_prompt
    else:
        SYS_PROMPT = "Act as a helpful AI Assistant"

    prompt = ChatPromptTemplate.from_messages(
        [
            ('system', SYS_PROMPT),
            MessagesPlaceholder(variable_name="history"),
            ('human', '{input}')
        ]
    )

    # Keep the last `history_window` exchanges, returned as message objects
    memory = ConversationBufferWindowMemory(k=history_window, return_messages=True)

    # Add stored history to the input, fill the prompt, then call the model
    conversation_chain = (
        RunnablePassthrough.assign(
            history=RunnableLambda(memory.load_memory_variables) | itemgetter('history')
        )
        | prompt
        | model
    )

    print_typing_effect("Hello, I am your friendly chatbot. Let's chat!")
    print("Type 'STOP' to end the conversation, 'HISTORY' to view chat history, or 'CLEAR' to clear the chat history.")

    while True:
        user_input = input('User: ')

        if user_input.strip().upper() == 'STOP':
            print_typing_effect('ChatGPT: Goodbye! It was a pleasure chatting with you.')
            break
        elif user_input.strip().upper() == 'HISTORY':
            chat_history = get_chat_history(memory)
            print("\n--- Chat History ---")
            for role, content in chat_history:
                print(format_message(role, content))
            print("--- End of History ---\n")
            continue
        elif user_input.strip().upper() == 'CLEAR':
            memory.clear()
            print_typing_effect('ChatGPT: Chat history has been cleared.')
            continue

        user_inp = {'input': user_input}

        # Time the round trip to the model
        start_time = time.time()
        reply = conversation_chain.invoke(user_inp)
        end_time = time.time()
        response_time = end_time - start_time
        print(f"(Response generated in {response_time:.2f} seconds)")

        print_typing_effect(f'ChatGPT: {reply.content}')
        # Store this exchange so the next turn can see it
        memory.save_context(user_inp, {'output': reply.content})

if __name__ == "__main__":
    run_chatgpt_chatbot()
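The script times each reply with time.time(). That function reads the wall clock, which can jump if the system clock is adjusted. For measuring elapsed intervals, Python's time.perf_counter() is the clock intended for short durations. Below is a minimal sketch of a reusable timer, assuming you want to wrap the conversation_chain.invoke(...) call with it. The timed helper is our own, not part of the original script.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


@contextmanager
def timed(result: Dict[str, float]) -> Iterator[None]:
    """Store the elapsed seconds of the with-block in result["seconds"]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Runs even if the block raises, so failed calls are timed too.
        result["seconds"] = time.perf_counter() - start


elapsed: Dict[str, float] = {}
with timed(elapsed):
    time.sleep(0.1)  # stand-in for conversation_chain.invoke(user_inp)
print(f"(Response generated in {elapsed['seconds']:.2f} seconds)")
```

Inside the chat loop, this would read `with timed(elapsed): reply = conversation_chain.invoke(user_inp)`, followed by the same print statement.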

Best Practices and Tips

When working with this chatbot implementation, consider the following best practices and tips:

- API Key Security: Always store your OpenAI API key in an environment variable or a secure configuration file, and keep the .env file out of version control (for example, by listing it in .gitignore). Never hardcode the key in your script.

- Error Handling: Add try/except blocks to handle potential errors, such as network issues or API rate limits.

- Logging: Implement logging to track conversations and errors for debugging and analysis.

- User Input Validation: Add more robust input validation to handle edge cases such as empty or extremely long messages.

- Conversation State: Consider implementing a way to save and load conversation states, allowing users to resume chats later.

- Rate Limiting: Implement rate limiting to prevent excessive API calls and manage costs.

- Multi-turn Conversations: Experiment with different memory types in LangChain to handle more complex, multi-turn conversations.

- Model Selection: Try different OpenAI models (e.g., GPT-4) to see how they affect the chatbot’s performance and capabilities.
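For the error-handling and rate-limit tips, a common pattern is to retry a failed call a few times, waiting exponentially longer each time. The sketch below is generic and library-agnostic. The name call_with_retries, its parameters, and catching every Exception are our own assumptions. In practice you would narrow the except clause to the transient errors raised by your OpenAI/LangChain versions (see their documentation), and pass something like `lambda: conversation_chain.invoke(user_inp)`. ChatOpenAI also accepts a max_retries argument, which may be enough on its own.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    retries: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(); on failure wait base_delay * 2**attempt and try again.

    Re-raises the last exception once all retries are used up.
    """
    for attempt in range(retries + 1):
        try:
            return fn()
        except Exception as exc:  # assumption: narrow this in real code
            if attempt == retries:
                raise
            delay = base_delay * 2 ** attempt
            print(f"Request failed ({exc!r}); retrying in {delay:.1f}s...")
            sleep(delay)
    raise RuntimeError("unreachable")  # keeps type checkers happy


# Usage: a function that fails twice, then succeeds.
calls = {"n": 0}


def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary network problem")
    return "ok"


waits: List[float] = []
print(call_with_retries(flaky, retries=3, base_delay=0.5, sleep=waits.append))  # ok
print(waits)  # [0.5, 1.0]
```

Injecting sleep as a parameter lets the example record the waits instead of actually pausing, which also makes the helper easy to test.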

Conclusion

In this guide, we’ve built a chatbot using LangChain and OpenAI’s GPT-3.5-turbo. This chatbot serves as a solid foundation for more complex applications. You can extend its functionality by adding features like:

- Integration with external APIs for real-time data

- Natural language processing for intent recognition

- Multimodal interactions (e.g., handling images or audio)

- User authentication and personalization

By leveraging the power of LangChain and large language models, you can create sophisticated conversational AI applications that provide value to users across various domains. Remember to always consider ethical implications when deploying AI-powered chatbots, and ensure that your implementation adheres to OpenAI’s usage guidelines and your local regulations regarding AI and data privacy.

With this foundation, you’re well-equipped to explore the exciting world of conversational AI and create innovative applications that push the boundaries of human-computer interaction.

Frequently Asked Questions

Q1. What background do I need before building this chatbot?

A. You should have an intermediate understanding of Python programming, familiarity with API concepts, the ability to set up a Python environment and install packages, and an OpenAI account to obtain an API key.

Q2. What are the technical requirements for this tutorial?

A. You need Python 3.7 or later, pip (Python package installer), an OpenAI API key, and a text editor or integrated development environment (IDE) of your choice.

Q3. What are the key components of the chatbot implementation?

A. The key components are import statements for required libraries, utility functions for formatting messages and retrieving chat history, the main function (run_chatgpt_chatbot) that sets up and runs the chatbot, and a conditional block to execute the chatbot script directly.