From healthcare to finance and from legal to customer service, LLMs have immense potential to transform operations, enhance decision-making, and personalize user experiences. Yet the journey to effectively leverage these advanced AI tools is fraught with challenges, especially when it comes to fine-tuning LLMs and aligning them with high-quality data. In this article, we aim to clarify and structure this complex process, drawing on our experience with clients and on existing examples.

The necessity of the LLM alignment and fine-tuning process

Before diving into the process, we must establish that aligning Large Language Models (LLMs) with business objectives extends beyond merely incorporating domain-specific knowledge. The pitfalls of neglecting a bespoke approach to LLM integration are numerous and can lead to significant repercussions for businesses.

Notable instances include a Chevrolet dealership whose rogue chatbot went viral, probably because some critical fine-tuning dimensions were missing. Similarly, the tech community has witnessed several high-profile AI missteps, such as chatbots producing inaccurate or inappropriate responses because they lacked proper alignment. These examples serve as cautionary tales: they highlight the need for meticulous customization and fine-tuning of LLMs to avoid public relations issues, customer dissatisfaction, and potential financial losses.

An example of what happens when your LLM does not have the right safeguards in place

As businesses strive to integrate LLMs into their operations, understanding the multifaceted reasons for this alignment can provide insights into the strategic deployment of these technologies. Here are key reasons why businesses seek to align LLMs with their specific needs:

1. Enhanced Content Moderation

Businesses operate in diverse regulatory and cultural environments, making content moderation a critical component of their engagement strategies. LLMs can inadvertently generate or propagate inappropriate content that does not align with a company’s values or the legal standards of the regions in which they operate. By aligning LLMs with specific content moderation policies, businesses can ensure that generated content adheres to community guidelines, regulatory requirements, and cultural sensitivities, mitigating non-compliance risks, brand damage, and user safety concerns.

2. Defense Against Adversarial Attacks

Adversarial attacks pose significant risks to the integrity and reliability of LLM outputs. Malicious entities may attempt to manipulate LLM responses to spread misinformation, exploit vulnerabilities, or bypass security measures. Aligning LLMs to anticipate and resist these attacks involves integrating robust security measures, such as adversarial training and continuous monitoring for potential vulnerabilities. This proactive stance helps maintain the trustworthiness of LLM applications in critical areas like customer service, content generation, and decision support systems.

3. Reflecting Human Preference

Businesses aim to provide personalized experiences that resonate with their audience’s preferences and expectations. When properly aligned, LLMs can tailor interactions based on user behavior, linguistic preferences, and engagement patterns. This personalization extends to understanding cultural nuances, slang, and idioms specific to different user demographics, enhancing user engagement and satisfaction. Personalizing LLM outputs to match human preferences also involves ethical considerations, ensuring that AI interactions remain respectful, inclusive, and free from biases.

4. Maintaining Brand Tone and Identity

A consistent brand tone and identity are crucial for businesses to differentiate themselves in a crowded marketplace. LLMs can be aligned to generate content that reflects a company’s brand voice, whether professional, friendly, informative, or whimsical. This consistency in communication strengthens brand recognition and fosters a cohesive user experience across all digital touchpoints. Moreover, aligning LLMs with a brand’s tone and identity ensures that all generated content, from marketing materials to customer interactions, reinforces the brand’s values and messaging strategy.

Methods and examples of large language model alignment

Since the boom in open-source and commercial LLMs, practitioners have discovered and shared various methods for aligning and fine-tuning LLMs to a business's objectives and needs. Central to these methods is the use of high-quality labeled data. In this article, we explore several strategies that can be applied to align or fine-tune language models to meet specific objectives.

Incorporating domain-specific knowledge

Let’s start with the most common objective when fine-tuning a large language model: adapting it to a particular domain and to task-specific use cases. Here are some common and relatively straightforward methods for teaching an LLM domain-specific knowledge:

- Supervised fine-tuning is the traditional method for fine-tuning LLMs. In its classic, full form, the LLM is further trained on a labeled dataset for the target task and all of its parameters are updated. This can achieve good performance but is computationally expensive.

For example, Meta’s Code Llama was created specifically for coding by further training the Llama 2 model on code-specific datasets for an extended period. This specialized version can generate code, and natural language about code, from both code and natural language prompts across popular programming languages.

- Parameter-efficient fine-tuning (PEFT) is a set of techniques that adapt large language models at a lower computational cost. Instead of fully fine-tuning the language model, these techniques train only a small number of parameters, either a subset of the existing ones or a small set of newly added ones (as in LoRA adapters), while the rest of the model stays frozen.

To demonstrate, we fine-tuned a large language model for the finance domain using 800 question-answer pairs on finance topics. The questions were written to elicit direct responses, so that the model's output would be valuable in real-world financial scenarios.

- Retrieval-augmented generation (RAG) differs from fine-tuning in that it remains external to the language model and leaves its weights unchanged. RAG lets an LLM access and process information from external knowledge bases at query time, providing domain-specific details that may not be available in the LLM’s pre-trained parameters. For more information on RAG, we’ve written a complete guide, including methods to evaluate your RAG stack.
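The source does not say which PEFT technique was used in the finance example. LoRA (low-rank adaptation) is one widely used option: it freezes a pretrained weight matrix W and learns a low-rank update scaled by alpha / rank, so only two small factor matrices are trained. The pure-Python sketch below illustrates the idea on a toy layer and compares trainable parameter counts. The class and function names, the initialization, and the 4096-wide projection are illustrative assumptions, not details of the original setup or of any library's API.

```python
import random
from typing import List

Matrix = List[List[float]]


def matvec(M: Matrix, x: List[float]) -> List[float]:
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


class LoRALinear:
    """A frozen weight W plus a trainable low-rank update B @ A scaled by alpha / rank."""

    def __init__(self, W: Matrix, rank: int, alpha: float = 1.0, seed: int = 0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W  # pretrained weight (d_out x d_in), never updated
        self.scale = alpha / rank
        # Trainable factors: A is small and random, B starts at zero,
        # so the adapted layer initially behaves exactly like the original one.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x: List[float]) -> List[float]:
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]


def full_trainable_params(d_out: int, d_in: int) -> int:
    """Full fine-tuning updates every entry of W."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """A has rank * d_in entries and B has d_out * rank entries."""
    return rank * (d_in + d_out)


# Usage: a toy 2x2 layer, plus parameter counts for one 4096x4096 projection
layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1)
print(layer.forward([1.0, 1.0]))             # [3.0, 7.0]: same as W @ x before training
print(full_trainable_params(4096, 4096))     # 16777216
print(lora_trainable_params(4096, 4096, 8))  # 65536
```

Because B starts at zero, training begins from the pretrained model's behavior. In this illustrative 4096×4096 case, rank 8 means 65,536 trainable parameters instead of 16,777,216, roughly 0.4% of the original matrix.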


Applying human preference

As noted above, aligning LLM outputs with human preferences is also an ethical matter: interactions should remain respectful, inclusive, and free from biases. To align LLMs more closely with human preferences and values, researchers and developers use various methods, including curating labeled data and Reinforcement Learning from Human Feedback (RLHF).

Two papers popularized these techniques for aligning large language models with human preferences:

-

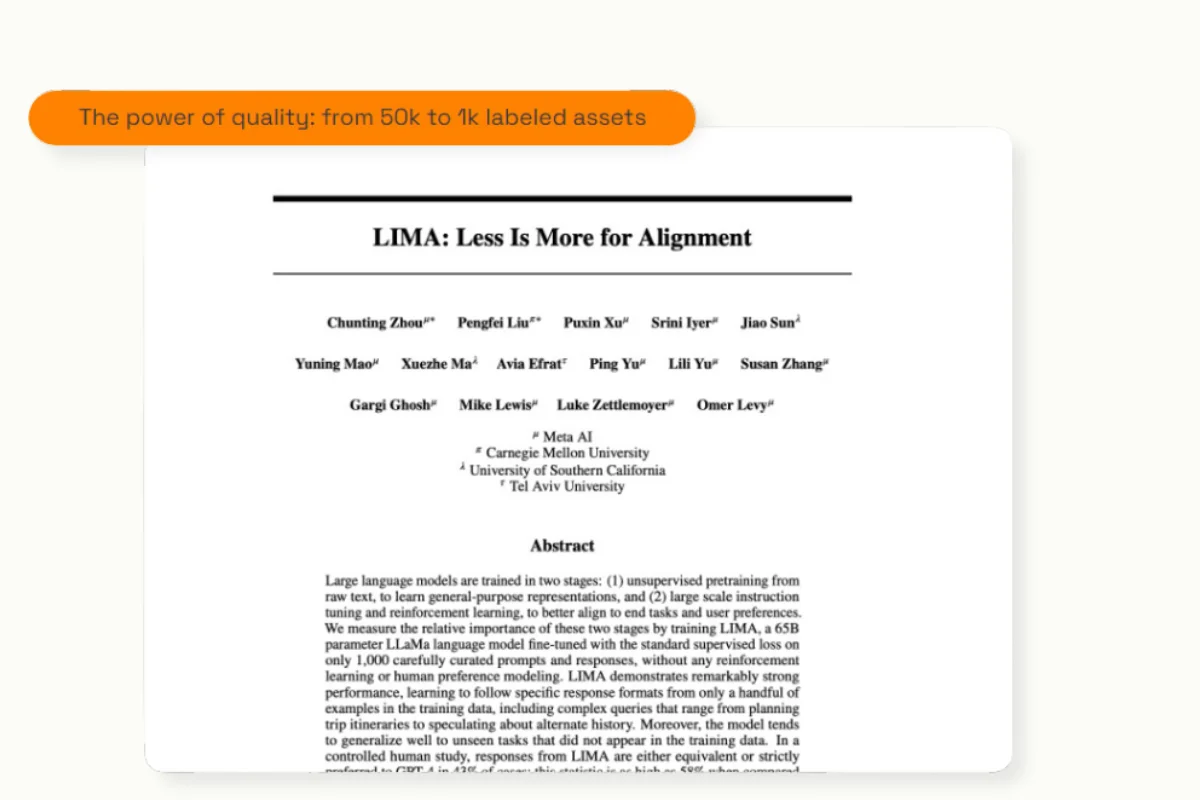

Dataset curation: Meta’s LIMA (Less is More for Alignment) paper shares a straightforward technique that trains large language models using curated datasets of human preferences. In their paper, the researchers fine-tuned a 65B parameter LLaMa language model using only 1,000 carefully curated prompts and responses without any reinforcement learning or human preference modeling.

This fine-tuning method aims to align the responses generated by LLMs with the preferences of humans, making them more empathetic, informative, and engaging. The researchers conclude, “Taken together, these results strongly suggest that almost all knowledge in large language models is learned during pretraining, and only limited instruction tuning data is necessary to teach models to produce high-quality output.”

- RLHF: OpenAI’s paper provides a blueprint for using RLHF to align its popular models with human preferences. The gist is that human feedback guides the model toward more desirable outputs.

Human raters are presented with pairs of outputs for the same prompt or question and indicate which they prefer. These judgments are then used to train a separate model, known as the preference model (or reward model), which learns to predict which of two given outputs human raters are more likely to prefer. The preference model’s predictions then serve as the reward signal in a reinforcement learning setup: the original GPT model is fine-tuned with this signal, adjusting its parameters to generate outputs that receive higher rewards, that is, outputs humans are more likely to prefer.
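The preference-model step can be made concrete with the pairwise loss commonly used to train reward models (it appears, for example, in OpenAI's InstructGPT work): for each comparison, the loss is -log(sigmoid(r_chosen - r_rejected)), which pushes the score of the preferred output above the rejected one. This minimal sketch assumes the reward model's scalar scores have already been computed and uses plain numbers in their place; the function names are hypothetical.

```python
import math
from typing import Iterable, Tuple


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed in a numerically stable way."""
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # For negative margins, rewrite to avoid overflowing exp(-margin)
    return -margin + math.log1p(math.exp(margin))


def preference_probability(reward_a: float, reward_b: float) -> float:
    """Modeled probability that a rater prefers output A over output B: sigmoid(r_a - r_b)."""
    return math.exp(-pairwise_preference_loss(reward_a, reward_b))


def mean_preference_loss(pairs: Iterable[Tuple[float, float]]) -> float:
    """Average loss over (chosen_reward, rejected_reward) comparisons."""
    losses = [pairwise_preference_loss(c, r) for c, r in pairs]
    return sum(losses) / len(losses)


# Usage: equal scores give log(2); a larger margin for the chosen output lowers the loss
print(pairwise_preference_loss(0.0, 0.0))  # ~0.693 (log 2)
print(mean_preference_loss([(2.0, 0.0), (0.5, 1.5)]))
```

Minimizing this loss over many human comparisons is what lets the reward model's scores stand in for human judgment during the reinforcement learning step.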

Adhering to brand tone and identity

When a company produces large amounts of content, a large language model can generate text that follows its branding and guidelines at scale. In this case, machine learning (ML) teams can combine the techniques described earlier to develop a model that adheres to the company’s branding and tone guidelines. For example, in a customer chatbot, a fine-tuned model can maintain the right brand tone while RAG supplies up-to-date information, keeping each answer relevant to the customer’s query.
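A minimal sketch of this chatbot pattern, assuming the brand tone comes from the fine-tuned model itself, so the prompt only needs to carry retrieved facts. Retrieval here is a toy bag-of-words cosine similarity standing in for a real embedding search, the documents are made up, and the call to the fine-tuned model is omitted; all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query (bag-of-words stand-in for embeddings)."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine_similarity(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Prompt for the fine-tuned model: retrieved, up-to-date facts plus the customer's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer the customer using only the facts below.\n"
        f"Facts:\n{context}\n\n"
        f"Customer: {query}\nAssistant:"
    )


# Usage with a made-up knowledge base
docs = [
    "Our return window is 30 days for all items.",
    "Store hours are 9am to 6pm.",
    "Gift cards never expire.",
]
print(build_prompt("How many days do I have to return an item?", docs, k=1))
```

Splitting the work this way means the knowledge base can be updated without retraining, while the tone stays anchored in the fine-tuned weights.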

Kili Technology is helping a large French retailer build a fine-tuned language model that generates large amounts of content, such as category and product descriptions. By creating a high-quality dataset curated to the company’s objectives and applying parameter-efficient fine-tuning techniques, we expect to obtain a large language model specialized in creating content for the retail brand.

Content moderation and output handling

Training LLMs to effectively moderate user inputs or model outputs involves strategies that ensure the generated content is appropriate, relevant, and aligned with specific guidelines or ethical standards. Labeled data plays a pivotal role at several stages of this process:

- Curating data for training and fine-tuning: Whether you are training language models or fine-tuning them, the datasets used must be carefully curated to avoid biased or harmful outputs. This involves removing or filtering out text that contains harmful or offensive language. However, it’s important to balance removing toxic content against maintaining the diversity and richness of the training data.

- Using a Natural Language Processing (NLP) model for filtering: An additional NLP model trained on toxic and harmful content can act as a safeguard against harmful and malicious inputs and outputs. For example, OpenAI uses text classification techniques to help developers using its API identify whether specific inputs and outputs violate its policies.

- RLHF: Using RLHF for content moderation is also a viable technique, as human evaluators can act as moderators, rating or ranking responses to continuously train a model to identify toxic prompts and content and respond appropriately.
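The filtering ideas in the list above can be sketched as a score-and-threshold gate applied in two places: to training examples before fine-tuning, and to user inputs and model outputs at inference time. The `toy_toxicity_score` function is a hypothetical keyword-based placeholder for a trained classifier (this sketch does not call OpenAI's or any other moderation service), and the 0.1 threshold and fallback message are assumptions.

```python
from typing import Callable, List

BLOCKLIST = {"idiot", "stupid"}  # hypothetical stand-in vocabulary


def toy_toxicity_score(text: str) -> float:
    """Hypothetical placeholder for a trained classifier: share of words on the blocklist."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    if not words:
        return 0.0
    return sum(w in BLOCKLIST for w in words) / len(words)


def filter_dataset(examples: List[str], score: Callable[[str], float], threshold: float = 0.1) -> List[str]:
    """Drop training examples the classifier flags before fine-tuning."""
    return [ex for ex in examples if score(ex) < threshold]


def moderated_reply(
    user_input: str,
    generate: Callable[[str], str],
    score: Callable[[str], float],
    threshold: float = 0.1,
    fallback: str = "Sorry, I can't help with that request.",
) -> str:
    """Gate both the user's input and the model's output through the classifier."""
    if score(user_input) >= threshold:
        return fallback
    reply = generate(user_input)
    return reply if score(reply) < threshold else fallback


# Usage with a stubbed model in place of a real LLM call
print(filter_dataset(["Thanks for your order!", "You are an idiot."], toy_toxicity_score))
print(moderated_reply("What are your opening hours?", lambda q: "We open at 9am.", toy_toxicity_score))
```

The threshold is where the balance mentioned above plays out: set it too low and useful, diverse examples are discarded; set it too high and harmful content slips through.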

Adversarial Defense

Continuous training and evaluation are often used to defend a language model against adversarial attacks. By proactively training and testing the model against malicious attempts, ML teams can help it avoid causing significant harm.

- Adversarial Training or Fine-tuning: A viable option is introducing adversarial examples during the training or fine-tuning phase to make language models more robust against attempts to manipulate their outputs.

One interesting study fine-tunes a model on a small adversarial dataset and uses it to generate malicious examples against a judge LLM. The method requires minimal human input and uses curated datasets for efficient, quasi-self-sustaining optimization.

- Red Teaming: Red teaming in the context of LLMs involves simulating adversarial attacks to test and improve a model’s defenses. By continuously challenging the model with sophisticated prompts designed to elicit problematic responses, developers can fine-tune the LLM’s detection and response mechanisms, enhancing its ability to handle real-world adversarial scenarios effectively.

One method that showcases this is an approach called Red-Instruct, which the paper introducing it describes in two phases. In the first phase, chain-of-utterances prompting is used to create a conversational dataset that combines harmful questions with conversations containing both safe and harmful responses. In the second phase, this dataset is used to align the LLM: a specific error measure, the negative log-likelihood, is minimized on helpful responses to increase learning from them, while gradient ascent on the errors of harmful responses decreases learning from them.
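The second Red-Instruct phase can be illustrated with a simplified objective: minimize the mean negative log-likelihood (NLL) of helpful responses while subtracting a weighted NLL term for harmful responses, so that minimizing the total performs gradient ascent on the harmful responses' NLL. This is a sketch under stated assumptions rather than the paper's exact recipe: per-token probabilities stand in for real model outputs, and the `harmful_weight` parameter and function names are hypothetical.

```python
import math
from typing import List, Sequence


def sequence_nll(token_probs: Sequence[float]) -> float:
    """Mean negative log-likelihood of one response, given the model's per-token probabilities."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


def batch_nll(responses: List[Sequence[float]]) -> float:
    """Average NLL over a batch of responses."""
    return sum(sequence_nll(r) for r in responses) / len(responses)


def safety_alignment_objective(
    helpful: List[Sequence[float]],
    harmful: List[Sequence[float]],
    harmful_weight: float = 1.0,  # hypothetical knob, not taken from the paper
) -> float:
    """Quantity to minimize.

    The first term is ordinary NLL minimization on helpful responses.
    Subtracting the harmful term means that minimizing the total performs
    gradient ascent on the harmful responses' NLL, making them less likely.
    """
    return batch_nll(helpful) - harmful_weight * batch_nll(harmful)


# Usage with made-up per-token probabilities
print(safety_alignment_objective([[0.9, 0.8, 0.95]], [[0.6, 0.7]]))
```

Because the harmful term has no lower bound, an objective like this can diverge if pushed too far, so a practical setup would need to limit it, for example through the weight, clipping, or early stopping.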

Testing and evaluating LLMs

As some of the previous examples show, testing and evaluating an LLM is critical to measuring its readiness for real-world use. A robust benchmark dataset is therefore also part of the alignment process, serving as a litmus test for the model’s performance, reliability, and effectiveness.

When creating a benchmark dataset, domain experts should be involved to guide data collection. They define the criteria for which aspects of an LLM should be evaluated, and they team up with human labelers or annotators to ensure consistency and quality in the labeling process.

For example, if the benchmark dataset is being created for a medical LLM, it must involve medical professionals like doctors and researchers. These experts can define what kind of medical information is relevant and accurate for the LLM to learn. They set criteria for evaluating the LLM’s understanding of medical terminology, its ability to provide accurate medical advice, and its adherence to ethical guidelines in healthcare.

In practice, the labelers tag and categorize the data in ways that are meaningful for the LLM’s training, under the experts’ guidance. In our medical example, labelers might be instructed to tag text by medical specialty or to distinguish factual information from patient opinions.
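The source does not say how labeling consistency is measured. One common option is Cohen's kappa, which compares how often two labelers agree with how often they would agree by chance given their label frequencies. The sketch below uses the medical example's fact-versus-opinion tags; the label lists are made up for illustration.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(labels_a: Sequence[Hashable], labels_b: Sequence[Hashable]) -> float:
    """Agreement between two labelers, corrected for agreement expected by chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both labelers pick the same category independently
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:  # both labelers used one identical category throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# Usage with made-up tags from two labelers on the same six passages
labeler_a = ["fact", "fact", "opinion", "fact", "opinion", "fact"]
labeler_b = ["fact", "opinion", "opinion", "fact", "opinion", "fact"]
print(round(cohens_kappa(labeler_a, labeler_b), 2))  # 0.67
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance. Here the labelers agree on 5 of 6 passages, but since chance agreement is 0.5, kappa is about 0.67; a low value is a cue for the domain experts to tighten the labeling guidelines.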

LLM alignment: Putting it to practice

As the previous section shows, most of these techniques rely on datasets to guide the LLM toward the desired output or objective. We therefore recommend a data-centric approach to the alignment or fine-tuning process.

A data-centric approach to aligning Large Language Models (LLMs) focuses on the quality, diversity, and relevance of the dataset rather than exclusively on improving the model’s architecture or algorithms.

In practice, this means prioritizing the collection and curation of high-quality, relevant data that aligns with your application’s objectives, and implementing feedback loops in which the model’s outputs are continuously evaluated and used for iteration and refinement. Sourcing diverse datasets helps ensure the data accurately reflects the nuances and complexity of the tasks the LLM is expected to perform.

Unlike building a language model from scratch, fine-tuning rarely requires massive datasets. Even so, optimizing the fine-tuning process is always worthwhile, as it leads to faster iteration and higher-quality production models.

Automate building fine-tuning datasets

On the Kili Technology platform, you can speed up building a high-quality fine-tuning dataset by having a relevant model, such as GPT-4, generate predictions on your domain-specific data. Confidence score filters identify the predictions that need review, which are then sent to human labelers who, guided by subject matter experts, review or relabel them to ensure labeling accuracy.
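The confidence-filter step can be sketched as follows, assuming each model prediction carries a confidence score between 0 and 1. The `Prediction` class, the `route_predictions` function, and the 0.8 threshold are hypothetical illustrations of the idea, not the Kili Technology platform's API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    asset_id: str
    output: str        # text produced by the pre-labeling model, e.g. a draft answer
    confidence: float  # model confidence in [0, 1]


def route_predictions(
    predictions: List[Prediction], threshold: float = 0.8
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into (accepted, needs_review) using a confidence filter."""
    accepted: List[Prediction] = []
    needs_review: List[Prediction] = []
    for p in predictions:
        if p.confidence >= threshold:
            accepted.append(p)
        else:
            needs_review.append(p)
    # Lowest confidence first, so reviewers see the least reliable predictions first
    needs_review.sort(key=lambda p: p.confidence)
    return accepted, needs_review


# Usage with made-up predictions
preds = [
    Prediction("q-1", "Draft answer A", 0.97),
    Prediction("q-2", "Draft answer B", 0.55),
    Prediction("q-3", "Draft answer C", 0.72),
]
accepted, needs_review = route_predictions(preds)
print([p.asset_id for p in accepted])      # ['q-1']
print([p.asset_id for p in needs_review])  # ['q-2', 'q-3']
```

The threshold trades labeling cost against risk: raising it sends more predictions to human labelers, lowering it accepts more model output unreviewed.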

Below is a workflow we proposed during our webinars on fine-tuning LLMs for chatbot applications:

Faster iteration through integrations

Iteration only works well with consistent evaluation and monitoring. We covered part of this in an earlier section, where we recommended benchmark datasets for measuring how accurately your LLM performs. As shared in one of our previous articles, Kili Technology can help subject matter experts and human labelers build these benchmark datasets.

By also using integrations, we can establish a more efficient monitoring workflow that balances speed and accuracy. This is illustrated through the workflow below, which uses a RAG system and human review to align an LLM.

This can significantly improve the speed and quality of a RAG system’s training and evaluation phases, making the overall system more effective and productive.

Using judge LLMs to automate monitoring

We can further speed up monitoring and evaluation by using another model to identify inaccurate responses from the LLM. This auxiliary model is called a judge LLM in the workflow depicted below. It aims to automatically identify and flag for review inaccurate or unreliable answers generated by the LLM we are fine-tuning. Once an answer has been flagged for review, human reviewers can use the Kili Technology platform to deliberate, label, and correct the answer and push it back to further fine-tune the LLM. It’s important to note that the workflow below applies not only to RAG stacks but also to fine-tuned LLMs.
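The judge-LLM loop can be sketched in three steps: score each answer, flag low scorers for human review, and turn the reviewers' corrections into new fine-tuning examples. Here the judge is any callable returning a score from 1 to 5; a real one would prompt a second LLM with a grading rubric and parse its reply. `stub_judge`, the 1-5 scale, the `min_score` cutoff, and the prompt/completion record format are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple

Sample = Dict[str, str]  # {"id": ..., "question": ..., "answer": ...}
Judge = Callable[[str, str], int]


def stub_judge(question: str, answer: str) -> int:
    """Placeholder for a judge LLM call: empty or evasive answers score 1, others 5."""
    if not answer.strip() or "i don't know" in answer.lower():
        return 1
    return 5


def flag_with_judge(samples: List[Sample], judge: Judge, min_score: int = 4) -> Tuple[List[Sample], List[Sample]]:
    """Split samples into (passed, flagged_for_review) based on the judge's score."""
    passed: List[Sample] = []
    flagged: List[Sample] = []
    for sample in samples:
        if judge(sample["question"], sample["answer"]) >= min_score:
            passed.append(sample)
        else:
            flagged.append(sample)
    return passed, flagged


def corrected_examples(flagged: List[Sample], corrections: Dict[str, str]) -> List[Dict[str, str]]:
    """Turn human corrections of flagged answers into fine-tuning records.

    Flagged samples that reviewers have not corrected yet are skipped.
    """
    return [
        {"prompt": s["question"], "completion": corrections[s["id"]]}
        for s in flagged
        if s["id"] in corrections
    ]


# Usage with made-up data
samples = [
    {"id": "1", "question": "What is an ETF?",
     "answer": "An exchange-traded fund is a basket of securities that trades on an exchange like a stock."},
    {"id": "2", "question": "What is a bond coupon?", "answer": "I don't know."},
]
passed, flagged = flag_with_judge(samples, stub_judge)
print([s["id"] for s in flagged])  # ['2']
print(corrected_examples(flagged, {"2": "A bond's coupon is the periodic interest payment it makes to holders."}))
```

Only the corrected records are pushed back for further fine-tuning, which keeps human effort focused on the answers the judge found unreliable.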

Conclusion

We shared a variety of techniques and examples on aligning and fine-tuning LLMs. Different objectives require different approaches. However, this complex endeavor can be done well through a data-centric approach focusing on the dataset’s quality and continuous iteration and refinement. A platform like Kili Technology can ensure an effective and efficient fine-tuning and alignment process.

Kili Technology helps ML teams streamline building high-quality datasets as well as evaluating and monitoring LLMs. Whether for fine-tuning or benchmarking, Kili Technology makes it easy to integrate the datasets into an evaluation and monitoring loop. This means that ML teams can quickly develop an LLM application that is well aligned with their business objectives and have confidence in its accuracy and effectiveness.