Over the past year, the ability of generative AI and AI-powered automation to quickly analyze large datasets has led to better-informed decision-making and higher operational performance in many areas of government management, such as strategy, finance, human resources, IT and application delivery, and the supply chain.

These emerging technologies have also helped agency leaders meet federal mandates to develop strategies that improve the federal customer experience and overall compliance, amid renewed calls to modernize legacy IT systems.

At the same time, the federal government has emphasized the importance of responsible use of AI, addressing concerns about bias, security breaches, human rights protections, and a breakdown in public trust. In October, the White House issued an executive order on the “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” The EO outlines areas where agencies should focus to ensure AI safety and security through testing standards, public-private partnerships and the deployment of privacy-enhancing technologies.

The EO also directs agencies to empower consumers and federal employees through AI-harm reduction measures. Moreover, the Senate introduced bipartisan legislation in November entitled “The Artificial Intelligence Research, Innovation, and Accountability Act of 2023.” If passed, this legislation would establish a framework for AI innovation, ensuring transparency, accountability and security, while also establishing an enforceable testing standard for “high-risk” AI systems in agencies.

With the future of AI in government a daily topic of discussion among agency leaders, this raises the question: How can management teams integrate these two emerging technologies effectively and responsibly into their agency’s mission strategies while also ensuring openness, flexibility and compliance?

Responsible results

The White House’s recent AI EO offers precise measures to guide agencies in the responsible use of AI and AI-powered technologies, all of which should be considered as part of an agency’s management strategy. For example, it requires the appointment of chief artificial intelligence officers to coordinate AI use across agencies, foster innovation, manage risks and ensure adherence to federal regulations. Underscoring the importance of this role, the Office of Personnel Management has authorized direct-hire authority and excepted-service appointments in support of these AI directives.

Additionally, agencies must establish an internal AI governance board to oversee AI concerns and develop AI strategies for high-impact use cases. These boards should be integrated into existing agency governance mechanisms to ensure that AI is considered a core capability for executing the mission and not treated as an island. The EO also mandates that operational authorizations give more weight to GenAI and other emerging technologies, such as AI-powered automation. Agencies should also consider establishing an automation Center of Excellence (CoE) that is staffed with a cross-functional group of agency business leaders, AI experts and technology leaders.

This CoE should focus far more on digital operations than on technology, which is a prerequisite for applying the power of AI to mission imperatives. AI’s ability to produce measurable outcomes depends on its being incorporated into existing mission processes, which requires a true digital operations focus where the emphasis is on working with agency mission owners to solve the right problems first.

AI differs from other technologies in that it is fundamentally assistive in nature, engaging with and understanding what specifically an individual or process is trying to accomplish. This also requires CoEs to shift from a technical emphasis to a much more operations-focused role and to understand which form of AI can best be deployed, and how, to support specific business objectives. CoE elements include establishing governance, continuously improving and scaling automation programs, empowering workers, customizing operations services to meet agency needs and helping to capture the measurable impact and outcomes of AI on mission operations.

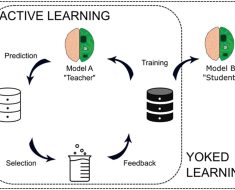

AI-powered automation

While AI is often talked about as a singular technology, it is important to realize it spans a range of technologies: from very specific, locally applied AI, to personal productivity forms of AI that come with collaboration software, to the large-scale large language models (LLMs) of generative AI that have captured the public imagination. Deploying the right type of AI to drive the specific agency outcomes being sought is one of the first steps to effectively and responsibly using AI.

All responsible AI systems require data for improved performance, but federal employees and other users need assurance of data security. Once an agency determines which AI technology to apply, the next step is to understand the types of data needed to train and improve it. If an agency is using third-party models, then it needs to ensure that legal and compliant methods were used in the model’s development. To that end, all AI-powered automation models should have strict and auditable precautions to safeguard all customer data.

AI-powered automation models and algorithms must also be based on reliable data, eliminate potential biases in training and comply with the General Data Protection Regulation (GDPR) and other data compliance mandates in the U.S. and worldwide.

Governance protocols

As a management strategy, agency leaders should incorporate guardrails and governance mechanisms into AI-powered models to ensure the responsible and ethical deployment of AI in workflows. One key enabling technology helping them achieve this objective is an automation platform that controls how and where AI is incorporated into their mission processes. A software automation platform can expressly control how AI is used in any given process, include human-in-the-loop protocols and strictly govern the data used as part of AI decision-making.

A software automation platform can also provide an enterprise AI trust layer, capturing every call made to a model and providing ongoing data auditability and privacy protections. This capability can provide governance and compliance across all types of AI technology, applications and use cases, increasing AI trust and transparency for agencies.
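To make the idea concrete, here is a minimal Python sketch of what such a trust layer might look like, under stated assumptions. It is not any vendor’s API: the `AuditedModel` class, the `needs_review` and `reviewer` hooks and the stub functions are all hypothetical names invented for illustration. The sketch records every model call in an audit log, stores hashes rather than raw text as one possible privacy precaution, and routes flagged requests to a human reviewer before anything is released.

```python
import hashlib
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional


def _sha256(text: str) -> str:
    """Fingerprint text so the log can be audited without storing raw content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass
class AuditedModel:
    """Hypothetical trust-layer wrapper: logs every call, gates flagged ones."""
    model: Callable[[str], str]           # any function that maps prompt -> output
    needs_review: Callable[[str], bool]   # policy: does this prompt need a human?
    reviewer: Callable[[str, str], bool]  # human decision: approve the output?
    log: List[dict] = field(default_factory=list)

    def call(self, prompt: str) -> Optional[str]:
        output = self.model(prompt)
        reviewed = self.needs_review(prompt)
        # Routine calls pass through; flagged calls need explicit approval.
        approved = self.reviewer(prompt, output) if reviewed else True
        self.log.append({
            "timestamp": time.time(),
            "prompt_sha256": _sha256(prompt),
            "output_sha256": _sha256(output),
            "human_reviewed": reviewed,
            "approved": approved,
        })
        return output if approved else None


# Stand-ins for a real model, policy and reviewer (all assumptions).
def stub_model(prompt: str) -> str:
    return "DRAFT: " + prompt


def flag_benefit_decisions(prompt: str) -> bool:
    return "benefit" in prompt.lower()


def reviewer_rejects(prompt: str, output: str) -> bool:
    return False


audited = AuditedModel(stub_model, flag_benefit_decisions, reviewer_rejects)
routine = audited.call("Summarize the meeting notes")
flagged = audited.call("Decide this benefit claim")
```

In this sketch the routine request is returned directly, while the flagged request is withheld because the stand-in reviewer rejects it; both calls still appear in `audited.log`. A production platform would add durable storage, access controls and a real review queue.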

Developing standards and leveraging software automation will help agencies mitigate AI risks such as privacy concerns, bias and data security. They will also enable agencies to build trustworthy and compliant AI-powered automation models that integrate easily with legacy IT systems.

People-centric

AI, at its core, is an assistive technology that is enabling agencies to deploy ever more robust intelligent digital assistants that enhance and enrich how government employees get work done. A responsible approach to AI and AI-powered automation must look beyond reducing costs, improving speed or enhancing performance and recognize that improving the employee experience and helping to shape the future of government work are among the core tenets of any AI program.

Agency leaders should incorporate these emerging technologies into their federal management strategy so that they are accessible to everyone, empowering users with the necessary training and tools to comprehend and use them effectively.

Federal employees, their vendor partners and other authorized users should have access to responsible AI systems to assist in decision-making to achieve mission outcomes. Furthermore, AI-powered systems can enhance upskilling within federal agencies. Federal human resources departments should build AI training programs that help their employees navigate these technologies.

Innovation with integrity

As emerging technologies evolve, the responsible development and use of AI and AI-powered automation stand out as critical factors in meeting the dual goals of managing effectively and achieving mission outcomes. By embracing these emerging technologies thoughtfully and conscientiously, agencies can deliver faster, more efficient services to citizens, empower their workforce and set a global standard for the ethical use of AI.

The journey toward responsible AI and AI-powered automation is ongoing. But with continued collaboration, education and transparency, agencies have the capabilities to embrace the transformative potential of these emerging technologies while protecting and upholding the values that are important to their employees and citizens and meeting their expectations of government.

Mike Daniels is senior vice president of public sector at UiPath, a global maker of robotic process automation software.