Apple already uses AI throughout its products, usually referring to such features as “machine learning,” and even has custom AI inferencing hardware called a Neural Engine in all its chips. But when it comes to generative AI like sophisticated chatbots (ChatGPT, Microsoft Copilot, Bard), image generators (DALL-E, Midjourney, Stable Diffusion), music generators (Amper, MuseNet), and code generation (GitHub Copilot), Apple appears to be sitting on the sidelines.

But not for long! Multiple rumors say that iOS 18 will begin a big push into generative AI from Apple. We’re talking Siri with gen-AI chat, Apple Music-generated playlists, and maybe even things like image generation or tools for Photos, iMovie, and so on.

We expect that Apple’s values and its unique take on deploying software and services will make its gen-AI tools a little different from what we’re used to. The company’s push for privacy and on-device processing, along with an urgent need to protect its reputation for safety and trust, will all have an impact on Apple’s AI tools.

Next-gen Siri

This is going to be Apple’s biggest challenge. Siri is the AI product from Apple, the one by which Apple’s AI prowess will be judged in the public eye. It’s Apple’s most visible AI product, and one of the first and biggest brands in AI.

It’s also the most natural fit for the sort of large language model (LLM) AIs that are all the rage today, like ChatGPT and Bard. Apple has its own LLM foundation called Ajax, which some reports claim is quite sophisticated.

Siri will get a massive boost with the infusion of generative AI.


Apple’s going to want to do as much of this on-device as possible. It’s critical from a privacy and safety standpoint, but it’s also a big advantage in responsiveness, battery efficiency, and just being able to use Siri when you may not have a decent internet connection.

What’s more, doing a lot more on-device could give Siri a big advantage in its ability to hold natural-seeming conversations. It can use data on your phone, like the places you go, the people and objects in your photos, the apps you use, and more, to “feel” like it “knows” you. Siri could feel more like a friend than any other conversational AI, all without ever sending any personal data anywhere.

That’s a huge technical challenge because LLMs require a lot of RAM to run. Even limited-size LLMs require gigabytes of RAM, and our smartphones often don’t have gigabytes to spare. And nobody’s going to care about the technical feat of running an LLM on-device if the output isn’t any good. Whether it’s fair or not, everyday users will compare Siri’s conversational capabilities against the latest online AI chatbots that run on big server farms.

Apple’s researchers recently published a paper called “LLM in a Flash: Efficient Large Language Model Inference with Limited Memory” that tackles this very issue. It describes an efficient way to use flash storage’s strengths to reduce memory overhead and increase the performance of LLMs. In other words, Apple is working hard to make sure a high-quality LLM can run entirely on-device on an iPhone.

But that’s just one of the big challenges a gen-AI-enhanced Siri will face. There have been numerous examples of users easily tricking today’s chatbots into saying things that seem racist, sexist, antisemitic, or otherwise problematic, all because of the data on which they were trained. Chatbots can also be “confidently wrong” about important information. Apple has a huge spotlight on everything it does, and this kind of performance, even unintended, could be very damaging to Apple’s reputation.

To at least help ensure that Apple’s AI gives reasonably accurate results about factual matters, Apple is looking to license content from major news organizations to help train its AI. It’s a good idea. It’s easy to make an AI that serves as a front end to a search engine and creates a new summary, but using high-quality, authoritative reporting about events is a good way to bake more trust into the core of the system.

Running a high-quality LLM entirely on the iPhone will be a tough task.


Obviously, while Apple will want to make as much of its generative AI run on-device as possible, it’s going to need to connect to the internet for much of the same information that Siri connects to the internet today (even though it can do a lot offline). Current weather or traffic information, current events, looking up random facts, “can my dog eat broccoli?”, and so many other queries require internet access, and this will certainly remain true in a new gen-AI-enhanced Siri. Apple will undoubtedly go to great lengths to let us know that the things we ask are anonymized and kept as private as possible.

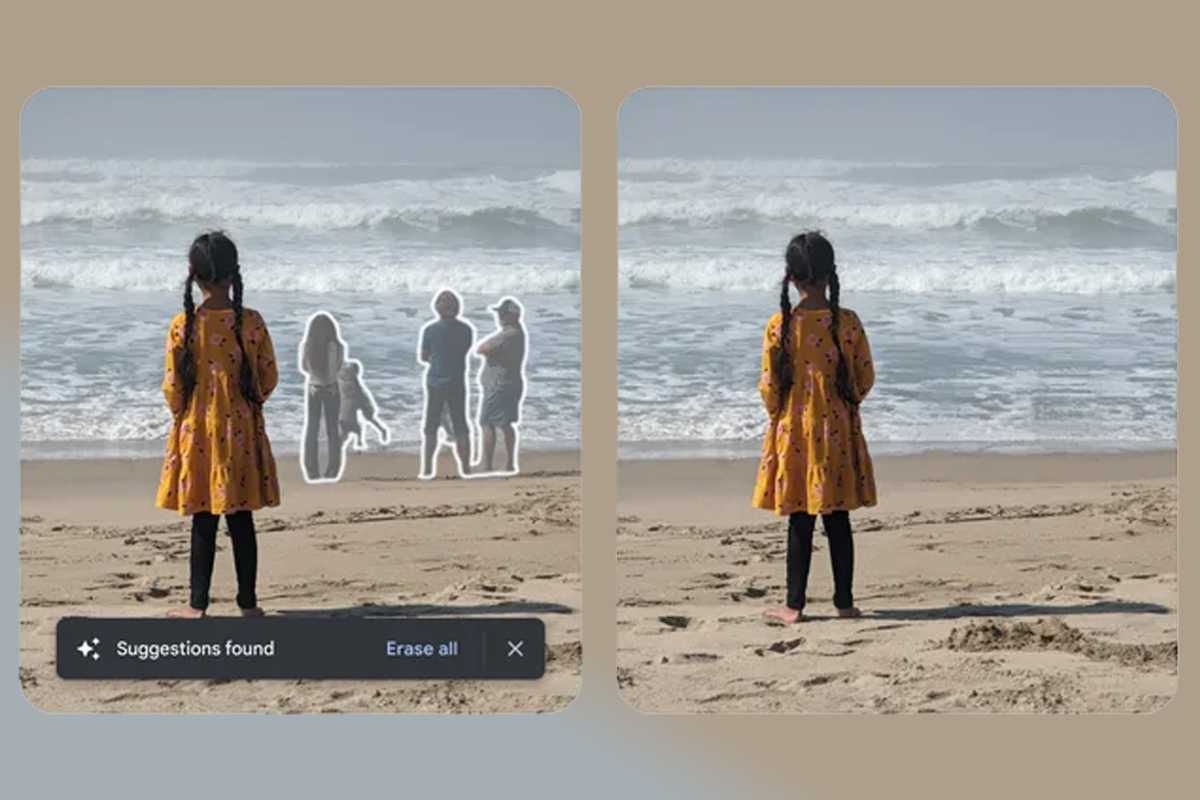

Using generative AI in creative tools is another area where Apple has huge opportunities in front of it, but also a tricky minefield to navigate.

Google’s Magic Eraser removes objects from photos by identifying and segmenting them out, then “inpainting” the area with generated imagery. It’s a neat trick and something Apple users would love to have. And it’s just the beginning. Generative AI could “extend” a photo beyond its original bounds. Apple can already identify specific people in your photo library. What if you could pick one by name to add to a photo?

These would be awesome and fun tools, but they would break trust in the camera system. iPhone pictures are already heavily manipulated, but at least they don’t wholly remove or add subjects. Apple would need to make sure that images altered with these kinds of tools carry metadata and other identifiers that show they’ve been manipulated.

Google’s Magic Eraser is a good example of generative AI on a phone.


One-tap consumer photo manipulations are one obvious area where Apple could add a lot of value with generative AI, but there are many more. Imagine finishing your iMovie or Final Cut Pro video project and creating a completely original, royalty-free music backing track by simply describing it in a few words. AI could take a 3D scan of your face (similar to Face ID) and generate the Memoji that most closely resembles the real you.

More creative image generation isn’t the kind of thing we expect Apple to get involved in. An Apple equivalent of DALL-E or Midjourney doesn’t seem to fit in its product portfolio. But there are lots of apps that use these tools to take existing photos of people and create avatars in wild styles, making you look like a Norman Rockwell painting, Disney princess, or Pixar character. They can also replace backgrounds and alter lighting to produce really creative and fun portraits.

It’s not hard to imagine Apple using generative AI in the “filters” it builds into features like the Contacts Poster editor or the Clips app to give you similar capabilities, or even running in real-time during FaceTime conversations.

The challenge with generative AI for creative tools is not finding places where it can fit, but making sure the tools are safe, both from a legal standpoint (not plagiarizing or infringing copyright) and a social standpoint (not producing anything that doesn’t fit with Apple’s values).

Convenience and productivity features

Apple’s biggest opportunity may be in the least-sexy area of AI: building features for convenience and productivity. Just as Microsoft is building AI tools into Office, Apple will eventually have to build AI features into its iWork suite (Pages, Numbers, Keynote). It’s not the kind of stuff that grabs headlines or makes memes, but it can be very useful. Instead of static templates, you could ask an AI to “build a one-page resume” and it will take care of everything for you, perhaps with a few extra questions answered. It could generate an entire properly formatted cover letter from a short prompt. Need help building a presentation? Let AI build your deck from a few major bullet points, an audio recording of your accompanying speech, or some photos.

One of the best uses of generative AI is to create concise summaries of papers, articles, and so on. Apple is said to be considering such a feature, which may express itself as either a Siri feature or a Safari feature (or both).

AI-generated Apple Music playlists are rumored to be on the way. That’s not especially new or exciting, but maybe Apple intends to put a twist on it. The company could use the data it has access to on your iPhone to determine your current activity (driving, working out, studying, walking outside), the current weather and time of day, and your Apple Music listening history to generate just the right playlist for right now, entirely on-device.

Shortcuts should be a huge target for Apple’s AI efforts. It’s such a powerful tool, but I have yet to meet a non-techie who uses it at all. I would wager that far fewer than 5 percent of iPhone users use Shortcuts, and far fewer than 1 percent create their own shortcuts. It’s not programming, but the step-by-step scripting, as simple as it is, is far too intimidating for most users. Imagine AI that could take a simple text or voice description of what you want to do and build a shortcut for you! It’s a simpler task than AI code generation, and that’s already quite popular.

Speaking of which, it would be almost crazy for the next major version of Xcode not to include AI code generation (at least for Swift, which Apple would like to push more heavily). There are already Source Editor Extensions for Xcode that do AI code generation, but Apple would probably want something built-in that can run entirely on local hardware. Indeed, Mark Gurman’s latest Power On column suggests Apple will do just that.

These kinds of features are low-hanging fruit for Apple because they’re uncontroversial. They don’t stand much chance of making problematic images or audio, or of spitting out hate speech, because the training data wasn’t curated carefully enough. But they’re also not the sort of things that get everyday users excited, that people share online, or that send millions of users out to buy a new iPhone.

Whatever Apple does with AI in its software this year, it has one massive advantage over all the ChatGPTs, Bings, and Stable Diffusions of the world: It’s built-in. Apple’s tools will be automatically downloaded to a billion iPhones, and even in cases where they compete with third-party tools, they’ll be the default. That’s a huge advantage and opportunity, but it’s also a massive responsibility, so at least for a while the third-party tools will probably remain more capable if only because they don’t have to be quite so cautious.