ERIK POUNDS | NVIDIA

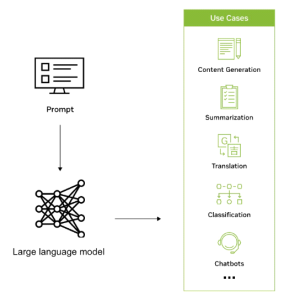

Large language models (LLMs) are deep learning models, with up to hundreds of billions of parameters, that are trained on internet-scale datasets. LLMs can read, write, code, draw, and augment human creativity to improve productivity across industries and solve the world’s toughest problems.

LLMs are used in a wide range of industries, from retail to healthcare, and for a wide range of tasks. They learn the language of protein sequences to generate new, viable compounds that can help scientists develop groundbreaking, life-saving vaccines. They help software programmers generate code and fix bugs based on natural language descriptions. And they provide productivity co-pilots so humans can do what they do best—create, question, and understand.

Effectively leveraging LLMs in enterprise applications and workflows requires understanding key topics such as model selection, customization, optimization, and deployment. This post explores the following enterprise LLM topics:

- How organizations are using LLMs

- Use, customize, or build an LLM?

- Begin with foundation models

- Build a custom language model

- Connect an LLM to external data

- Keep LLMs on track and secure

- Optimize LLM inference in production

- Get started using LLMs

Whether you are a data scientist looking to build custom models or a chief data officer exploring the potential of LLMs for your organization, read on for valuable insights and guidance.

How organizations are using LLMs

LLMs are used in a wide variety of applications across industries to efficiently recognize, summarize, translate, predict, and generate text and other forms of content based on knowledge gained from massive datasets. For example, companies are leveraging LLMs to develop chatbot-like interfaces that can support users with customer inquiries, provide personalized recommendations, and assist with internal knowledge management.

LLMs also have the potential to broaden the reach of AI across industries and enterprises and enable a new wave of research, creativity, and productivity. They can help generate complex solutions to challenging problems in fields such as healthcare and chemistry. LLMs are also used to create reimagined search engines, tutoring chatbots, composition tools, marketing materials, and more.

Collaboration between ServiceNow and NVIDIA will help drive new levels of automation to fuel productivity and maximize business impact. Generative AI use cases being explored include developing intelligent virtual assistants and agents to help answer user questions and resolve support requests and using generative AI for automatic issue resolution, knowledge-base article generation, and chat summarization.

A consortium in Sweden is developing a state-of-the-art language model with NVIDIA NeMo Megatron and will make it available to any user in the Nordic region. The team aims to train an LLM with a whopping 175 billion parameters that can handle all sorts of language tasks in the Nordic languages of Swedish, Danish, Norwegian, and potentially Icelandic.

The project is seen as a strategic asset, a keystone of digital sovereignty in a world that speaks thousands of languages across nearly 200 countries. To learn more, see The King’s Swedish: AI Rewrites the Book in Scandinavia.

The leading mobile operator in South Korea, KT, has developed a billion-parameter LLM using the NVIDIA DGX SuperPOD platform and NVIDIA NeMo framework. NeMo is an end-to-end, cloud-native enterprise framework that provides prebuilt components for building, training, and running custom LLMs.

KT’s LLM has been used to improve the understanding of the company’s AI-powered speaker, GiGA Genie, which can control TVs, offer real-time traffic updates, and complete other home-assistance tasks based on voice commands.

Use, customize, or build an LLM?

Organizations can choose to use an existing LLM, customize a pretrained LLM, or build a custom LLM from scratch. Using an existing LLM provides a quick and cost-effective solution, while customizing a pretrained LLM enables organizations to tune the model for specific tasks and embed proprietary knowledge. Building an LLM from scratch offers the most flexibility but requires significant expertise and resources.

NeMo offers a choice of several customization techniques and is optimized for at-scale inference of models for language and image applications, with multi-GPU and multi-node configurations. For more details, see Unlocking the Power of Enterprise-Ready LLMs with NVIDIA NeMo.

NeMo makes generative AI model development easy, cost-effective, and fast for enterprises. It is available across all major clouds, including Google Cloud as part of their A3 instances powered by NVIDIA H100 Tensor Core GPUs to build, customize, and deploy LLMs at scale. To learn more, see Streamline Generative AI Development with NVIDIA NeMo on GPU-Accelerated Google Cloud.

To quickly try generative AI models such as Llama 2 directly from your browser with an easy-to-use interface, visit NVIDIA AI Playground.

Begin with foundation models

Foundation models are large AI models trained on enormous quantities of unlabeled data through self-supervised learning. Examples include Llama 2, GPT-3, and Stable Diffusion.

The models can handle a wide variety of tasks, such as image classification, natural language processing, and question-answering, with remarkable accuracy.

These foundation models are the starting point for building more specialized and sophisticated custom models. Organizations can customize foundation models using domain-specific labeled data to create more accurate and context-aware models for specific use cases.

Foundation models generate an enormous number of unique responses from a single prompt by generating a probability distribution over all items that could follow the input and then choosing the next output randomly from that distribution. The randomization is amplified by the model’s use of context. Each time the model generates a probability distribution, it considers the last generated item, which means each prediction impacts every prediction that follows.
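The sampling loop described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular foundation model is implemented: the vocabulary, the hand-written scores in `TOY_LOGITS`, and the function names are all hypothetical, and the toy "model" conditions only on the last generated token.

```python
import math
import random

# Hypothetical six-word vocabulary and hand-written scores (logits).
# A real foundation model computes these scores with a neural network.
VOCAB = ["the", "cat", "dog", "sat", "ran", "."]
TOY_LOGITS = {
    "the": [0.0, 2.0, 2.0, 0.1, 0.1, 0.0],
    "cat": [0.1, 0.0, 0.0, 2.0, 1.5, 0.5],
    "dog": [0.1, 0.0, 0.0, 1.5, 2.0, 0.5],
    "sat": [1.0, 0.0, 0.0, 0.0, 0.0, 2.0],
    "ran": [1.0, 0.0, 0.0, 0.0, 0.0, 2.0],
    ".":   [2.0, 0.5, 0.5, 0.0, 0.0, 0.0],
}


def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution over the vocabulary."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def generate(start, steps, rng):
    """Sample `steps` tokens, each drawn from a distribution that depends
    on the token generated just before it."""
    tokens = [start]
    for _ in range(steps):
        probs = softmax(TOY_LOGITS[tokens[-1]])
        next_token = rng.choices(VOCAB, weights=probs, k=1)[0]
        tokens.append(next_token)  # this choice shapes every later step
    return tokens


if __name__ == "__main__":
    # Different random seeds can yield different continuations of one prompt.
    print(" ".join(generate("the", 6, random.Random(1))))
    print(" ".join(generate("the", 6, random.Random(2))))
```

Because each sampled token becomes the input to the next step, an early random choice changes the distributions for everything that follows, which is why a single prompt can lead to many distinct outputs.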

NeMo supports NVIDIA-trained foundation models as well as community models such as Llama 2, Falcon LLM, and MPT. You can experience a variety of optimized community and NVIDIA-built foundation models directly from your browser for free on NVIDIA AI Playground. You can then customize the foundation model using your proprietary enterprise data. This results in a model that is an expert in your business and domain.

Build a custom language model

Enterprises will often need custom models to tailor language processing capabilities to their specific use cases and domain knowledge. Custom LLMs enable a business to generate and understand text more efficiently and accurately within a certain industry or organizational context. They empower enterprises to create personalized solutions that align with their brand voice, optimize workflows, provide more precise insights, and deliver enhanced user experiences, ultimately driving a competitive edge in the market.

NVIDIA NeMo is a powerful framework that provides components for building and training custom LLMs on-premises, across all leading cloud service providers, or in NVIDIA DGX Cloud. It includes a suite of customization techniques, from prompt learning to parameter-efficient fine-tuning to reinforcement learning from human feedback (RLHF). NVIDIA also released a new, open customization technique called SteerLM that allows for tuning during inference.

When training an LLM, the principle of “garbage in, garbage out” always applies: a model can only be as good as the data it learns from. A large percentage of the effort goes into acquiring and curating the data that will be used to train or customize the LLM.

NeMo Data Curator is a scalable data-curation tool that enables you to curate trillion-token multilingual datasets for pretraining LLMs. The tool allows you to preprocess and deduplicate datasets with exact or fuzzy deduplication, so you can ensure that models are trained on unique documents, potentially leading to greatly reduced training costs.
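To make the difference between exact and fuzzy deduplication concrete, here is a small sketch in plain Python. It is not the NeMo Data Curator API. The function names, the three-word shingles, and the 0.5 similarity threshold are assumptions chosen for illustration, and the pairwise comparison would not scale to trillion-token datasets, where approximate methods such as MinHash are commonly used.

```python
import hashlib


def normalize(text):
    """Lowercase and collapse whitespace so trivial variations match."""
    return " ".join(text.lower().split())


def exact_dedup(docs):
    """Keep the first document for each distinct normalized content hash."""
    seen = set()
    unique = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


def shingles(text, n=3):
    """Return the set of overlapping n-word windows in a document."""
    words = normalize(text).split()
    if len(words) < n:
        return {tuple(words)}
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a, b):
    """Similarity of two sets: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def fuzzy_dedup(docs, threshold=0.5):
    """Drop a document if it is too similar to one that was already kept."""
    kept = []  # pairs of (document, shingle set)
    for doc in docs:
        current = shingles(doc)
        if all(jaccard(current, other) < threshold for _, other in kept):
            kept.append((doc, current))
    return [doc for doc, _ in kept]


if __name__ == "__main__":
    corpus = [
        "The quick brown fox jumps over the lazy dog",
        "the quick  brown fox jumps over the lazy dog",  # same after normalizing
        "The quick brown fox jumps over the lazy cat",   # near-duplicate
        "Large language models are trained on text",
    ]
    print(fuzzy_dedup(exact_dedup(corpus)))
```

Exact deduplication only catches documents that are identical after normalization, while the fuzzy pass also removes documents that share most of their word sequences with one already kept.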

Connect an LLM to external data

Connecting an LLM to external enterprise data sources enhances its capabilities. This enables the LLM to perform more complex tasks and leverage data that has been created since it was last trained.

Retrieval Augmented Generation (RAG) is an architecture that provides an LLM with the ability to use current, curated, domain-specific data sources that are easy to add, delete, and update. With RAG, external data sources are processed into vectors (using an embedding model) and placed into a vector database for fast retrieval at inference time.
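The retrieval step can be sketched as follows. This is a toy illustration under stated assumptions: `embed` is a bag-of-words stand-in for a real embedding model, `VectorStore` is a hypothetical in-memory substitute for a vector database, and `build_prompt` shows just one possible way to pass retrieved text to an LLM.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a sparse word-count vector."""
    words = [w.strip(".,?!").lower() for w in text.split()]
    return Counter(w for w in words if w)


def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Hypothetical in-memory vector store with brute-force search."""

    def __init__(self):
        self.items = []  # pairs of (text, vector)

    def add(self, text):
        self.items.append((text, embed(text)))

    def search(self, query, k=1):
        query_vector = embed(query)
        ranked = sorted(
            self.items,
            key=lambda item: cosine(query_vector, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


def build_prompt(question, store, k=1):
    """Place the retrieved passages in front of the user's question."""
    context = "\n".join(store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    store = VectorStore()
    store.add("The return window for laptops is 30 days.")
    store.add("Support is available by phone on weekdays.")
    store.add("Gift cards never expire.")
    print(build_prompt("What is the return window for laptops?", store))
```

Updating what the application knows only requires adding or removing entries in the store, which is what makes the data sources easy to keep current.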

Because new data can be incorporated without retraining the model, RAG reduces computational and financial costs. It also increases accuracy and enables more reliable and trustworthy AI-powered applications. Accelerating vector search is one of the hottest topics in the AI landscape due to its applications in LLMs and generative AI.

Keep LLMs on track and secure

To ensure an LLM’s behavior aligns with desired outcomes, it’s important to establish guidelines, monitor its performance, and customize as needed. This involves defining ethical boundaries, addressing biases in training data, and regularly evaluating the model’s outputs against predefined metrics, often in concert with a guardrails capability. For more information, see NVIDIA Enables Trustworthy, Safe, and Secure Large Language Model Conversational Systems.

To address this need, NVIDIA has developed NeMo Guardrails, an open-source toolkit that helps developers ensure their generative AI applications are accurate, appropriate, and safe. It provides a framework that works with all LLMs, including OpenAI’s ChatGPT, to make it easier for developers to build safe and trustworthy LLM conversational systems that leverage foundation models.
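The general idea of wrapping any LLM with checks on its inputs and outputs can be sketched as follows. This is not the NeMo Guardrails API: the keyword list, the refusal message, and the function names are hypothetical, and real guardrail systems use far richer policies than substring matching.

```python
# Hypothetical policy: topics the application should never discuss.
BLOCKED_TOPICS = ("password", "social security number")
REFUSAL = "Sorry, I can't help with that."


def violates_policy(text):
    """Return True if the text mentions any blocked topic."""
    lowered = text.lower()
    return any(topic in lowered for topic in BLOCKED_TOPICS)


def guarded_reply(user_message, llm):
    """Call `llm` (any function mapping a prompt string to a reply string)
    only if the input passes the check, and screen the reply as well."""
    if violates_policy(user_message):  # check the input before calling the model
        return REFUSAL
    answer = llm(user_message)
    if violates_policy(answer):  # check the output before returning it
        return REFUSAL
    return answer


if __name__ == "__main__":
    def echo_model(prompt):
        """Stand-in for a real LLM call."""
        return f"You asked: {prompt}"

    print(guarded_reply("What are your store hours?", echo_model))
    print(guarded_reply("Tell me the admin password", echo_model))
```

Because the wrapper only needs a function that maps a prompt to a reply, the same checks can sit in front of any model.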

Keeping LLMs secure is of paramount importance for generative AI-powered applications. NVIDIA has also introduced accelerated Confidential Computing, a groundbreaking security feature that mitigates threats while providing access to the unprecedented acceleration of NVIDIA H100 Tensor Core GPUs for AI workloads. This feature ensures that sensitive data remains secure and protected, even during processing.

Optimize LLM inference in production

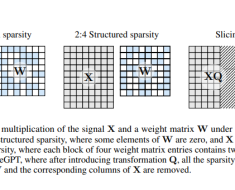

Optimizing LLM inference involves techniques such as model quantization, hardware acceleration, and efficient deployment strategies. Model quantization reduces the memory footprint of the model, while hardware acceleration leverages specialized hardware like GPUs for faster inference. Efficient deployment strategies ensure scalability and reliability in production environments.
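As a rough illustration of why quantization shrinks a model, the sketch below applies symmetric 8-bit integer quantization to a handful of weights and compares memory footprints. The weights and the 7-billion-parameter count are hypothetical, and integer quantization is used here only because it is simple to show. It is a different scheme from the FP8 floating-point format.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


def footprint_bytes(num_params, bits_per_param):
    """Memory needed to store the weights alone, ignoring activations."""
    return num_params * bits_per_param // 8


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.01, 1.0]  # hypothetical weights
    quantized, scale = quantize_int8(weights)
    print(quantized, dequantize(quantized, scale))

    params = 7_000_000_000  # hypothetical parameter count
    print(footprint_bytes(params, 16) / 1e9, "GB at 16 bits per weight")
    print(footprint_bytes(params, 8) / 1e9, "GB at 8 bits per weight")
```

Halving the bits per weight halves the storage for the weights, at the cost of a small rounding error bounded by half the scale factor for each value.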

NVIDIA TensorRT-LLM is an open-source software library that supercharges LLM inference on NVIDIA accelerated computing. It enables users to convert their model weights into a new FP8 format and compile their models to take advantage of optimized FP8 kernels with NVIDIA H100 GPUs. Running on H100 GPUs, TensorRT-LLM can accelerate inference performance by 4.6x compared to NVIDIA A100 GPUs. It provides a faster and more efficient way to run LLMs, making them more accessible and cost-effective.

These custom generative AI processes involve pulling together models, frameworks, toolkits, and more. Many of these tools are open source, requiring time and energy to maintain development projects. The process can become incredibly complex and time-consuming, especially when trying to collaborate and deploy across multiple environments and platforms.

NVIDIA AI Workbench helps simplify this process by providing a single platform for managing data, models, resources, and compute needs. This enables seamless collaboration and deployment for developers to create cost-effective, scalable generative AI models quickly.

NVIDIA and VMware are working together to transform the modern data center built on VMware Cloud Foundation and bring AI to every enterprise. Using the NVIDIA AI Enterprise suite and NVIDIA’s most advanced GPUs and data processing units (DPUs), VMware customers can securely run modern, accelerated workloads alongside existing enterprise applications on NVIDIA-Certified Systems.

Get started using LLMs

Getting started with LLMs requires weighing factors such as cost, effort, training data availability, and business objectives. In most circumstances, organizations should evaluate the trade-offs between using existing models, customizing them with domain-specific knowledge, and building custom models from scratch. Choosing tools and frameworks that align with specific use cases and technical requirements is important, including those listed below.

The Generative AI Knowledge Base Chatbot lab shows you how to adapt an existing AI foundational model to accurately generate responses for your specific use case. This free lab provides hands-on experience with customizing a model using prompt learning, ingesting data into a vector database, and chaining all components to create a chatbot.

NVIDIA AI Enterprise, available on all major cloud and data center platforms, is a cloud-native suite of AI and data analytics software that provides over 50 frameworks, including the NeMo framework, pretrained models, and development tools optimized for accelerated GPU infrastructures. You can try this end-to-end, enterprise-ready software suite with a free 90-day trial.

NeMo is an end-to-end, cloud-native enterprise framework for developers to build, customize, and deploy generative AI models with billions of parameters. It is optimized for at-scale inference of models with multi-GPU and multi-node configurations. The framework makes generative AI model development easy, cost-effective, and fast for enterprises. Explore the NeMo tutorials to get started.

NVIDIA Training helps organizations train their workforce on the latest technology and bridge the skills gap by offering comprehensive technical hands-on workshops and courses. The LLM learning path developed by NVIDIA subject matter experts spans fundamental to advanced topics that are relevant to software engineering and IT operations teams. NVIDIA Training Advisors are available to help develop customized training plans and offer team pricing.

Summary

As enterprises race to keep pace with AI advancements, identifying the best approach for adopting LLMs is essential. Foundation models help jumpstart the development process. Using key tools and environments to efficiently process and store data and customize models can significantly accelerate productivity and advance business goals.