What is LLM Fine-tuning in Artificial Intelligence?

We need more than just artificial intelligence. We need virtual experts that are accurate, authoritative, and effective.

It’s not enough to deploy AI technologies to answer customer service questions, assist a doctor’s medical diagnosis, identify a negotiator’s key clauses in a contract, provide customers with personalized content, or troubleshoot a mechanic’s equipment issue.

AI must do these things well.

This is where fine-tuning large language models (LLMs) comes into play.

LLMs are initially trained on a broad array of data sources and topics in order to recognize and apply various linguistic patterns. Fine-tuning continues training these pre-trained models on smaller, domain-specific datasets, adjusting their parameters to improve their accuracy and effectiveness in narrower, more specific knowledge domains.

Picture an LLM as an all-around athlete who is competent in many sports. Fine-tuning is like this athlete training intensively for a specific event, such as a marathon, to improve their performance and endurance for that particular race.

What are the Benefits of Fine-tuning an LLM?

LLM fine-tuning improves knowledge domain specificity

Fine-tuning customizes a large language model to perform optimally in a specific area of knowledge, helping it get better at using the right words and knowing the ins and outs of that field. Here are some examples:

Customer Support Example for an Energy Utility

Fine-tuned LLMs support finer distinctions between customer types and needs, such as single-home residential versus multi-family or industrial energy inquiries.

Renewable Energy Technology

Fine-tuned LLMs support more precise assessment of photovoltaic system performance within specific operating envelopes, whereas non-fine-tuned models may miss these nuances.

LLM fine-tuning improves accuracy and precision

A fine-tuned large language model offers more accurate and precise responses, reducing errors and misunderstandings.

Complex Billing Inquiries

Fine-tuned LLMs can handle intricate billing queries more accurately than their general counterparts, helping prevent customer dissatisfaction.

Personalized Content

Fine-tuned LLMs can generate travel itineraries aligned with tourists’ preferences, as opposed to the generic or misaligned suggestions of non-fine-tuned models.

Equipment Troubleshooting

Fine-tuned models can diagnose specific smartphone software issues, whereas non-fine-tuned models tend to offer broad, generic solutions.

LLM fine-tuning improves user experience and increases quality of life

Fine-tuning enhances user interaction with more relevant, engaging, and context-aware responses. Inaccurate information can make task completion inefficient, frustrating, or unsuccessful. Here are two examples.

E-learning

Fine-tuned LLMs can deliver content better suited to the learner’s age, learning style, comprehension abilities, culture, and other nuances. If information is delivered ineffectively, the system may fail to educate a young learner or, worse, leave the child increasingly frustrated with the education process altogether.

Healthcare Support

Fine-tuned LLMs can provide more tailored wellness information, which can improve compliance and health outcomes. With non-fine-tuned models, there is a greater risk that people will fail to make behavior changes, which can reduce quality of life and even lifespan.

LLM fine-tuning improves adaptability to evolving trends and data

Fine-tuning on recent, domain-specific data allows an LLM to adapt to the latest trends, terminology, and emerging data in a specific field.

Healthcare Support Example

- Fine-tuned: Stays updated with the latest treatments and research in oncology.

- Non-fine-tuned: Relies on medical information that may be outdated, potentially missing recent oncological advancements.

Customer Service Support Example

- Fine-tuned: Quickly integrates new product information and policies in the tech industry for customer queries.

- Non-fine-tuned: Lags in providing current information about new tech products or updates.

Personalized Content Example

- Fine-tuned: Incorporates the latest trends and news in fashion blogging.

- Non-fine-tuned: Generates content that is out of touch with current fashion trends.

Equipment Troubleshooting Example

- Fine-tuned: Updates troubleshooting guides for the latest software updates in gaming consoles.

- Non-fine-tuned: Offers solutions based on outdated software versions of gaming consoles.

Each of these benefits illustrates how a fine-tuned LLM can significantly outperform a non-fine-tuned counterpart by providing more specialized, accurate, user-friendly, and up-to-date interactions and solutions across various contexts.

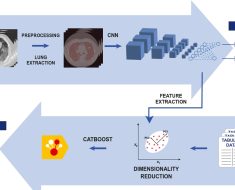

How does the process of fine-tuning work?

The process of fine-tuning a Large Language Model (LLM) involves several key steps. The overarching goal is to tailor a pre-trained general language model to perform exceptionally well on specific tasks or understand particular domains.

1. Selection of a Pre-Trained Model

The process begins with an LLM pre-trained on a vast and diverse corpus of text data, encompassing a wide range of topics, styles, and structures.

2. Identifying the Target Domain or Task

The next step is to clearly define the domain or specific task for which the LLM needs to be fine-tuned. This could range from specialized areas like legal or medical domains to tasks like investigative document analysis, sentiment analysis, diagnostics, question-answering, or language translation.

3. Curating a Domain-Specific Dataset for the Target Domain

This dataset must be representative of the task or domain-specific language, terminology and context. A fine-tuning dataset typically adheres to an instruction-answer format, enhancing the LLM’s ability to effectively follow explicit instructions relevant to the specific application. For instance, for a medical LLM, the dataset may contain doctor-patient conversations, combinations of symptoms and diagnoses, patient case studies, and clinical guidelines. For a legal LLM, relevant information types might involve case histories, legislation, and information indicative of viable cases for representation or potential targets for complaints.
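To make the instruction-answer format concrete, here is a minimal Python sketch that stores a few records as JSON Lines and joins each one into a single training string. The field names (`instruction`, `response`), the `### Instruction:` / `### Response:` template, and the example content are illustrative assumptions; fine-tuning tools differ in the schema and prompt template they expect.

```python
import json

# Hypothetical instruction-answer records (field names are an assumption).
records = [
    {
        "instruction": "List common symptoms of iron-deficiency anemia.",
        "response": "Common symptoms include fatigue, pale skin, and shortness of breath.",
    },
    {
        "instruction": "Define 'force majeure' in one sentence.",
        "response": "A contract clause that frees the parties from liability when "
                    "extraordinary events beyond their control prevent performance.",
    },
]

# Assumed prompt template; real tools each define their own.
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


def to_training_text(record):
    """Join one record into the single string the model would be trained on."""
    return PROMPT_TEMPLATE.format(instruction=record["instruction"]) + record["response"]


def to_jsonl(rows):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows)


jsonl = to_jsonl(records)
print(jsonl.splitlines()[0])
print(to_training_text(records[1]))
```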

4. Data Preprocessing

The selected data is then preprocessed. This involves cleaning the data (removing irrelevant or corrupt data), anonymizing personally identifiable information, and ensuring it is in a format suitable for training the LLM. Tokenization, where text is broken down into smaller units called tokens (typically words or subword pieces) using the pre-trained model’s own tokenizer, is also part of this step.
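A minimal sketch of these preprocessing steps using only Python’s standard library. The regular expressions catch only simple e-mail and phone patterns, and the word-level `tokenize` function is a hypothetical stand-in: a real pipeline would use the pre-trained model’s own subword tokenizer and far more thorough PII detection.

```python
import re

# Illustrative PII patterns only; real anonymization must also handle names,
# addresses, account or record numbers, and many other formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def clean(text):
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def anonymize(text):
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def tokenize(text):
    """Naive tokenizer: placeholders, words, and single punctuation marks.
    A stand-in for the pre-trained model's real subword tokenizer."""
    return re.findall(r"\[\w+\]|\w+|[^\w\s]", text)


raw = "  Contact:   jane.doe@example.com,  phone 555-123-4567. "
prepared = anonymize(clean(raw))
print(prepared)            # → Contact: [EMAIL], phone [PHONE].
print(tokenize(prepared))
```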

5. Adapting the Model’s Architecture (if necessary)

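Depending on the fine-tuning requirements, the LLM might need adjustments. This could involve adding a task-specific output layer (for example, a classification head), inserting small adapter layers, or otherwise modifying layers, neurons, or connections within the model.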

6. Fine-tuning Training

Unlike the broad initial pre-training, this training focuses on the chosen domain and dataset. The model adjusts its internal parameters (weights) based on this new data, learning to predict and generate text that is specific to the domain or task.
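Real LLM fine-tuning updates billions of weights with a deep learning framework, but the core idea, continuing gradient descent from pre-trained weights on new data, can be illustrated with a toy one-feature linear model. The “pre-trained” weights, learning rate, and domain data below are made up for illustration.

```python
def mse_grad(w, b, data):
    """Gradients of mean squared error for the prediction y_hat = w * x + b."""
    n = len(data)
    gw = sum(2 * (w * x + b - y) * x for x, y in data) / n
    gb = sum(2 * (w * x + b - y) for x, y in data) / n
    return gw, gb


def fine_tune(w, b, data, lr=0.05, epochs=500):
    """Continue gradient descent from existing (pre-trained) weights on new data."""
    for _ in range(epochs):
        gw, gb = mse_grad(w, b, data)
        w -= lr * gw
        b -= lr * gb
    return w, b


pretrained = (2.0, 0.0)  # hypothetical weights learned during "pre-training"
# Hypothetical domain data following y = 3x + 1.
domain_data = [(x, 3.0 * x + 1.0) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]
w, b = fine_tune(*pretrained, domain_data)
print(round(w, 3), round(b, 3))
```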

7. Regularization and Optimization for Adaptability

During fine-tuning, regularization techniques such as weight decay, dropout, and early stopping are employed to guard against overfitting, where the model performs well on the training data but poorly on new, unseen data. Optimization algorithms (for example, Adam or AdamW) are used to efficiently adjust the model’s parameters for better performance.
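Two common regularization techniques, weight decay and early stopping, are sketched below in plain Python. The learning rate, decay coefficient, patience, and validation losses are hypothetical values chosen for illustration.

```python
def sgd_step(params, grads, lr=0.01, weight_decay=0.01):
    """One SGD update with L2 weight decay, which also shrinks each weight toward zero."""
    return [p - lr * (g + weight_decay * p) for p, g in zip(params, grads)]


def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch whose checkpoint to keep, stopping once validation
    loss has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


# Hypothetical validation losses: they bottom out at epoch 3 and then rise,
# a typical sign that the model has started to overfit the training data.
val_losses = [1.20, 0.95, 0.81, 0.78, 0.80, 0.84, 0.90]
print(early_stopping_epoch(val_losses))  # → 3
print(sgd_step([1.0, -2.0], [0.5, 0.5]))
```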

8. Evaluation and Testing

After fine-tuning, the model is evaluated using a separate, held-out set of domain-specific test data. This helps assess how well the model has adapted to the domain and how it performs on examples it wasn’t directly trained on.
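A hedged sketch of held-out evaluation: split examples into training and test sets, then score predictions by exact match. The `fake_model` dictionary is a hypothetical stand-in for calling the fine-tuned model, and exact match is only one possible metric; open-ended generation is usually judged with richer metrics or human review.

```python
import random


def train_test_split(examples, test_fraction=0.2, seed=0):
    """Shuffle a copy of the examples and hold out a fraction for testing."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def normalize(text):
    """Lowercase and collapse whitespace so trivial differences don't count."""
    return " ".join(text.lower().split())


def exact_match_accuracy(predict, test_set):
    """Fraction of test questions whose normalized prediction equals the reference."""
    hits = sum(normalize(predict(q)) == normalize(a) for q, a in test_set)
    return hits / len(test_set)


test_set = [
    ("What does kWh stand for?", "kilowatt-hour"),
    ("What is the SI unit of electrical power?", "watt"),
]
# Hypothetical model outputs: the first answer is right, the second is wrong.
fake_model = {
    "What does kWh stand for?": "Kilowatt-hour",
    "What is the SI unit of electrical power?": "volt",
}
print(exact_match_accuracy(fake_model.get, test_set))  # → 0.5
```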

9. Iterative Improvement

Based on the evaluation, further adjustments might be made. This could involve additional training, tweaking the model architecture, or refining the dataset until the model achieves the desired performance.

10. Deployment

Once fine-tuned and tested, the model is deployed for practical use.

11. Continuous Learning and Updating

In some applications, the LLM may continue to learn from new data encountered during its operation, requiring mechanisms to incorporate this data without drifting away from its core expertise.

This process involves a combination of machine learning expertise, domain knowledge, computational resources, and careful planning and execution. The end result is an LLM that is not just a jack-of-all-trades in language understanding but a master in specific, targeted applications.

How do I know if I need LLM Fine-tuning?

Determining if you need to fine-tune a Large Language Model (LLM) for your application requires evaluating key factors:

Specialized knowledge requirements

If your application demands expertise in a specialized field (e.g., legal, medical, technical) with specific terminologies and contexts, fine-tuning is essential. General LLMs may lack the depth and nuanced understanding required for these areas.

Accuracy and precision needs and risks

Assess the need for accuracy and precision. In areas where errors can lead to significant consequences (such as medical diagnosis or financial forecasting), a fine-tuned LLM is more dependable.

Unique Language Styles and Formats

If your application involves distinct linguistic styles (like creative writing) or specific formats (such as legal contracts), and effective text generation or interpretation in these styles is crucial, fine-tuning is advantageous.

Language and Cultural Nuances

For targeting specific geographic regions or cultural groups, understanding local dialects, idioms, and cultural nuances is vital. A general LLM may struggle with these nuances without fine-tuning.

Benchmark Performance

Evaluate a standard LLM’s performance on your tasks. If its understanding or output quality falls short of your requirements, fine-tuning with more relevant data can enhance its performance.

User Experience Expectations

Consider user experience requirements. If users anticipate highly tailored, context-aware interactions (as in personalized chatbots or recommendation systems), a fine-tuned LLM can provide a more satisfying experience.

Competitive Advantage

If a more accurate, efficient, and specialized LLM offers a competitive edge in your field, fine-tuning is a valuable investment.

Success factor questions for Fine-tuning and AI deployment

Specific Objectives

What specialized tasks or domain-specific needs must the LLM address?

Good Data

Do you have access to a sufficient volume of high-quality, relevant data?

Compliance, Ethics, and Risk Management

Is your data privacy-compliant and free of biases? Do you risk intellectual property violations? Identify technical, ethical, and operational risks.

Performance Management

What are your key performance indicators? Accuracy, speed, user satisfaction, etc.

System Integration

How will the LLM fit into your current technological setup?

Computational Resources

Do you have the necessary computational power?

Skilled Human Resources

Identify human resources and skills required.

Ongoing Maintenance

How will you maintain and update the model based on changing data and circumstances?

Timelines

What is your timeline for fine-tuning and deployment? How will this intersect with other timelines impacted by the LLM project?

User Experience Feedback

How will you gather feedback for LLM improvement as a part of overall AI effectiveness and adoption?

Budget

What end-to-end budget is required, from fine-tuning through deployment and ongoing optimization?

What are the Various Types of Fine-Tuning an LLM?

Fine-tuning Large Language Models (LLMs) can be approached in various ways, each with its own methodology and specific use cases. These techniques range from full model tuning to more parameter-efficient methods. Here’s an overview of the primary types:

Full Model and Parameter-Efficient LLM Fine-Tuning

Full Model fine-tuning

- Updates every parameter of the LLM.

- Useful when the new data’s domain greatly diverges from the model’s initial training data.

- Demands significant computational power and time due to retraining the entire model.

Parameter-efficient fine-tuning

- Updates only a small fraction of an LLM’s parameters, or a small number of newly added ones.

- More suitable for situations with constrained computational resources or when rapid adaptation is essential.

- Because most of the original parameters remain unchanged, it reduces overfitting risk while preserving the expansive knowledge gained in pre-training.
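To see why parameter-efficient methods are cheaper than full fine-tuning, compare trainable parameter counts for a single weight matrix. This sketch uses LoRA (low-rank adaptation), a widely used parameter-efficient method not named in this article, which freezes a d_out × d_in matrix W and trains a low-rank update BA, with B of shape d_out × r and A of shape r × d_in. The hidden size of 4096 and rank of 8 are assumed values.

```python
def full_params(d_out, d_in):
    """Trainable parameters when a d_out x d_in weight matrix is fully fine-tuned."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters for a LoRA update B @ A,
    where B is d_out x rank and A is rank x d_in."""
    return rank * (d_out + d_in)


d = 4096  # hypothetical hidden size of one square projection matrix
full = full_params(d, d)
lora = lora_params(d, d, rank=8)
print(full, lora, f"{lora / full:.2%}")  # → 16777216 65536 0.39%
```

The saving repeats for every adapted matrix in the model, which is why parameter-efficient methods fit on much smaller hardware budgets.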

Transfer learning

- Transfer learning utilizes the knowledge an LLM has gained during pre-training and applies it to a new, but related task.

- Often involves some retraining or fine-tuning using a smaller, task-specific dataset.

Prompt Tuning or Prompt Engineering

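- Instead of changing the model’s weights, the input is adjusted to guide the model toward desired outputs. Prompt engineering crafts the text prompt by hand, while prompt tuning learns a small set of trainable ‘soft prompt’ embeddings that are prepended to the input.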

- Useful for tasks where the goal can be achieved by cleverly designing the input prompts, without the need for extensive retraining.
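As an illustration of prompt engineering (not prompt tuning, which learns soft-prompt embeddings), this hypothetical helper assembles a few-shot prompt from labeled examples. The task wording, examples, and `Input:`/`Output:` layout are assumptions; the resulting string would be sent to an unmodified model.

```python
def build_few_shot_prompt(task, examples, query):
    """Assemble a plain-text few-shot prompt; no model weights are touched."""
    lines = [task, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    # End with the new query and an empty output slot for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each customer message as positive or negative.",
    [
        ("The new meter was installed quickly.", "positive"),
        ("My bill doubled with no explanation.", "negative"),
    ],
    "Support resolved my outage in minutes.",
)
print(prompt)
```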

Adapter Tuning

- Adds small, trainable layers or ‘adapters’ within the existing model architecture while keeping the original parameters frozen.

- Strikes a balance between maintaining the general knowledge of the LLM and adapting it to specific tasks with less computational cost.
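A minimal sketch of one common adapter design, a bottleneck adapter with a residual connection, in plain Python. Dimensions and weights are hypothetical. Initializing the up-projection to zeros, one common choice, makes the adapter pass the frozen layer’s output through unchanged at the start of training.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def relu(vector):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in vector]


def adapter(h, w_down, w_up):
    """Bottleneck adapter with residual connection: h + W_up · ReLU(W_down · h).
    Only w_down and w_up would be trained; the surrounding model stays frozen."""
    delta = matvec(w_up, relu(matvec(w_down, h)))
    return [x + d for x, d in zip(h, delta)]


hidden = [0.5, -1.0, 2.0, 0.0]              # output of a frozen layer (d = 4)
w_down = [[0.1, 0.2, 0.3, 0.4],             # 4 -> 2 projection (trainable)
          [0.4, 0.3, 0.2, 0.1]]
w_up_zero = [[0.0, 0.0] for _ in range(4)]  # 2 -> 4 projection, zero-initialized
print(adapter(hidden, w_down, w_up_zero))   # → [0.5, -1.0, 2.0, 0.0]
```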

Layer Freezing

- Only a subset of the layers (usually the top layers, closest to the output) is fine-tuned, while the rest are kept frozen.

- Beneficial for tasks that require some specialization but also need to retain the broad understanding captured in the lower layers of the model.
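Layer freezing can be sketched as a per-layer trainable flag that the update step respects. The toy model, gradients, and learning rate are hypothetical, and layer index 0 is assumed to be the input side, so the “top” layers have the highest indices.

```python
def freeze_lower_layers(num_layers, num_trainable):
    """Return a trainable flag per layer: only the top `num_trainable` layers train."""
    return [i >= num_layers - num_trainable for i in range(num_layers)]


def apply_update(layer_weights, layer_grads, trainable, lr=0.1):
    """Gradient step that leaves frozen layers untouched."""
    updated = []
    for weights, grads, train in zip(layer_weights, layer_grads, trainable):
        if train:
            weights = [w - lr * g for w, g in zip(weights, grads)]
        updated.append(weights)
    return updated


trainable = freeze_lower_layers(num_layers=4, num_trainable=1)
weights = [[1.0, 1.0] for _ in range(4)]  # toy 4-layer model, 2 weights per layer
grads = [[0.5, -0.5] for _ in range(4)]
print(trainable)  # → [False, False, False, True]
print(apply_update(weights, grads, trainable))
```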

Sparse Fine-Tuning

- A small, selected set of parameters is updated during the fine-tuning process.

- Computationally efficient and reduces the risk of overfitting, especially useful in resource-constrained environments.
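One way to sketch sparse fine-tuning is to choose a small mask of parameters and update only those. Selecting the k entries with the largest gradient magnitude is an assumed heuristic for illustration; sparse methods differ in how they pick the parameters to update.

```python
def top_k_mask(grads, k):
    """Select the k parameters with the largest gradient magnitude."""
    ranked = sorted(range(len(grads)), key=lambda i: abs(grads[i]), reverse=True)
    chosen = set(ranked[:k])
    return [i in chosen for i in range(len(grads))]


def sparse_update(params, grads, mask, lr=0.1):
    """Update only the masked parameters; all others keep their pre-trained values."""
    return [p - lr * g if m else p for p, g, m in zip(params, grads, mask)]


params = [0.2, -0.4, 1.0, 0.7, -0.1]  # hypothetical pre-trained values
grads = [0.01, 0.9, -0.05, -0.6, 0.02]
mask = top_k_mask(grads, k=2)
print(mask)  # → [False, True, False, True, False]
print(sparse_update(params, grads, mask))
```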

Domain-Adaptive Fine-Tuning

- The model is specifically fine-tuned on a dataset that is representative of the domain of interest but not necessarily task-specific.

- Aimed at making the model more proficient in the language and nuances of a particular field.

Task-Adaptive Fine-Tuning

- Fine-tunes the LLM on a dataset specifically designed for a particular task.

- This approach is highly targeted and can yield excellent performance on the task it is fine-tuned for.

Self-Supervised Learning

- Trains the LLM on a dataset where the learning targets are generated from the data itself.

- Doesn’t rely on external labels but instead finds patterns directly from the input data (like predicting the next word in a sentence).

- Especially powerful for understanding unstructured data like text and images.
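The next-word example above can be made concrete: self-supervised training pairs each prefix of a text with the token that follows it, so the labels come from the text itself. Splitting on whitespace is a simplifying assumption in place of a real tokenizer.

```python
def next_token_examples(tokens):
    """Build (context, target) pairs where the target is simply the next token.
    No human annotation is needed: the text supplies its own labels."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


tokens = "the meter reads zero".split()  # whitespace split stands in for a tokenizer
for context, target in next_token_examples(tokens):
    print(context, "->", target)
```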

Supervised Learning

- The LLM learns from labeled datasets, meaning each input data point is paired with an explicit output label.

- The model’s task is to learn the mapping from inputs to outputs, making it suitable for classification and regression tasks.

- Performance heavily depends on the quality and size of the labeled dataset.

Reinforcement Learning

- Trains LLMs through a system of rewards and penalties.

- The model, often called an agent, learns to make decisions by performing actions in an environment to maximize some notion of cumulative reward.

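- Typically used in scenarios where an agent must make a sequence of decisions, like in robotics or game playing, learning from trial and error. For LLMs, this usually takes the form of reinforcement learning from human feedback (RLHF), in which a reward model trained on human preference rankings scores the model’s responses.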
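A toy sketch of learning from rewards and penalties: a softmax policy over three fixed candidate responses is trained with REINFORCE, a basic policy-gradient algorithm. The candidates and the reward rule are hypothetical. Production RLHF instead scores freely generated text with a learned reward model and typically uses algorithms such as PPO.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(candidates, reward_fn, steps=500, lr=0.5, seed=0):
    """Learn a softmax policy over fixed candidate responses with REINFORCE.
    For a sampled action a, d log pi(a) / d logit_j = 1[j == a] - pi_j."""
    rng = random.Random(seed)
    logits = [0.0] * len(candidates)
    for _ in range(steps):
        probs = softmax(logits)
        a = rng.choices(range(len(candidates)), weights=probs)[0]
        reward = reward_fn(candidates[a])
        for j in range(len(logits)):
            indicator = 1.0 if j == a else 0.0
            logits[j] += lr * reward * (indicator - probs[j])
    return softmax(logits)


candidates = [
    "Please restart the device.",
    "Hold the power button for 10 seconds, then update to the latest firmware.",
    "I don't know.",
]


def reward_fn(text):
    """Hypothetical reward: +1 for the specific, actionable answer, -1 otherwise."""
    return 1.0 if "firmware" in text else -1.0


final_probs = reinforce(candidates, reward_fn)
print([round(p, 3) for p in final_probs])
```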

Fine-tuning Drives Innovation Forward

This introduction to fine-tuning Large Language Models underscored its vital role in transforming general AI systems into more accurate and precise virtual experts, able to successfully support business and user outcomes.

In essence, fine-tuning an LLM isn’t merely about refining an AI tool; it’s about crafting a bespoke solution that resonates with specific needs, augments human expertise, and drives innovation forward in a world increasingly reliant on AI-driven solutions.