Scientists in China have designed a tiny, modular chip that is powered by light rather than electricity — and they want to use it to train and run a future artificial general intelligence (AGI) model.

The new chiplet, called “Taichi,” is one small piece of a wider jigsaw: many individual chiplets, including Taichi modules, that together could form a sophisticated and powerful computing system. If scaled up sufficiently, such a system would be powerful enough to train and run an AGI in the future, the scientists argued in their paper, published April 11 in the journal Science.

AGI is a hypothetical advanced form of artificial intelligence (AI) that would, in theory, be just as smart as humans in terms of its cognitive reasoning abilities. AGI could be applied across many disciplines, whereas today’s AI systems are built for narrow, specific tasks.

Some experts believe such systems are many years away, with limited computing power a key bottleneck, while others believe we could build an AGI agent as soon as 2027.

In recent years, scientists have begun to run up against the limits of conventional electronic components, especially given the growth of AI and the sheer amount of power needed to run these increasingly demanding systems.

Graphics processing units (GPUs) have emerged as key components in training AI systems because they are better at performing parallel calculations than central processing units (CPUs). But the energy these systems consume is becoming unsustainable as they grow larger, the scientists argued.

Light-based components could be one way to overcome the limitations of conventional electronics — including the energy efficiency problems.

Looking to the light for superhuman AI

Scientists previously outlined the design for a new type of photonic microchip in February, which uses photons, or particles of light, rather than electrons to carry and process information. Conventional chips compute with transistors, tiny electrical switches that turn on or off when voltage is applied; generally speaking, the more transistors a chip has, the more computing power it has and the more power it requires to operate. Light-based chips can be far less energy-intensive, and because they can perform many calculations in parallel, they can also be much faster than traditional chips.

Current photonic chip architectures for AI models support hundreds or thousands of parameters, the internal values a model adjusts during training. That makes them powerful enough for basic tasks like pattern recognition, but large language models (LLMs) like ChatGPT contain billions or even trillions of parameters.

An AGI agent would likely require many orders of magnitude more, as part of a broader network of AI architectures. Today, no blueprint for building an AGI system exists.
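To give a sense of how quickly parameter counts grow, the short sketch below counts the trainable values in a single fully connected neural-network layer, where every output is linked to every input by its own weight. This is a generic illustration, not a description of how Taichi or any particular LLM is built; the function name and the layer sizes are hypothetical.

```python
def dense_layer_params(n_inputs: int, n_outputs: int, bias: bool = True) -> int:
    """Count the trainable parameters of one fully connected layer.

    Each output has one weight per input, plus an optional bias term.
    (Hypothetical helper, for illustration only.)
    """
    weights = n_inputs * n_outputs
    biases = n_outputs if bias else 0
    return weights + biases


# Hypothetical layer sizes chosen purely for illustration.
small = dense_layer_params(64, 10)       # a tiny pattern-recognition layer
large = dense_layer_params(4096, 4096)   # one square layer of a much larger model

print(f"small layer: {small:,} parameters")
print(f"large layer: {large:,} parameters")
```

Because the weight count grows with the product of a layer's input and output sizes, a single wide layer already holds millions of parameters, and stacking many such layers is how large models reach the billions.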

In the new study, the scientists designed Taichi to work the same way as other light-based chips, but it can be scaled much better than competing designs, they said in their paper. This is because it combines the strengths of existing photonic chip approaches, including “optical diffraction and interference,” two ways of manipulating light as it travels through the component.

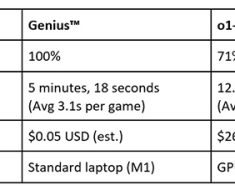

To test the design, the researchers stitched together several Taichi chiplets and compared their architecture with other light-based chips in key areas.

Their architecture achieved a network scale of 13.96 million artificial neurons, compared with 1.47 million in the next biggest competing design, and an energy efficiency of 160.82 trillion operations per second per watt (TOPS/W). The next best result they highlighted in their paper came from research published in 2022, in which a photonic chip achieved 2.9 TOPS/W. Many conventional neural processing units (NPUs) and other chips achieve well under 10 TOPS/W.
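The reported figures can be put side by side with some back-of-the-envelope arithmetic. This sketch assumes TOPS/W carries its standard meaning of tera-operations per second per watt; since a watt is one joule per second, that is the same as trillions of operations per joule, so inverting it gives the energy spent on each operation. The constant and function names are our own, and the numbers are simply the ones quoted above.

```python
# Figures as reported above (names are our own, for illustration).
TAICHI_NEURONS = 13.96e6
NEXT_BEST_NEURONS = 1.47e6
TAICHI_TOPS_PER_W = 160.82
PRIOR_PHOTONIC_TOPS_PER_W = 2.9  # the 2022 photonic chip cited in the paper


def energy_per_op_joules(tops_per_watt: float) -> float:
    """Energy per operation implied by a TOPS/W figure.

    1 TOPS/W = 1e12 operations per second per watt = 1e12 operations per joule,
    so each operation costs 1 / (TOPS/W * 1e12) joules.
    """
    return 1.0 / (tops_per_watt * 1e12)


scale_ratio = TAICHI_NEURONS / NEXT_BEST_NEURONS
efficiency_ratio = TAICHI_TOPS_PER_W / PRIOR_PHOTONIC_TOPS_PER_W

# 1 femtojoule (fJ) = 1e-15 joules
taichi_fj = energy_per_op_joules(TAICHI_TOPS_PER_W) * 1e15
prior_fj = energy_per_op_joules(PRIOR_PHOTONIC_TOPS_PER_W) * 1e15

print(f"Network scale: {scale_ratio:.1f}x the next biggest design")
print(f"Efficiency: {efficiency_ratio:.1f}x the 2022 photonic chip")
print(f"Energy per operation: {taichi_fj:.2f} fJ (Taichi) vs {prior_fj:.0f} fJ (2022 chip)")
```

On these reported numbers, Taichi spends a few femtojoules per operation, roughly 55 times less than the 2022 photonic chip.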

The researchers also claimed that their Taichi-based architecture is twice as powerful as other photonic systems, but they did not specify which systems they were comparing against. In tests, meanwhile, they used the distributed Taichi network to perform tasks including image classification and image content generation, as a proof of concept rather than to benchmark performance.

“Taichi indicates the great potential of on-chip photonic computing for processing a variety of complex tasks with large network models, which enables real-life applications of optical computing,” the scientists said. “We anticipate that Taichi will accelerate the development of more powerful optical solutions as critical support for the foundation model and a new era of AGI.”