@inproceedings{chen-etal-2023-beyond,

title = "Beyond Factuality: A Comprehensive Evaluation of Large Language Models as Knowledge Generators",

author = "Chen, Liang and

Deng, Yang and

Bian, Yatao and

Qin, Zeyu and

Wu, Bingzhe and

Chua, Tat-Seng and

Wong, Kam-Fai",

editor = "Bouamor, Houda and

Pino, Juan and

Bali, Kalika",

booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2023",

address = "Singapore",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.emnlp-main.390",

doi = "10.18653/v1/2023.emnlp-main.390",

pages = "6325--6341",

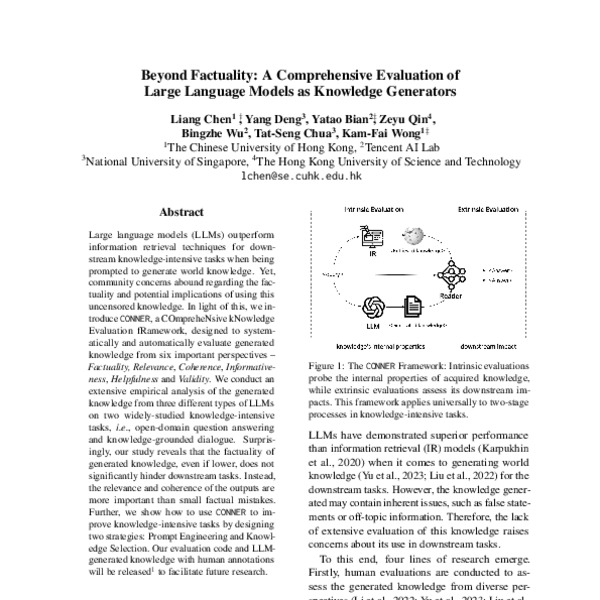

abstract = "Large language models (LLMs) outperform information retrieval techniques for downstream knowledge-intensive tasks when being prompted to generate world knowledge. However, community concerns abound regarding the factuality and potential implications of using this uncensored knowledge. In light of this, we introduce CONNER, a COmpreheNsive kNowledge Evaluation fRamework, designed to systematically and automatically evaluate generated knowledge from six important perspectives {--} Factuality, Relevance, Coherence, Informativeness, Helpfulness and Validity. We conduct an extensive empirical analysis of the generated knowledge from three different types of LLMs on two widely studied knowledge-intensive tasks, i.e., open-domain question answering and knowledge-grounded dialogue. Surprisingly, our study reveals that the factuality of generated knowledge, even if lower, does not significantly hinder downstream tasks. Instead, the relevance and coherence of the outputs are more important than small factual mistakes. Further, we show how to use CONNER to improve knowledge-intensive tasks by designing two strategies: Prompt Engineering and Knowledge Selection. Our evaluation code and LLM-generated knowledge with human annotations will be released to facilitate future research.",

}

<?xml version="1.0" encoding="UTF-8"?>

<modsCollection xmlns="http://www.loc.gov/mods/v3">

<mods ID="chen-etal-2023-beyond">

<titleInfo>

<title>Beyond Factuality: A Comprehensive Evaluation of Large Language Models as Knowledge Generators</title>

</titleInfo>

<name type="personal">

<namePart type="given">Liang</namePart>

<namePart type="family">Chen</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Yang</namePart>

<namePart type="family">Deng</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Yatao</namePart>

<namePart type="family">Bian</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Zeyu</namePart>

<namePart type="family">Qin</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Bingzhe</namePart>

<namePart type="family">Wu</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Tat-Seng</namePart>

<namePart type="family">Chua</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Kam-Fai</namePart>

<namePart type="family">Wong</namePart>

<role>

<roleTerm authority="marcrelator" type="text">author</roleTerm>

</role>

</name>

<originInfo>

<dateIssued>2023-12</dateIssued>

</originInfo>

<typeOfResource>text</typeOfResource>

<relatedItem type="host">

<titleInfo>

<title>Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing</title>

</titleInfo>

<name type="personal">

<namePart type="given">Houda</namePart>

<namePart type="family">Bouamor</namePart>

<role>

<roleTerm authority="marcrelator" type="text">editor</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Juan</namePart>

<namePart type="family">Pino</namePart>

<role>

<roleTerm authority="marcrelator" type="text">editor</roleTerm>

</role>

</name>

<name type="personal">

<namePart type="given">Kalika</namePart>

<namePart type="family">Bali</namePart>

<role>

<roleTerm authority="marcrelator" type="text">editor</roleTerm>

</role>

</name>

<originInfo>

<publisher>Association for Computational Linguistics</publisher>

<place>

<placeTerm type="text">Singapore</placeTerm>

</place>

</originInfo>

<genre authority="marcgt">conference publication</genre>

</relatedItem>

<abstract>Large language models (LLMs) outperform information retrieval techniques for downstream knowledge-intensive tasks when being prompted to generate world knowledge. However, community concerns abound regarding the factuality and potential implications of using this uncensored knowledge. In light of this, we introduce CONNER, a COmpreheNsive kNowledge Evaluation fRamework, designed to systematically and automatically evaluate generated knowledge from six important perspectives – Factuality, Relevance, Coherence, Informativeness, Helpfulness and Validity. We conduct an extensive empirical analysis of the generated knowledge from three different types of LLMs on two widely studied knowledge-intensive tasks, i.e., open-domain question answering and knowledge-grounded dialogue. Surprisingly, our study reveals that the factuality of generated knowledge, even if lower, does not significantly hinder downstream tasks. Instead, the relevance and coherence of the outputs are more important than small factual mistakes. Further, we show how to use CONNER to improve knowledge-intensive tasks by designing two strategies: Prompt Engineering and Knowledge Selection. Our evaluation code and LLM-generated knowledge with human annotations will be released to facilitate future research.</abstract>

<identifier type="citekey">chen-etal-2023-beyond</identifier>

<identifier type="doi">10.18653/v1/2023.emnlp-main.390</identifier>

<location>

<url>https://aclanthology.org/2023.emnlp-main.390</url>

</location>

<part>

<date>2023-12</date>

<extent unit="page">

<start>6325</start>

<end>6341</end>

</extent>

</part>

</mods>

</modsCollection>

%0 Conference Proceedings %T Beyond Factuality: A Comprehensive Evaluation of Large Language Models as Knowledge Generators %A Chen, Liang %A Deng, Yang %A Bian, Yatao %A Qin, Zeyu %A Wu, Bingzhe %A Chua, Tat-Seng %A Wong, Kam-Fai %Y Bouamor, Houda %Y Pino, Juan %Y Bali, Kalika %S Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing %D 2023 %8 December %I Association for Computational Linguistics %C Singapore %F chen-etal-2023-beyond %X Large language models (LLMs) outperform information retrieval techniques for downstream knowledge-intensive tasks when being prompted to generate world knowledge. However, community concerns abound regarding the factuality and potential implications of using this uncensored knowledge. In light of this, we introduce CONNER, a COmpreheNsive kNowledge Evaluation fRamework, designed to systematically and automatically evaluate generated knowledge from six important perspectives – Factuality, Relevance, Coherence, Informativeness, Helpfulness and Validity. We conduct an extensive empirical analysis of the generated knowledge from three different types of LLMs on two widely studied knowledge-intensive tasks, i.e., open-domain question answering and knowledge-grounded dialogue. Surprisingly, our study reveals that the factuality of generated knowledge, even if lower, does not significantly hinder downstream tasks. Instead, the relevance and coherence of the outputs are more important than small factual mistakes. Further, we show how to use CONNER to improve knowledge-intensive tasks by designing two strategies: Prompt Engineering and Knowledge Selection. Our evaluation code and LLM-generated knowledge with human annotations will be released to facilitate future research. %R 10.18653/v1/2023.emnlp-main.390 %U https://aclanthology.org/2023.emnlp-main.390 %U https://doi.org/10.18653/v1/2023.emnlp-main.390 %P 6325-6341

Markdown (Informal)

[Beyond Factuality: A Comprehensive Evaluation of Large Language Models as Knowledge Generators](https://aclanthology.org/2023.emnlp-main.390) (Chen et al., EMNLP 2023)