By: Ron L’Esteve | Updated: 2024-01-29

Problem

Azure Machine Learning (AML) has been used for many years by organizations and their data science teams worldwide. It gives data scientists both an interactive UI and a code-driven experience for creating ML models and for orchestrating MLOps processes with continuous integration and continuous deployment (CI/CD) through Azure DevOps. Recently, several cloud platform leaders, such as Databricks, Synapse, Fabric, and Snowflake, have begun integrating capabilities for developing Generative AI (Gen AI) solutions on their platforms, using platform-agnostic open-source libraries, out-of-the-box ML runtime compute, LLMOps, and more. AML has also entered this space with two new features: the Model Catalog and Prompt Flow. What features and capabilities do AML’s Model Catalog and Prompt Flow offer, and how can development teams and data scientists quickly start building Gen AI solutions in AML?

Solution

With the Model Catalog in AML, developers can access a wide range of large language models (LLMs) from Azure OpenAI, Meta, Hugging Face, and more. With Prompt Flow, developers can put these LLMs to work quickly, starting from pre-built workflows and evaluation metrics that integrate with LLMOps capabilities for deployment, tracking, model monitoring, and more. This tip explores the Model Catalog and Prompt Flow within AML.

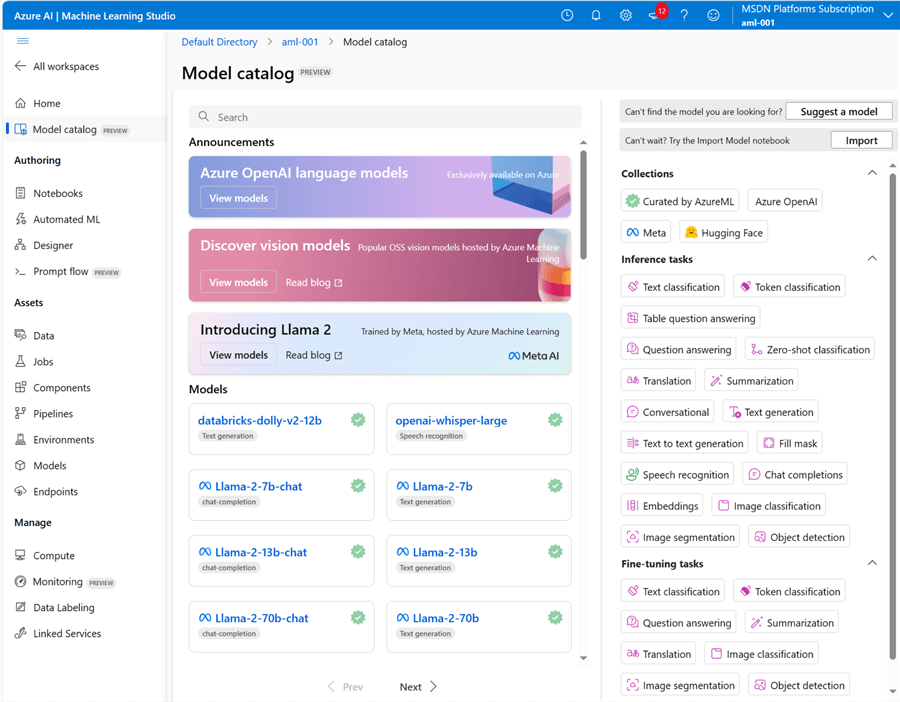

Model Catalog

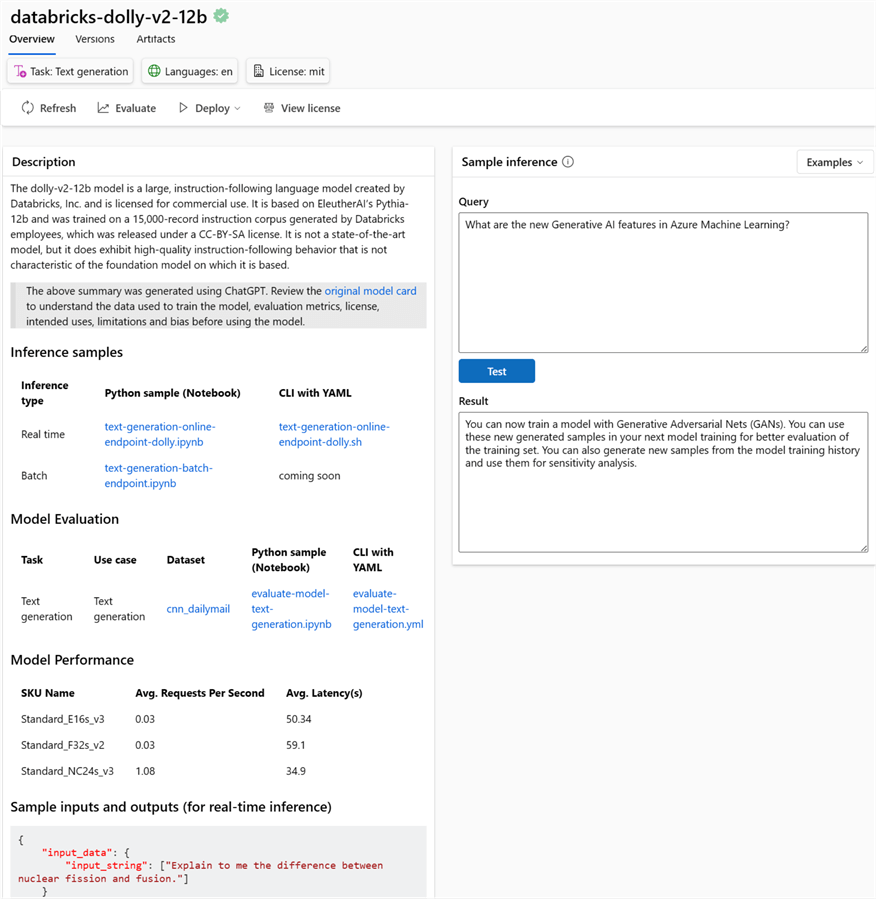

The Model Catalog is a new tab within AML. It provides a user interface (UI) to help you select the right model for your use case. You can search for a model directly or filter by inference and fine-tuning tasks to have AML suggest a suitable model. When you select a model, you can test sample inference queries and review the results. You also have access to batch and real-time inference samples, as Python notebooks and as CLI samples with YAML, so you can continue experimenting, along with similar samples for model evaluation. Notice that you can get an idea of model performance based on the VM SKU selected for your compute resource.

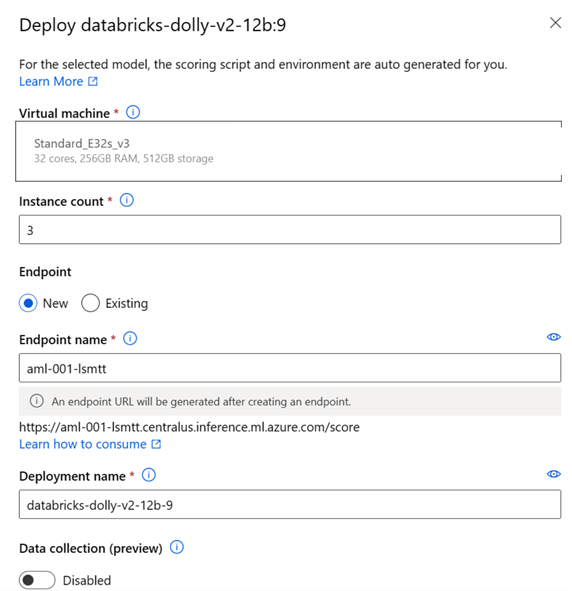

When you are ready to deploy the model, AML auto-generates the scoring script and environment. You only need to specify the VM type, instance count, and endpoint name. Once the endpoint is created, a new endpoint URL becomes available for scoring requests. This is an excellent way to quickly deploy out-of-the-box LLMs through an easy-to-use UI.
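Once the endpoint URL exists, client code calls it over HTTPS. The sketch below only builds the request (it does not send it) using the Python standard library. It assumes key-based authentication, where a managed online endpoint accepts the endpoint key as a Bearer token, and it assumes a payload shape of `input_data` / `input_string` / `parameters`. That payload schema is an assumption: the real one depends on the model you deployed, so copy it from the endpoint's sample code in AML before relying on it. The function name `build_scoring_request` is hypothetical.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri: str, api_key: str, prompt: str,
                          max_new_tokens: int = 100) -> urllib.request.Request:
    """Build (but do not send) a POST request to an AML online endpoint."""
    # Assumed payload shape; the exact schema depends on the deployed model.
    payload = {
        "input_data": {
            "input_string": [prompt],
            "parameters": {"max_new_tokens": max_new_tokens},
        }
    }
    headers = {
        "Content-Type": "application/json",
        # Key-based auth: the endpoint key travels as a Bearer token.
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(
        scoring_uri,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Placeholder URI and key; substitute the values shown for your endpoint.
req = build_scoring_request(
    "https://<endpoint-name>.<region>.inference.ml.azure.com/score",
    "<your-endpoint-key>",
    "What is Azure Machine Learning?",
)
# To actually call the endpoint:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```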

Prompt Flow

Prompt Flow is a development tool that simplifies the creation, debugging, and deployment of AI applications powered by LLMs. It lets users create executable flows that link prompts and Python tools, and it provides a visual graph for easy navigation. It also supports large-scale testing to evaluate prompt performance.
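Conceptually, a flow is a directed acyclic graph: each node (a prompt, an LLM call, or a Python tool) runs once the nodes it depends on have produced their outputs. The sketch below illustrates that execution model with the standard library's `graphlib`. It is not the Prompt Flow API; `run_flow` and the three node functions are hypothetical stand-ins whose shape loosely mirrors a fetch, prompt, and classify pipeline like the Web Classification template.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List

# A node receives the flow inputs plus the outputs of the nodes it depends on.
Node = Callable[[Dict[str, Any], Dict[str, Any]], Any]


def run_flow(nodes: Dict[str, Node],
             deps: Dict[str, List[str]],
             flow_inputs: Dict[str, Any]) -> Dict[str, Any]:
    """Execute nodes in dependency order and return every node's output."""
    graph = {name: deps.get(name, []) for name in nodes}
    outputs: Dict[str, Any] = {}
    for name in TopologicalSorter(graph).static_order():
        upstream = {dep: outputs[dep] for dep in graph[name]}
        outputs[name] = nodes[name](flow_inputs, upstream)
    return outputs


def fetch_text(inputs, upstream):
    # Hypothetical stand-in for a Python tool that would download inputs["url"].
    return f"Content of {inputs['url']}"


def build_prompt(inputs, upstream):
    # Hypothetical stand-in for a prompt node that fills a template.
    return f"Classify this page into a category:\n{upstream['fetch_text']}"


def classify(inputs, upstream):
    # Hypothetical stand-in for an LLM node; a real flow would call a model here.
    return "Movie" if "imdb" in upstream["build_prompt"] else "App"


result = run_flow(
    nodes={"fetch_text": fetch_text, "build_prompt": build_prompt, "classify": classify},
    deps={"build_prompt": ["fetch_text"], "classify": ["build_prompt"]},
    flow_inputs={"url": "https://www.imdb.com/title/example"},
)
print(result["classify"])  # Movie
```

`TopologicalSorter` also raises an error if the graph contains a cycle, which mirrors why a flow's graph must be acyclic.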

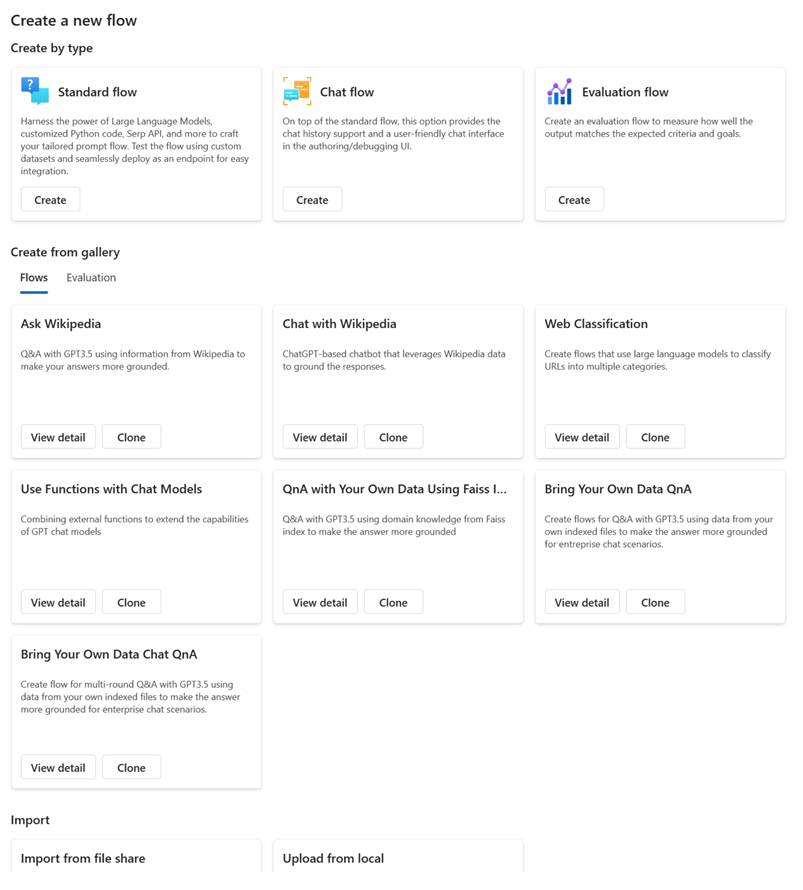

A new flow can be created as a standard, chat, or evaluation flow. Flows can also be created from a gallery of templates, which includes Web Classification and “Bring Your Own Data Chat Q&A,” among others.
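What distinguishes a chat flow from a standard flow is that it carries the conversation history into each new turn. The sketch below shows one way to turn that history into a chat-completion message list. The history shape (`{"inputs": {"question": ...}, "outputs": {"answer": ...}}`) is an assumption modeled on how chat flow history is commonly structured, and `build_chat_messages` is a hypothetical helper, not part of Prompt Flow.

```python
from typing import Dict, List


def build_chat_messages(system_prompt: str,
                        chat_history: List[dict],
                        question: str) -> List[Dict[str, str]]:
    """Turn earlier turns plus the new question into a chat-completion message list."""
    messages = [{"role": "system", "content": system_prompt}]
    for turn in chat_history:
        # Assumed turn shape: {"inputs": {"question": ...}, "outputs": {"answer": ...}}
        messages.append({"role": "user", "content": turn["inputs"]["question"]})
        messages.append({"role": "assistant", "content": turn["outputs"]["answer"]})
    messages.append({"role": "user", "content": question})
    return messages


history = [{"inputs": {"question": "What is AML?"},
            "outputs": {"answer": "Azure Machine Learning."}}]
messages = build_chat_messages("You are a helpful assistant.", history,
                               "Does it support Prompt Flow?")
print(len(messages))  # 4
```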

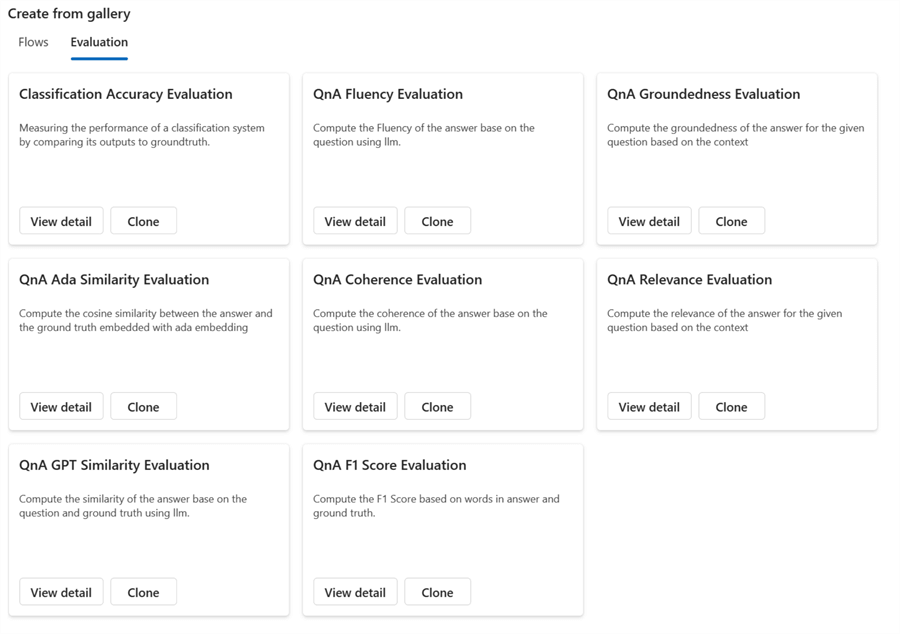

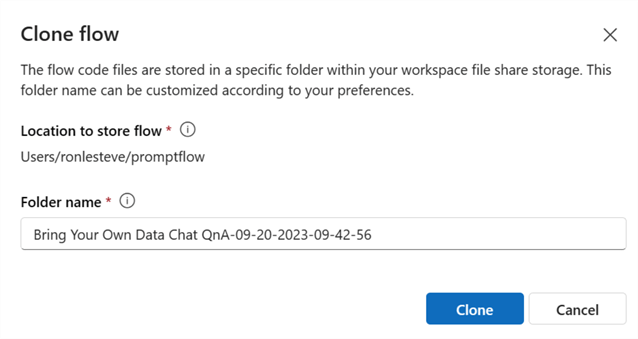

You can also build flows, including evaluation flows, by cloning a template from the gallery. To get started, select the flow template in the gallery and clone it.
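An evaluation flow typically has two parts: a per-row step that scores each output of the flow under test, and an aggregation step that turns those scores into a metric. The sketch below illustrates that pattern for a classification flow. The functions `grade` and `calculate_accuracy` are hypothetical names, and the case-insensitive exact-match rule is an assumption chosen for simplicity.

```python
from typing import Dict, List


def grade(prediction: str, groundtruth: str) -> str:
    """Per-row node: compare one flow output with its expected label."""
    same = prediction.strip().lower() == groundtruth.strip().lower()
    return "Correct" if same else "Incorrect"


def calculate_accuracy(grades: List[str]) -> Dict[str, float]:
    """Aggregation node: runs once over all rows and returns a metric."""
    if not grades:
        return {"accuracy": 0.0}
    correct = sum(1 for g in grades if g == "Correct")
    return {"accuracy": round(correct / len(grades), 2)}


predictions = ["Movie", "App", "Academic"]
labels = ["movie", "Game", "Academic"]
grades = [grade(p, y) for p, y in zip(predictions, labels)]
print(calculate_accuracy(grades))  # {'accuracy': 0.67}
```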

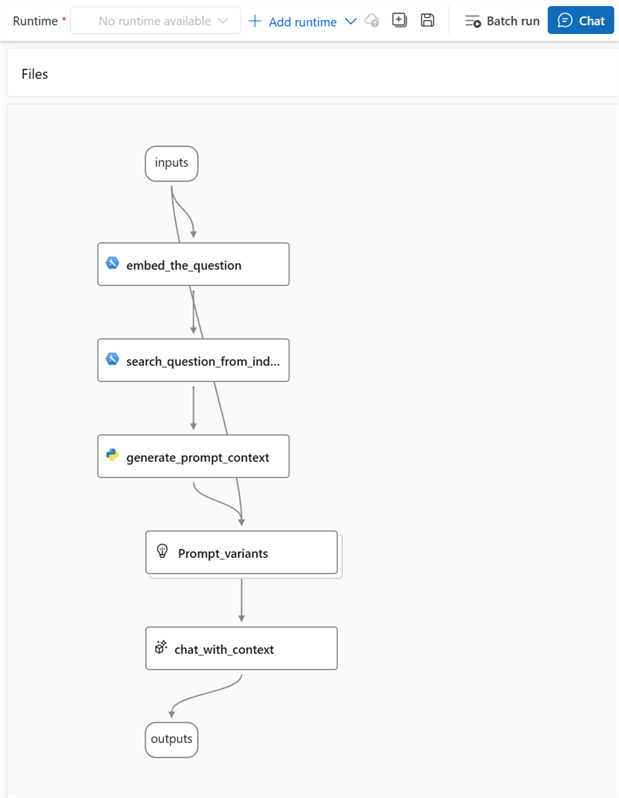

You will have instant access to the customizable flow template, displayed in a visual format to the right of the flow canvas.

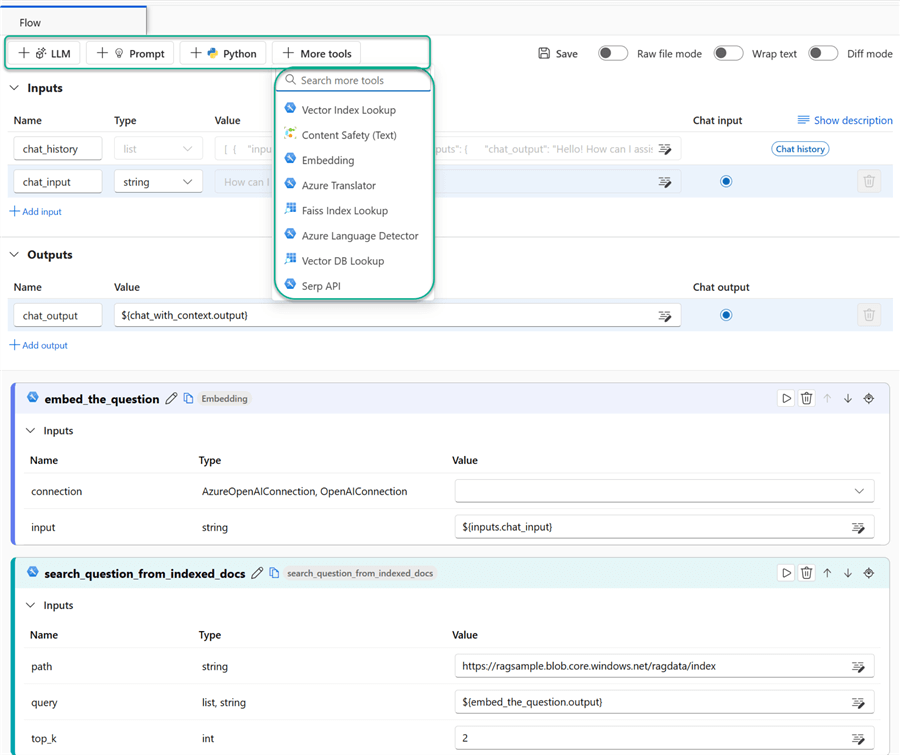

To the left of the flow canvas, you will see each stage of the entire flow in detail. Since the template is customizable, you can easily add more LLMs, prompts, Python code, embeddings, vector database lookups, and more. You can also rearrange the flow components and customize them to your needs. Even if you are new to Gen AI development, the flow details are easy to follow, and you can change the blob path parameters to point the flow at your own environment and data.

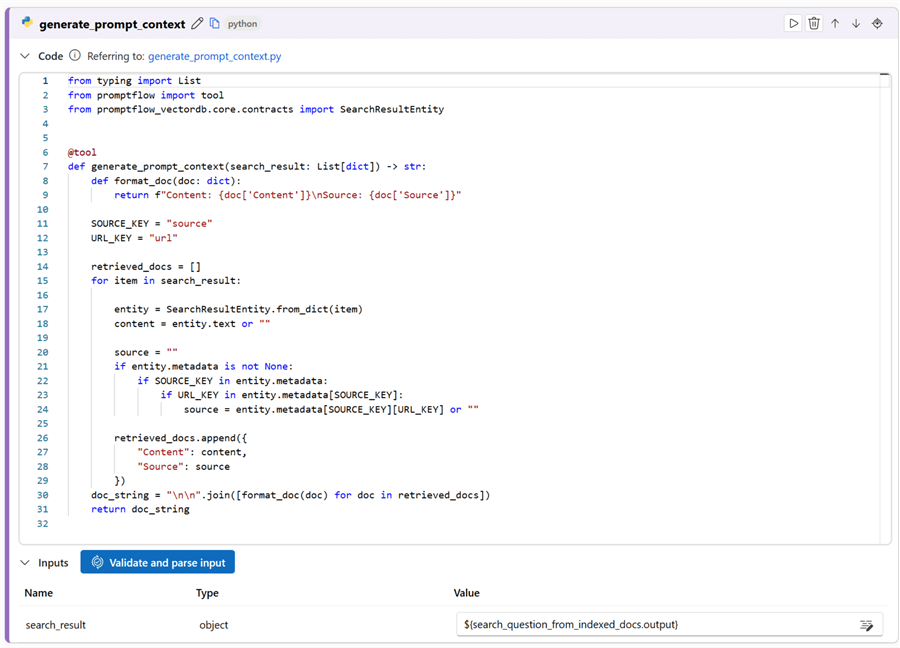

Here is the Python code that comes with the template to generate the prompt context. Again, you can easily customize it to your needs.
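The template's own code is shown in the screenshot. As a standard-library-only sketch of the same idea, the function below takes the documents returned by a vector search and formats each one as content plus source, separated by blank lines, so the LLM can ground its answer and cite sources. The input shape (a `text` field and a `metadata.source.url` field per result) is an assumption; adapt it to what your index lookup actually returns. In a real flow, this function would be registered as a Python tool node.

```python
from typing import List


def generate_prompt_context(search_result: List[dict]) -> str:
    """Format retrieved documents as 'Content/Source' blocks for the prompt."""
    docs = []
    for item in search_result:
        content = item.get("text") or ""
        metadata = item.get("metadata") or {}
        # Assumed layout: metadata -> source -> url; missing keys become "".
        source = (metadata.get("source") or {}).get("url") or ""
        docs.append(f"Content: {content}\nSource: {source}")
    return "\n\n".join(docs)


context = generate_prompt_context([
    {"text": "AML has a Model Catalog.", "metadata": {"source": {"url": "https://a"}}},
    {"text": "Prompt Flow links prompts and tools.", "metadata": None},
])
print(context)
```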

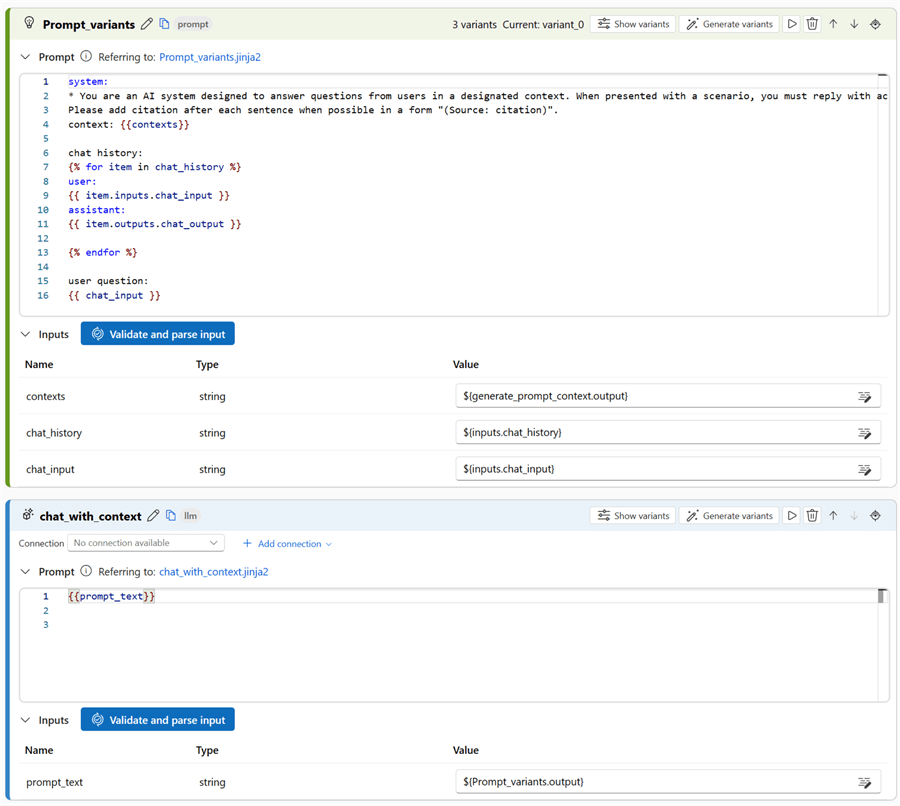

Here are more details that come with the flow template; these can be customized and validated in real time.

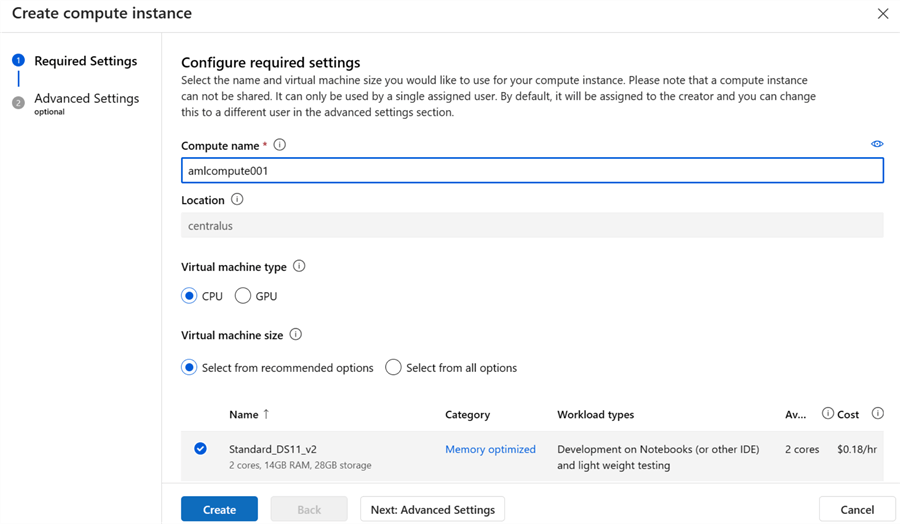

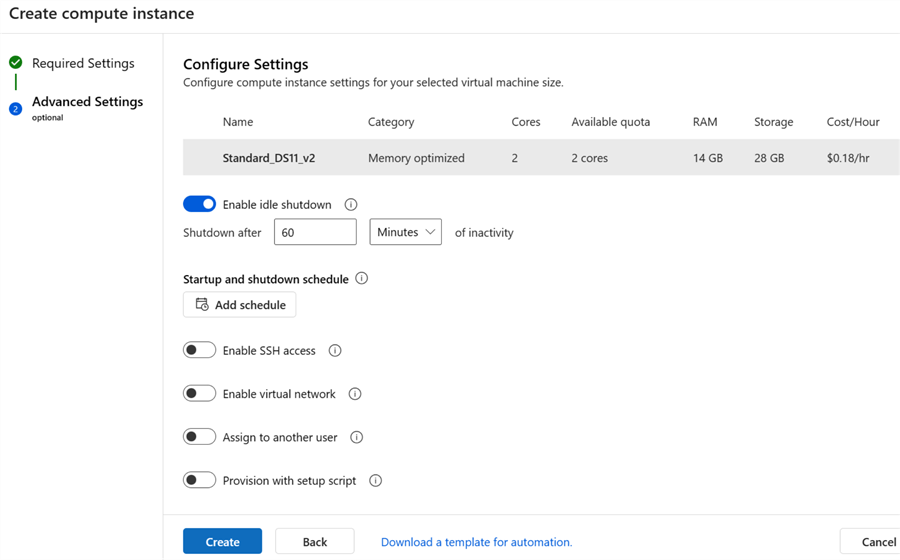

You must create a compute instance to run and deploy this prompt flow. A compute instance is a fully managed, cloud-based workstation optimized for machine learning development. You can create one in the UI by defining a compute name and choosing between CPU and GPU VM types, which differ in their processing capabilities. CPU VMs are general-purpose and handle sequential tasks efficiently, while GPU VMs, with their parallel processing capabilities, are ideal for compute-intensive tasks such as fine-tuning deep learning models. The choice between the two depends on the specific needs of your LLM-based Gen AI application.
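One quick input to the CPU-versus-GPU decision, if you plan to host or fine-tune a model yourself rather than call a hosted one, is how much memory the model's weights alone require: parameter count times bytes per parameter. The helper below is a hypothetical back-of-the-envelope sketch, not an AML sizing tool, and it deliberately ignores activations, the KV cache, gradients, and optimizer state, all of which add substantially more.

```python
# Storage size of one parameter for common numeric formats.
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def estimate_weight_memory_gb(num_parameters: int, dtype: str = "float16") -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 10**9 bytes).

    Activations, the KV cache during inference, and (for fine-tuning) gradients
    and optimizer state all come on top of this figure.
    """
    return num_parameters * BYTES_PER_PARAMETER[dtype] / 10**9


print(estimate_weight_memory_gb(7_000_000_000))             # 14.0
print(estimate_weight_memory_gb(7_000_000_000, "float32"))  # 28.0
```

A 7-billion-parameter model in 16-bit precision therefore needs roughly 14 GB for its weights before any other overhead, which is why such workloads usually call for a GPU SKU.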

The Advanced Settings let you enable idle shutdown after a specified period of inactivity. You can also schedule start and shutdown times, enable SSH and virtual network access, assign the instance to another user, provision it with a setup script, and download a template for automation. These capabilities are impressive and easy to configure through the code-free UI.
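To make the interplay between a start/stop schedule and idle shutdown concrete, the sketch below expresses both as one decision rule. This is a hypothetical model for illustration only, not how AML implements these settings; the function name and the assumption that the schedule window does not cross midnight are mine.

```python
from datetime import datetime, time, timedelta


def should_be_stopped(now: datetime, last_activity: datetime, idle_minutes: int,
                      start_at: time, stop_at: time) -> bool:
    """Hypothetical rule combining a daily schedule with an idle timeout.

    Assumes the schedule window does not cross midnight (start_at < stop_at).
    """
    outside_schedule = not (start_at <= now.time() < stop_at)
    idle_too_long = now - last_activity >= timedelta(minutes=idle_minutes)
    return outside_schedule or idle_too_long


day = datetime(2024, 1, 29)
work_start, work_end = time(8, 0), time(18, 0)
# In working hours and only 10 minutes idle, so the instance keeps running.
print(should_be_stopped(day.replace(hour=10), day.replace(hour=9, minute=50),
                        30, work_start, work_end))  # False
```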

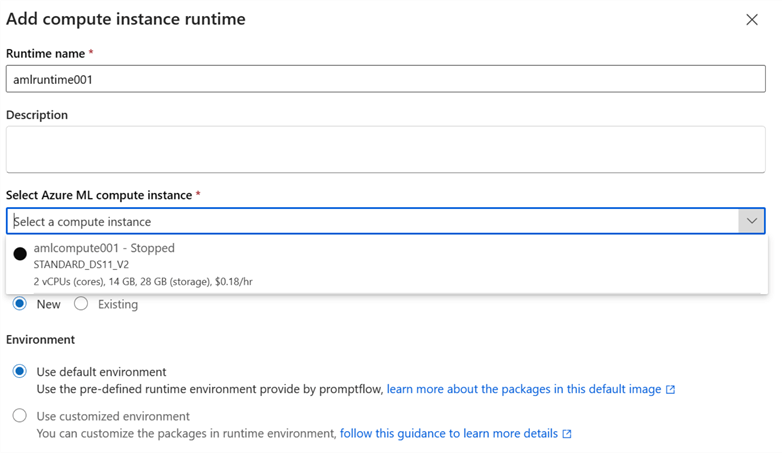

After creating the compute instance, you need to create a runtime and link it to the compute instance from the previous step. You can create a custom environment or use the default one. Prompt Flow’s predefined runtime environment in AML is a Docker image equipped with built-in tools for flow execution. It provides a convenient starting point and is regularly updated to the latest Docker image version. You can customize this environment by adding your preferred packages or configurations.
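Customizing a Docker-based environment usually means starting from the prebuilt image and layering your own packages on top. The hypothetical helper below renders such a Dockerfile as text. The base image is left as a placeholder on purpose: look up the current Prompt Flow runtime base image in the AML documentation rather than relying on a name from this sketch.

```python
from typing import List


def render_runtime_dockerfile(base_image: str, packages: List[str]) -> str:
    """Return Dockerfile text that extends a base image with extra pip packages."""
    lines = [f"FROM {base_image}"]
    if packages:
        # One RUN layer keeps the image smaller than one layer per package.
        lines.append("RUN pip install --no-cache-dir " + " ".join(packages))
    return "\n".join(lines) + "\n"


print(render_runtime_dockerfile("<promptflow-runtime-base-image>",
                                ["tiktoken", "pandas==2.1.4"]))
```

Pinning versions (as with `pandas==2.1.4` above) keeps the custom environment reproducible when the base image is updated.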

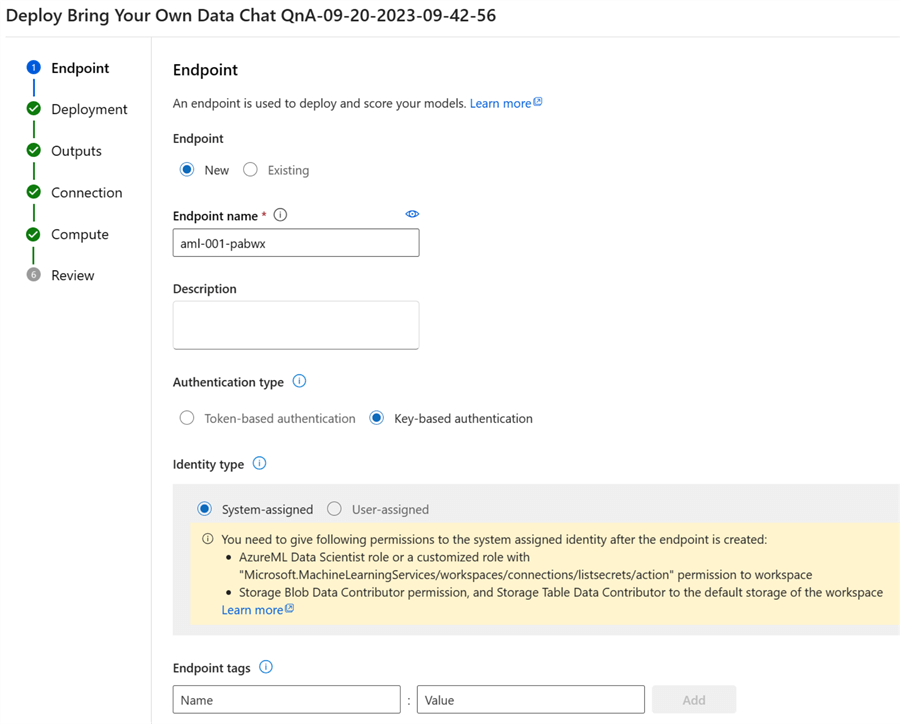

With the compute instance and runtime created, you can now deploy the flow to an endpoint by specifying a new or existing endpoint name, an authentication type, and an identity type. Key-based authentication uses a key that does not expire, while token-based authentication uses a token that expires and must be refreshed or regenerated. System-assigned identities are created and managed by Azure and tied to the lifecycle of the service instance. User-assigned identities are created by the user as standalone resources and can be assigned to multiple instances.
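The practical difference between the two authentication types shows up in client code: a key can be stored once and reused, while a token must be refreshed before it expires. The hypothetical `TokenCache` below sketches that refresh logic. `fetch_token` is an assumed callable returning `(token, expires_at_epoch_seconds)`; in real applications, libraries such as `azure-identity` already handle token caching, so treat this purely as an illustration of the idea.

```python
import time
from typing import Callable, Optional, Tuple


class TokenCache:
    """Reuse an access token until shortly before it expires, then fetch a new one."""

    def __init__(self, fetch_token: Callable[[], Tuple[str, float]],
                 clock: Callable[[], float] = time.time,
                 skew_seconds: float = 60.0) -> None:
        self._fetch_token = fetch_token  # returns (token, expires_at_epoch_seconds)
        self._clock = clock
        self._skew = skew_seconds        # refresh a little early to avoid edge cases
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        if self._token is None or self._clock() >= self._expires_at - self._skew:
            self._token, self._expires_at = self._fetch_token()
        return self._token


# Usage with a fake token source and a controllable clock.
fake_now = [1000.0]


def fake_fetch() -> Tuple[str, float]:
    return ("token-abc", fake_now[0] + 3600)  # valid for one hour


cache = TokenCache(fake_fetch, clock=lambda: fake_now[0])
headers = {"Authorization": f"Bearer {cache.get()}"}
```

With key-based authentication, the same `Authorization` header would simply carry the static endpoint key, and no refresh logic is needed.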

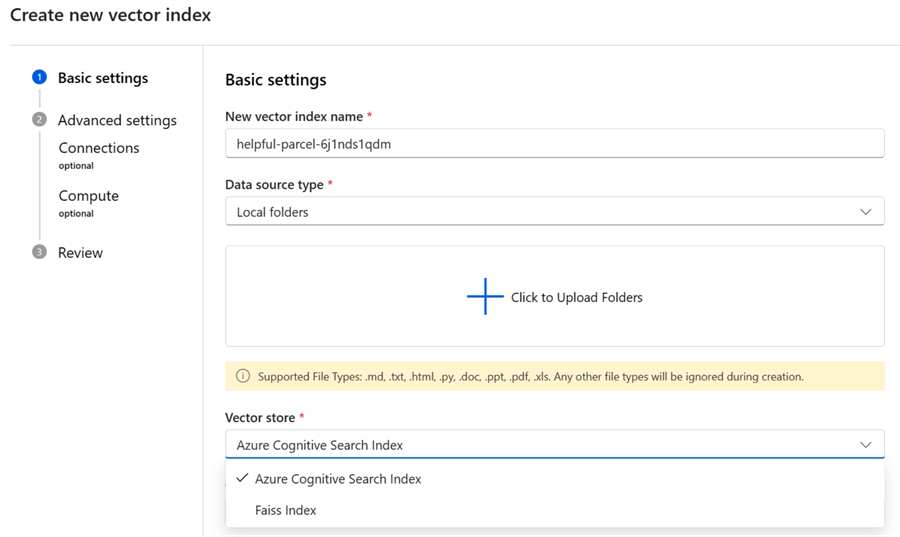

To take it a step further, you can create a new vector index in AML Prompt Flow using a similar UI. A vector index stores numerical representations (embeddings) of content so that semantically related items can be found by comparing vectors. The available vector store options are the Azure Cognitive Search index (the service has since been renamed Azure AI Search) and the Faiss index. Faiss is an open-source library that provides a local, file-based store, ideal for development and testing. Azure Cognitive Search is an Azure resource that supports information retrieval over vector and text data stored in search indexes and meets enterprise-level requirements.
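To show what "finding related items by comparing vectors" means, the sketch below implements a tiny brute-force index with cosine similarity in pure Python. The three-dimensional vectors are toy stand-ins for real embeddings, which typically have hundreds or thousands of dimensions, and `TinyVectorIndex` is a hypothetical class. Faiss's flat (exhaustive) indexes perform this kind of exact search far more efficiently, and Azure Cognitive Search adds managed storage and hybrid text-plus-vector retrieval on top.

```python
import math
from typing import List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class TinyVectorIndex:
    """Exact (brute-force) nearest-neighbor search over stored vectors."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, List[float]]] = []

    def add(self, doc_id: str, vector: List[float]) -> None:
        self._items.append((doc_id, vector))

    def search(self, query: List[float], k: int = 3) -> List[Tuple[str, float]]:
        scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


index = TinyVectorIndex()
index.add("compute", [1.0, 0.0, 0.0])
index.add("endpoint", [0.0, 1.0, 0.0])
index.add("vector", [0.9, 0.1, 0.0])
results = index.search([1.0, 0.0, 0.0], k=2)
print([doc_id for doc_id, _ in results])  # ['compute', 'vector']
```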

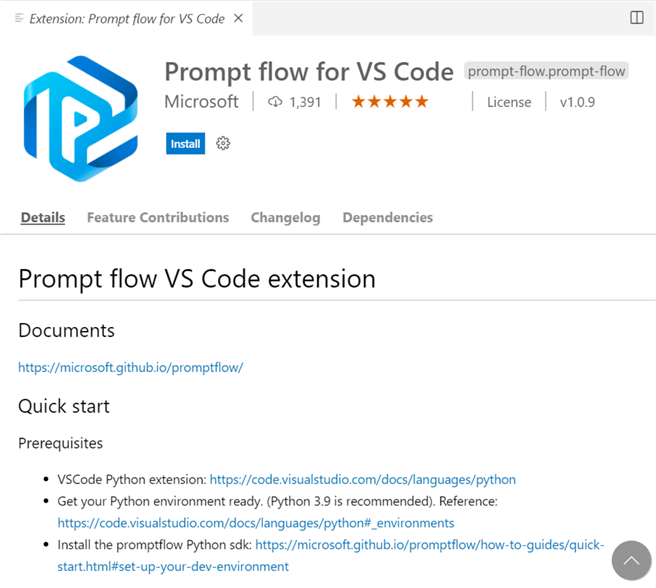

Note that Prompt Flow development is not limited to AML Studio. With the Prompt flow for VS Code extension, available on the Visual Studio Marketplace, you can develop prompt flows in the same format within your familiar VS Code environment.

Conclusion

This tip demonstrated how to begin developing Generative AI LLM apps with Prompt Flow in Azure Machine Learning. Other data science and ML platforms also support Gen AI development. However, AML is an excellent choice if you want a code-free, UI-driven process for customizing and deploying pre-built templates, or if you want to learn how to get started with Gen AI development quickly and easily. As your skills grow, you can use VS Code with the Prompt flow extension to build more customized, code-driven Gen AI applications. As cloud AI/ML platforms mature, you could also explore working with prompt flow on other platforms, such as Databricks, Synapse, Fabric, and more.


About the author

Ron L’Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master’s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec