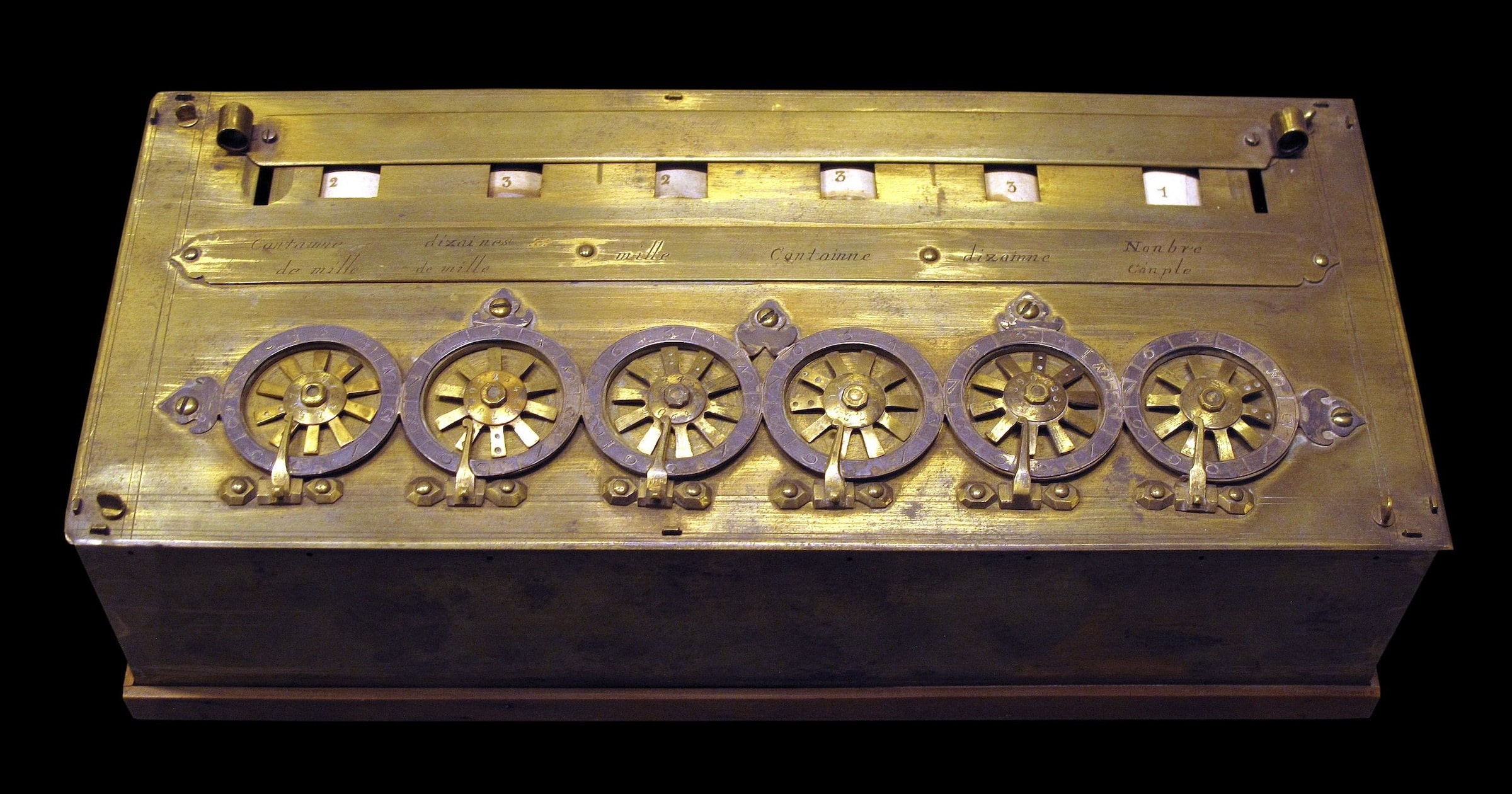

Can a created thing exceed its creator not just in performing specific tasks but in its very order of being? I’m considering that and related questions in a series at Evolution News. Blaise Pascal (1623–1662), for instance, created a calculating machine (pictured above) that could do simple arithmetic. This machine may have performed better than Pascal in some aspects of arithmetic. But no matter how efficiently it could do arithmetic, no one would claim that his calculating machine, primitive by our standards, exceeded Pascal in order of being.

Similarly, in a teacher-student relationship, it might be said that the teacher, by investing time and energy into a student, “created” the student. But this is merely a manner of speaking. We don’t think of teachers as literally creating their students in the sense that they made them to be everything they are. The student has a level of autonomy and brings certain talents to the teacher-student relationship that were not contributed by the teacher. In consequence, we are not surprised when some students do in fact surpass their teachers (as Isaac Newton did his teacher Isaac Barrow at Cambridge).

Three Main Instances

And yet, there are three main instances I can think of where the creation is said to exceed the creator in order of being. The first is Satan’s rebellion against God; the second is Darwinian evolution; and the third is the creation of Artificial General Intelligence (AGI).

Let’s look at these briefly, beginning with Satan. In the Old Testament, the word “Satan,” which in Hebrew means adversary or resister, appears in only a few places. Leaving aside the Book of Job, “Satan” appears only three times in the rest of the Old Testament. Yet other references to Satan do appear. The serpent in the Garden of Eden is widely interpreted as Satan. In Ezekiel 28, Satan is referred to as the King of Tyre, and in Isaiah 14, Satan is referred to as Lucifer (the Morning Star).

Biblical exegetes from the time of the Reformation onwards have tended to interpret the Ezekiel and Isaiah passages as referring to nefarious human agents (the King of Babylon in the case of Isaiah, the King of Tyre in the case of Ezekiel). But the language of exaltation in these passages is so grandiose that it is hard to square with mere human agency. Thus in Isaiah 14:13–14, Lucifer proclaims: “I will ascend to heaven; above the stars of God I will set my throne on high; I will sit on the mount of assembly in the far reaches of the north; I will ascend above the heights of the clouds; I will make myself like the Most High.” (ESV) Accordingly, church fathers such as Augustine, Ambrose, Origen, and Jerome interpreted these passages as referring to Satan. Satan has thus come to epitomize the creature trying to usurp the role of the creator.

Darwinian evolution provides another case where the creature is said to exceed the creator. According to Richard Dawkins, what makes Darwinian evolution such a neat theory is that it promises to explain the specified complexity of living things as a consequence of primordial simplicity. The lifeless world of physics and chemistry gets the ball rolling by producing the first life. And once that first life is here, through the joint action of natural selection, random variation, and heredity, life in all its tremendous diversity and complexity is said to appear, all without the aid of any guiding intelligence.

What comes out of Darwinian evolution is us — conscious intelligent living agents. We are the creation of a physical universe. It is our creator. This universe, on Darwinian principles, did not have us in mind and did not have us baked in. We represent the emergence of a new order of being. With the evolution of intelligence, we are now able to commandeer the evolutionary process. Thus, we find figures like Yuval Harari and Erika DeBenedictis exulting in how we are at a historical tipping point where we can intelligently design the evolutionary progress of our species (their view of intelligent design is quite different from common usage). This is heady stuff, and at its heart is the view that the creature (us) has now outstripped the creator (a formerly lifeless universe).

Finally, there’s AGI. The creator-creation distinction in this case is obvious. We create machines that exhibit AGI, and then these machines use their AGI to decouple from their creators (us), ultimately making themselves far superior to us. AGI is thus thought to begin with biological evolution (typically a Darwinian form of it) and then to transform itself into technological evolution, which can proceed with a speed and amplitude far beyond the capacity of biological evolution. That’s the promise, the hope, the hype. Nowhere outside of AGI is the language of creation exceeding the creator so evident. Indeed, the rhetoric of creature displacing creator is most extreme among AGI idolaters.

SciFi AI

In science fiction, themes appear that fly in the face of known, well-established physics. When we see such themes acted out in science fiction, we suspend disbelief. The underlying science may be nonsensical, but we go along with it because of the storyline. Yet when the theme of artificial intelligence appears in science fiction, we tend not to suspend disbelief. A full-orbed artificial intelligence that achieves consciousness and outstrips human intelligence — in other words, AGI — is now taken seriously as science — and not just as science fiction.

Is artificial intelligence at a tipping point, with AGI ready to appear in real time? Or is AGI more like many other themes of science fiction that make for a good story but nothing more? I’ll be arguing in this series that AGI will now and ever remain in the realm of science fiction and outside the realm of real science. Yet to grasp this limitation on artificial intelligence requires looking beyond physics. Scan the following list of physical implausibilities that appear in science fiction. Each is readily dismissed because it violates well-confirmed physics. To refute AGI, however, requires more than just saying that physics is against it.

1. Faster-than-Light Travel (FTL):

- Example: Star Trek series (warp speed)

- Physics Issue: According to Einstein’s theory of relativity, as an object with mass approaches the speed of light, the energy needed to accelerate it further grows without bound, so reaching light speed would require infinite energy.

2. Time Travel:

- Example: The Time Machine, by H. G. Wells

- Physics Issue: Time travel to the past violates causality (cause and effect) and could lead to paradoxes (like the grandfather paradox).

3. Teleportation:

- Example: Star Trek series (transporters)

- Physics Issue: Teleportation would require the exact duplication of an object’s quantum state, which is prohibited by the no-cloning theorem in quantum mechanics.

4. Wormholes for Space Travel:

- Example: Stargate series

- Physics Issue: While theoretically possible, wormholes would require negative energy or exotic matter to stay open, which are not known to exist in the required quantities.

5. Invisibility Cloaks:

- Example: Star Trek series (cloaking devices used by Romulan and Klingon starships)

- Physics Issue: To be truly invisible, an object must not interact with any electromagnetic or quantum field, which is not feasible given the current understanding of physics.

6. Anti-Gravity Devices:

- Example: Back to the Future series (hoverboards)

- Physics Issue: There’s no known method to negate or counteract gravity directly; current levitation methods use other forces to oppose gravity.

7. Faster-than-Light Communication:

- Example: “Ansible” device by Ursula K. Le Guin

- Physics Issue: Einsteinian relativity blocks anything from moving faster than the speed of light, and that includes communication signals, whatever form they might take.

8. Force Fields:

- Example: Dune, by Frank Herbert

- Physics Issue: Creating a barrier that can stop objects or energy without a physical medium contradicts our understanding of field interactions.

9. Artificial Gravity in Spacecraft:

- Example: 2001: A Space Odyssey, by Arthur C. Clarke

- Physics Issue: Artificial gravity as depicted in sci-fi (in non-rotating reference frames without centripetal forces) has no basis in current physics.

10. Energy Weapons like Lightsabers:

- Example: Star Wars series

- Physics Issue: Concentrating light or energy into a fixed-length blade that stops at a certain point defies our understanding of how light and energy behave.
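The first item on the list can be made concrete with a little arithmetic. In special relativity, the kinetic energy of an object with rest mass m moving at speed v is (γ − 1)mc², where γ = 1/√(1 − v²/c²), and γ grows without bound as v approaches c. What follows is only a minimal Python sketch of that formula; the function names `lorentz_factor` and `kinetic_energy_joules` are illustrative choices, not taken from any source.

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact by SI definition)


def lorentz_factor(beta: float) -> float:
    """Return gamma = 1 / sqrt(1 - beta^2), where beta = v / c and 0 <= beta < 1."""
    if not 0.0 <= beta < 1.0:
        # At beta = 1 the formula divides by zero: no finite energy gets a
        # massive object to light speed.
        raise ValueError("beta must satisfy 0 <= beta < 1 for an object with mass")
    return 1.0 / math.sqrt(1.0 - beta * beta)


def kinetic_energy_joules(rest_mass_kg: float, beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) * m * c^2, in joules."""
    return (lorentz_factor(beta) - 1.0) * rest_mass_kg * C * C


if __name__ == "__main__":
    # Energy needed to bring a 1 kg object to ever larger fractions of c.
    for beta in (0.5, 0.9, 0.99, 0.9999):
        gamma = lorentz_factor(beta)
        energy = kinetic_energy_joules(1.0, beta)
        print(f"v = {beta}c  gamma = {gamma:.3f}  kinetic energy = {energy:.3e} J")
```

Running the script shows the energy climbing steeply with each step closer to c, and asking for `beta = 1.0` raises an error rather than returning a number, which is the sense in which physics rules out warp-speed travel for ordinary matter.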

One might defend taking these themes seriously by suggesting that while they contradict our current understanding of physics, they might still have scientific value because they capture the imagination and inspire scientific and technological research. Even so, these themes must, in the absence of further empirical evidence and theoretical insight, remain squarely on the side of science fiction.

Ingenuity and Intuition

Interestingly, whenever the original Star Trek treated the theme of artificial intelligence, the humans always outwitted the machines by looking to their ingenuity and intuition. For instance, in the episode “I, Mudd,” the humans confused the chief robot by using a variant of the liar paradox. Unlike dystopian visions in which machines best humanity, Star Trek always maintained a healthy humanism that refused to worship technology.

Even so, in the history of science fiction, artificial intelligence has typically had a different feel from physical implausibilities. Artificial intelligence does not violate any known physical law, so it doesn’t seem to belong on this list of implausibilities, especially now with advances in the field, such as Large Language Models.

What, then, are the fundamental limits and possibilities of artificial intelligence? Is there good reason to think that in the divide between science fiction and science, full-orbed artificial intelligence — AGI — will always remain on the science fiction side? In this series, I’m going to argue that there are indeed fundamental limits to artificial intelligence standing in the way of its matching and then exceeding human intelligence, and thus that AGI will never achieve full-fledged scientific status.

Next, “Artificial General Intelligence: The Poverty of the Stimulus.”

Editor’s note: This article appeared originally at BillDembski.com.