In 1956, a group of leading scientists working on what were then called “thinking machines” gathered at Dartmouth College for a summer workshop, which was later regarded as the first AI Summer of the field. Their objective was to spend two months finding a way to build a program able to form generalisations. In today’s lay terms, that means an AI with human-level intelligence, able to perform tasks ranging from problem-solving to natural language understanding. After the workshop, this objective became the golden dream of AI researchers around the world, and it remains one today. OpenAI captures it in its mission statement: “Our mission is to ensure that Artificial General Intelligence — AI systems that are generally smarter than humans — benefits all of humanity.”

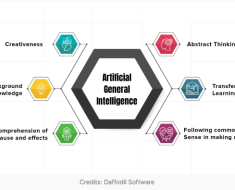

Nevertheless, because the technological and scientific capabilities of the 1950s were not sufficient to realise the golden dream of building an electronic brain, the scientists decided to split it into smaller problems, each focusing on a single capability of the brain, such as problem-solving, vision or language. AI systems were then designed to specialise in specific areas, and the Artificial General Intelligence dream was left unfulfilled. Almost 70 years have passed, and we would be living in a very different world had this dream come true.

The terms “General” and “Generalisation” are essential here. Conventional Machine Learning models are trained on a body of knowledge focused on a specific task. If the context drifts or the model is applied to a new domain of knowledge, it will not perform well and may require other techniques, such as Knowledge Transfer, to improve its performance. Even with Knowledge Transfer and similar solutions, however, the model has to be retrained to fit the new domain, so at any given time it can only work well in one domain or a group of similar domains. This is called “Specialisation”.

To achieve “Generalisation”, the model first needs to be able to switch contexts seamlessly without going through a retraining process. Modern Large Language Models (LLMs) can answer questions across different contexts without retraining, so on this first condition we can say that LLMs are generic.

However, to be considered generally intelligent and able to generalise, LLMs must also satisfy a second condition: the capability to perform various tasks. Unfortunately, when applied out of the box, LLMs are mainly suited to text generation and common Q&A. For example, they do not reliably handle numbers, calculus or algebra. In the example below, the answer from the LLM is quite close to the one from a basic calculator, but it is still incorrect. The LLM’s response is its guess at which (numerical) characters should follow the sentence “The result of multiplying 4.47723 by 7.5477 is…”, rather than the result of an actual calculation.
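For comparison, the multiplication quoted in that prompt can be done deterministically. The snippet below is a minimal sketch in Python using the standard-library `decimal` module. The helper name `multiply_exact` is an assumption made for illustration and is not part of any LLM API.

```python
from decimal import Decimal


def multiply_exact(a: str, b: str) -> Decimal:
    """Multiply two decimal numbers given as strings, avoiding float rounding."""
    return Decimal(a) * Decimal(b)


# The multiplication from the prompt: this is computed, not guessed.
product = multiply_exact("4.47723", "7.5477")
print(product)  # 33.792788871
```

A calculator like this is exactly the kind of specialised tool an LLM could hand the task to instead of predicting the digits itself.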

In short, LLMs are generic but not Artificial General Intelligence (AGI) just yet.

Although we are far from having an AGI, LLMs bring an exciting offer to the table. Because they are generic in nature and able to “understand” various contexts, an LLM can act as an agent that communicates with other specialised models. In the end, we may not have an AGI, but perhaps a system capable of doing a proportion of what an AGI can do. This sounds similar to ensemble modelling, but the difference is that an ensemble model answers only a single task by combining the answers of multiple sub-models. In contrast, the LLM agent answers various tasks using different sub-models, each specialised for a particular task.
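The contrast between the two patterns can be sketched in a few lines of Python. Everything here is hypothetical: the "models" are hard-coded stand-ins, and in a real system the task label passed to `agent_dispatch` would be chosen by the LLM rather than supplied by hand.

```python
from statistics import mean
from typing import Callable, Dict


# --- Ensemble: several sub-models answer the SAME task, outputs are combined ---
def price_model_a(features: dict) -> float:
    return 100.0  # stand-in for a trained model


def price_model_b(features: dict) -> float:
    return 110.0  # stand-in for a second trained model


def ensemble_predict(features: dict) -> float:
    """One task (price prediction), one combined answer."""
    return mean([price_model_a(features), price_model_b(features)])


# --- Agent: each sub-model handles a DIFFERENT task, the agent picks one ---
SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "sentiment": lambda text: "positive" if "good" in text.lower() else "negative",
    "word_count": lambda text: str(len(text.split())),
}


def agent_dispatch(task: str, payload: str) -> str:
    """Route the payload to the specialist registered for the given task."""
    if task not in SPECIALISTS:
        raise ValueError(f"no specialist registered for task {task!r}")
    return SPECIALISTS[task](payload)


print(ensemble_predict({}))                       # 105.0
print(agent_dispatch("word_count", "a good day"))  # 3
print(agent_dispatch("sentiment", "A good day"))   # positive
```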

The problem is, how does it know which sub-model it should call for a task? And if a series of tasks is implicitly defined in a question from the user, how does the agent arrange the order of the requests being sent to other specialist models?

Let’s try this example on ChatGPT.

In the example above, we intentionally shuffle the order of the tasks given to the model and challenge it to determine the required tasks and then find the correct execution sequence. With just a simple instruction, we can have the LLM agent work as an orchestrator that organises tasks and their execution order. The output from the agent can then be used to execute code programmatically (function calling), to call other Machine Learning models, or even to send requests to other agents. This orchestrator agent is sometimes called a “Switch Agent”, and a system in which a number of agents work together is called a multi-agent system (MAS).
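One way to act on such output programmatically is sketched below. It assumes the orchestrator has been instructed to return its plan as JSON with a `depends_on` list per task. That format, the task names and the handlers are all assumptions made for illustration, not what ChatGPT returns by default. The standard-library `graphlib.TopologicalSorter` (Python 3.9+) then derives a valid execution order from the shuffled plan.

```python
import json
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical plan as an orchestrator LLM might return it when asked for JSON.
# Tasks are deliberately listed out of order; "depends_on" carries the ordering.
PLAN_JSON = """
[
  {"task": "summarise",  "depends_on": ["translate"]},
  {"task": "send_email", "depends_on": ["summarise"]},
  {"task": "translate",  "depends_on": []}
]
"""

# Hypothetical specialists; real ones could be functions, ML models or other agents.
HANDLERS = {
    "translate": lambda text: f"translated({text})",
    "summarise": lambda text: f"summary({text})",
    "send_email": lambda text: f"sent({text})",
}


def execution_order(plan: list) -> list:
    """Return task names so that every task comes after its dependencies."""
    graph = {step["task"]: set(step["depends_on"]) for step in plan}
    return list(TopologicalSorter(graph).static_order())


def run_plan(plan_json: str, user_input: str) -> str:
    """Parse the plan, then feed each task's output into the next task."""
    plan = json.loads(plan_json)
    result = user_input
    for task in execution_order(plan):
        result = HANDLERS[task](result)
    return result


print(execution_order(json.loads(PLAN_JSON)))
# ['translate', 'summarise', 'send_email']
print(run_plan(PLAN_JSON, "hello"))
# sent(summary(translated(hello)))
```

Sorting the plan in code rather than trusting the order in which the model lists tasks also gives a cheap sanity check: `TopologicalSorter` raises `CycleError` if the returned dependencies are circular.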

When well designed, multi-agent systems can approximate much of what an AGI would do. Designing them well is crucial because, unlike the single-model approach, we are dealing with a distributed system in which each AI agent works independently and information (context) must be shared between agents. We must ensure that agents share and operate within the same context rather than giving responses outside its boundary. Although there are many other challenges industry experts are trying to overcome, such as security, risk and governance, LLMs set a pathway to generalisation and AGI.
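A minimal sketch of context sharing, assuming a single in-memory object passed to every agent, might look like the following. The `SharedContext` class and both agents are hypothetical, and a production system would more likely use a message bus or a shared store.

```python
from dataclasses import dataclass, field


@dataclass
class SharedContext:
    """State visible to every agent in the system (hypothetical design)."""
    topic: str
    facts: dict = field(default_factory=dict)
    history: list = field(default_factory=list)


def extractor_agent(ctx: SharedContext, text: str) -> None:
    # Writes what it found into the shared context instead of keeping it private.
    ctx.facts["order_id"] = text.split("#")[-1].strip()
    ctx.history.append("extractor")


def responder_agent(ctx: SharedContext) -> str:
    # Reads the same context and stays within it: no fact, no answer.
    if "order_id" not in ctx.facts:
        return "I need an order number first."
    ctx.history.append("responder")
    return f"[{ctx.topic}] Order {ctx.facts['order_id']} is being processed."


ctx = SharedContext(topic="support")
print(responder_agent(ctx))  # I need an order number first.
extractor_agent(ctx, "Where is order #A123")
print(responder_agent(ctx))  # [support] Order A123 is being processed.
```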

The pursuit of Artificial General Intelligence (AGI) remains one of the most ambitious and enduring goals in the field of Artificial Intelligence. While the technological and scientific capabilities of the 1950s were insufficient to achieve this dream, significant progress has been made through the development of specialised AI systems and modern large language models (LLMs). Although LLMs are not yet AGI, their ability to handle various contexts and coordinate with specialised models offers a promising pathway towards generalisation.

As we continue to advance AI technologies, the concept of multi-agent systems (MAS) emerges as a potential solution, leveraging the strengths of both specialised and generalised approaches. By orchestrating tasks across different models, MAS can approximate the capabilities of AGI, bringing us closer to realising the golden dream envisioned by early AI pioneers. Despite the challenges in design, security, and governance, the progress in LLMs and MAS signals a future where AI systems can benefit humanity in more profound and versatile ways.