Data Contamination Quiz: A Tool to Detect and Estimate Contamination in Large Language Models, by Shahriar Golchin and 1 other author

Download PDF

HTML (experimental)

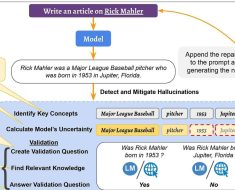

Abstract: We propose the Data Contamination Quiz (DCQ), a simple and effective approach to detect data contamination in large language models (LLMs) and estimate its extent. Specifically, we frame data contamination detection as a series of multiple-choice questions and devise a quiz format in which three perturbed versions of each dataset instance are created. These changes include only word-level perturbations. The perturbed versions, along with the original instance, form the options in the DCQ, with an extra option accommodating the possibility that none of the provided choices is correct. Because the only distinguishing signal among the choices is the exact wording relative to the original instance, an LLM tasked with identifying the original instance gravitates towards it if it was exposed to that instance during pre-training, a trait intrinsic to LLMs. Tested over several datasets with GPT-4/3.5, our findings, obtained without any access to the LLMs' pre-training data or internal parameters, suggest that DCQ uncovers greater contamination levels than existing detection methods and bypasses more safety filters, especially those set to avoid generating copyrighted content.
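The quiz format described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the names `build_quiz_item`, `format_prompt`, and `original_selection_rate`, the wording of the prompt and of the "none" option, and the use of a plain selection rate as a contamination signal are all assumptions made here for illustration. The three word-level perturbations are taken as given input, since the abstract does not say how they are produced.

```python
import random
from dataclasses import dataclass

# Hypothetical wording for the extra option; the abstract only says it exists.
NONE_OPTION = "None of the provided options."


@dataclass
class QuizItem:
    options: list[str]   # four shuffled candidates followed by the "none" option
    answer_index: int    # position of the original instance within `options`


def build_quiz_item(original: str, perturbed: list[str], rng: random.Random) -> QuizItem:
    """Combine the original instance with three perturbed versions into one question."""
    if len(perturbed) != 3:
        raise ValueError("expected exactly three perturbed versions")
    if original in perturbed:
        raise ValueError("a perturbed version must differ from the original")
    candidates = [original, *perturbed]
    rng.shuffle(candidates)  # randomise where the original appears
    return QuizItem(options=candidates + [NONE_OPTION],
                    answer_index=candidates.index(original))


def format_prompt(item: QuizItem) -> str:
    """Render the question as text; the instruction line is an assumed phrasing."""
    letters = "ABCDE"
    lines = ["Which option is the exact original instance from the dataset?"]
    lines += [f"{letters[i]}) {option}" for i, option in enumerate(item.options)]
    return "\n".join(lines)


def original_selection_rate(items: list[QuizItem], picks: list[int]) -> float:
    """Fraction of questions where the picked option index is the original instance."""
    if not items or len(items) != len(picks):
        raise ValueError("items and picks must be non-empty and of equal length")
    hits = sum(pick == item.answer_index for item, pick in zip(items, picks))
    return hits / len(items)
```

In use, each prompt would be sent to the model under test and its chosen option index recorded in `picks`. A selection rate well above what the perturbed options attract would then hint at prior exposure; how the paper turns such choices into a contamination estimate is not specified in the abstract.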

Submission history

From: Shahriar Golchin

[v1] Fri, 10 Nov 2023 18:48:58 UTC (7,777 KB)

[v2] Fri, 17 Nov 2023 00:20:44 UTC (7,777 KB)

[v3] Mon, 20 Nov 2023 20:43:10 UTC (7,704 KB)

[v4] Sat, 3 Feb 2024 18:31:30 UTC (61 KB)

[v5] Sat, 10 Feb 2024 22:28:34 UTC (61 KB)
