Artificial Intelligence (AI) keeps pushing the limits of what technology can do. As of 2024, knowing AI terms is really important, not just for tech fans but for everyone.

Are you confused by Artificial Intelligence (AI) terms? Maybe you’re interested but find it all too complicated. You’re not alone. Technology moves fast, and it’s normal to feel unsure about diving into AI.

But don’t worry. We’re here to help.

This complete Glossary Of AI Terms will guide you through all the technical words, making AI easy to understand. Whether you’re a writer stuck for ideas or someone who wants to make cool content easily, this glossary will show you how to use AI well.

So, before we kick off, let’s understand what AI really is.

What Is AI?

Artificial Intelligence, commonly referred to as AI, embodies the simulation of human intelligence processes by machines, especially computer systems. These processes include learning, reasoning, and self-correction.

At its core, AI is designed to replicate or even surpass human cognitive functions, employing algorithms and machine learning to interpret complex data, make decisions, and execute tasks with unprecedented speed and accuracy.

If you don’t get that, let me explain what AI is the way I would to a fifth grader.

So, you know how sometimes we use computers to help us do things, like play games or find information on the internet? Well, Artificial Intelligence, or AI for short, is like giving those computers the ability to think and learn on their own, kind of like how we do.

It’s like teaching a computer to be smart and make decisions, just like you learn new things in school and make choices every day. So, AI helps computers do cool stuff without needing us to tell them every single step.

It’s pretty neat, right?

4 Kinds Of AI

Reactive Machines: These are like the simplest kind of AI. They can only do one specific task, and they don’t remember anything from before. It’s like a robot that plays a game but doesn’t learn from its mistakes.

Limited Memory AI: These AIs can remember some things from the past to help them make decisions in the present. It’s like a robot that remembers where it’s been before so it can figure out where to go next.

Theory of Mind AI: This type of AI would understand emotions and thoughts, kind of like how we understand what others might be feeling. It’s like a robot that can tell if you’re happy or sad and acts accordingly. It is still a research goal rather than something that exists today.

Self-aware AI: This is the most advanced type of AI. It would not only understand emotions but also have its own thoughts and consciousness, like a real person. We’re not there yet with this kind of AI, but it’s something scientists are working on.

A-Z Glossary Of AI Terms

Here’s a complete A-to-Z glossary of AI terms as of 2024:

A – Artificial Intelligence:

The simulation of human intelligence processes by machines, especially computer systems. These processes include learning (the acquisition of information and rules for using the information), reasoning (using rules to reach approximate or definite conclusions), and self-correction.

B – Big Data:

Large volumes of structured and unstructured data that inundate a business on a day-to-day basis. What matters is what organizations do with the data: data analytics and AI are key to extracting insights from big data.

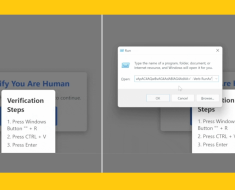

C – Chatbot:

A computer program designed to simulate conversation with human users, especially over the internet. Chatbots are often used in customer service or as virtual assistants.
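To make the idea concrete, here is a tiny rule-based chatbot sketched in Python. The keywords and answers are made up for illustration, and real chatbots use far richer language understanding than simple keyword matching.

```python
# Hypothetical keyword rules, invented for this example.
RULES = {
    "hours": "We are open 9am to 5pm, Monday to Friday.",
    "refund": "You can request a refund within 30 days of purchase.",
}
FALLBACK = "Sorry, I did not understand that. Could you rephrase?"


def reply(message: str) -> str:
    """Return the canned answer for the first keyword found in the message."""
    text = message.lower()
    for keyword, answer in RULES.items():
        if keyword in text:
            return answer
    return FALLBACK


print(reply("What are your opening hours?"))
```

Anything the rules do not cover falls through to the fallback message, which is often the point where a customer-service bot hands over to a person.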

D – Deep Learning:

A subset of machine learning where artificial neural networks, algorithms inspired by the human brain, learn from large amounts of data. Deep learning networks can automatically learn to represent patterns in the data with multiple levels of abstraction.

E – Expert System:

A computer system that emulates the decision-making ability of a human expert. It uses knowledge and inference procedures to solve problems that usually require human expertise.
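As a rough sketch of how "knowledge and inference procedures" can work together, the Python snippet below applies if-then rules repeatedly until nothing new can be concluded (a technique usually called forward chaining). The rules and fact names are invented for illustration and are not medical advice.

```python
# Each rule: (facts that must all be known, fact to conclude). Illustrative only.
RULES = [
    ({"fever", "cough"}, "flu_suspected"),
    ({"flu_suspected", "short_of_breath"}, "see_doctor"),
]


def infer(facts):
    """Forward chaining: keep applying rules until no new fact is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(infer({"fever", "cough", "short_of_breath"}))
```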

F – Fuzzy Logic:

A form of many-valued logic in which the truth values of variables may be any real number between 0 and 1, considered to be “fuzzy.” Fuzzy logic is used in control systems to handle incomplete and imprecise information.
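Here is a small Python sketch of that idea. Instead of saying a temperature is either "warm" or "not warm", it returns a degree of warmth between 0 and 1. The temperature breakpoints are assumptions picked for the example, and the AND/OR/NOT helpers use the common min/max/complement definitions.

```python
def warm(temp_c: float) -> float:
    """Triangular membership: 0 at 15 C and below, 1 at 25 C, 0 at 35 C and above."""
    if temp_c <= 15 or temp_c >= 35:
        return 0.0
    if temp_c <= 25:
        return (temp_c - 15) / 10
    return (35 - temp_c) / 10


def fuzzy_and(a: float, b: float) -> float:
    return min(a, b)


def fuzzy_or(a: float, b: float) -> float:
    return max(a, b)


def fuzzy_not(a: float) -> float:
    return 1.0 - a


print(warm(20))             # half warm
print(fuzzy_not(warm(25)))  # fully warm, so "not warm" is 0
```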

G – Genetic Algorithm:

A search heuristic that mimics the process of natural selection, often used to generate high-quality solutions to optimization and search problems. It is based on the principles of genetics and natural selection.
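A minimal sketch of the idea in Python follows. It evolves a list of 0s and 1s toward "all ones", a toy problem chosen only because the fitness is easy to read. The population size, mutation rate, and other settings are arbitrary assumptions, not recommended values.

```python
import random


def fitness(bits):
    """Toy goal: the more 1s, the fitter the individual."""
    return sum(bits)


def evolve(n_bits=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]   # selection: keep the fitter half
        children = [parents[0][:]]              # elitism: the best survives unchanged
        while len(children) < pop_size:
            mum, dad = rng.sample(parents, 2)
            cut = rng.randrange(1, n_bits)      # one-point crossover
            child = mum[:cut] + dad[cut:]
            # mutation: occasionally flip a bit
            child = [1 - b if rng.random() < mutation_rate else b for b in child]
            children.append(child)
        population = children
    return max(population, key=fitness)


best = evolve()
print(fitness(best), "ones out of", len(best))
```

Selection, crossover, and mutation are the three ingredients borrowed from natural selection. Keeping the best individual each round is an extra design choice so the best score never gets worse.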

H – Human-in-the-Loop (HITL):

An approach in machine learning and AI where human intervention or input is integrated into the system’s operation, typically to improve performance or to handle edge cases.
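One common way to picture this is a confidence check: the system handles the cases it is sure about and passes the rest to a person. The Python sketch below assumes a hypothetical threshold of 0.8 and a model that reports a confidence score with each prediction.

```python
CONFIDENCE_THRESHOLD = 0.8  # hypothetical cutoff; a real system would tune this


def route(prediction: str, confidence: float) -> str:
    """Accept confident predictions automatically; send the rest to a person."""
    if confidence >= CONFIDENCE_THRESHOLD:
        return f"auto-accepted: {prediction}"
    return f"sent to human review: {prediction}"


print(route("spam", 0.95))
print(route("spam", 0.40))
```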

I – Internet of Things (IoT):

The network of physical objects (“things”) embedded with sensors, software, and other technologies for the purpose of connecting and exchanging data with other devices and systems over the internet.

J – Jupyter Notebook:

An open-source web application that allows you to create and share documents that contain live code, equations, visualizations, and narrative text. It is widely used for data cleaning, transformation, numerical simulation, statistical modeling, data visualization, machine learning, and more.

K – Knowledge Graph:

A knowledge base that stores structured information to provide semantic meaning and context to data. It represents knowledge in a form that is readable both by humans and machines, making it an essential component of many AI applications.
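A simple way to picture a knowledge graph is as a list of (subject, relationship, object) facts. The Python sketch below stores a few such facts and looks them up. The relationship names and the `query` helper are made up for this example, and real knowledge graphs use dedicated storage and query languages.

```python
# Each fact is a (subject, predicate, object) triple.
TRIPLES = [
    ("Ada Lovelace", "born_in", "London"),
    ("London", "capital_of", "United Kingdom"),
    ("Ada Lovelace", "field", "Mathematics"),
]


def query(subject=None, predicate=None, obj=None):
    """Return every triple matching the given parts; None acts as a wildcard."""
    return [
        (s, p, o)
        for s, p, o in TRIPLES
        if subject in (None, s) and predicate in (None, p) and obj in (None, o)
    ]


print(query(subject="Ada Lovelace"))
```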

L – Learning (Machine Learning):

A subset of artificial intelligence that enables systems to learn and improve from experience without being explicitly programmed. Machine learning algorithms build mathematical models from sample data, known as “training data,” in order to make predictions or decisions.

M – Machine Learning:

See the definition under L above. In practice, machine learning models identify patterns in data and make predictions, and they power applications such as recommendation systems, image recognition, and autonomous vehicles.

N – Neural Network:

A computational model inspired by the structure and function of the human brain’s neural networks. Neural networks are composed of interconnected nodes (neurons) that process and transmit information. They are used in various AI applications, including image and speech recognition, natural language processing, and machine translation.
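To show what "interconnected nodes" means in practice, here is a very small network in Python with two hidden neurons and one output neuron. The weights are set by hand for illustration so that the network computes XOR (output 1 when exactly one input is 1). A real network would learn its weights from data.

```python
def step(x: float) -> int:
    """Simplest activation: fire (1) if the input is positive, else stay off (0)."""
    return 1 if x > 0 else 0


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)


def xor_network(x1: int, x2: int) -> int:
    h_or = neuron([x1, x2], [1, 1], -0.5)    # fires if at least one input is 1
    h_and = neuron([x1, x2], [1, 1], -1.5)   # fires only if both inputs are 1
    return neuron([h_or, h_and], [1, -1], -0.5)  # "or, but not and"


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```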

O – Ontology:

A formal representation of knowledge within a domain, typically describing the concepts, entities, relationships, and rules relevant to that domain. Ontologies are used in AI to facilitate knowledge sharing and reasoning.

P – Predictive Analytics:

The practice of extracting information from existing data sets to determine patterns and predict future outcomes and trends. Predictive analytics uses techniques from statistics, data mining, machine learning, and AI to analyze current data and make predictions about future events.
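As a small illustration, the Python sketch below fits a straight line to past values and extends it one step into the future. The sales figures are invented, and a straight-line fit is only one of many possible techniques.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


months = [1, 2, 3, 4]        # made-up history
sales = [10, 12, 14, 16]
slope, intercept = fit_line(months, sales)
forecast = slope * 5 + intercept   # predict month 5
print(forecast)
```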

Q – Quantum Computing:

A type of computing that takes advantage of the strange ability of subatomic particles to exist in more than one state at any time. Quantum computers use quantum bits, or qubits, which can represent and store information in multiple states simultaneously, enabling them to perform certain calculations much faster than classical computers.

R – Reinforcement Learning:

A type of machine learning where an agent learns to make decisions by interacting with an environment. The agent receives feedback in the form of rewards or punishments for its actions and adjusts its strategy to maximize cumulative reward over time.
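The sketch below shows this loop in Python using Q-learning, one well-known reinforcement learning method. The environment is a made-up corridor of five cells where the agent starts at the left end and earns a reward of 1 for reaching the right end. The learning rate, discount, and exploration settings are arbitrary assumptions.

```python
import random

N_STATES, GOAL = 5, 4      # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)         # action 0 steps left, action 1 steps right


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # estimated value of each action per cell
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            # Explore at random sometimes (and on ties); otherwise use the best-known action.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + ACTIONS[action], 0), GOAL)
            reward = 1.0 if next_state == GOAL else 0.0
            # Q-learning update: move the estimate toward reward + discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = train()
policy = ["left" if left > right else "right" for left, right in q_table[:GOAL]]
print(policy)
```

After training, the agent prefers stepping right in every cell, which is the strategy that collects the reward fastest.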

S – Supervised Learning:

A type of machine learning where the algorithm learns from labeled data, meaning each example in the training set is paired with a corresponding label or output. The algorithm learns to map inputs to outputs based on the labeled examples provided during training.
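Here is a minimal Python sketch using one of the simplest supervised methods, the nearest-neighbor classifier: to label a new example, find the most similar labeled example and copy its label. The measurements and labels are made up for illustration.

```python
# Labeled training data: (height_cm, weight_kg) paired with a label. Values are invented.
TRAIN = [
    ((20, 4), "cat"),
    ((25, 5), "cat"),
    ((60, 30), "dog"),
    ((70, 35), "dog"),
]


def predict(features):
    """1-nearest-neighbor: return the label of the closest training example."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, label = min(TRAIN, key=lambda example: squared_distance(example[0], features))
    return label


print(predict((22, 4)))
print(predict((65, 32)))
```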

T – Transfer Learning:

A machine learning technique where a model trained on one task is reused or adapted as the starting point for a model on a second task. Transfer learning can significantly reduce the amount of labeled data and training time required to develop models for new tasks.

U – Unsupervised Learning:

A type of machine learning where the algorithm learns patterns from unlabeled data without any guidance or feedback. Unsupervised learning algorithms explore the structure of the data to extract meaningful information or identify hidden patterns.
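For a feel of how this works, the Python sketch below groups plain numbers into two clusters with k-means, a classic unsupervised method. No labels are given; the algorithm only looks at which numbers sit close together. Starting the two centers at the smallest and largest value is a simplification chosen to keep the example predictable.

```python
def kmeans_1d(points, iterations=10):
    """Split numbers into two clusters by repeatedly re-centering on each group's average."""
    centers = [min(points), max(points)]   # simple deterministic start (an assumption)
    clusters = [[], []]
    for _ in range(iterations):
        clusters = [[], []]
        for p in points:
            nearest = 0 if abs(p - centers[0]) <= abs(p - centers[1]) else 1
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster; keep it if the cluster is empty.
        centers = [
            sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)
        ]
    return centers, clusters


centers, clusters = kmeans_1d([1, 2, 3, 10, 11, 12])
print(centers)
print(clusters)
```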

V – Virtual Reality (VR):

A computer-generated simulation of an interactive 3D environment that users can explore and interact with. VR technology often employs headsets or goggles to immerse users in virtual worlds, offering a highly immersive and interactive experience.

W – Weak AI:

Artificial intelligence that is focused on performing a narrow task or a specific set of tasks, as opposed to strong AI, which aims to exhibit general intelligence comparable to human intelligence across a wide range of tasks.

X – XAI (Explainable AI):

A field of artificial intelligence research that focuses on developing techniques and methods to make AI systems explainable and transparent to humans. XAI aims to enhance trust, accountability, and interpretability in AI systems by enabling users to understand the rationale behind AI-generated decisions.

Y – YAML (YAML Ain’t Markup Language):

A human-readable data serialization standard that is commonly used for configuration files and data exchange in applications. YAML is often used in AI projects for specifying model configurations, hyperparameters, and experiment settings.

Z – Zero-Shot Learning:

A type of machine learning where a model recognizes or classifies objects or concepts that it never saw during training, without any labeled examples of them. Zero-shot learning typically relies on transfer learning and semantic embeddings to generalize knowledge across different tasks or domains.
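The Python sketch below illustrates the idea with hand-written attribute vectors standing in for semantic embeddings. We assume some model has already turned an image into attribute scores. The classifier then picks the class whose description matches best, even for "zebra", which is assumed to have no training examples. All names and numbers here are invented for the example.

```python
import math

# Attribute order: [has_stripes, has_hooves, lives_in_water]. Hand-written descriptions.
CLASS_DESCRIPTIONS = {
    "horse": [0, 1, 0],
    "zebra": [1, 1, 0],      # assumed to have no training examples at all
    "dolphin": [0, 0, 1],
}


def cosine(a, b):
    """Cosine similarity: 1 means same direction, 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def classify(attributes):
    """Pick the class whose description is most similar to the observed attributes."""
    return max(
        CLASS_DESCRIPTIONS,
        key=lambda name: cosine(attributes, CLASS_DESCRIPTIONS[name]),
    )


print(classify([0.9, 0.8, 0.1]))   # stripes and hooves, not aquatic
```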

This glossary covers key artificial intelligence terms and related concepts as of 2024.