Last November, the Autodesk crowd returned to familiar ground: the Venetian in Las Vegas for the annual Autodesk University. In the convention center, a few corridors away from the slot machines, roulette wheels and blackjack tables, Autodesk CEO Andrew Anagnost decided it was time to show his hand.

“For better or worse, AI [artificial intelligence] has arrived, with all the looming implications for all of us,” he said. “We’ve been working to get you excited about AI for years. But now we’re moving from talking about it to actually changing your businesses.”

Autodesk’s big bet comes in the form of Autodesk AI, based in part on technology from BlankAI, a company Autodesk acquired. The implementation will lead to “3D models that can be rapidly created, explored and edited in real time using semantic controls and natural language without advanced technical skills,” the company announced.

More details came from Jeff Kinder, executive vice president of product development and manufacturing. “Debuting in our automotive design studio next year, BlankAI will allow you to pull from your historical library of design work, then use it to rapidly generate new concepts that build on your existing design style,” he said. This is where general AI ends and personalized machine learning begins.

Beyond Pretrained Algorithms

The ability to use natural language to generate 3D assets, as described by Autodesk in its announcement, would be a big achievement in itself. But the next step is more tantalizing. The initial AI will no doubt be trained on publicly available design data and Autodesk data. But the company also revealed that it plans to give users a way to further refine the algorithms using their own proprietary data.

“At some point, as some of these capabilities get to a level of automation, we may actually license a model to a particular customer, and they can train and improve that model on top of their capabilities. That’s the business model that Microsoft is using right now for some of their tools. I think it’s a very robust model,” said Anagnost during an industry press Q&A.

“Although initially debuting in Autodesk’s automotive portfolio, Autodesk ultimately aims to include these capabilities as part of its Fusion industry cloud,” said Stephen Hooper, Autodesk vice president of design and manufacturing. “If the algorithm is trained on your historical data, it understands your design cues, styling cues and brand identity,” making it much more helpful in generating your preferred designs. While it’s clearly on Autodesk’s roadmap, the exact mechanism remains unclear. “We’re still evaluating how and when we might provide a private model,” Hooper said.
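In machine learning terms, what Anagnost and Hooper describe resembles fine-tuning: a customer continues training a vendor’s pretrained model on its own data instead of starting from scratch. The minimal Python sketch below illustrates the idea with a deliberately tiny one-variable linear model. The model, the data and the function names (`train`, `mse`) are hypothetical stand-ins and say nothing about how Autodesk’s models actually work.

```python
# Minimal sketch of "pretrain, then fine-tune on proprietary data".
# Hypothetical throughout: a one-variable linear model y = w*x + b stands in for a
# real design-generation model, and both datasets below are made up.

def train(params, data, lr=0.5, epochs=500):
    """Run gradient descent on mean squared error, starting from params = (w, b)."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def mse(params, data):
    """Mean squared error of the model on a dataset."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

xs = [i / 10 for i in range(10)]
# Hypothetical "public" data the vendor trains on: a generic trend.
public_data = [(x, 2.0 * x + 1.0) for x in xs]
# Hypothetical "proprietary" data: one company's designs follow a shifted trend.
private_data = [(x, 2.0 * x + 3.0) for x in xs]

# Vendor side: train the base model from scratch on public data.
base_model = train((0.0, 0.0), public_data)
# Customer side: continue training from the base model's weights, on-site,
# so the proprietary data never has to leave the company.
tuned_model = train(base_model, private_data, epochs=200)

print(f"base model error on private data:  {mse(base_model, private_data):.4f}")
print(f"tuned model error on private data: {mse(tuned_model, private_data):.4f}")
```

The base model captures the generic trend but misses the company’s offset; after fine-tuning, it fits the proprietary data closely. In principle, starting from the vendor’s weights means the customer’s data only has to teach the difference between its own style and the generic one.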

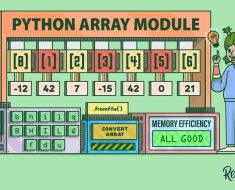

Printed Circuit Brain

Last year, at PCB West in Santa Clara, CA, Kyle Miller, research and product manager at Zuken, unveiled a new offering for the company’s CR-8000 printed circuit board design software: the Autonomous Intelligent Place and Route (AIPR). Miller pointed out that AI-optimized layouts tend to be cleaner and simpler, with fewer clashes, because the software can process complex design hierarchies and signal clusters much better than humans can. “What takes Autorouter [another product] a set-up time of 30 minutes and auto-routing time of 15 minutes, might just take AIPR 30 seconds,” he said.
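Taking Miller’s figures at face value, the comparison works out as follows. The short Python calculation below simply converts his quoted timings to seconds; these are his illustrative numbers, not a measured benchmark, and the constant names are illustrative.

```python
# Back-of-the-envelope comparison using the timings Miller quoted.
# Illustrative figures from the interview, not a benchmark.
AUTOROUTER_SETUP_MIN = 30
AUTOROUTER_ROUTING_MIN = 15
AIPR_SECONDS = 30

# Total Autorouter time (setup plus routing), converted to seconds.
autorouter_seconds = (AUTOROUTER_SETUP_MIN + AUTOROUTER_ROUTING_MIN) * 60
speedup = autorouter_seconds / AIPR_SECONDS

print(f"Autorouter: {autorouter_seconds} s, AIPR: {AIPR_SECONDS} s, speedup: {speedup:.0f}x")
```

If the figures hold, AIPR would finish in about 1/90 of the time the Autorouter needs for setup and routing combined (2,700 seconds versus 30).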

AIPR is just a launchpad for the PCB software maker’s long-term goal. The next step, according to Miller, is to apply machine learning to all the PCB designs available in Zuken’s library. The outcome is what the company calls the Basic Brain, which “enhances the user experience by routing the design utilizing the Smart Autorouter based on learned approaches and strategies.”

After that, Zuken plans to offer a tool that its customers can use to apply machine learning to their own library. The company calls it the Dynamic Brain, which “learns from your PCB designers, utilizing past design examples and integrating them into AI algorithms.” Ultimately, the goal is the Autonomous Brain, “an AI-driven powerhouse in continuous learning mode, pushing the boundaries of creativity.”

Zuken’s roadmap spans multiple years, so the Dynamic Brain is not expected to show up in the portfolio soon. “Our first goal is to make the base product—the Basic Brain—as capable as possible before delivering the Dynamic Brain,” Miller said.

The plan to let customers use the AI tool to ingest proprietary data also raises questions about security. “It has been very important to Zuken from the beginning of this process that we have no internal access to any customer data … All training of the Dynamic Brain will be done on the customer site. We have no plan to use cloud-based services for this (unless specifically agreed with a customer). No data is shared with Zuken servers,” said Miller.

The training will be done via the Zuken CR-8000 platform, Miller explained. “This communicates on the local network only (or wider network if the customer has a secure multisite network) with the AIPR server, which handles the training,” he added.

Ansys SimAI

On the first day he took the job as Ansys’s CTO, Prith Banerjee decided he was going to focus on AI-powered simulation. “In 2018, we started investing in it, specifically to explore opportunities in two areas: Can AI or ML make simulation faster? Can it make simulation easier to use?” he said.

The investment appears to be bearing fruit. Last October, Ansys launched Ansys SimAI, described as “a cloud-enabled, physics-neutral platform that will empower users across industries to greatly accelerate innovation and reduce time to market.”

Once trained with customer data, Ansys SimAI predicts simulation results that align with Ansys Fluent calculations, yet it takes a mere 5 minutes. Image courtesy of Ansys.

The software is a departure from typical simulation products. It’s better to think of it as a way to use AI to train the software to develop good finite element analysis (FEA) instincts.

“SimAI is a giant leap forward compared to our previous technology, in that the users do not need to parametrize the geometry,” said Banerjee.

You feed the software a set of simulation results, then let the AI learn the correlations between the topology and the outcomes. “The users can take their simulation results, upload them to SimAI and train the model. After uploading the data, the users select which variables they are interested in and how long they are willing to wait for the training to complete. Once the training is done, they can upload new geometries and make predictions,” explained Banerjee.
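Ansys has not detailed SimAI’s internals here, so the following is only a generic illustration of the workflow Banerjee outlines: upload past cases, select the output variable of interest, then predict results for a new shape. It deliberately swaps in a much simpler technique, a k-nearest-neighbor surrogate that compares raw point-cloud geometries using a Chamfer distance, to show how prediction can work without parametrizing the geometry. Every name, shape and value below is hypothetical, and the training-time budget Banerjee mentions is not modeled.

```python
import math

Point = tuple[float, float]
Geometry = list[Point]

def chamfer(a: Geometry, b: Geometry) -> float:
    """Symmetric Chamfer distance: compares raw point clouds, no shape parameters needed."""
    def one_way(p: Geometry, q: Geometry) -> float:
        return sum(min(math.dist(x, y) for y in q) for x in p) / len(p)
    return one_way(a, b) + one_way(b, a)

def train(cases: list[tuple[Geometry, dict[str, float]]], variable: str):
    """'Upload' past simulation cases, keeping only the user-selected output variable."""
    return [(geometry, results[variable]) for geometry, results in cases]

def predict(model, geometry: Geometry, k: int = 2) -> float:
    """Inverse-distance-weighted average of the k most similar known geometries."""
    nearest = sorted((chamfer(g, geometry), value) for g, value in model)[:k]
    if nearest[0][0] == 0.0:
        return nearest[0][1]  # identical geometry already simulated: reuse its result
    weights = [(1.0 / d, value) for d, value in nearest]
    return sum(w * v for w, v in weights) / sum(w for w, _ in weights)

def box(height: float) -> Geometry:
    """Hypothetical 2D profile sampled as four corner points."""
    return [(0.0, 0.0), (1.0, 0.0), (1.0, height), (0.0, height)]

# Hypothetical past results: each case pairs a geometry with several output variables.
cases = [(box(h), {"drag": 0.5 * h, "lift": 0.1 * h}) for h in (1.0, 2.0, 3.0)]
model = train(cases, "drag")
print(predict(model, box(1.5)))
```

Here, a box of height 1.5 is equally close to the known boxes of heights 1 and 2, so the sketch blends their drag values and prints 0.75; a geometry already in the training set simply returns its stored result. A production surrogate would learn far richer correlations, but the upload, select, train and predict loop has the same shape.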

This is similar to how, over time, a veteran FEA user learns to anticipate certain stress distributions, deformation and airflow patterns based on the design’s topology. The difference is that with high-performance computing (HPC), the software can learn in a few hours what would take a human months or years. But HPC brings the need to rely on the cloud: in this case, Ansys Cloud, which runs on Amazon Web Services (AWS) infrastructure.

“AWS infrastructure provides state-of-the-art security and is used by many security-sensitive customers spanning defense, healthcare and financial organizations,” Banerjee said. In 2018, Ansys launched Ansys Discovery, a fast simulation tool aimed at designers. Since then, the cloud has become an integral part of the company’s strategy and offerings.

A New Playground

The personalization of machine learning is “a trend that we’ve been seeing in the last five years,” said Johanna Pingel, AI product marketing manager at MathWorks. “Essentially, you start with out-of-the-box algorithms, but then you want to incorporate your own engineering data,” she added.

MathWorks offers MATLAB and Simulink. “MATLAB apps let you see how different algorithms work with your data. Iterate until you’ve got the results you want, then automatically generate a MATLAB program to reproduce or automate your work,” according to the company.

Once you have an executable program, you can deploy it in Simulink to build system models and conduct what-if analyses.

Suppose you’re an autonomous vehicle developer. It’s relatively easy to develop or find an out-of-the-box lane-detection algorithm, Pingel pointed out.

“But that’s just the starting point. You may want to refine it to work for nighttime, or for the UK, where the drivers drive on the left.” Without such refinement options, the algorithm’s scope will likely be too broad to be effective for your enterprise’s specific needs, she said.

MATLAB is ideal for such training, according to Pingel. She explained, “You can import your data through a [graphical user interface (GUI)], and train a model through a GUI. You use a low-code, app-based workflow for training models.”

MathWorks has also taken note of its customers’ growing interest in ChatGPT-like interactions. In response, in November 2023, the company launched the MATLAB AI Chat Playground, which is built on ChatGPT. It appears as a chat panel in MATLAB, allowing users to query the software in natural language. However, the tool is experimental and still evolving, Pingel cautioned.

Although natural language input might make engineering tools more accessible, Pingel pointed out that human domain knowledge and expertise remain essential for crafting the input and assessing the output.

“Engineers must use their inherent knowledge of the problem when they’re talking to the software about the kind of structural capabilities they want. They have to bring that to the table when they’re using generative AI,” she said.

The Dilemma with Data

Former SolidWorks CEO and Onshape cofounder John McEleney warned, “I’m not dismissing the technology, but there’s a lot of AI washing happening. Everyone wants to jump on the AI bandwagon with AI this, AI that.”

For AI training to be reliable, the sample data pool has to be large enough to represent a rich variety of scenarios.

“The question is, do you have enough models to train your AI engine?” he asked. “If you’re a large automotive or aerospace company, sure. But for most midsize manufacturers, maybe not. If your training is based on 50 to 100 models, are you reaching a critical mass?”
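One rough way to frame McEleney’s question uses the simplest statistical case: when a quantity is estimated from n independent samples, its standard error shrinks in proportion to 1/√n. Design models are neither that simple nor fully independent, so the Python sketch below is a heuristic under that textbook assumption, not a sizing rule for AI training. The function name is illustrative.

```python
import math

def relative_standard_error(n: int) -> float:
    """Standard error of a sample mean, in units of the population's standard deviation.

    Textbook assumption: n independent, identically distributed samples.
    """
    return 1.0 / math.sqrt(n)

# Compare a midsize manufacturer's library with progressively larger ones.
for n in (50, 100, 1_000, 10_000):
    print(f"{n:>6} models -> relative uncertainty {relative_standard_error(n):.3f}")
```

Under this assumption, going from 100 to 10,000 models cuts the uncertainty tenfold, while doubling from 50 to 100 models improves it only by a factor of about 1.4.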

McEleney revealed Onshape is currently exploring some internal models to gain insights. “It would be logical and reasonable to assume that design assistant-type suggestions will be how we would introduce these features,” he said.

Noting that speaking to AI assistants such as Siri on smartphones has become the norm, McEleney said, “You can imagine being able to tell your software, ‘Go do this,’ and the system being able to find samples from your previous work to execute it for you.”

He also expects users to remain highly protective of their proprietary data, even if they want to benefit from AI training.

“So I can see that, at least in the beginning, people will want to do that type of training internally,” he added.

Most people would like access to others’ data, because a larger sample pool makes the AI algorithm more reliable. But the same people are also highly protective of their proprietary data, because it contains IP that gives them a competitive advantage. That’s the dilemma of the AI era.