Princeton researchers have created an artificial intelligence (AI) tool to predict the behavior of crystalline materials, a key step in advancing technologies such as batteries and semiconductors. Although computer simulations are commonly used in crystal design, the new method relies on a large language model, similar to those that power text generators like ChatGPT.

By synthesizing information from text descriptions, which include details such as the lengths and angles of bonds between atoms and measurements of electronic and optical properties, the new method can predict properties of new materials more accurately and thoroughly than existing simulations. It could also speed up the process of designing and testing new technologies.

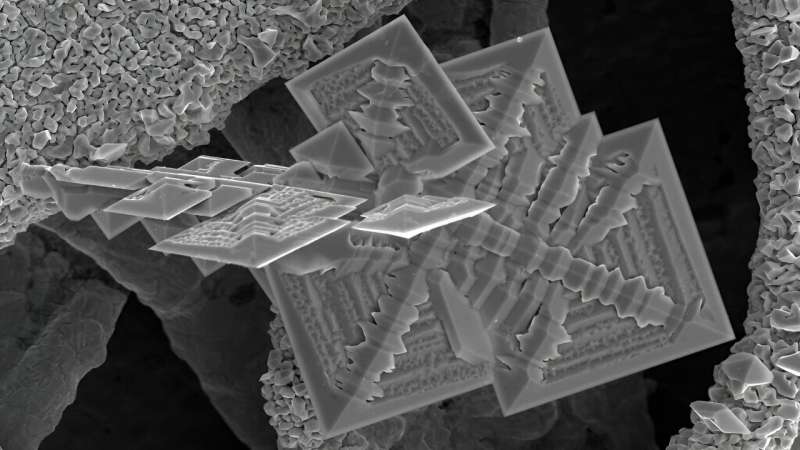

The researchers developed a text benchmark consisting of the descriptions of more than 140,000 crystals from the Materials Project, and then used it to train an adapted version of a large language model called T5, originally created by Google Research. They tested the tool’s ability to predict the properties of previously studied crystal structures, from ordinary table salt to silicon semiconductors. Now that they’ve demonstrated its predictive power, they are working to apply the tool to the design of new crystal materials.
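To make the idea of a text benchmark concrete, here is a minimal Python sketch of how a crystal's structural details might be rendered as a plain-English description and paired with a property to predict. It is an illustration under stated assumptions, not the researchers' pipeline: the `Bond` and `Crystal` records, the `describe` and `to_training_pair` helpers, the sentence wording, and the numbers for table salt are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Bond:
    """One bond between two atoms (illustrative fields only)."""
    atom_a: str
    atom_b: str
    length_angstrom: float


@dataclass
class Crystal:
    """Hypothetical minimal crystal record, not the Materials Project schema."""
    formula: str
    crystal_system: str
    bonds: List[Bond]
    band_gap_ev: float  # example target property a model would learn to predict


def describe(crystal: Crystal) -> str:
    """Render structural facts as plain English for a language model to read."""
    sentences = [
        f"{crystal.formula} crystallizes in the {crystal.crystal_system} crystal system."
    ]
    for bond in crystal.bonds:
        sentences.append(
            f"The {bond.atom_a}-{bond.atom_b} bond length is "
            f"{bond.length_angstrom:.2f} angstroms."
        )
    return " ".join(sentences)


def to_training_pair(crystal: Crystal) -> Tuple[str, float]:
    """Pair the description (model input) with the numeric target (label)."""
    return describe(crystal), crystal.band_gap_ev


# Placeholder values for illustration, not taken from the benchmark.
salt = Crystal("NaCl", "cubic", [Bond("Na", "Cl", 2.82)], band_gap_ev=5.0)
text, target = to_training_pair(salt)
print(text)
print(target)
```

A collection of such text and target pairs is the kind of input on which a language model could be fine-tuned for property prediction; the fine-tuning step itself is omitted here.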

The method, presented Nov. 29, 2023, at the Materials Research Society’s Fall Meeting in Boston, represents a new benchmark that could help accelerate materials discovery for a wide range of applications, according to senior study author Adji Bousso Dieng, an assistant professor of computer science at Princeton.

The paper outlining the method, “LLM-Prop: Predicting Physical And Electronic Properties Of Crystalline Solids From Their Text Descriptions,” is now posted to the arXiv preprint server.

Existing AI-based tools for crystal property prediction rely on methods called graph neural networks, but these have limited computational power and can’t adequately capture the nuances of the geometry and lengths of bonds between atoms in a crystal, and the electronic and optical properties that result from these structures. Dieng’s team is the first to tackle the problem using large language models, she said.

“We have made tremendous advances in computer vision and natural language,” said Dieng, “but we are not very advanced yet when it comes to dealing with graphs [in AI]. So, I wanted to move from the graph to actually translating it to a domain where we have great tools already. If we have text, then we can leverage all these powerful [large language models] on that text.”

The language model-based approach “gives us a whole new way to look at the problem” of designing materials, said study co-author Craig Arnold, Princeton’s Susan Dod Brown Professor of Mechanical and Aerospace Engineering and vice dean for innovation. “It’s really about, how do I access all of this knowledge that humanity has developed, and how do I process that knowledge to move forward? It’s characteristically different than our current approaches, and I think that’s what gives it a lot of power.”

More information:

Andre Niyongabo Rubungo et al., “LLM-Prop: Predicting Physical And Electronic Properties Of Crystalline Solids From Their Text Descriptions,” arXiv (2023). DOI: 10.48550/arxiv.2310.14029

Citation: Researchers harness large language models to accelerate materials discovery (2024, January 29), retrieved 29 January 2024 from https://techxplore.com/news/2024-01-harness-large-language-materials-discovery.html